Supercharging the ASUS Xtion PRO Live and other OpenNI-compliant depth cameras

ASUS introduced the Xtion PRO Live in 2012 as an input device for motion-controlled games, natural user interfaces (NUIs), and computer vision research. It is one of six similar cameras based on sensors designed by PrimeSense, an Israeli company that Apple acquired and shut down in 2013. For a brief comparison between the Xtion PRO Live and the other devices that use PrimeSense sensors, see the following table:

| Name | Price and Availability | Highest-Resolution NIR Mode | Highest-Resolution Color Mode | Highest-Resolution Depth Mode | Depth Range |
|---|---|---|---|---|---|
| Microsoft Kinect for Xbox 360 | $135 (available) | 640x480 @ 30 FPS? | 640x480 @ 30 FPS | 640x480 @ 30 FPS | 0.8m to 3.5m? |
| ASUS Xtion PRO | $200 (discontinued) | 1280x1024 @ 60 FPS? | None | 640x480 @ 30 FPS | 0.8m to 3.5m |
| ASUS Xtion PRO Live | $230 (available) | 1280x1024 @ 60 FPS | 1280x1024 @ 60 FPS | 640x480 @ 30 FPS | 0.8m to 3.5m |
| PrimeSense Carmine 1.08 | $300 (discontinued) | 1280x960 @ 60 FPS? | 1280x960 @ 60 FPS | 640x480 @ 30 FPS | 0.8m to 3.5m |
| PrimeSense Carmine 1.09 | $325 (discontinued) | 1280x960 @ 60 FPS? | 1280x960 @ 60 FPS | 640x480 @ 30 FPS | 0.35m to 1.4m |
| Structure Sensor | $380 (available) | 640x480 @ 30 FPS? | None | 640x480 @ 30 FPS | 0.4m to 3.5m |

All of these devices include an NIR camera and a source of NIR illumination. The light source projects a pattern of NIR dots, which might be detectable anywhere from 0.35m to 3.5m away, depending on the model (see the Depth Range column). Most of the devices also include an RGB color camera. Based on the appearance of the active NIR image (of the dots), the device can estimate distances and produce a so-called depth map, containing distance estimates for 640x480 points. The color camera is not needed for this estimate: the Xtion PRO and the Structure Sensor have no color mode, yet they still provide depth. Thus, the device has up to three modes: NIR (a camera image), color (a camera image), and depth (a processed image).
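The Depth Range column is often the deciding constraint when picking one of these models for a given working distance. As a minimal sketch, the table's ranges can be encoded as data and queried. The `DeviceSpec` struct and the `devicesCoveringDistance` function are hypothetical names introduced here for illustration; the values are copied from the table, including the Kinect range that the table marks with a question mark.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical record holding one row of the table's Depth Range column.
struct DeviceSpec {
    std::string name;
    double minDepthMeters;
    double maxDepthMeters;
};

// Nominal depth ranges copied from the comparison table. The Kinect
// range is marked with "?" in the table, so treat it as uncertain.
const std::vector<DeviceSpec> kDeviceSpecs = {
    {"Microsoft Kinect for Xbox 360", 0.8, 3.5},
    {"ASUS Xtion PRO", 0.8, 3.5},
    {"ASUS Xtion PRO Live", 0.8, 3.5},
    {"PrimeSense Carmine 1.08", 0.8, 3.5},
    {"PrimeSense Carmine 1.09", 0.35, 1.4},
    {"Structure Sensor", 0.4, 3.5},
};

// Hypothetical helper: returns the names of the devices whose nominal
// depth range includes the given distance, in table order.
std::vector<std::string> devicesCoveringDistance(double distanceMeters) {
    assert(distanceMeters >= 0.0);
    std::vector<std::string> names;
    for (const DeviceSpec &spec : kDeviceSpecs) {
        if (distanceMeters >= spec.minDepthMeters &&
                distanceMeters <= spec.maxDepthMeters) {
            names.push_back(spec.name);
        }
    }
    return names;
}
```

For example, `devicesCoveringDistance(0.5)` returns only the PrimeSense Carmine 1.09 and the Structure Sensor, the two models that the table rates for close range.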

Note

For more information on the types of active illumination or structured light that are useful in depth imaging, see the following paper:

David Fofi, Tadeusz Sliwa, Yvon Voisin, "A comparative survey on invisible structured light", SPIE Electronic Imaging - Machine Vision Applications in Industrial Inspection XII, San José, USA, pp. 90-97, January, 2004.

The paper is available online at http://www.le2i.cnrs.fr/IMG/publications/fofi04a.pdf.

The Xtion, Carmine, and Structure Sensor devices as well as certain versions of the Kinect are compatible with open source SDKs called OpenNI and OpenNI2. Both OpenNI and OpenNI2 are available under the Apache license. On Windows, OpenNI2 comes with support for many cameras. However, on Linux and Mac, support for the Xtion, Carmine, and Structure Sensor devices is provided through an extra module called PrimeSense Sensor, which is also open source under the Apache license. The Sensor module and OpenNI2 have separate installation procedures and the Sensor module must be installed first. Obtain the Sensor module from one of the following URLs, depending on your operating system:

After downloading this archive, decompress it and run install.sh (inside the decompressed folder).

Note

For Kinect compatibility, instead try the SensorKinect fork of the Sensor module. Downloads for SensorKinect are available at https://github.com/avin2/SensorKinect/downloads. SensorKinect only supports Kinect for Xbox 360 and it does not support model 1473. (The model number is printed on the bottom of the device.) Moreover, SensorKinect is only compatible with an old development build of OpenNI (and not OpenNI2). For download links to the old build of OpenNI, refer to http://nummist.com/opencv/.

Now, on any operating system, we need to build the latest development version of OpenNI2 from source. (Older, stable versions do not work with the Xtion PRO Live, at least on some systems.) The source code can be downloaded as a ZIP archive from https://github.com/occipital/OpenNI2/archive/develop.zip or it can be cloned as a Git repository using the following command:

$ git clone -b develop https://github.com/occipital/OpenNI2.git

Let's refer to the unzipped directory or local repository directory as <openni2_path>. This path should contain a Visual Studio project for Windows and a Makefile for Linux or Mac. Build the project (using Visual Studio or the make command). Library files are generated in directories such as <openni2_path>/Bin/x64-Release and <openni2_path>/Bin/x64-Release/OpenNI2/Drivers (or similar names for another architecture besides x64). On Windows, add these two folders to the system's Path so that applications can find the dll files. On Linux or Mac, edit your ~/.profile file and add lines such as the following to create environment variables related to OpenNI2:

export OPENNI2_INCLUDE="<openni2_path>/Include"
export OPENNI2_REDIST="<openni2_path>/Bin/x64-Release"
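After editing ~/.profile on Linux or Mac, it can be worth confirming that the two variables are actually visible to new processes, since the compile command later in this section depends on them. The following sketch uses only `std::getenv` from the C++ standard library; the function name `missingOpenNI2Variables` is hypothetical.

```cpp
#include <cassert>
#include <cstdlib>
#include <string>
#include <vector>

// Hypothetical helper: returns the names of the OpenNI2-related
// environment variables that are unset or empty in this process.
std::vector<std::string> missingOpenNI2Variables() {
    const char *names[] = {"OPENNI2_INCLUDE", "OPENNI2_REDIST"};
    std::vector<std::string> missing;
    for (const char *name : names) {
        const char *value = std::getenv(name);
        if (value == nullptr || value[0] == '\0') {
            missing.push_back(name);
        }
    }
    return missing;
}
```

If the returned list is not empty, the new lines in ~/.profile have probably not been loaded yet. That file is typically read by login shells, so logging in again or running `source ~/.profile` in the current shell may be needed.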

At this point, we have set up OpenNI2 with support for the Sensor module, so we can create applications for the Xtion PRO Live or other cameras that are based on the PrimeSense hardware. Source code, Visual Studio projects, and Makefiles for several samples can be found in <openni2_path>/Samples.

Note

Optionally, OpenCV's videoio module can be compiled with support for capturing images via OpenNI or OpenNI2. However, we will capture images directly from OpenNI2 and then convert them for use with OpenCV. By using OpenNI2 directly, we gain more control over the selection of camera modes, such as raw NIR capture.

The Xtion devices are designed for USB 2.0 and their standard firmware does not work with USB 3.0 ports. For USB 3.0 compatibility, we need an unofficial firmware update. The firmware updater only runs in Windows, but after the update is applied, the device is USB 3.0-compatible in Linux and Mac, too. To obtain and apply the update, take the following steps:

- Download the update from https://github.com/nh2/asus-xtion-fix/blob/master/FW579-RD1081-112v2.zip?raw=true and unzip it to any destination, which we will refer to as <xtion_firmware_unzip_path>.
- Ensure that the Xtion device is plugged in.
- Open Command Prompt and run the following commands:

  > cd <xtion_firmware_unzip_path>\UsbUpdate
  > !Update-RD108x!

If the firmware updater prints errors, these are not necessarily fatal. Proceed to test the camera with the demo application that we will build next.

To understand the Xtion PRO Live's capabilities as either an active or passive NIR camera, we will build a simple application that captures and displays images from the device. Let's call this application Infravision.

Note

Infravision's source code and build files are in this book's GitHub repository at https://github.com/OpenCVBlueprints/OpenCVBlueprints/tree/master/chapter_1/Infravision.

This project needs just one source file, Infravision.cpp. From the C standard library, we will use printf for printing formatted strings and the EXIT_FAILURE constant for reporting errors. Thus, our implementation begins with the following #include directives:

#include <stdio.h>
#include <stdlib.h>

Infravision will use OpenNI2 and OpenCV. From OpenCV, we will use the core and imgproc modules for basic image manipulation as well as the highgui module for event handling and display. From OpenNI2, we need its main header, OpenNI.h. Here are the relevant #include directives:

#include <opencv2/core.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/imgproc.hpp>
#include <OpenNI.h>

Note

The documentation for OpenNI2 as well as OpenNI can be found online at http://structure.io/openni.

The only function in Infravision is a main function. It begins with definitions of two constants, which we might want to configure. The first of these specifies the kind of sensor data to be captured via OpenNI. This can be SENSOR_IR (monochrome output from the IR camera), SENSOR_COLOR (RGB output from the color camera), or SENSOR_DEPTH (processed, hybrid data reflecting the estimated distance to each point). The second constant is the title of the application window. Here are the relevant definitions:

int main(int argc, char *argv[]) {
    const openni::SensorType sensorType = openni::SENSOR_IR;
    // const openni::SensorType sensorType = openni::SENSOR_COLOR;
    // const openni::SensorType sensorType = openni::SENSOR_DEPTH;
    const char windowName[] = "Infravision";

Based on the capture mode, we will define the format of the corresponding OpenCV matrix. The IR and depth modes are monochrome with 16 bpp. The color mode has three channels with 8 bpp per channel, as seen in the following code:

    int srcMatType;
    if (sensorType == openni::SENSOR_COLOR) {
        srcMatType = CV_8UC3;   // 3 channels, 8 bits each (RGB)
    } else {
        srcMatType = CV_16UC1;  // 1 channel, 16 bits (IR or depth)
    }

Let's proceed by taking several steps to initialize OpenNI2, connect to the camera, configure it, and start capturing images. Here is the code for the first step, initializing the library:

    openni::Status status;
    status = openni::OpenNI::initialize();
    if (status != openni::STATUS_OK) {
        printf(
                "Failed to initialize OpenNI:\n%s\n",
                openni::OpenNI::getExtendedError());
        return EXIT_FAILURE;
    }

Next, we will connect to any available OpenNI-compliant camera:

    openni::Device device;
    status = device.open(openni::ANY_DEVICE);
    if (status != openni::STATUS_OK) {
        printf(
                "Failed to open device:\n%s\n",
                openni::OpenNI::getExtendedError());
        openni::OpenNI::shutdown();
        return EXIT_FAILURE;
    }

We will ensure that the device has the appropriate type of sensor by attempting to fetch information about that sensor:

    const openni::SensorInfo *sensorInfo =
            device.getSensorInfo(sensorType);
    if (sensorInfo == NULL) {
        printf("Failed to find sensor of appropriate type\n");
        device.close();
        openni::OpenNI::shutdown();
        return EXIT_FAILURE;
    }

We will also create a stream but not start it yet:

    openni::VideoStream stream;
    status = stream.create(device, sensorType);
    if (status != openni::STATUS_OK) {
        printf(
                "Failed to create stream:\n%s\n",
                openni::OpenNI::getExtendedError());
        device.close();
        openni::OpenNI::shutdown();
        return EXIT_FAILURE;
    }

We will query the supported video modes and iterate through them to find the one with the highest horizontal resolution (that is, the largest width). Then, we will select this mode:

    // Select the video mode with the highest horizontal resolution.
    {
        const openni::Array<openni::VideoMode> *videoModes =
                &sensorInfo->getSupportedVideoModes();
        int maxResolutionX = -1;
        int maxResolutionIndex = 0;
        for (int i = 0; i < videoModes->getSize(); i++) {
            int resolutionX = (*videoModes)[i].getResolutionX();
            if (resolutionX > maxResolutionX) {
                maxResolutionX = resolutionX;
                maxResolutionIndex = i;
            }
        }
        stream.setVideoMode((*videoModes)[maxResolutionIndex]);
    }

We will start streaming images from the camera:

    status = stream.start();
    if (status != openni::STATUS_OK) {
        printf(
                "Failed to start stream:\n%s\n",
                openni::OpenNI::getExtendedError());
        stream.destroy();
        device.close();
        openni::OpenNI::shutdown();
        return EXIT_FAILURE;
    }

To prepare for capturing and displaying images, we will create an OpenNI frame, an OpenCV matrix, and a window:

    openni::VideoFrameRef frame;
    cv::Mat dstMat;
    cv::namedWindow(windowName);
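The mode-selection block above compares only getResolutionX(), so among modes of equal width it keeps the first one it encounters. As a hedged alternative, the sketch below ranks modes by total pixel count and breaks ties by frame rate. To keep it testable without a camera, it uses a hypothetical `ModeInfo` struct in place of openni::VideoMode; in a real program, the fields would come from VideoMode's getResolutionX(), getResolutionY(), and getFps(). The function name `selectBestModeIndex` is also hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for openni::VideoMode, holding the values that
// getResolutionX(), getResolutionY(), and getFps() would return.
struct ModeInfo {
    int resolutionX;
    int resolutionY;
    int fps;
};

// Returns the index of the mode with the most pixels, preferring the
// higher frame rate when two modes have the same pixel count.
// Returns -1 if the list is empty.
int selectBestModeIndex(const std::vector<ModeInfo> &modes) {
    int bestIndex = -1;
    long long bestPixels = -1;
    int bestFps = -1;
    for (std::size_t i = 0; i < modes.size(); i++) {
        long long pixels =
                static_cast<long long>(modes[i].resolutionX) *
                modes[i].resolutionY;
        if (pixels > bestPixels ||
                (pixels == bestPixels && modes[i].fps > bestFps)) {
            bestPixels = pixels;
            bestFps = modes[i].fps;
            bestIndex = static_cast<int>(i);
        }
    }
    return bestIndex;
}
```

The frame-rate tie-break is a design choice rather than something Infravision requires. A fuller version would probably also check getPixelFormat(), because srcMatType assumes either 16-bit monochrome or 8-bit-per-channel RGB data.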

Next, we will implement the application's main loop. On each iteration, we will capture a frame via OpenNI, convert it to a typical OpenCV format (either grayscale with 8 bpp or BGR with 8 bpp per channel), and display it via the highgui module. The loop ends when the user presses any key. Here is the implementation:

    // Capture and display frames until any key is pressed.
    while (cv::waitKey(1) == -1) {
        status = stream.readFrame(&frame);
        if (frame.isValid()) {
            // Wrap the frame's buffer without copying; the row step is the
            // frame's stride, which may include padding.
            cv::Mat srcMat(
                    frame.getHeight(), frame.getWidth(), srcMatType,
                    (void *)frame.getData(), frame.getStrideInBytes());
            if (sensorType == openni::SENSOR_COLOR) {
                cv::cvtColor(srcMat, dstMat, cv::COLOR_RGB2BGR);
            } else {
                srcMat.convertTo(dstMat, CV_8U);
            }
            cv::imshow(windowName, dstMat);
        }
    }

Note

OpenCV's highgui module has many shortcomings. It does not allow for handling of a standard quit event, such as the clicking of a window's X button. Thus, we quit based on a keystroke instead. Also, highgui imposes a delay of at least 1ms (but possibly more, depending on the operating system's minimum time to switch between threads) when polling events such as keystrokes. This delay should not matter for the purpose of demonstrating a camera with a low frame rate, such as the Xtion PRO Live with its 30 FPS limit. However, in the next section, Supercharging the GS3-U3-23S6M-C and other Point Gray Research cameras, we will explore SDL2 as a more efficient alternative to highgui.
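The loop above wraps each OpenNI frame in a cv::Mat, using getStrideInBytes() as the row step, and converts non-color frames with convertTo(dstMat, CV_8U). Two details are worth seeing in isolation. First, rows may be padded, so pixel (x, y) of a 16-bit frame starts at byte y * stride + 2 * x rather than at (y * width + x) * 2. Second, convertTo without a scale factor saturates, so every 16-bit value above 255 becomes 255. The following standard-C++ sketch, which is not part of Infravision, reads a row-padded 16-bit buffer and linearly stretches the frame's own minimum and maximum to 0 and 255, similar in spirit to cv::normalize with cv::NORM_MINMAX. The function name is hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical helper (not part of Infravision): converts a 16-bit
// monochrome frame whose rows start strideInBytes apart into a tightly
// packed 8-bit image, mapping the frame's minimum to 0 and maximum to 255.
std::vector<std::uint8_t> stretchGray16ToGray8(
        const std::uint8_t *data, int width, int height, int strideInBytes) {
    if (width <= 0 || height <= 0) {
        return {};
    }
    // Each row holds width 16-bit pixels; any extra bytes are padding.
    assert(strideInBytes >= width * 2);

    // Copy pixels out of the padded buffer row by row. memcpy avoids
    // relying on the buffer being aligned for 16-bit reads.
    std::vector<std::uint16_t> pixels(static_cast<std::size_t>(width) * height);
    for (int y = 0; y < height; y++) {
        std::memcpy(&pixels[static_cast<std::size_t>(y) * width],
                    data + static_cast<std::size_t>(y) * strideInBytes,
                    static_cast<std::size_t>(width) * sizeof(std::uint16_t));
    }

    std::vector<std::uint8_t> result(pixels.size(), 0);
    auto minMax = std::minmax_element(pixels.begin(), pixels.end());
    int lo = *minMax.first;
    int hi = *minMax.second;
    if (hi == lo) {
        return result;  // Flat frame: nothing to stretch, so leave it black.
    }
    // Integer linear stretch; the largest product, 65535 * 255, fits in int.
    for (std::size_t i = 0; i < pixels.size(); i++) {
        result[i] = static_cast<std::uint8_t>((pixels[i] - lo) * 255 / (hi - lo));
    }
    return result;
}
```

Per-frame stretching makes dim NIR images visible, but overall brightness can flicker as the scene's extremes change. A steadier option in OpenCV is a fixed scale factor, for example srcMat.convertTo(dstMat, CV_8U, 255.0 / maxValue), where maxValue is a hypothetical constant that you would choose based on the sensor's actual value range.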

After the loop ends (due to the user pressing a key), we will clean up the window and all of OpenNI's resources, as shown in the following code:

    cv::destroyWindow(windowName);
    stream.stop();
    stream.destroy();
    device.close();
    openni::OpenNI::shutdown();
}

This is the end of the source code. On Windows, Infravision can be built as a Visual C++ Win32 Console Project in Visual Studio. Remember to right-click on the project and edit its Project Properties so that C/C++ | General | Additional Include Directories lists the paths to OpenCV's and OpenNI's include directories. Also, edit Linker | Input | Additional Dependencies so that it lists the paths to opencv_core300.lib, opencv_highgui300.lib, and opencv_imgproc300.lib (or similarly named lib files for other OpenCV versions besides 3.0.0) as well as OpenNI2.lib. Finally, ensure that OpenCV's and OpenNI's dll files are in the system's Path.

On Linux or Mac, Infravision can be compiled using a Terminal command such as the following (assuming that the OPENNI2_INCLUDE and OPENNI2_REDIST environment variables are defined as described earlier in this section):

$ g++ Infravision.cpp -o Infravision \
    -I include -I $OPENNI2_INCLUDE -L $OPENNI2_REDIST \
    -Wl,-R$OPENNI2_REDIST -Wl,-R$OPENNI2_REDIST/OPENNI2 \
    -lopencv_core -lopencv_highgui -lopencv_imgproc -lOpenNI2

Note

The -Wl,-R flags specify an additional path where the executable should search for library files at runtime.

After building Infravision, run it and observe the pattern of NIR dots that the Xtion PRO Live projects onto nearby objects. When reflected from distant objects, the dots are sparsely spaced, but when reflected from nearby objects, they are densely spaced or even indistinguishable. Thus, the density of dots is a predictor of distance. Here is a screenshot showing the effect in a sunlit room where NIR light is coming from both the Xtion and the windows:

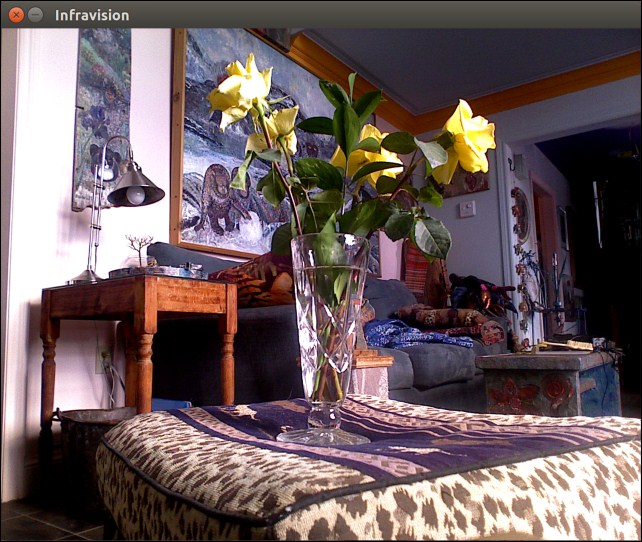

Alternatively, if you want to use the Xtion as a passive NIR camera, simply cover up the camera's NIR emitter. Your fingers will not block all of the emitter's light, but a piece of electrical tape will. Now, point the camera at a scene that has moderately bright NIR illumination. For example, the Xtion should be able to take a good passive NIR image in a sunlit room or beside a campfire at night. However, the camera will not cope well with a sunlit outdoor scene because this is vastly brighter than the conditions for which the device was designed. Here is a screenshot showing the same sunlit room as in the previous example but this time, the Xtion's NIR emitter is covered up:

Note that all the dots have disappeared and the new image looks like a relatively normal black-and-white photo. However, do any objects appear to have a strange glow?

Feel free to modify the code to use SENSOR_DEPTH or SENSOR_COLOR instead of SENSOR_IR. Recompile, rerun the application, and observe the effects. The depth sensor provides a depth map, which appears bright in nearby regions and dark in faraway regions or regions of unknown distance. The color sensor provides a normal-looking image based on the visible spectrum, as seen in the following screenshot of the same sunlit room:
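The depth appearance described above (bright when near, dark when far or unknown) corresponds to an inverted mapping from distance to brightness. The following standard-C++ sketch shows one such mapping; it is not how Infravision itself converts frames. It assumes depth values in millimeters, as with OpenNI2's openni::PIXEL_FORMAT_DEPTH_1_MM format, and that 0 marks a pixel whose depth is unknown, which is OpenNI2's convention. The function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical helper: maps a depth in millimeters to 8-bit brightness so
// that nearMm maps to 255, farMm maps to 1, and unknown depth (0) stays 0.
// Depths outside [nearMm, farMm] are clamped to the nearest end.
std::uint8_t depthToBrightness(std::uint16_t depthMm, int nearMm, int farMm) {
    assert(0 < nearMm && nearMm < farMm);
    if (depthMm == 0) {
        return 0;  // Unknown depth is shown as black.
    }
    int d = std::min(std::max(static_cast<int>(depthMm), nearMm), farMm);
    return static_cast<std::uint8_t>(1 + (farMm - d) * 254 / (farMm - nearMm));
}

// Applies depthToBrightness to every value of a tightly packed depth map.
std::vector<std::uint8_t> depthMapToBrightness(
        const std::vector<std::uint16_t> &depthMm, int nearMm, int farMm) {
    std::vector<std::uint8_t> result;
    result.reserve(depthMm.size());
    for (std::uint16_t d : depthMm) {
        result.push_back(depthToBrightness(d, nearMm, farMm));
    }
    return result;
}
```

With nearMm = 800 and farMm = 3500, the mapping matches the Xtion PRO Live's depth range in the comparison table; for the Carmine 1.09, 350 and 1400 would be the analogous values.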

Compare the previous two screenshots. Note that the leaves of the roses are much brighter in the NIR image. Moreover, the printed pattern on the footstool (beneath the roses) is invisible in the NIR image. (When designers choose pigments, they usually do not think about how the object will look in NIR!)

Perhaps you want to use the Xtion as an active NIR imaging device—capable of night vision at short range—but you do not want the pattern of NIR dots. Just cover the illuminator with something to diffuse the NIR light, such as your fingers or a piece of fabric.

As an example of this diffused lighting, look at the following screenshot, showing a woman's wrists in NIR:

Note that the veins are more distinguishable than they would be in visible light. Similarly, active NIR cameras have a superior ability to capture identifiable details in the iris of a person's eye, as demonstrated in Chapter 6, Efficient Person Identification Using Biometric Properties. Can you find other examples of things that look much different in the NIR and visible wavelengths?

By now, we have seen that OpenNI-compatible cameras can be configured (programmatically and physically) to take several kinds of images. However, these cameras are designed for a specific task—depth estimation in indoor scenes—and they do not necessarily cope well with alternative uses such as outdoor NIR imaging. Next, we will look at a more diverse, more configurable, and more expensive family of cameras.