Cloud-based applications can be built on low-level infrastructure pieces or can use higher-level services that provide abstraction from the management, architecting, and scaling requirements of core infrastructure. In the following section, you will learn about the different cloud-computing models.

The serverless paradigm

The cloud-computing evolution

Cloud providers offer their services according to four main models: IaaS, PaaS, CaaS, and FaaS. All the aforementioned models are just thousands of servers, disks, routers, and cables under the hood. They just add layers of abstraction on top to make management easier and increase the development velocity.

Infrastructure as a Service

Infrastructure as a Service (IaaS), sometimes abbreviated to IaaS, is the basic cloud-consumption model. It exposes an API built on top of a virtualized platform to access compute, storage, and network resources. It allows customers to scale out their application infinitely (no capacity planning).

In this model, the cloud provider abstracts the hardware and physical servers, and the cloud user is responsible for managing and maintaining the guest operating systems and applications on top of it.

AWS is the leader according to Gartner's Infrastructure as a Service Magic Quadrant. Irrespective of whether you're looking for content delivery, compute power, storage, or other service functionality, AWS is the most advantageous of the various available options when it comes to the IaaS cloud-computing model. It dominates the public cloud market, while Microsoft Azure is gradually catching up with to Amazon, followed by Google Cloud Platform and IBM Cloud.

Platform as a Service

Platform as a Service (PaaS) provides developers with a framework in which they can develop applications. It simplifies, speeds up, and lowers the costs associated with the process of developing, testing, and deploying applications while hiding all implementation details, such as server management, load balancers, and database configurations.

PaaS is built on top of IaaS and thus hides the underlying infrastructure and operating systems, to allow developers to focus on delivering business values and reduce operational overhead.

Among the first to launch PaaS was Heroku, in 2007; later, Google App Engine and AWS Elastic Beanstalk joined the fray.

Container as a Service

Container as a Service (CaaS) became popular with the release of Docker in 2013. It made it easy to build and deploy containerized applications on on-premise data centers or over the cloud.

Containers changed the unit of scale for DevOps and site reliability engineers. Instead of one dedicated VM per application, multiple containers can run on a single virtual machine, which allows better server utilization and reduces costs. Also, it brings developer and operation teams closer together by eliminating the "worked on my machine" joke. This transition to containers has allowed multiple companies to modernize their legacy applications and move them to cloud.

To achieve fault-tolerance, high-availability, and scalability, an orchestrations tool, such as Docker Swarm, Kubernetes, or Apache Mesos, was needed to manage containers in a cluster of nodes. As a result, CaaS was introduced to build, ship, and run containers quickly and efficiently. It also handles heavy tasks, such as cluster management, scaling, blue/green deployment, canary updates, and rollbacks.

The most popular CaaS platform in the market today is AWS as 57% of the Kubernetes workload is running on Amazon Elastic Container Service (ECS), Elastic Kubernetes Service (EKS), and AWS Fargate, followed by Docker Cloud, CloudFoundry, and Google Container Engine.

This model, CaaS, enables you to split your virtual machines further to achieve higher utilization and orchestrate containers across a cluster of machines, but the cloud user still needs to manage the life cycle of containers; as a solution to this, Function as a Service (FaaS) was introduced.

Function as a Service

The FaaS model allows developers to run code (called functions) without provisioning or maintaining a complex infrastructure. Cloud Providers deploy customer code to fully-managed, ephemeral, time-boxed containers that are live only during the invocation of the functions. Therefore, business can grow without customers having to worry about scaling or maintaining a complex infrastructure; this is called going serverless.

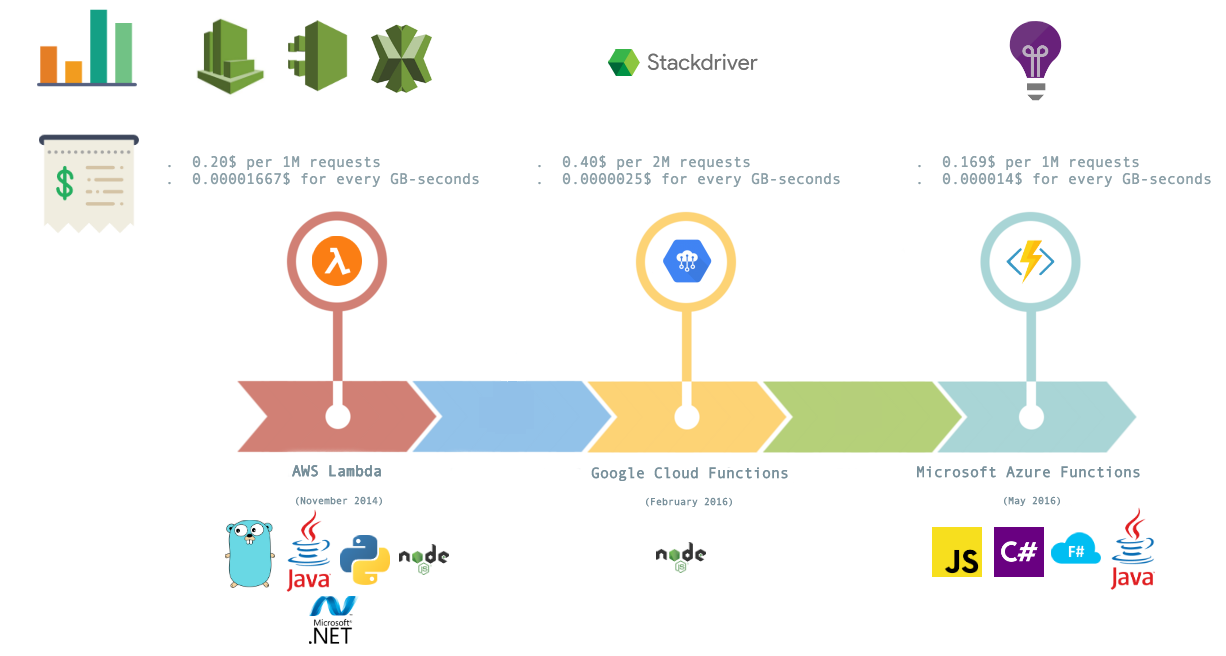

Amazon kicked off the serverless revolution with AWS Lambda in 2014, followed by Microsoft Azure Functions and Google Cloud Functions.

Serverless architecture

Serverless computing, or FaaS, is the fourth way to consume cloud computing. In this model, the responsibility for provisioning, maintaining, and patching servers is shifted from the customer to cloud providers. Developers can now focus on building new features and innovating, and pay only for the compute time that they consume.

Benefits of going serverless

There are a number of reasons why going serverless makes sense:

- NoOps: The server infrastructure is managed by the cloud provider, and this reduces the overhead and increases developer velocity. OS updates are taken care of and patching is done by the FaaS provider. This results in decreased time to market and faster software releases, and eliminates the need for a system administrator.

- Autoscaling and high-availability: Function as a unit of scale leads to small, loosely-coupled, and stateless components that, in the long run, lead to scalable applications. It is up to the service provider to decide how to use its infrastructure effectively to serve requests from the customers and horizontally scale functions-based on the load.

- Cost-optimization: You pay only for the compute time and resources (RAM, CPU, network, or invocation time) that you consume. You don't pay for idle resources. No work indicates no cost. If the billing period on a Lambda function, for example, is 100 milliseconds, then it could significantly reduce costs.

- Polygot: One benefit that the serverless approach brings to the table is that, as a programmer, you can choose between different language runtimes depending on your use case. One part of the application can be written in Java, another in Go, another in Python; it doesn't really matter as long as it gets the job done.

Drawbacks of going serverless

On the other hand, serverless computing is still in its infancy; hence, it is not suitable for all use cases and it does have its limitations:

- Transparency: The infrastructure is managed by the FaaS provider. This is in exchange for flexibility; you don't have full control of your application, you cannot access the underlying infrastructure, and you cannot switch between platform providers (vendor lock-in). In future, we expect increasing work toward the unification of FaaS; this will help avoid vendor lock-in and allow us to run serverless applications on different cloud providers or even on-premise.

- Debugging: Monitoring and debugging tools were built without serverless architecture in mind. Therefore, serverless functions are hard to debug and monitor. In addition, it's difficult to set up a local environment to test your functions before deployment (pre-integration testing). The good news is that tools will eventually arrive to improve observability in serverless environments, as serverless popularity is rising and multiple open source projects and frameworks have been created by the community and cloud providers (AWS X-Ray, Datadog, Dashbird, and Komiser).

- Cold starts: It takes some time to handle a first request by your function as the cloud provider needs to allocate proper resources (AWS Lambda needs to start a container) for your tasks. To avoid this situation, your function must remain in an active state.

- Stateless: Functions need to be stateless to provide the provisioning that enables serverless applications to be transparently scalable. Therefore, to persist data or manage sessions, you need to use an external database, such as DynamoDB or RDS, or an in-memory cache engine, such as Redis or Memcached.

Having stated all these limitations, these aspects will change in the future with an increasing number of vendors coming up with upgraded versions of their platforms.

Serverless cloud providers

There are multiple FaaS providers out there, but to keep it simple we'll compare only the biggest three:

- AWS Lambda

- Google Cloud Functions

- Microsoft Azure Functions

The following is a pictorial comparison:

As shown in the preceding diagram, AWS Lambda is the most used, best-known, and the most mature solution in the serverless space today, and that's why upcoming chapters will be fully dedicated to AWS Lambda.