Fundamentals of deploying Azure IaaS

As described earlier, IaaS refers to building a virtual datacenter. At its core, this comes down to the following:

- Networking

- Storage

- Compute

These are extended with overall security, governance, and monitoring capabilities.

Each of these architectural building blocks will be described in more detail in this section.

Networking

The core foundation of any datacenter, physical or virtual, is networking. This is where you define the IP address ranges and firewall communication settings, and where you determine which virtual machines can connect with each other. It is also where you outline how hybrid datacenter connectivity will be established between your on-premises datacenter(s) and the Azure datacenter(s).

From the ground up, you need to think about and deploy the following Azure services:

- Datacenter hybrid connectivity using Azure site-to-site VPN or ExpressRoute.

- Creating Azure virtual networks and corresponding subnets.

- Deploy firewall-like capabilities using Azure Network Security Groups, Application Security Groups, Azure Firewall, or third-party Network Virtual Appliances.

- Consider how you will perform virtual machine remote management. For advanced security, just-in-time virtual machine access from Azure Security Center or the new Azure Bastion (public preview) might be good options. If you use neither of these, make sure your RDP/SSH sessions sit behind a firewall, and never expose your management host or virtual machines directly to the public internet.

- Just like in your own datacenter, Azure supports load balancing capabilities on its virtual network. Azure Load Balancer is a layer-4 load balancer that supports both TCP and UDP traffic on all ports. If you want to load balance web application protocols (HTTP/HTTPS), consider deploying Azure Application Gateway, a layer-7 load balancing service that can be extended with a Web Application Firewall (WAF) for advanced security and threat detection. The last option for load balancing is deploying a third-party load balancing appliance from trusted vendors such as Kemp, F5, or Barracuda.

- In the case of having multiple Azure datacenters for highly available workload scenarios, deploy Azure Traffic Manager or the new Azure Front Door service, allowing for detection and redirection across multiple Azure regions for failover and avoiding latency issues.
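The load balancing choices in the list above can be summarized as a small decision helper. This is only a sketch of the guidance as stated in this section; the function name `suggest_load_balancer` and its parameters are hypothetical and not part of any Azure SDK, and third-party appliances are left out.

```python
def suggest_load_balancer(protocol: str, multi_region: bool = False) -> str:
    """Map a workload to the Azure service named in the guidance above."""
    proto = protocol.upper()
    if multi_region:
        # Detection and redirection across multiple Azure regions.
        return "Azure Traffic Manager or Azure Front Door"
    if proto in {"HTTP", "HTTPS"}:
        # Layer 7, optionally extended with a Web Application Firewall.
        return "Azure Application Gateway"
    if proto in {"TCP", "UDP"}:
        # Layer 4, TCP and UDP traffic on all ports.
        return "Azure Load Balancer"
    raise ValueError(f"No suggestion for protocol {protocol!r}")


print(suggest_load_balancer("https"))
print(suggest_load_balancer("udp"))
print(suggest_load_balancer("https", multi_region=True))
```

In a real design these services are often combined, for example a global service in front of regional layer-7 or layer-4 load balancers, so treat the helper as a starting point for the conversation rather than a rule.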

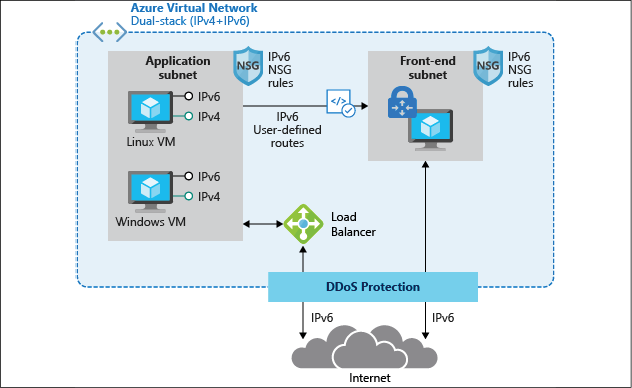

Besides these services, running an Azure virtual network infrastructure is similar to running a network in your own datacenters (see Figure 13 for an example diagram of what this network design could look like). Here are some of the core characteristics and capabilities:

- Bring your own network: This refers to defining the IP ranges of your Azure internal virtual network (VNet). Azure supports the standard class A, class B, and class C private IP address ranges (10.0.0.0/8, 172.16.0.0/12, and 192.168.0.0/16).

- Public IP addresses: Every Azure subscription comes with a default of five public IP addresses that can be allocated to Azure resources (firewall, load balancer, and virtual machine). Know that these IP addresses can be defined as dynamic or static. Also, keep in mind that it will never be a range of IP addresses, but rather standalone. It is also not possible to bring your own range of public IP addresses into an Azure datacenter.

- Internal IP addresses: When you deploy an Azure VNet and subnets, you are in control of the IP range (CIDR-based). Preferably, Azure allocates dynamic IP addresses to resources such as virtual machines (as opposed to the more traditional fixed IP addresses you allocate to servers in your own datacenter). In my opinion, the only servers requiring a fixed IP address in Azure would be software clusters such as SQL or Oracle, or when the virtual machine is running DNS services for the subnet it is deployed in.

- Azure DNS: Azure comes with a fully operational DNS as a service that you can leverage for your own name resolution. When you deploy an Azure VNet and subnet, they use this Azure DNS by default. You can, however, change this setting to refer to your own DNS solution, which can be a virtual machine running in Azure or an on-premises DNS solution (assuming you have hybrid connectivity in place).

- IPv4 / IPv6: Azure virtual networks support IPv4, while IPv6 support is currently in preview. More and more internal services, such as virtual machines and Internet of Things (IoT), support IPv6 for their network connectivity, both inbound and outbound.
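Because VNet and subnet ranges are CIDR-based, it can help to plan the address space before deploying anything. The following is a minimal sketch using Python's standard `ipaddress` module. The 10.0.0.0/16 address space and the subnet names are assumptions chosen for illustration, and the subtraction of five addresses reflects the addresses Azure reserves in every subnet.

```python
import ipaddress

# Hypothetical VNet address space taken from the private 10.0.0.0/8 range.
vnet = ipaddress.ip_network("10.0.0.0/16")

# Hypothetical subnet names; each one gets the next free /24 inside the VNet.
subnet_names = ["frontend", "backend", "management"]
subnets = dict(zip(subnet_names, vnet.subnets(new_prefix=24)))

# Azure reserves 5 addresses in each subnet, so fewer are usable than the raw size.
AZURE_RESERVED_PER_SUBNET = 5

for name, net in subnets.items():
    usable = net.num_addresses - AZURE_RESERVED_PER_SUBNET
    print(f"{name:<12}{net}  usable addresses: {usable}")
```

Planning ranges this way also makes it easier to check up front that the Azure address space does not overlap with your on-premises ranges, which matters once hybrid connectivity is in place.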

Figure 13: Azure virtual network architecture

Further details on Azure networking services, capabilities, and how to deploy and manage them can be found at the following link:

https://docs.microsoft.com/en-us/azure/virtual-network/

Storage

The next layer in the virtual datacenter architecture I will highlight is Azure Storage. Similar again to your on-premises datacenter scenario, Azure offers several different storage services:

- Azure Storage accounts for blobs, tables, queues, and files

- Azure managed disks for virtual machine disks

- Azure File Sync, allowing you to synchronize on-premises file shares to Azure

- Azure big data solutions

Let me describe the core characteristics of each of these services.

Azure Storage accounts

Azure Storage accounts are the easiest way to start consuming storage in Azure. An Azure Storage account is much like your on-premises NAS or SAN solution, on which you define volumes or shares.

When you deploy an Azure Storage account in an Azure region, it offers four different use cases:

- Blob storage: Probably the most common storage type. Blobs allow you to store larger datasets (VHD files, images, documents, log files, and so on) inside storage containers.

- Files: Azure file shares to which you can connect using the SMB file share protocol; this is supported from both Windows endpoints (SMB) and Linux endpoints (mount).

- Tables and queues: These services are mainly supporting your application landscape. Tables are a quick alternative for storing data, whereas queues can be used for sending telemetry information.

Azure Storage accounts offer several options regarding high availability:

- LRS – Local Redundant Storage: All data is replicated three times within the same Azure datacenter building.

- ZRS – Zone Redundant Storage: All data is replicated three times across different Azure buildings in the same Azure region.

- GRS – Geo Redundant Storage: All data is replicated three times in the same Azure datacenter, and replicated another three times to a different Azure region (taking geo-compliance into account, to guarantee the data never leaves the regional boundaries).

- RA-GRS – Read-Access Geo-Redundant Storage: Similar to GRS, but the replicated data in the other Azure region can also be read (it is read-only). Only Microsoft can flip the switch to make this a writeable copy.
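The replication options above differ mainly in how many copies are kept and where. The sketch below simply encodes the figures from this list so they can be compared side by side; the `Redundancy` class and its field names are hypothetical, and `RA-GRS` is used as the short name for the read-access variant of GRS.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Redundancy:
    primary_copies: int        # copies kept in the primary Azure region
    secondary_copies: int      # copies kept in a different Azure region
    secondary_readable: bool   # whether the secondary copy can be read

    @property
    def total_copies(self) -> int:
        return self.primary_copies + self.secondary_copies


# Values taken from the descriptions in the list above.
OPTIONS = {
    "LRS": Redundancy(3, 0, False),
    "ZRS": Redundancy(3, 0, False),
    "GRS": Redundancy(3, 3, False),
    "RA-GRS": Redundancy(3, 3, True),
}

for name, option in OPTIONS.items():
    print(f"{name:<7} total copies: {option.total_copies}  "
          f"readable secondary: {option.secondary_readable}")
```

Note that LRS and ZRS keep the same number of copies; the difference is that ZRS spreads them across different buildings in the region, which a simple copy count does not capture.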

Azure managed disks

For a long time, Azure Storage accounts were the only storage option in Azure for building virtual disks for your virtual machines, dating back to Azure classic 10 years ago. While storage accounts were good, they also came with some limitations, performance and scalability being the most important ones.

That's why Microsoft released a new virtual disk storage architecture about 3 years back, called managed disks. With managed disks, the Azure Storage fabric does most of the work for you. You don't have to worry about creating a storage account or about performance or scalability issues; you just create the disks and you're done. Talking about performance, managed disks come with a full list of SKUs to choose from, offering everything from average (P10, 500 IOPS) to high-performing disks (P80, 20,000 IOPS), and there are also Ultra SSD disk types available, going up to 160,000 IOPS.

It is important to note that disk subsystem performance also depends largely on the VM size you allocate to the Azure virtual machine; the same goes for storage capacity, as not all Azure VM sizes support a large number of disks. Besides making larger disk volumes available to the operating system, a larger number of disks can also be used to configure disk striping in the virtual machine's disk subsystem, which results in better IOPS performance.
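The interplay between disk striping and the VM size can be illustrated with a little arithmetic. In this sketch, striping is assumed to add up the IOPS of the individual disks, and the VM size is assumed to impose an upper limit on the total. The 500 IOPS figure is the P10 value quoted above; the VM limit of 3,200 IOPS is a made-up number for illustration, not the limit of any specific VM size.

```python
def striped_iops(disk_iops: int, disk_count: int, vm_iops_cap: int) -> int:
    """Estimate the IOPS of a striped volume, limited by the VM size."""
    if disk_count < 1:
        raise ValueError("disk_count must be at least 1")
    # Striping spreads I/O over all disks, so their IOPS add up,
    # but the VM itself cannot exceed its own limit.
    return min(disk_iops * disk_count, vm_iops_cap)


P10_IOPS = 500             # from the managed disk SKUs mentioned above
HYPOTHETICAL_VM_CAP = 3200  # assumed VM-level limit, for illustration only

for count in (1, 4, 8):
    total = striped_iops(P10_IOPS, count, HYPOTHETICAL_VM_CAP)
    print(f"{count} x P10 striped: {total} IOPS")
```

With these assumed numbers, four disks deliver the full 2,000 IOPS, while eight disks are held back by the VM limit. This is why the VM size and the disk layout should be chosen together.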

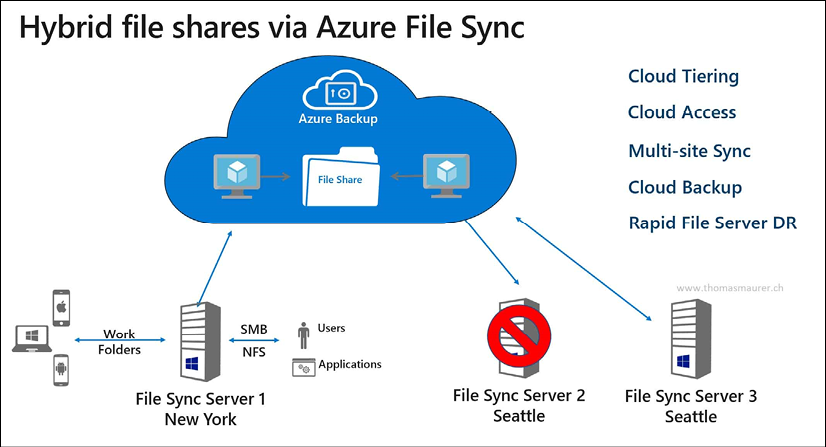

Azure File Sync

If you have multiple file servers today, you most probably have a solution in place to keep them in sync. In the Windows Server world, this could be done using DFS—Distributed File System—which has been a core service since Windows Server 2012. When migrating applications to the cloud, you might also need to migrate the file share dependencies. One option would be deploying Azure virtual machine-based file servers for this. But that might be overkill, especially if those machines are only offering file share services. A valid alternative is deploying Azure File Sync.

By using Azure File Sync (see Figure 14 for a sample architecture), you can centralize your file shares in Azure Files (as part of Azure Storage accounts), using them in the same way as your on-premises Windows file servers, but without the Windows Server layer in between. Starting from a Storage Sync Service in Azure, you create a Sync Group. You deploy the Azure File Sync agent on each Windows file server and register that server with the Storage Sync Service; once the registered server is added to the Sync Group, the agent takes care of the synchronization process of your file shares.

Azure File Sync also provides storage tiering functionality, allowing you to save on storage costs for archive data that you rarely access but need to keep for compliance reasons.

Data deduplication is another benefit for these cloud tiering-enabled volumes on Windows Server 2016 and 2019.

Figure 14: Azure File Sync

Compute

This brings us to the next logical layer in the virtual datacenter, deploying virtual machines. This is probably one of the most common use cases for the public cloud.

As mentioned in the introductory paragraphs at the beginning of this chapter, virtualization has dramatically changed how organizations are deploying and managing their IT infrastructure. Thanks to solutions such as VMware and Hyper-V, systems can be consolidated with a smaller physical server footprint, are easy to deploy, are easy to recover, and provide other benefits when it comes to moving them across environments (from dev/test to production, for example).

Most of the aspects and characteristics you know about from running virtual machines in your own datacenter can be mapped to running virtual machines in Azure. I often describe it as "just another datacenter." (However, obviously Azure is a lot more than that…)

Just like with the networking layer, let me provide you with an overview of the several benefits that come with deploying virtual machine workloads in Azure:

- Deploying Azure virtual machines allows for agility and scale, and Azure offers a multitude of administrative processes to do so. Leverage your PowerShell expertise to deploy and manage virtual machines, just as you would in your own datacenter. Or, extend to Infrastructure as Code, which allows you to deploy virtual machines from Azure templates. This covers not only Azure resource deployment but can also be extended with configuration management tools such as PowerShell Desired State Configuration, Chef, Puppet, and more.

- Azure virtual machines come with a default SLA of 99.9% for a single deployed virtual machine with premium disks. If your business applications require an even better SLA, deploy your virtual machines as part of a virtual machine availability set. This guarantees an SLA of 99.95%, whereby the different VMs in the availability set will never run on the same physical rack in the Azure datacenter. Or, deploy VMs across availability zones, raising the SLA to 99.99%. In this architecture, your VMs (two or more instances) are spread across different physical Azure datacenter buildings in the same Azure region.

- You might have business requirements in place, forcing you to deploy virtual machines in different locations, hundreds or thousands of miles apart from each other. Or, maybe you want to run the applications as close to the customer/end user as possible, to avoid any latency issues. Azure can accommodate this exact scenario, as all Azure datacenter regions are interconnected with each other using Microsoft Backbone cabling. From a connectivity perspective, you can use Azure VNet peering or a site-to-site VPN to build out multi-region datacenters for enterprise-ready high availability.

- If you don't need real-time high availability, but rather are looking into a quick and solid disaster recovery scenario, know that Azure has a service baked into the platform known as Azure Site Recovery. Based on replication of virtual machine disk state changes, Azure VMs are kept in sync across multiple Azure regions (keeping compliance and data sovereignty boundaries in place). If a failure or disaster hits one of the virtual machines, your IT admin can start a manual (or scripted) failover process, guaranteeing the uptime of your application workloads in the other datacenter region. The main benefit, besides fast failover, is cost saving. As long as the disaster recovery virtual machines are offline, you only pay for the underlying storage. During a disaster, you pay the consumption cost of the running VMs only for as long as the disaster scenario lasts.

- Although the Azure datacenters are owned and managed by Microsoft, it doesn't mean they are only limited to running Windows Server operating systems and Microsoft Server applications. 60% of Azure virtual machines are running a Linux operating system today.

- Many business applications, such as SAP, Oracle, and Citrix, are available in the Azure Marketplace, allowing for easier deployment, just like most other Azure virtual machine workloads. Starting from pre-configured images, any organization can deploy an enterprise application architecture in no time. Support for these third-party solution workloads is provided by Microsoft, together with the vendor.

- Azure offers more than 125 different virtual machine sizes, each having different characteristics and capabilities. Starting from a single CPU core virtual machine with 0.75 GB of memory, you can go up to virtual machines with 256 virtual cores and more than 430 GB memory. Next, you also have specific virtual machine families, supporting specific workloads (for example, the N-Series family is equipped with an Nvidia chipset for high-end graphical compute applications, the H-Series is recommended for high-performance compute (HPC) infrastructure, and you also have specific virtual machine sizes for SAP and SAP HANA).

For an overview of Azure virtual machine types and sizes, use this link to the Azure documentation:

https://docs.microsoft.com/en-us/azure/virtual-machines/windows/sizes

For a broader view of Azure compute capabilities, use this link:

https://azure.microsoft.com/en-us/product-categories/compute/
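To put the SLA percentages from the list above into perspective, it helps to convert them into the maximum downtime they allow. The following sketch does this per year, assuming a 365-day year; the function name is hypothetical and the calculation is plain arithmetic, not an official Azure SLA calculator.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def max_downtime_hours(sla_percent: float) -> float:
    """Return the yearly downtime (in hours) that an SLA still permits."""
    if not 0 <= sla_percent <= 100:
        raise ValueError("sla_percent must be between 0 and 100")
    return HOURS_PER_YEAR * (1 - sla_percent / 100)


# SLA figures quoted in this section.
SLAS = {
    "Single VM with premium disks": 99.9,
    "Availability set": 99.95,
    "Availability zones": 99.99,
}

for deployment, sla in SLAS.items():
    hours = max_downtime_hours(sla)
    print(f"{deployment:<30}{sla:>6}%  up to {hours:.2f} hours per year")
```

The difference between the options is larger than the percentages suggest: 99.9% allows almost nine hours of downtime per year, 99.95% about half of that, and 99.99% less than one hour.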

The last Azure compute characteristic I want to highlight here is Azure Confidential Computing. In early May 2018, Microsoft Azure became the first cloud platform to enable these new data security capabilities. Relying mainly on technologies such as Intel SGX and Virtualization-Based Security (VBS), it offers Trusted Execution Environments (TEEs) in a public cloud. With more and more businesses moving their business-critical workloads to the cloud, security becomes even more crucial. Azure Confidential Computing aims to deliver top-notch security and protection for data in the cloud. The concept is based on the following key domains:

- Hardware: Intel SGX chipset from a hardware security perspective

- Compute: The Azure compute platform allows VM instances with TEE enabled

- Services: Secured platforms enable highly secured workloads such as blockchain

- Research: The Microsoft Research department is working closely with the Azure product groups (PGs) to continuously improve the capabilities of this trusted platform