Securing an Azure storage account with SAS using PowerShell

A Shared Access Signature (SAS) provides more granular access to blobs by specifying an expiry time, a set of permissions, and the IP addresses that are allowed to use it.

Using an SAS, we can grant different permissions to users or applications on different blobs, based on the requirement. For example, if an application needs to read one file/blob from a container, instead of providing access to all the files in the container, we can use an SAS to provide read access on only the required blob.

In this recipe, we'll learn to create and use an SAS to access blobs.

Getting ready

Before you start, go through the following steps:

- Make sure you have an existing Azure storage account. If not, create one by following the Provisioning an Azure storage account using PowerShell recipe in Chapter 1, Creating and Managing Data in Azure Data Lake.

- Make sure you have an existing Azure storage container. If not, create one by following the Creating containers and uploading files to Azure Blob storage using PowerShell recipe.

- Make sure you have existing blobs/files in an Azure storage container. If not, you can upload blobs by following the previous recipe.

- Log in to your Azure subscription in PowerShell. To log in, run the Connect-AzAccount command in a new PowerShell window and follow the instructions.

How to do it…

Let's begin by securing blobs using an SAS.

Securing blobs using an SAS

Perform the following steps:

- Execute the following command in the PowerShell window to get the storage context:

      $resourcegroup = "packtadestorage"
      $storageaccount = "packtadestoragev2"
      # get storage context
      $storagecontext = (Get-AzStorageAccount -ResourceGroupName $resourcegroup -Name $storageaccount).Context

- Execute the following commands to get the SAS token for the logfile1.txt blob in the logfiles container with list and read permissions:

      # set the token expiry time
      $starttime = Get-Date
      $endtime = $starttime.AddDays(1)
      # get the SAS token into a variable
      $sastoken = New-AzStorageBlobSASToken -Container "logfiles" -Blob "logfile1.txt" -Permission lr -StartTime $starttime -ExpiryTime $endtime -Context $storagecontext
      # view the SAS token
      $sastoken

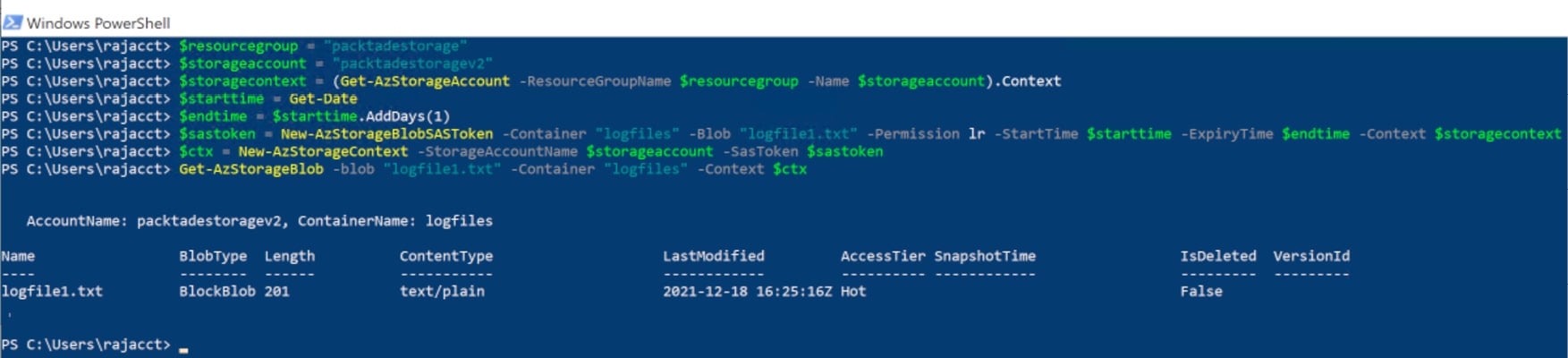

- Execute the following commands to list the blob using the SAS token:

      # get storage account context using the SAS token
      $ctx = New-AzStorageContext -StorageAccountName $storageaccount -SasToken $sastoken
      # list the blob details
      Get-AzStorageBlob -Blob "logfile1.txt" -Container "logfiles" -Context $ctx

You should get output as shown in the following screenshot:

Figure 2.40 – Listing blobs using an SAS

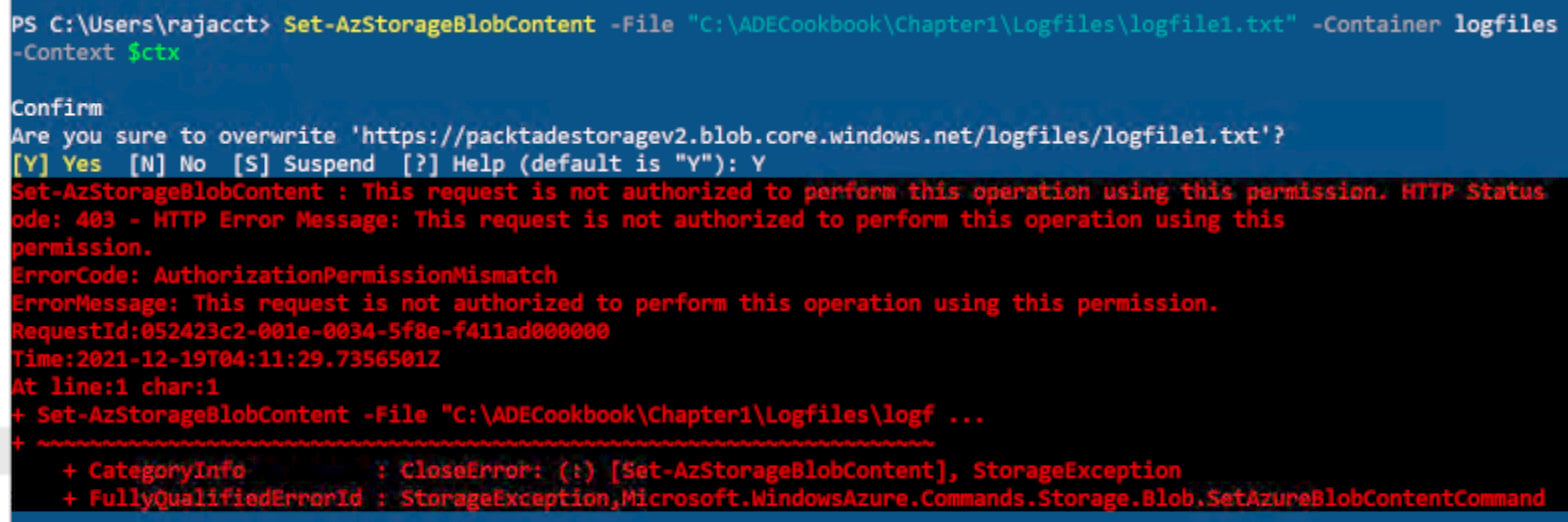

- Execute the following command to write data to logfile1.txt. Ensure you have the Logfile1.txt file in the C:\ADECookbook\Chapter1\Logfiles\ folder on the machine you are running the script from:

      Set-AzStorageBlobContent -File C:\ADECookbook\Chapter1\Logfiles\Logfile1.txt -Container logfiles -Context $ctx

You should get output as shown in the following screenshot:

Figure 2.41 – Uploading a blob using an SAS

The write fails, as the SAS token was created with only list and read access.
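In a script, the rejected upload can be detected rather than read off the console. The following is a minimal sketch, not part of the original recipe: `Test-BlobWrite` is a hypothetical helper name, and it assumes `$ctx` is the SAS-based context created in the earlier step.

```powershell
# Hypothetical helper: returns $true if the upload succeeds, $false if it is rejected.
function Test-BlobWrite {
    param(
        [Parameter(Mandatory)] [string] $File,
        [Parameter(Mandatory)] [string] $Container,
        [Parameter(Mandatory)] $Context
    )
    try {
        # -ErrorAction Stop makes any error from the cmdlet catchable here.
        Set-AzStorageBlobContent -File $File -Container $Container -Context $Context -Force -ErrorAction Stop | Out-Null
        return $true
    }
    catch {
        # Report the reason the service (or the cmdlet) gave for the failure.
        Write-Warning "Upload rejected: $($_.Exception.Message)"
        return $false
    }
}
```

With the list-and-read token from this recipe, calling `Test-BlobWrite -File C:\ADECookbook\Chapter1\Logfiles\Logfile1.txt -Container logfiles -Context $ctx` would be expected to return `$false`.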

Securing a container with an SAS

Perform the following steps:

- Execute the following command to create a container stored access policy:

      $resourcegroup = "packtadestorage"
      $storageaccount = "packtadestoragev2"
      # get storage context
      $storagecontext = (Get-AzStorageAccount -ResourceGroupName $resourcegroup -Name $storageaccount).Context
      $starttime = Get-Date
      $endtime = $starttime.AddDays(1)
      New-AzStorageContainerStoredAccessPolicy -Container logfiles -Policy writepolicy -Permission lw -StartTime $starttime -ExpiryTime $endtime -Context $storagecontext

- Execute the following command to create the SAS token:

      # get the SAS token
      $sastoken = New-AzStorageContainerSASToken -Name logfiles -Policy writepolicy -Context $storagecontext

- Execute the following commands to list all the blobs in the container using the SAS token:

      # get the storage context with SAS token
      $ctx = New-AzStorageContext -StorageAccountName $storageaccount -SasToken $sastoken
      # list blobs using SAS token
      Get-AzStorageBlob -Container logfiles -Context $ctx

How it works…

To generate a shared access token for a blob, use the New-AzStorageBlobSASToken command. We need to provide the blob name, container name, permission (l = list, r = read, and w = write), and storage context to generate an SAS token. We can additionally secure the token by providing IPs that can access the blob.
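As an illustration of the IP restriction mentioned above, here is a sketch that wraps `New-AzStorageBlobSASToken` with its `-IPAddressOrRange` and `-Protocol` parameters. The wrapper name `New-RestrictedBlobSasToken`, the two-hour default lifetime, and the address range in the usage note are assumptions made for the example, not values from the recipe.

```powershell
# Hypothetical wrapper: issues a read-only, HTTPS-only blob SAS limited to an IP range.
function New-RestrictedBlobSasToken {
    param(
        [Parameter(Mandatory)] $Context,
        [Parameter(Mandatory)] [string] $Container,
        [Parameter(Mandatory)] [string] $Blob,
        [Parameter(Mandatory)] [string] $IpRange,
        [int] $Hours = 2   # assumed lifetime for the example
    )
    $start = Get-Date
    $sasParams = @{
        Container        = $Container
        Blob             = $Blob
        Permission       = "r"
        Protocol         = "HttpsOnly"      # reject plain HTTP requests
        IPAddressOrRange = $IpRange         # single address or start-end range
        StartTime        = $start
        ExpiryTime       = $start.AddHours($Hours)
        Context          = $Context
    }
    New-AzStorageBlobSASToken @sasParams
}
```

A call might look like `New-RestrictedBlobSasToken -Context $storagecontext -Container "logfiles" -Blob "logfile1.txt" -IpRange "203.0.113.0-203.0.113.255"`, where the range is a documentation-only placeholder to be replaced with the client's real addresses.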

We then use the SAS token to get the storage context using the New-AzStorageContext command. We use the storage context to access the blobs using the Get-AzStorageBlob command. Note that we can only list and read blobs and can't write to them, as the SAS token doesn't have write permissions.
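To see which permissions a token carries, we can split it into its query parameters; `sp` holds the permissions and `se` the expiry time. The sketch below uses a hypothetical helper, `ConvertFrom-SasToken`, and a made-up sample token with a placeholder signature, so it runs without an Azure connection.

```powershell
# Hypothetical helper: splits a SAS token into an ordered table of its query parameters.
function ConvertFrom-SasToken {
    param([Parameter(Mandatory)] [string] $Token)
    $fields = [ordered]@{}
    foreach ($pair in $Token.TrimStart('?').Split('&')) {
        # Split on the first '=' only, so '=' padding in a signature is preserved.
        $name, $value = $pair.Split('=', 2)
        $fields[$name] = [System.Uri]::UnescapeDataString($value)
    }
    return $fields
}

# Made-up token for illustration only; the signature is a placeholder.
$sample = "?sv=2021-06-08&sr=b&sp=rl&st=2024-01-01T00%3A00%3A00Z&se=2024-01-02T00%3A00%3A00Z&sig=PLACEHOLDER"
$parsed = ConvertFrom-SasToken -Token $sample
$parsed["sp"]   # permissions granted by the token
$parsed["se"]   # expiry time (UTC)
```

For the sample token, `sp` contains no `w`, which is the kind of token that cannot be used to write. The same helper can be applied to `$sastoken` from the recipe.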

To generate a shared access token for a container, we first create a stored access policy for the container using the New-AzStorageContainerStoredAccessPolicy command. The access policy specifies the start and expiry time and the permissions. We then generate the SAS token by passing the access policy name to the New-AzStorageContainerSASToken command. IP restrictions, if required, are set on the token itself rather than in the policy.
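Because a token created from a stored access policy refers to that policy by name, changing or removing the policy affects every token issued from it. The following sketch, which goes slightly beyond the recipe, shows one way to do that with `Set-AzStorageContainerStoredAccessPolicy` and `Remove-AzStorageContainerStoredAccessPolicy`. The helper name `Disable-ContainerSasPolicy` is hypothetical, and `$storagecontext` is assumed to be the account context from the steps above.

```powershell
# Hypothetical helper: expires a stored access policy now, or deletes it with -Delete.
function Disable-ContainerSasPolicy {
    param(
        [Parameter(Mandatory)] [string] $Container,
        [Parameter(Mandatory)] [string] $Policy,
        [Parameter(Mandatory)] $Context,
        [switch] $Delete
    )
    if ($Delete) {
        # Removing the policy invalidates tokens that reference it.
        Remove-AzStorageContainerStoredAccessPolicy -Container $Container -Policy $Policy -Context $Context
    }
    else {
        # Moving the expiry time to now keeps the policy but ends its validity.
        Set-AzStorageContainerStoredAccessPolicy -Container $Container -Policy $Policy -ExpiryTime (Get-Date) -Context $Context
    }
}
```

For example, `Disable-ContainerSasPolicy -Container logfiles -Policy writepolicy -Context $storagecontext` would expire the policy created in this recipe; the change may take a short while to take effect on the service.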

We can now access the container and the blobs using the SAS token.