Resource configuration challenges

In the previous section, we covered the two configuration methods that Kubernetes supports: imperative and declarative. What challenges do users need to be aware of when creating Kubernetes resources with either method?

Let's discuss some of the most common challenges.

The many types of Kubernetes resources

First of all, Kubernetes has a large number of resource types. Here is a short list of the resources a developer should be aware of:

- Deployment

- StatefulSet

- Service

- Ingress

- ConfigMap

- Secret

- StorageClass

- PersistentVolumeClaim

- ServiceAccount

- Role

- RoleBinding

- Namespace

Out of the box, deploying an application on Kubernetes is not as simple as pushing a big red button marked Deploy. Developers need to determine which resources are required to deploy their application, and they need to understand those resources well enough to configure them appropriately. This requires considerable knowledge of, and training on, the platform. While understanding and creating resources may already sound like a large hurdle, it is only the first of many operational challenges.

Keeping the live and local states in sync

One method of configuring Kubernetes resources that we would encourage is to maintain their configuration in source control, where teams can edit and share it and where the repository becomes the source of truth. The configuration defined in source control (referred to as the 'local state') is then applied to the Kubernetes environment, at which point the resources become 'live' and enter what can be called the 'live state.' This sounds simple enough, but what happens when developers need to make changes to their resources? The proper answer is to modify the local files and then apply the changes, so that the source of truth is updated and the live state is synchronized with it. However, this isn't what usually ends up happening. It is often simpler, in the short term, to modify the live resource in place with kubectl patch or kubectl edit and skip modifying the local files entirely. The local and live states then no longer match, and this inconsistency makes it difficult to manage Kubernetes resources as the number of teams and applications grows.
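To make the idea of drift between the two states concrete, here is a minimal Python sketch that compares a local state with a live state and reports the fields that no longer match. The dictionaries are simplified, hypothetical stand-ins for real manifests, and `find_drift` is an illustrative helper, not part of Kubernetes or kubectl.

```python
from typing import Any


def find_drift(local: Any, live: Any, path: str = "") -> list[str]:
    """Return the dotted paths at which the live state differs from the local state."""
    if isinstance(local, dict) and isinstance(live, dict):
        drift: list[str] = []
        for key in sorted(local.keys() | live.keys()):
            child = f"{path}.{key}" if path else str(key)
            if key not in local or key not in live:
                drift.append(child)  # field exists on only one side
            else:
                drift.extend(find_drift(local[key], live[key], child))
        return drift
    # Leaf values (or mismatched types): compare directly.
    return [] if local == live else [path]


# Local state: what the file in source control declares (hypothetical values).
local_state = {
    "kind": "Deployment",
    "metadata": {"name": "web", "labels": {"app": "web"}},
    "spec": {"replicas": 2},
}

# Live state: the same resource after someone changed it in place
# without updating the local file.
live_state = {
    "kind": "Deployment",
    "metadata": {"name": "web", "labels": {"app": "web", "debug": "true"}},
    "spec": {"replicas": 5},
}

drifted_fields = find_drift(local_state, live_state)
print(drifted_fields)
```

Against a real cluster, `kubectl diff -f <file>` serves a similar purpose by showing how the live resource would change if the local file were applied.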

Application life cycles are hard to manage

Life cycle management is a loaded term, but in this context, we'll use it to mean installing, upgrading, and rolling back applications. In the Kubernetes world, an installation creates the resources needed to deploy and configure an application. The initial installation creates what we refer to here as version 1 of an application.

An upgrade, then, can be thought of as an edit or modification to one or more of those Kubernetes resources. Each batch of edits counts as a single upgrade. A developer could modify a single Service resource, which would bump the application to version 2. The developer could then modify a Deployment, a ConfigMap, and a Service, bumping the application to version 3.

As newer versions of an application continue to be rolled out onto Kubernetes, it becomes more difficult to keep track of the changes that have occurred. Kubernetes, in most cases, does not have an inherent way of keeping a history of changes. This makes upgrades harder to keep track of, and it makes restoring a prior version of an application much more difficult. Say a developer previously made an incorrect edit on a particular resource. How would a team know where to roll back to? The n-1 case is easy to work out, as that is simply the previous version. What happens, however, if the latest stable release was five versions ago? Teams often end up scrambling to resolve issues because they cannot quickly identify the most recent configuration that was known to work.
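The bookkeeping that is missing here can be sketched in a few lines of Python. The `Revision` and `ReleaseHistory` classes below are hypothetical and exist only for illustration; they are not a Kubernetes API. Each install or upgrade stores a full snapshot of the resources, and a revision can be marked as stable so that a rollback target can be found later.

```python
import copy
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Revision:
    version: int
    resources: dict       # snapshot of every resource at this version
    stable: bool = False  # set once the version is known to work


@dataclass
class ReleaseHistory:
    revisions: list = field(default_factory=list)

    def record(self, resources: dict) -> Revision:
        """Store a snapshot as the next version (install is 1, each upgrade adds 1)."""
        revision = Revision(len(self.revisions) + 1, copy.deepcopy(resources))
        self.revisions.append(revision)
        return revision

    def latest_stable(self) -> Optional[Revision]:
        """Return the newest revision marked stable, or None if there is none."""
        for revision in reversed(self.revisions):
            if revision.stable:
                return revision
        return None


history = ReleaseHistory()
resources = {
    "Service": {"port": 80},
    "Deployment": {"replicas": 2},
    "ConfigMap": {"LOG_LEVEL": "info"},
}
history.record(resources).stable = True   # version 1: initial installation

resources["Service"]["port"] = 8080
history.record(resources)                  # version 2: Service modified

resources["Deployment"]["replicas"] = 3
resources["ConfigMap"]["LOG_LEVEL"] = "debug"
resources["Service"]["port"] = 9090
history.record(resources)                  # version 3: three resources modified

target = history.latest_stable()
print(f"roll back to version {target.version}")
```

Because every snapshot is deep-copied, later edits do not alter earlier revisions, so the rollback target still holds the configuration that was actually deployed at that version.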

Resource files are static

This challenge primarily affects the declarative configuration style of applying YAML resources. Part of the difficulty in following a declarative approach is that Kubernetes resource files are not natively designed to be parameterized. Resource files are largely designed to be written out in full before being applied, and their contents remain the source of truth until the file is modified. When dealing with Kubernetes, this can be a frustrating reality. Some API resources are lengthy and contain many customizable fields, so writing and configuring YAML resources in full can be quite cumbersome.
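As a rough illustration of what parameterization would look like, the sketch below fills in a single Deployment manifest with Python's standard `string.Template`. This is plain string substitution performed outside Kubernetes, not a feature of the platform, and the application names and image references are hypothetical.

```python
from string import Template

# One parameterized manifest instead of several hand-written files.
# The $placeholders are filled in by Python before anything is applied.
DEPLOYMENT_TEMPLATE = Template("""\
apiVersion: apps/v1
kind: Deployment
metadata:
  name: $name
spec:
  replicas: $replicas
  selector:
    matchLabels:
      app: $name
  template:
    metadata:
      labels:
        app: $name
    spec:
      containers:
        - name: $name
          image: $image
""")


def render_deployment(name: str, image: str, replicas: int = 1) -> str:
    """Return a complete Deployment manifest for one application."""
    return DEPLOYMENT_TEMPLATE.substitute(name=name, image=image, replicas=replicas)


frontend = render_deployment("frontend", "example.com/frontend:1.0", replicas=2)
backend = render_deployment("backend", "example.com/backend:1.0")
print(frontend)
```

Only the three values that differ between applications are supplied by the caller; everything else is written once in the template.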

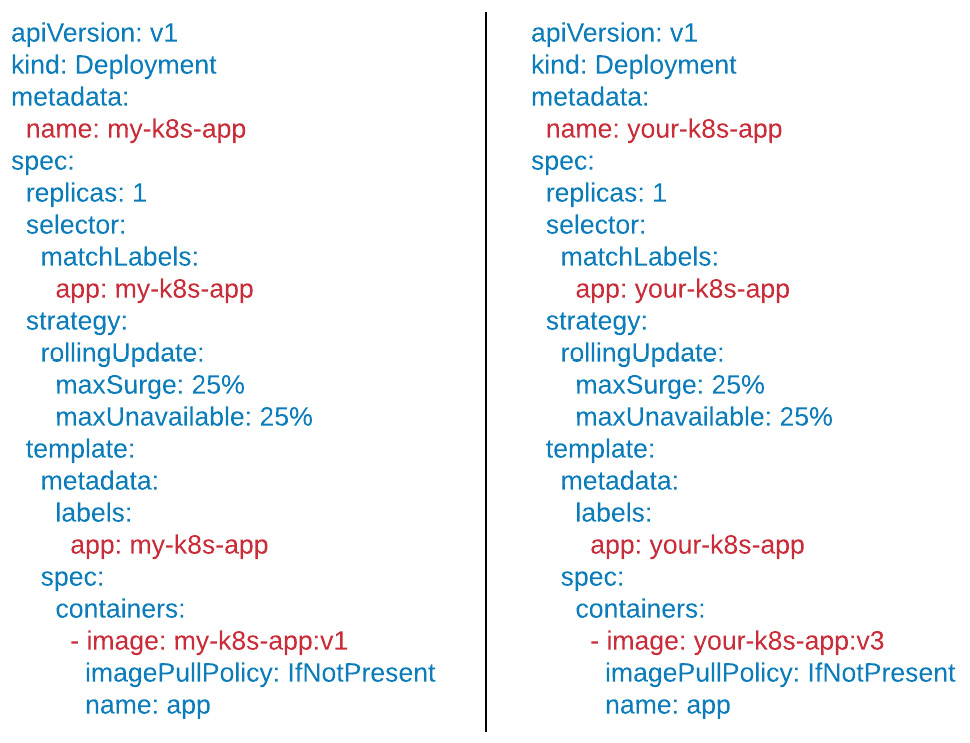

Static files lend themselves to becoming boilerplate. Boilerplate represents text or code that remains largely consistent in different but similar contexts. This becomes an issue if developers manage multiple different applications, where they could potentially manage multiple different Deployment resources, multiple different Services, and so on. In comparing the different applications' resource files, you may find large numbers of similar YAML configuration between them.

The following figure depicts an example of two resources with significant boilerplate configuration between them. The blue text denotes lines that are boilerplate, while the red text denotes lines that are unique:

Figure 1.5 - An example of two resources with boilerplate

Notice, in this example, that each file is almost exactly the same. When managing files that are as similar as this, boilerplate becomes a major headache for teams managing their applications in a declarative fashion.
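The amount of boilerplate between two files can also be measured. The following sketch uses Python's standard `difflib` module to separate the lines that two manifests share from the lines that are unique to each. The two Service manifests are hypothetical examples written for this sketch, not the files shown in the figure.

```python
import difflib

FRONTEND = """\
apiVersion: v1
kind: Service
metadata:
  name: frontend
spec:
  selector:
    app: frontend
  ports:
    - port: 80
      targetPort: 8080
""".splitlines()

BACKEND = """\
apiVersion: v1
kind: Service
metadata:
  name: backend
spec:
  selector:
    app: backend
  ports:
    - port: 80
      targetPort: 8080
""".splitlines()


def split_boilerplate(first: list, second: list) -> tuple:
    """Return (shared, only_first, only_second) lists of lines."""
    shared, only_first, only_second = [], [], []
    matcher = difflib.SequenceMatcher(a=first, b=second, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            shared.extend(first[i1:i2])        # identical in both files
        else:
            only_first.extend(first[i1:i2])    # present only in the first file
            only_second.extend(second[j1:j2])  # present only in the second file
    return shared, only_first, only_second


shared, only_first, only_second = split_boilerplate(FRONTEND, BACKEND)
print(f"{len(shared)} of {len(FRONTEND)} lines are boilerplate")
print("unique to first file:", only_first)
print("unique to second file:", only_second)
```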