Configuring an external database

In this recipe, we will attach Orchestrator to an external database. The internal Orchestrator PostgreSQL is production-ready, however for certain designs, such as Orchestrator Cluster and large deployments we still require one.

Getting ready

We will need a database; the following databases are supported with vRO7:

- Oracle 11g all editions - 64 bit

- Oracle 12g/c all editions - 64 bit

- SQL Server 2008 R1/R2 all editions - 64 bit

- SQL Server 2012 R1/R2 all editions - 64 bit

- PostgreSQL

You will need to create an empty database for Orchestrator, and you should also create a dedicated user account for Orchestrator to access the database.

If your database requires SSL, you will need to import the SSL certificate first; for this, see the How it works... section of this recipe.

Tip

When you replace the database, you will have to reconfigure the following items: Licensing and Packaging Signing Certificate.

How to do it...

In this example, we have added an MS-SQL database to Orchestrator. The other databases are not that much different.

The following information is needed for each type of database:

|

Database type |

Oracle |

SQL Server |

PostgreSQL |

|

Login |

required |

required |

required |

|

SSL |

optional |

optional |

optional |

|

Hostname |

required |

required |

required |

|

Port |

1521 or custom |

1433 or custom |

5432 or custom |

|

Database name |

- |

required |

required |

|

Instance |

required |

optional |

- |

|

Domain |

- |

optional |

- |

|

Use NTLMv2 |

- |

optional |

- |

To configure a database, follow these steps:

My MS-SQL database is stored on the VM called Central.mylab.local.

- Open Control Center.

- Click on Configure Database.

- Select SQL Server for Microsoft SQL server.

- Fill in the required information. You only need to fill in the domain if you are using Windows authentication.

- Click on Save Changes.

- You are now asked to Update database.

- After updating, the screen returns to the following one. You have configured the external database. You may need to configure the licensing and Package Signing Certificate as they were stored in an internal PostgreSQL database. Additionally, you may need to force the re-installation of plugins:

How it works...

The Orchestrator database contains the entire configuration, workflows, workflow runs, events, runtime information, actions, and a lot more.

If you want to use your existing co-operation, backup, and restore procedures of your database or a database cluster for more security, an external database is a good idea.

Orchestrator comes with an embedded PostgreSQL database, which is rated for production for small and medium deployments by VMware and can be easily backed up using the Control Center or a cron script on the Linux console of the appliance. However, we still require a shared database for clustering; see the recipe Building an Orchestrator cluster in Chapter 3, Distributed Design.

Using the vCenter Server database for Orchestrator is not really a pretty solution. IT best practices dictate the usage of dedicated resources for production environments.

Sizing

Sizing is hard to predict. Each Workflow run consumes around 4 KB, and most objects (for example, vCenter Server object) require around 50 KB each. VMware recommends 1 GB for a production database. The good thing is that Orchestrator regularly runs clean-up jobs to reduce the database content. Also have a look at the recipe User preferences in Chapter 7, Interacting with Orchestrator, where we discuss certain properties that influence how much information is kept in the database.

Database roles

For the initial setup (and for updates), you should give the dedicated Orchestrator user the db_owner rights of the Orchestrator database.

For normal usage scenarios the Orchestrator user only requires db_dataread and db_datawrite rights.

Exporting and importing a database

If you are using the internal PostgreSQL or an external PostgreSQL database, you can use the Control Center to export as well as import the database content.

The export can include information on the last workflow runs as well as the logs.

See also the recipe Backup and recovery in Chapter 2, Optimizing Orchestrator Configuration.

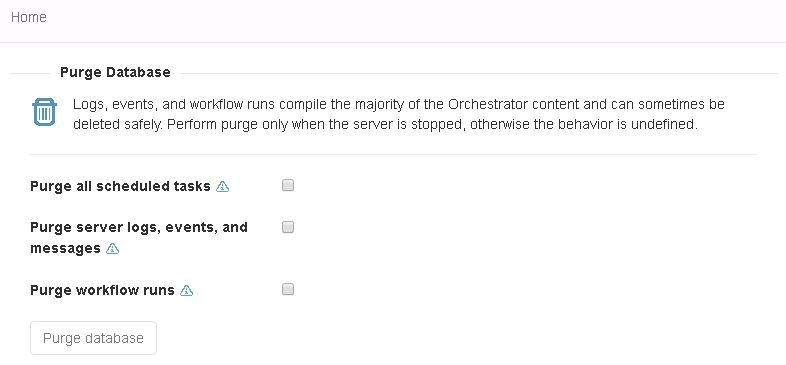

Purging the Database

This sounds much harsher than it is. Purging means getting rid of stuff you may not need anymore and making the database a bit smaller.

Purging the database from time to time isn't such a bad idea, however, you can't be sure whether or not you will throw away stuff you might need. For example, workflow runs and logs can take up a lot of space after some time, but they may also be important. (for example, SOX compliance).

There's more...

Here are some things you might find useful.

Microsoft SQL

Giving the database the settings, ALLOW_SNAPSHOT_ISOLATION and READ_COMMITTED_SNAPSHOT, will reduce the possibility of deadlocks and is also a prerequisite for Orchestrator clusters. This can be done by running the following script on the SQL cluster:

ALTER DATABASE [vRO DB Name] SET ALLOW_SNAPSHOT_ISOLATION ON; GO; ALTER DATABASE [vRO DB Name] SET READ_COMMITTED_SNAPSHOT ON; GO;

Oracle

The database should have NLS_CHARACTER_SET = AL32UTF8 set before you start allowing Orchestrator to build its tables.

To avoid an ORA-01450 error, it is important that you have the database block size configured in correspondence with your database index.

Internal PostgreSQL

To access the local DB (for example, for backups), you need the following information:

|

Database name |

|

|

User |

|

|

Password |

|

The PostgreSQL install is protected to only allow local access to it. You'll find the installation in /var/lib/pgsql.

See also

The recipe Backup and recovery in Chapter 2, Optimizing Orchestrator Configuration.