Kubernetes core concepts

Before diving into the meat and potatoes of Kubernetes, we’ll explain some key concepts in this section to help you start the journey with Kubernetes.

Containerized workloads

A containerized workload means applications running on Kubernetes. Going back to the raw definition of containerization, a container provides an isolated environment for your application, with higher density and better utilization of the underlying infrastructure compared to the applications deployed on the physical server or virtual machines (VMs):

Figure 1.2 – Virtual machine versus containers

The preceding diagram shows the difference between VMs and containers. When compared to VMs, containers are more efficient and easier to manage.

Container images

A container isolates the application with all its dependencies, libraries, binaries, and configuration files. The package of the application, together with its dependencies, libraries, binaries, and configurations, is what we call a container image. Once a container image is built, the content of the image is immutable. All the code changes and dependencies updates will need to build a new image.

Container registry

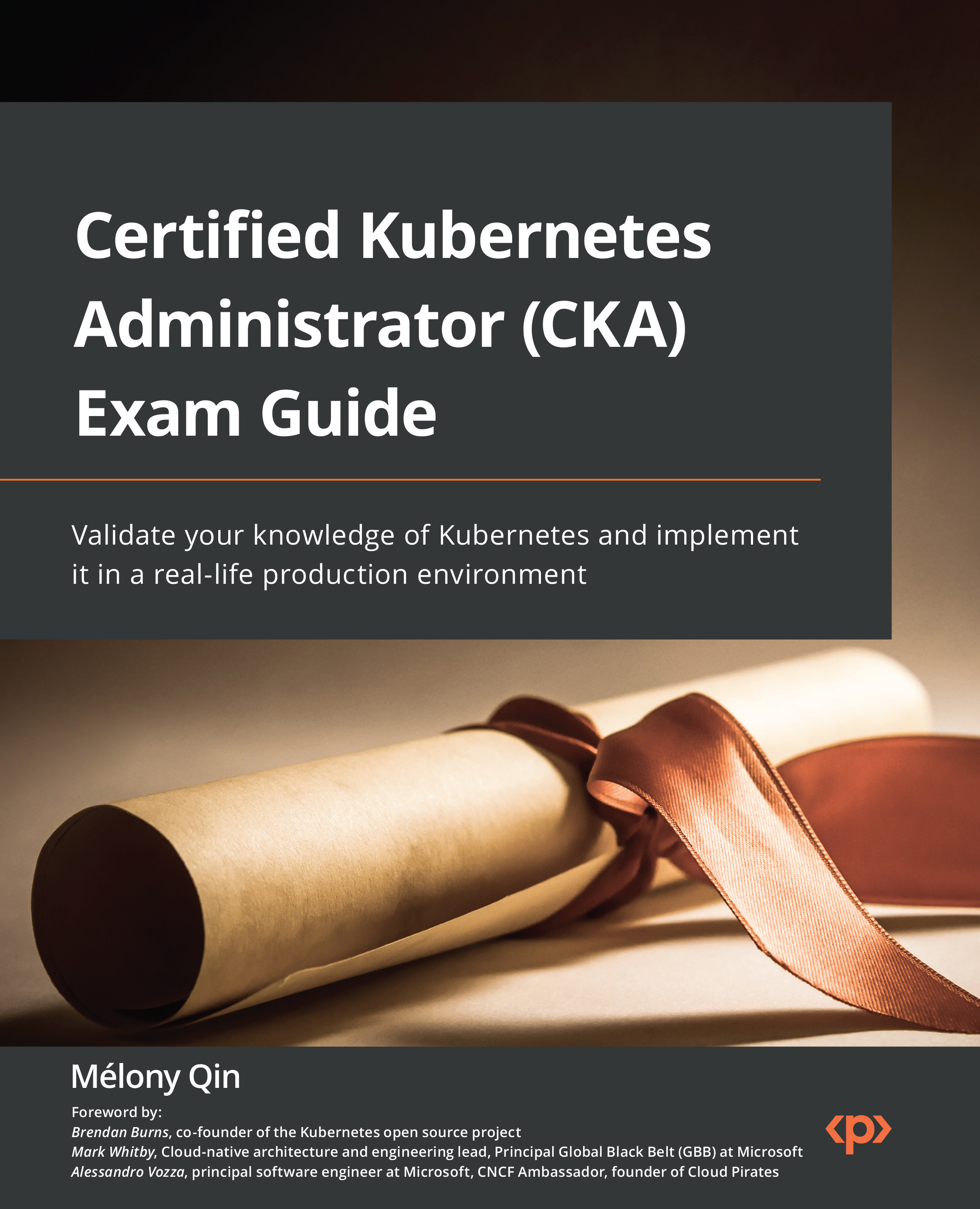

To store the container image, we need a container registry. The container registry is located on your local machine, on-premises, or sometimes in the cloud. You need to authenticate to the container registry to access its content to ensure security. Most public registries, such as DockerHub and quay.io, allow a wide range of non-gated container image distributions across the board:

Figure 1.3 – Container images

The upside of this entire mechanism is that it allows the developers to focus on coding and configuring, which is the core value of their job, without worrying about the underlying infrastructure or managing dependencies and libraries to be installed on the host node, as shown in the preceding diagram.

Container runtimes

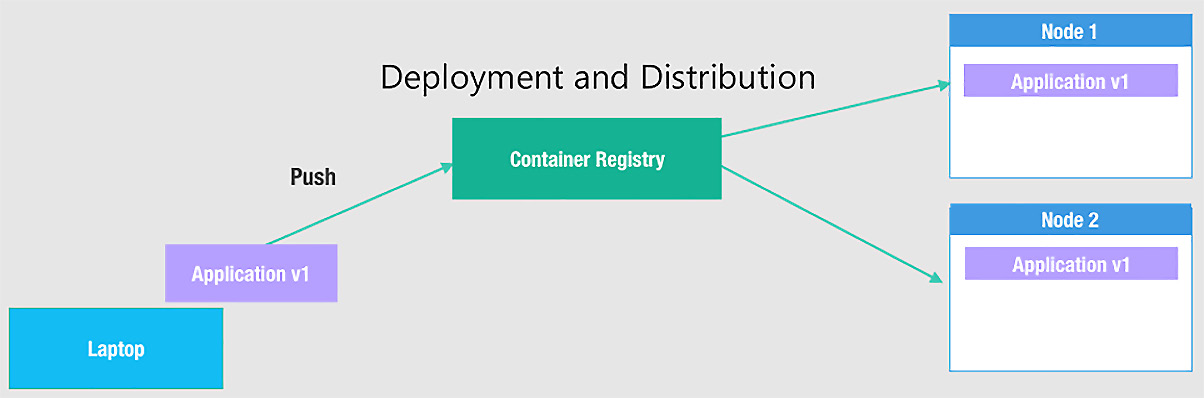

The container runtime is in charge of running containers, which is also known as the container engine. This is a software virtualization layer that runs containers on a host operating system. A container runtime such as Docker can pull container images from a container registry and manage the container life cycle using CLI commands, in this case, Docker CLI commands, as the following diagram describes:

Figure 1.4 – Managing Docker containers

Besides Docker, Kubernetes supports multiple container runtimes, such as containerd and CRI-O. In the context of Kubernetes, the container runtime helps get containers up and running within the Pods on each worker node. We’ll cover how to set up the container runtime in the next chapter as part of preparation work prior to provisioning a Kubernetes cluster.

Important note

Kubernetes runs the containerized workloads by provisioning Pods run on worker nodes. A node could be a physical or a virtual machine, on-premises, or in the cloud.