After years of research and development, AI is a rapidly expanding field. As consumer-level computer hardware becomes more powerful, developers are finding new ways to implement ever more complex forms of AI in all kinds of applications. One such AI concept is Neural Networks, a subset of machine learning that we mentioned in the previous section. Neural Networks enable computers to "learn" and, through repeated training, become more efficient and effective at solving a wide range of problems. A very popular exercise for testing Neural Network machine learning is teaching an AI to recognize handwritten numbers.

In what we call supervised learning, we provide our Neural Network a set of training data. In the handwritten number scenario, we pass in hundreds or thousands of images of handwritten numbers, collected from any source, each labeled with the number it shows. The network makes a prediction for each image, and that prediction is compared against the label. Using a process called back propagation, the network can then adjust itself based on the error to create a more accurate prediction in the next iteration of the learning cycle.
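
The predict, compare, and adjust cycle of supervised learning can be sketched in a few lines of Python. This is a minimal illustration, not a real digit recognizer: the 3x3 bitmaps and their labels are made up, and the update rule is the classic perceptron rule on a single neuron, used here as a simpler stand-in for back propagation.

```python
# Hypothetical 3x3 "handwritten" bitmaps, flattened row by row (1 = ink, 0 = blank).
# Each example is paired with its label: the digit a human says it shows.
TRAINING_DATA = [
    ([1, 1, 1,
      1, 0, 1,
      1, 1, 1], 0),  # clean "0"
    ([1, 1, 1,
      1, 0, 1,
      1, 1, 0], 0),  # "0" with a gap in one corner
    ([0, 1, 1,
      1, 0, 1,
      1, 1, 1], 0),  # "0" with a gap in another corner
    ([0, 1, 0,
      0, 1, 0,
      0, 1, 0], 1),  # clean "1"
    ([0, 1, 0,
      0, 1, 0,
      0, 1, 1], 1),  # "1" with a small foot
    ([1, 1, 0,
      0, 1, 0,
      0, 1, 0], 1),  # "1" with a small flag
]


def predict(weights, bias, pixels):
    """Return 1 if the weighted sum of the pixels is positive, else 0."""
    total = bias + sum(w * p for w, p in zip(weights, pixels))
    return 1 if total > 0 else 0


def train(data, max_epochs=100, learning_rate=1.0):
    """Repeat predict -> compare with label -> adjust until no mistakes remain."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for pixels, label in data:
            error = label - predict(weights, bias, pixels)  # -1, 0, or +1
            if error != 0:
                mistakes += 1
                # Nudge each weight toward the correct answer.
                for i, p in enumerate(pixels):
                    weights[i] += learning_rate * error * p
                bias += learning_rate * error
        if mistakes == 0:
            break  # every training example is now classified correctly
    return weights, bias


weights, bias = train(TRAINING_DATA)
correct = sum(predict(weights, bias, px) == label for px, label in TRAINING_DATA)
print(f"{correct}/{len(TRAINING_DATA)} training examples classified correctly")
```

Real data sets have far more pixels per image and thousands of examples, but the training loop keeps the same shape.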

Believe it or not, the concept of Neural Networks has been around since the 1940s, with the first implementation happening in the early 1950s. The concept is fairly straightforward at a high level—a series of nodes, called neurons, are connected to one another via their axons, or connectors. If these terms sound familiar, it's because they were borrowed from brain cell structures with the same names, and in some ways, similar functions.

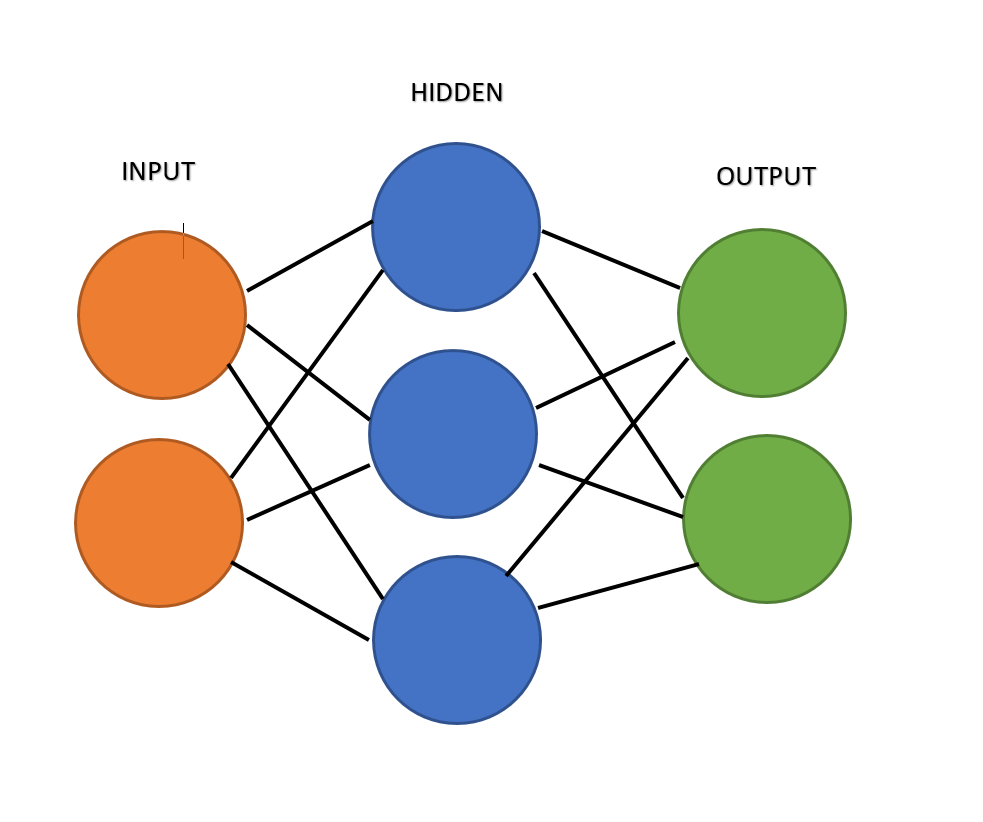

Layers of these networks are connected to one another. Generally, there is an input layer, a hidden layer, and an output layer. This structure is represented by the following diagram:

The input, which represents the data the agent is taking in, such as images, audio, or anything else, is passed through a hidden layer, which converts the data into something the program can use and then sends that data through to the output layer for final processing.
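
To make the flow from input to hidden to output layer concrete, here is a rough sketch of a single forward pass. The layer sizes, weight values, and the choice of a sigmoid activation are all assumptions made for illustration; a real network would learn its weights through training.

```python
import math


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """Compute one layer: each neuron takes a weighted sum of all its inputs."""
    return [
        sigmoid(bias + sum(w * x for w, x in zip(neuron_weights, inputs)))
        for neuron_weights, bias in zip(weights, biases)
    ]


def forward(inputs, hidden_weights, hidden_biases, output_weights, output_biases):
    """Pass data from the input layer through the hidden layer to the output layer."""
    hidden = layer_forward(inputs, hidden_weights, hidden_biases)
    return layer_forward(hidden, output_weights, output_biases)


# Hypothetical values: 3 inputs -> 2 hidden neurons -> 1 output neuron.
inputs = [0.2, 0.9, 0.4]
hidden_weights = [[0.5, -0.3, 0.8], [-0.6, 0.1, 0.4]]  # one row per hidden neuron
hidden_biases = [0.0, 0.1]
output_weights = [[1.2, -0.7]]  # one row per output neuron
output_biases = [0.05]

print(forward(inputs, hidden_weights, hidden_biases, output_weights, output_biases))
```

The output is a single number between 0 and 1, which a program could treat as a confidence score for one possible answer.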

In neural net machine learning, not all input is equal; at least, it shouldn't be. Input is weighted before being passed into the hidden layer. While it's generally okay to start with equal weights, the program can then self-adjust those weights through each iteration using back propagation. Put simply, a weight represents how much a given input contributes to the prediction.
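
The effect of self-adjusting weights can be seen even with a single neuron. In the sketch below, which uses made-up data, the label always equals the first input, while the second input carries no information. Both weights start out equal, and each update applies the chain-rule gradient step that back propagation reduces to when there is only one neuron. The learning rate and epoch count are arbitrary choices.

```python
import math


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


# Hypothetical data: the label always equals the first input;
# the second input tells us nothing about the label.
SAMPLES = [
    ([0.0, 0.0], 0.0),
    ([0.0, 1.0], 0.0),
    ([1.0, 0.0], 1.0),
    ([1.0, 1.0], 1.0),
]


def train(samples, epochs=2000, learning_rate=0.5):
    weights = [0.5, 0.5]  # start with equal weights
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            prediction = sigmoid(bias + sum(w * x for w, x in zip(weights, inputs)))
            # Chain rule for the squared error 0.5 * (prediction - target)^2:
            # error derivative times the sigmoid derivative.
            delta = (prediction - target) * prediction * (1.0 - prediction)
            for i, x in enumerate(inputs):
                weights[i] -= learning_rate * delta * x
            bias -= learning_rate * delta
    return weights, bias


weights, bias = train(SAMPLES)
print(f"weight on the useful input:        {weights[0]:.2f}")
print(f"weight on the uninformative input: {weights[1]:.2f}")
```

After training, the weight on the first input should end up larger than the weight on the second, reflecting how much each input actually helps the prediction.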

After many iterations of training, the AI will be able to tackle brand new data, even data it has never encountered before! While the use of machine learning in games is still limited, the field continues to expand and is a very popular topic these days. Make sure not to miss the train, and check out Machine Learning for Developers by Rodolfo Bonnin for a deep dive into all things related to machine learning.