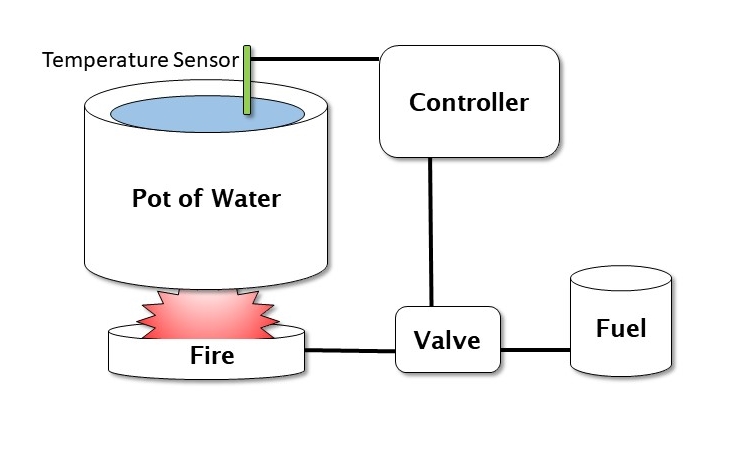

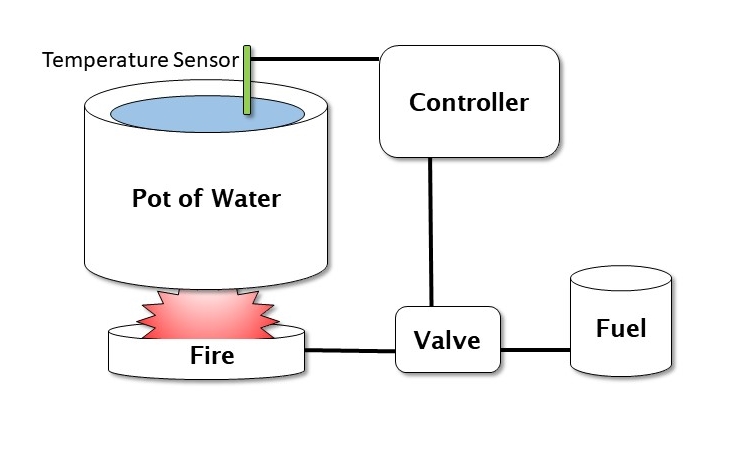

To control our robot, we have to establish some sort of control or feedback loop. Let’s say that we tell the robot to move 12 inches (30 cm) forward. The robot has to send a command to the motors to start moving forward, and then have some mechanism to measure 12 inches of travel. We can use several means to accomplish this, but let’s just use a clock. The robot moves 3 inches (7.5 cm) per second. We need the control loop to start the movement and then, at each update cycle (each time through the loop), check the time to see whether 4 seconds have elapsed. If so, it sends a stop command to the motors. The timer is the control, 4 seconds is the set point, and the motor is the system that is controlled. The process also generates an error signal, the difference between the set point and the elapsed time, that tells us what control to apply (in this case, to stop).

The following diagram shows a simple control loop. What we want is a constant temperature in the pot of water:

The Valve controls the heat produced by the fire, which warms the pot of water. The Temperature Sensor detects if the water is too cold, too hot, or just right. The Controller uses this information to open or close the valve for more or less heat. This type of scheme is called a closed-loop control system.

You can think of this also in terms of a process. We start the process, and then get feedback to show our progress, so that we know when to stop or modify the process. We could be doing speed control, where we need the robot to move at a specific speed, or pointing control, where the robot aims or turns in a specific direction.
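The timed move described earlier (12 inches at 3 inches per second) can be sketched as a short simulation. This is a minimal sketch rather than robot code: `simulate_timed_move` and its parameters are hypothetical names, the clock is simulated by counting update cycles, and the robot is assumed to travel at exactly its nominal speed.

```python
def simulate_timed_move(distance, speed, update_hz):
    """Simulate a clock-based move; return (elapsed_seconds, distance_travelled).

    Hypothetical helper: no real motors are driven, and the clock is
    simulated by counting update cycles.
    """
    set_point = float(distance) / speed    # seconds of travel needed (12 / 3 = 4)
    ticks = 0
    elapsed = 0.0
    moving = True                          # stands in for the "start" motor command
    while moving:
        ticks += 1                         # one update cycle of the control loop
        elapsed = float(ticks) / update_hz
        error = set_point - elapsed        # time still to go
        if error <= 0:
            moving = False                 # stands in for the "stop" motor command
    return elapsed, speed * elapsed


elapsed, travelled = simulate_timed_move(distance=12, speed=3, update_hz=10)
```

Because the loop can only stop on an update cycle, a slower update rate gives a coarser stopping point.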

Let’s look at another example. We have a robot with a self-charging docking station, with a set of light emitting diodes (LEDs) on the top as an optical target. We want the robot to drive straight into the docking station. We use the camera to see the target LEDs on the docking station. The camera generates an error, which is the direction in which the LEDs are seen in the camera. The distance between the LEDs also gives us a rough range to the dock. Let’s say that the LEDs in the image are off to the left of center by 50% and the distance is 3 feet (about 1 m). We send that information through a control loop to the motors: turn right (opposite the image) a bit and drive forward a bit. We then check again, and the LEDs are closer to the center (40%) and the distance is a bit less (2.9 feet or about 90 cm). Our error signal is a bit less, and the distance is a bit less, so we send a slower turn and a slower movement to the motors at this update cycle. We end up exactly in the center and come to zero speed just as we touch the docking station. For those people currently saying "But if you use a PID controller …", yes, you are correct, I’ve just described a "P" or proportional control scheme. We can add more bells and whistles to help prevent the robot from overshooting or undershooting the target due to its own weight and inertia, and to damp out oscillations caused by those overshoots.
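The proportional scheme in the docking example can be sketched as a toy simulation. Everything here is an assumption made for illustration: the function name `simulate_docking`, the gains (picked so that the first cycle reproduces the 50% to 40% and 3 feet to 2.9 feet figures above), and the idealized model in which each command removes exactly that much error in one cycle.

```python
def simulate_docking(offset, range_ft, k_turn=0.2, k_drive=1.0 / 30, steps=100):
    """Toy proportional controller; returns a list of (offset, range_ft) per cycle.

    Assumption: each command removes exactly that much error in one cycle,
    which ignores the robot's weight and inertia.
    """
    history = [(offset, range_ft)]
    for _ in range(steps):
        turn_cmd = k_turn * offset         # bigger error, bigger turn
        drive_cmd = k_drive * range_ft     # farther away, faster drive
        offset -= turn_cmd
        range_ft -= drive_cmd
        history.append((offset, range_ft))
    return history


history = simulate_docking(offset=0.5, range_ft=3.0)
```

In this idealized model both errors shrink by a fixed fraction every cycle, so the commands get smaller and smaller as the robot approaches the dock. A real robot has inertia, which is where the overshoot mentioned above comes from.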

The point of these examples is to illustrate the concept of control in a system. Performing that control at consistent, predictable intervals is the essence of real-time control.

In order to perform our control loop at a consistent time interval (or to use the proper term, deterministically), we have two ways of controlling our program execution: soft real time and hard real time.

A hard real-time system requires that a process execute inside a time window that is enforced by the operating system, which provides deterministic performance – the process always takes exactly the same amount of time.

The problem we are faced with is that a computer running an operating system is constantly getting interrupted by other processes, running threads, switching contexts, and performing tasks. Your experience with desktop computers, or even smart phones, is that the same process, like starting up a word processor program, always seems to take a different amount of time whenever you start it up, because the operating system is interrupting the task to do other things in your computer.

This sort of behavior is intolerable in a real-time system where we need to know in advance exactly how long a process will take down to the microsecond. You can easily imagine the problems if we created an autopilot for an airliner that, instead of managing the aircraft’s direction and altitude, was constantly getting interrupted by disk drive access or network calls that played havoc with the control loops giving you a smooth ride or making a touchdown on the runway.

A real-time operating system (RTOS) allows the programmers and developers to have complete control over when and how the processes are executing, and which routines are allowed to interrupt and for how long. Control loops in RTOS systems always take the exact same number of computer cycles (and thus time) every loop, which makes them reliable and dependable when the output is critical. It is important to know that in a hard real-time system, the hardware is enforcing timing constraints and making sure that the computer resources are available when they are needed.

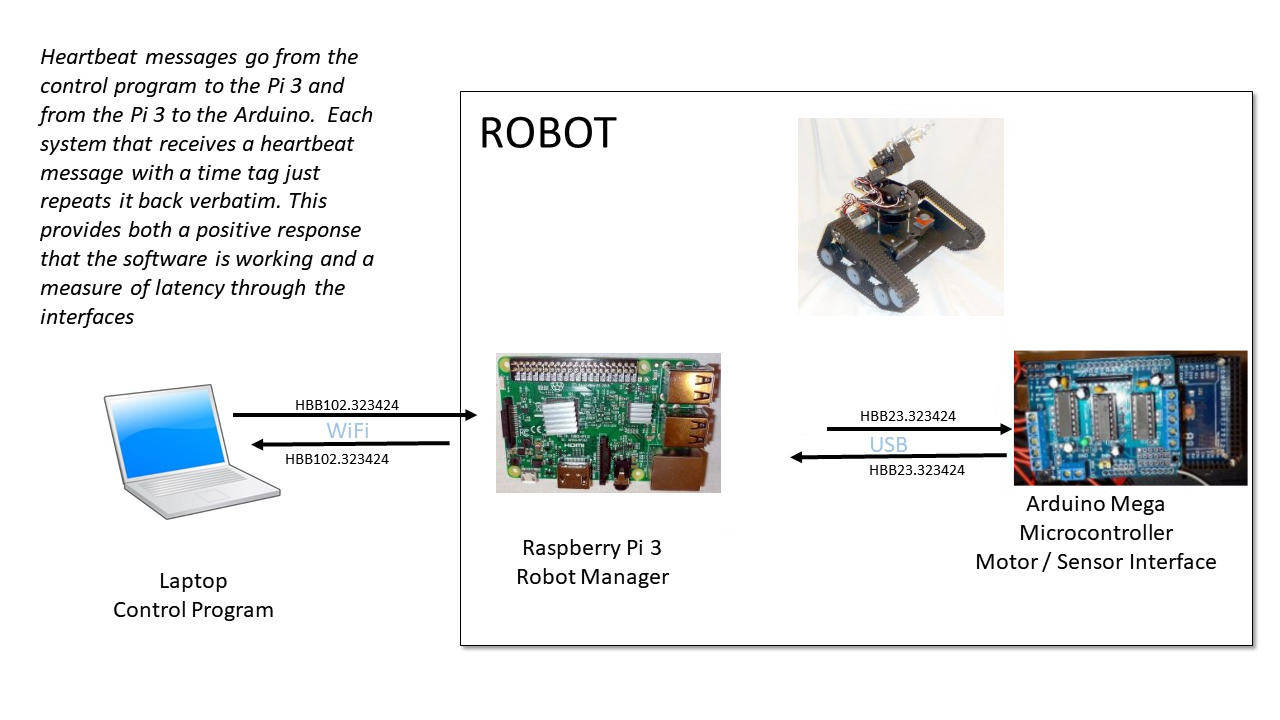

We can actually do hard real time in an Arduino microcontroller, because it has no operating system and can only do one task at a time, or run only one program at a time. We have complete control over the timing of any executing program. Our robot will also have a more capable processor in the form of a Raspberry Pi 3 running Linux. This computer, which has some real power, does quite a number of tasks simultaneously to support the operating system, run the network interface, send graphics to the output HDMI port, provide a user interface, and even support multiple users.

Soft real time is a bit more of a relaxed approach. The software keeps track of task execution, usually in the form of frames, which are set time intervals, like the frames in a movie film. Each frame is a fixed time interval, like 1/20 of a second, and the program divides its tasks into parts it can execute in those frames. Soft real time is more appropriate to our playroom cleaning robot than a safety-critical hard real-time system – plus, RTOSs are expensive and require special training. What we are going to do is treat the timing of our control loop as a feedback system. We will leave extra "room" at the end of each cycle to allow the operating system to do its work, which should leave us with a consistent control loop that executes at a constant time interval. Just like our control loop example, we will make a measurement, determine the error, and apply a correction each cycle.

We are not just worried about our update rate. We also have to worry about "jitter", or random variability in the timing loop caused by the operating system getting interrupted and doing other things. An interrupt will cause our timing loop to take longer, causing a random jump in our cycle time. We have to design our control loops to handle a certain amount of jitter for soft real time, but these are comparatively infrequent events.
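One way to get a feel for jitter on a given machine is to ask for a short sleep several times and record how far each actual sleep overshoots the request. This is a minimal sketch; `measure_sleep_overshoot` is a hypothetical helper name, and the numbers it returns will differ from machine to machine and from run to run.

```python
import time


def measure_sleep_overshoot(requested_s, samples):
    """Return how much longer than requested each sleep took, in milliseconds."""
    overshoots_ms = []
    for _ in range(samples):
        start = time.time()
        time.sleep(requested_s)
        actual = time.time() - start
        overshoots_ms.append((actual - requested_s) * 1000.0)
    return overshoots_ms


# Five sleeps of 10 ms each; the spread of the results is the jitter
overshoots = measure_sleep_overshoot(0.010, 5)
```

Occasional large values in the result are the infrequent operating system interruptions described above.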

The process is actually fairly simple in practice: set the frame rate, do the work for the frame, measure the time, generate the error, sleep for the remaining time, and loop. We start by initializing our timer, which needs to be as high a resolution as we can get. We are writing our control loop in Python, so we will use the time.time() function to measure our internal program timing. Each time we call time.time(), we get a floating point number, which is the number of seconds since the Unix epoch (midnight UTC on January 1, 1970). Subtracting two readings gives the elapsed time between them.

The concept for this process is to divide our processing into a set of fixed time frames. Everything we do will fit within an integral number of frames. Our basic running speed will process 30 frames per second. That is how fast we will be updating the robot’s position estimate, reading sensors, and sending commands to motors. We have other functions that run slower than 30 frames per second, so we can spread them across frames at whole-number intervals. Some functions run at the full rate (30 fps), and are called and executed every frame. Let’s say that we have a sonar sensor that can only update 10 times a second. We call the read sonar function every third frame. We assign all our functions to run at the basic 30 fps frame rate divided by a whole number, so we have 30, 15, 10, 7.5, 6, 5, 4.29, and so on down to 2 and 1 frames per second if we call the functions every frame, every second frame, every third frame, and so on. We can even do less than one frame per second: a function called every 60 frames executes once every 2 seconds.
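The frame-division idea can be sketched with a modulo test on the frame number. The helper names `rate_for_divisor` and `is_due` are hypothetical and are not part of the program developed later in this section.

```python
BASE_RATE = 30  # frames per second, as in the text


def rate_for_divisor(every_n):
    """Effective call rate (per second) for a function run once every `every_n` frames."""
    return float(BASE_RATE) / every_n


def is_due(frame_number, every_n):
    """True when a task scheduled every `every_n` frames should run this frame."""
    return frame_number % every_n == 0


# Count how often a sonar read (every third frame) fires in one second of frames
sonar_reads = sum(1 for frame in range(BASE_RATE) if is_due(frame, 3))

# Rates for calling a function every 1, 2, 3, ... 7 frames
rates = [round(rate_for_divisor(n), 2) for n in (1, 2, 3, 4, 5, 6, 7)]
```

Here `sonar_reads` comes out to 10, matching the sonar's update rate, and `rate_for_divisor(60)` gives 0.5, or one call every 2 seconds.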

The tricky bit is we need to make sure that each process fits into one frame time, which is 1/30 of a second or 0.033 seconds or 33 milliseconds. If the process takes longer than that, we have to either divide it up into parts, or run it in a separate thread or program where we can start the process in one frame and get the result in another. It is also important to try and balance the frames so that not all processing lands in the same frame. The following diagram shows a task scheduling system based on a 30 frames per second basic rate.

We have four tasks to take care of: Task A runs at 15 fps, Task B runs at 6 fps (every five frames), Task C runs at 10 fps (every three frames), and Task D runs at 30 fps, every frame. Our first pass (the top diagram) at the schedule has all four tasks landing on the same frame at frames 1, 13, and 25. We can improve the balance of the load on the control program if we delay the start of Task B on the second frame, as shown in the bottom diagram.

This is very akin to how measures in music work, where a measure is a certain amount of time, and different notes have different intervals – one whole note can only appear once per measure, a half note can appear twice, all the way down to 64th notes. Just as a composer makes sure that each measure has the right number of beats, we can make sure that our control loop has a balanced measure of processes to execute each frame.
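The effect of delaying a task's start can be checked with a small helper that lists which tasks are due in each frame. This is a sketch under stated assumptions: the three tasks below ("fast", "medium", "slow") are hypothetical and are not Tasks A to D from the diagram, and `frame_load` and `peak_load` are illustrative names.

```python
def frame_load(tasks, n_frames):
    """Return, for each frame, the sorted names of the tasks due in that frame.

    `tasks` maps a task name to (period, offset), both counted in frames.
    """
    schedule = []
    for frame in range(n_frames):
        due = [name for name, (period, offset) in sorted(tasks.items())
               if frame >= offset and (frame - offset) % period == 0]
        schedule.append(due)
    return schedule


def peak_load(schedule):
    """Largest number of tasks that land in any single frame."""
    return max(len(due) for due in schedule)


# Hypothetical tasks: every frame, every 2nd frame, every 4th frame
unbalanced = {"fast": (1, 0), "medium": (2, 0), "slow": (4, 0)}
# Same tasks, with the slow one delayed by one frame
balanced = {"fast": (1, 0), "medium": (2, 0), "slow": (4, 1)}

peak_before = peak_load(frame_load(unbalanced, 12))
peak_after = peak_load(frame_load(balanced, 12))
```

With these hypothetical tasks, delaying the slow task by one frame lowers the busiest frame from three tasks to two.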

Let’s start by writing a little program to control our timing loop and to let you play with these principles.

This is exciting; our first bit of coding together. This program just demonstrates the timing control loop we are going to use in the main robot control program, and is here to let you play around with some parameters and see the results. This is the simplest version I think is possible of a soft real-time controlled loop, so feel free to improve and embellish.

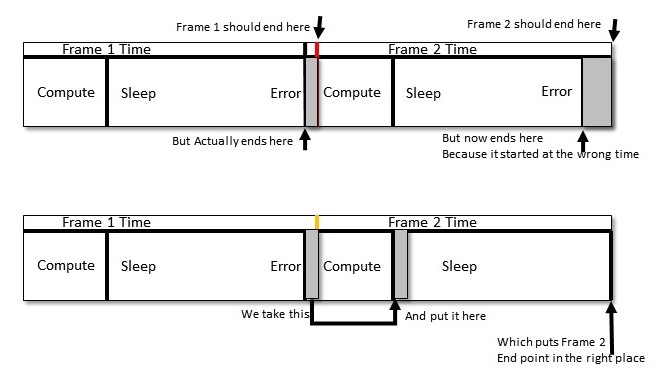

The following diagram shows what we are doing with this program:

Now we begin with coding. This is pretty straightforward Python code; we won't get fancy until later. We start by importing our libraries. It is not surprising that we start with the time module. We also will use the mean function from numpy (Python numerical analysis) and matplotlib to draw our graph at the end. We will also be doing some math calculations to simulate our processing and create a load on the frame rate.

import time

from numpy import mean

import matplotlib.pyplot as plt

import math

#

Now we have some parameters to control our test. This is where you can experiment with different timings. Our basic control is the FRAMERATE – how many updates per second do we want to try? Let’s start with 30, as we did before:

# set our frame rate - how many cycles per second to run our loop?

FRAMERATE = 30

# how long does each frame take in seconds?

FRAME = 1.0/FRAMERATE

# initialize myTimer

# This is one of our timer variables where we will store the clock time from the operating system.

myTimer = 0.0

The duration of the test is set by the counter variable. The time the test will take is the FRAME time times the number of cycles in the counter. In our example, 2000 frames divided by 30 frames per second is about 66.7 seconds, or a bit over a minute to run the test:

# how many cycles to test? counter*FRAME = runtime in seconds

counter = 2000

We will be controlling our timing loop in two ways. We will first measure the amount of time it takes to perform the calculations for this frame. We have a stub of a program with some trig functions we will call to put a load on the computer. Robot control functions, such as computing the angles needed in a robot arm, need lots of trig math to work.

We will measure the time for our control function to run, which will take some part of our frame. We then compute how much of our frame remains, and tell the computer to sleep this process for the rest of the time. Using the sleep function releases the computer to go take care of other business in the operating system, and is a better way to mark time than running a tight loop of some sort to waste the rest of our frame time. The second way we control our loop is by measuring the complete frame (compute time plus rest time) and looking to see if we are over or under our frame time. We use TIME_CORRECTION for this, to trim our sleep time to account for variability in the sleep function and any delays getting back from the operating system:

# factor for our timing loop computations

TIME_CORRECTION= 0.0

# place to store data

We will collect some data to draw a "jitter" graph at the end of the program. We use the dataStore structure for this. Let's put a header on the screen to tell you the program has begun, since it takes a while to finish:

dataStore = []

# Operator information ready to go

# We create a heading to show that the program is starting its test

print "START COUNTING: FRAME TIME", FRAME, "RUN TIME:",FRAME*counter

# initialize the precision clock

In this step, we are going to set up some variables to measure our timing. As we mentioned, the objective is to have a bunch of compute frames, each the same length. Each frame has two parts: a compute part, where we are doing work, and a sleep period, when we are allowing the computer to do other things. myTime is the "top of frame" time, when the frame begins. newTime is the end of the work period timer. We use masterTime to compute the total time the program is running:

myTime = newTime = time.time()

# save the starting time for later

masterTime=myTime

# begin our timing loop

for ii in range(counter):

This section is our "payload", the section of the code doing the work. This might be an arm angle calculation, a state estimate, or a command interpreter. We'll stick in some trig functions and some math to get the CPU to do some work for us. Normally, this "working" section is the majority of our frame, so let's repeat these math terms 1,000 times:

    # we start our frame - this represents doing some detailed math calculations
    # this is just to burn up some CPU cycles
    for jj in range(1000):
        x = 100
        y = 23 + ii
        z = math.cos(x)
        z1 = math.sin(y)
    #
    # read the clock after all compute is done
    # this is our working frame time
    #

Now we read the clock to find the working time. We can now compute how long we need to sleep the process before the next frame. The important part is that working time + sleep time = frame time. I'll call this timeError:

    newTime = time.time()
    # how much time has elapsed so far in this frame
    # time = UNIX clock in seconds
    # so we have to subtract our starting time to get the elapsed time
    myTimer = newTime-myTime
    # what is the time left to go in the frame?
    timeError = FRAME-myTimer

We carry forward some information from the previous frame here. TIME_CORRECTION is our adjustment for any timing errors in the previous frame time. We initialized it earlier to zero before we started our loop so we don't get an undefined variable error here. We also do some range checking because we can get some large "jitters" in our timing caused by the operating system that can cause our sleep timer to crash if we try to sleep a negative amount of time:

We use the Python max function as a quick way to clamp the value of sleep time to be zero or greater. max returns the greater of its two arguments. The alternative is something like if a < 0: a = 0.

    # OK time to sleep
    # the TIME CORRECTION helps account for all of this clock reading
    # this also corrects for sleep timer errors
    # we are using a proportional control to get the system to converge
    # if you leave the divisor out, then the system oscillates out of control
    sleepTime = timeError + (TIME_CORRECTION/1.5)
    # quick way to eliminate any negative numbers
    # which are possible due to jitter
    # and will cause the program to crash
    sleepTime=max(sleepTime,0.0)
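To see what the divisor of 1.5 does, the correction step can be run against a toy model instead of a real clock. The assumptions here are loud ones: `simulate_correction` is a hypothetical helper, the work time is constant, and every sleep is assumed to overshoot by the same fixed `overhead`, which a real operating system will not do.

```python
def simulate_correction(divisor, overhead, frame=1.0 / 30, work=0.005, n_frames=40):
    """Return the simulated frame time (seconds) of each frame.

    Toy model: constant work time, and each sleep runs long by `overhead`.
    """
    correction = 0.0
    measured_frames = []
    for _ in range(n_frames):
        # same formula as the listing: time left in frame plus damped correction
        sleep_time = max((frame - work) + correction / divisor, 0.0)
        measured = work + sleep_time + overhead
        correction = frame - measured
        measured_frames.append(measured)
    return measured_frames


damped = simulate_correction(divisor=1.5, overhead=0.001)
undamped = simulate_correction(divisor=1.0, overhead=0.001)
```

In this toy model, the damped version settles to a steady frame time within a few dozen frames, while the version without the divisor never settles and keeps alternating by the full overhead from one frame to the next.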

So here is our actual sleep command. The sleep command does not always provide a precise time interval, so we will be checking for errors:

    # put this process to sleep
    time.sleep(sleepTime)

This is the time correction section. We figure out how long our frame time was in total (working and sleeping) and subtract it from what we want the frame time to be (FRAME). Then we set our time correction to that value. I'm also going to save the measured frame time, in milliseconds, into a data store so we can graph how we did later, using matplotlib. Easy plotting like this is one of Python's more useful features:

    #print timeError,TIME_CORRECTION
    # set our timer up for the next frame
    time2=time.time()
    measuredFrameTime = time2-myTime
    ##print measuredFrameTime,
    TIME_CORRECTION=FRAME-(measuredFrameTime)
    dataStore.append(measuredFrameTime*1000)
    #TIME_CORRECTION=max(-FRAME,TIME_CORRECTION)
    #print TIME_CORRECTION
    myTime = time.time()

This completes the looping section of the program. This example runs 2,000 frames at 30 frames per second and finishes in about 66.7 seconds. You can experiment with different cycle counts and frame rates.
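Since the loop is presented in pieces, it may help to see the same steps gathered into one function. This is a condensed sketch, not a replacement for the listing: `run_frames`, `work`, and `gain` are hypothetical names, and it reuses a single clock reading as both the end of one frame and the start of the next, where the listing reads the clock twice.

```python
import time


def run_frames(frame_rate, n_frames, work, gain=1.5):
    """Call work(i) once per frame; return each measured frame time in milliseconds."""
    frame = 1.0 / frame_rate
    correction = 0.0
    frame_times_ms = []
    frame_start = time.time()
    for i in range(n_frames):
        work(i)                                   # the "payload" for this frame
        elapsed = time.time() - frame_start       # working time so far
        # time left in the frame, trimmed by the damped correction, never negative
        sleep_time = max((frame - elapsed) + correction / gain, 0.0)
        time.sleep(sleep_time)
        now = time.time()
        measured = now - frame_start              # work plus sleep
        correction = frame - measured             # carried into the next frame
        frame_times_ms.append(measured * 1000.0)
        frame_start = now
    return frame_times_ms
```

Calling `run_frames(30, 2000, my_function)`, where `my_function` is whatever work you want done each frame, would correspond to the settings used in this section.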

Now that we have completed the program, we can make a little report and a graph. We print out the frame time and total runtime, compute the average frame time (total time/counter), and display the average error we encountered, which we can get by averaging the data in the dataStore:

# Timing loop test is over - print the results

#

# get the total time for the program

endTime = time.time() - masterTime

# compute the average frame time by dividing total time by our number of frames

avgTime = endTime / counter

#print report

print "FINISHED COUNTING"

print "REQUESTED FRAME TIME:",FRAME,"AVG FRAME TIME:",avgTime

print "REQUESTED TOTAL TIME:",FRAME*counter,"ACTUAL TOTAL TIME:", endTime

print "AVERAGE ERROR",FRAME-avgTime, "TOTAL_ERROR:",(FRAME*counter) - endTime

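# note: dataStore holds whole measured frame times in milliseconds, so despite the
# labels, the first value is the mean frame time and the second is the mean error

print "AVERAGE SLEEP TIME: ",mean(dataStore),"AVERAGE RUN TIME",(FRAME*1000)-mean(dataStore)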

# loop is over, plot result

# this lets us see the "jitter" in the result

plt.plot(dataStore)

plt.show()
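Alongside the plot, a few summary numbers can describe the jitter. The sketch below uses plain Python so that it runs without numpy; `jitter_report` and its `tolerance_ms` parameter are hypothetical, and the sample values are made up for illustration rather than taken from a real run.

```python
def jitter_report(frame_times_ms, target_ms, tolerance_ms):
    """Summarize a list of measured frame times (all values in milliseconds)."""
    count = len(frame_times_ms)
    return {
        "mean_ms": sum(frame_times_ms) / float(count),
        "min_ms": min(frame_times_ms),
        "max_ms": max(frame_times_ms),
        # frames that ran longer than the target by more than the tolerance
        "late_frames": sum(1 for t in frame_times_ms
                           if t > target_ms + tolerance_ms),
    }


# Made-up sample data: three normal frames and one spike
report = jitter_report([33.0, 33.0, 34.0, 40.0],
                       target_ms=1000.0 / 30, tolerance_ms=1.5)
```

In the real program, the list passed in would be dataStore, which already holds frame times in milliseconds.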

Results from our program are shown in the following output. Note that the average error is just 0.00018 of a second, or 0.18 milliseconds out of a frame of 33 milliseconds:

START COUNTING: FRAME TIME 0.0333333333333 RUN TIME: 66.6666666667

FINISHED COUNTING

REQUESTED FRAME TIME: 0.0333333333333 AVG FRAME TIME: 0.0331549999714

REQUESTED TOTAL TIME: 66.6666666667 ACTUAL TOTAL TIME: 66.3099999428

AVERAGE ERROR 0.000178333361944 TOTAL_ERROR: 0.356666723887

AVERAGE SLEEP TIME: 33.1549999714 AVERAGE RUN TIME 0.178333361944

The following diagram shows the timing graph from our program. The "spikes" in the image are jitter caused by operating system interrupts. You can see the program controls the frame time in a fairly narrow range. If we did not provide control, the frame time would get greater and greater as the program executed. The diagram shows that the frame time stays in a narrow range that keeps returning to the correct value: