Data Preprocessing

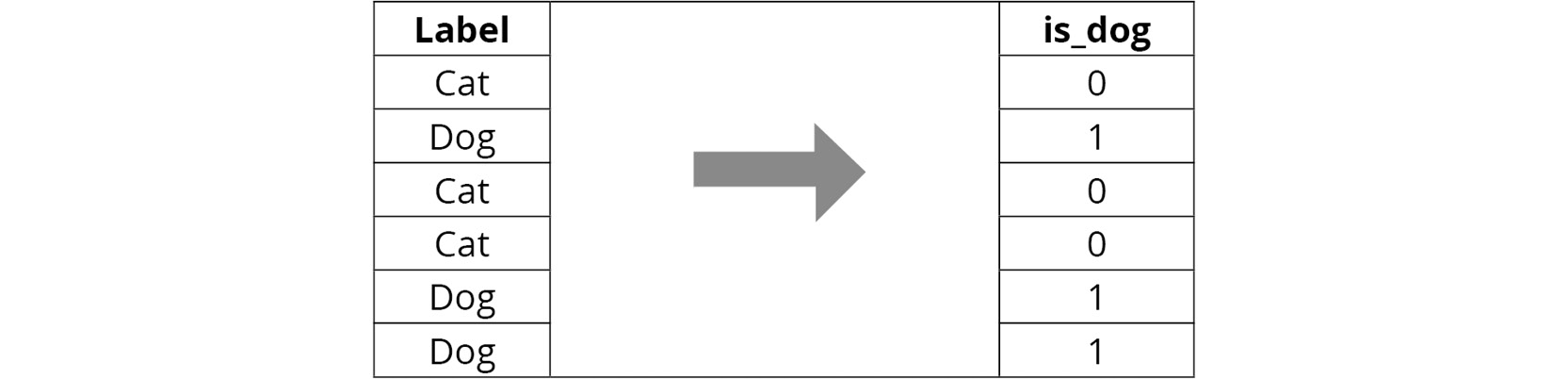

To fit models to the data, it must be represented in numerical format, since the mathematics behind machine learning algorithms operates on matrices of numbers (you cannot perform linear algebra on text labels such as cat or dog). This will be one goal of this section: to learn how to encode all the features into numerical representations. For example, a text column that takes only one of two possible values can be represented with zeros and ones. An example of this can be seen in the following diagram. Since there are only two possible values, 0 is assigned to cat and 1 is assigned to dog.

We can also rename the column for interpretation:

Figure 1.6: A numerical encoding of binary text values

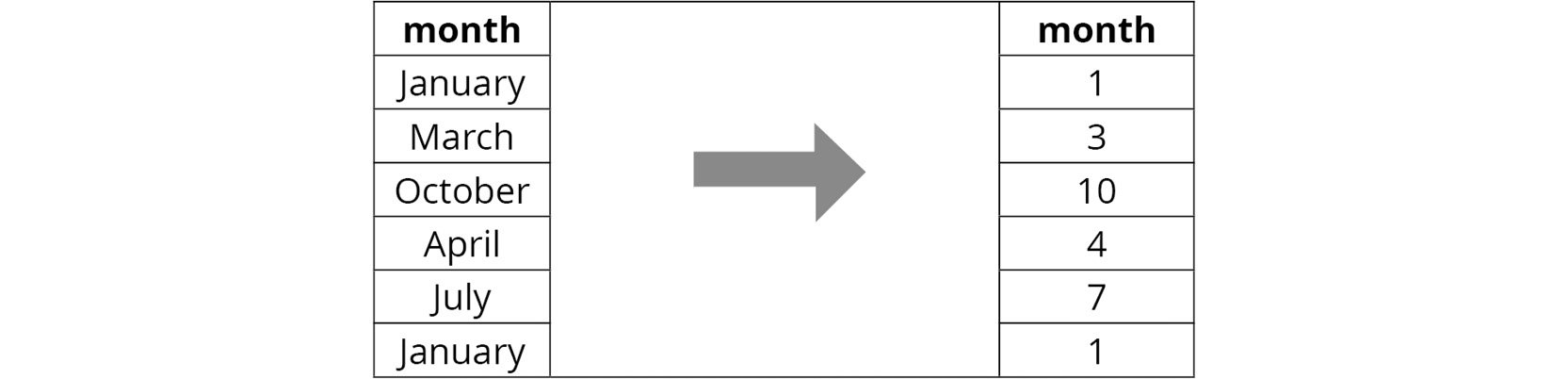
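The binary encoding described above can be sketched in a few lines of pandas. This is a minimal illustration rather than the chapter's own code: the `pets` DataFrame and the `pet` and `is_dog` column names are hypothetical.

```python
import pandas as pd

# Hypothetical toy data; the column name "pet" is for illustration only.
pets = pd.DataFrame({"pet": ["cat", "dog", "dog", "cat"]})

# Map each of the two text labels to 0 or 1 and store the result under
# a name that makes the meaning of the 1 value obvious.
pets["is_dog"] = pets["pet"].map({"cat": 0, "dog": 1})

print(pets)
```

Naming the new column `is_dog` rather than keeping `pet` is one way to make it clear which label the value 1 stands for.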

Another goal will be to represent the data appropriately in numerical format. By appropriately, we mean that relevant information should be encoded numerically through the distribution of numbers. For example, one method to encode the months of the year would be to use the number of the month in the year: January would be encoded as 1, since it is the first month, and December would be 12. Here's an example of how this would look in practice:

Figure 1.7: A numerical encoding of months
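As a rough sketch of the month encoding, the following builds a lookup from month name to month number and applies it to a column. The `visits` DataFrame and its column names are assumptions made for this example, and the month names are written out in full.

```python
import pandas as pd

# Hypothetical toy data for illustration.
visits = pd.DataFrame({"month": ["January", "March", "December"]})

month_names = [
    "January", "February", "March", "April", "May", "June",
    "July", "August", "September", "October", "November", "December",
]

# Build the lookup so that January maps to 1 and December maps to 12.
month_to_number = {name: number for number, name in enumerate(month_names, start=1)}

visits["month_number"] = visits["month"].map(month_to_number)

print(visits)
```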

Not encoding information appropriately into numerical features can lead to machine learning models learning unintuitive representations, as well as relationships between the feature data and target variables that will prove useless for human interpretation.

An understanding of the machine learning algorithms you are looking to use will also help encode features into numerical representations appropriately. For example, algorithms for classification tasks such as Artificial Neural Networks (ANNs) and logistic regression are susceptible to large variations in the scale between the features that may hamper their model-fitting ability.

Take, for example, a regression problem attempting to fit house attributes, such as the area in square feet and the number of bedrooms, to the house price. The bounds of the area may be anywhere from 0 to 5000, whereas the number of bedrooms may only vary from 0 to 6, so there is a large difference between the scale of the variables.

An effective way to combat the large variation in scale between the features is to normalize the data. Normalizing the data will scale the data appropriately so that it is all of a similar magnitude. This ensures that any model coefficients or weights can be compared correctly. Algorithms such as decision trees are unaffected by data scaling, so this step can be omitted for models using tree-based algorithms.
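Normalization can be done in several ways. The sketch below uses min-max scaling, which is one common choice and not necessarily the one used later in this material. The `houses` DataFrame and its values are hypothetical.

```python
import pandas as pd

# Hypothetical house data: area and bedrooms sit on very different scales.
houses = pd.DataFrame({
    "area_sqft": [850, 1200, 2400, 5000],
    "bedrooms": [1, 2, 4, 6],
})

# Min-max scaling: subtract each column's minimum and divide by its range,
# so that every column ends up between 0 and 1.
scaled = (houses - houses.min()) / (houses.max() - houses.min())

print(scaled)
```

After scaling, both columns span the same interval, so neither dominates purely because of its units.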

In this section, we will demonstrate a number of different ways to encode information numerically. There are many alternative techniques that can be explored elsewhere; here, we will show some simple and popular methods that can be used to tackle common data formats.

Exercise 1.02: Cleaning the Data

It is important that we clean the data appropriately so that it can be used for training models. This often includes converting non-numerical datatypes into numerical datatypes. This will be the focus of this exercise - to convert all the columns in the feature dataset into numerical columns. To complete the exercise, perform the following steps:

- First, we load the feature dataset into memory:

      %matplotlib inline
      import pandas as pd
      data = pd.read_csv('../data/OSI_feats.csv')

- Again, look at the first 20 rows to check out the data:

      data.head(20)

The following screenshot shows the output of the preceding code:

Figure 1.8: First 20 rows and 8 columns of the pandas feature DataFrame

Here, we can see that there are a number of columns that need to be converted into the numerical format. The numerical columns we may not need to modify are the columns named Administrative, Administrative_Duration, Informational, Informational_Duration, ProductRelated, ProductRelated_Duration, BounceRates, ExitRates, PageValues, SpecialDay, OperatingSystems, Browser, Region, and TrafficType.

There is also a binary column that has either one of two possible values. This is the column named Weekend.

Finally, there are also categorical columns that are string types, but there are a limited number of choices (>2) that the column can take. These are the columns named Month and VisitorType.

- For the numerical columns, use the describe function to get a quick indication of the bounds of the numerical columns:

      data.describe()

The following screenshot shows the output of the preceding code:

Figure 1.9: Output of the describe function in the feature DataFrame
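If only the bounds are of interest, the rows of the describe output can be selected by label. The following is a small sketch on made-up values; the `sample` DataFrame merely borrows two column names from the dataset.

```python
import pandas as pd

# Hypothetical values; only the column names come from the feature dataset.
sample = pd.DataFrame({
    "BounceRates": [0.0, 0.02, 0.2],
    "PageValues": [0.0, 10.5, 361.7],
})

# describe() returns a DataFrame indexed by statistic name, so the
# minimum and maximum rows can be picked out with .loc.
bounds = sample.describe().loc[["min", "max"]]

print(bounds)
```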

- Convert the binary column, Weekend, into a numerical column. To do so, we will examine the possible values by printing the count of each value and plotting the result, and then convert one of the values into 1 and the other into 0. If appropriate, rename the column for interpretability.

  For context, it is helpful to see the distribution of each value. We can do that using the value_counts function. We can try this out on the Weekend column:

      data['Weekend'].value_counts()

  We can also look at these values as a bar graph by calling the plot method of the resulting Series and passing the kind='bar' argument:

      data['Weekend'].value_counts().plot(kind='bar')

  Note

  The kind='bar' argument will plot the data as a bar graph. The default is a line graph. When plotting in Jupyter notebooks, the following command may need to be run so that the plots are rendered within the notebook: %matplotlib inline.

The following figure shows the output of the preceding code:

Figure 1.10: A plot of the distribution of values of the Weekend column
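To put a number on how uneven such a distribution is, value_counts accepts a normalize argument that returns proportions instead of raw counts. The Series below is a hypothetical stand-in for the Weekend column, not the real data.

```python
import pandas as pd

# Hypothetical stand-in for the Weekend column.
weekend = pd.Series([False, False, False, True], name="Weekend")

# normalize=True divides each count by the total number of rows.
proportions = weekend.value_counts(normalize=True)

print(proportions)
```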

- Here, we can see that this distribution is skewed toward False values. This column represents whether the visit to the website occurred on a weekend, corresponding to a True value, or a weekday, corresponding to a False value. Since there are more weekdays than weekend days, this skewed distribution makes sense. Convert the column into a numerical value by converting the True values into 1 and the False values into 0. We can also change the name of the column from Weekend to is_weekend. This makes it a bit more obvious what the column means:

      data['is_weekend'] = data['Weekend'].apply(lambda row: 1 if row == True else 0)

  Note

  The apply function iterates through each element in the column and applies the function provided as the argument. A function has to be supplied as the argument. Here, a lambda function is supplied.

- Take a look at the original and converted columns side by side. Take a sample of the last few rows to see examples of both values being manipulated so that they're numerical data types:

      data[['Weekend','is_weekend']].tail()

  Note

  The tail function works like the head function, except that it returns the bottom n rows of the DataFrame instead of the top n.

The following figure shows the output of the preceding code:

Figure 1.11: The original and manipulated column

Here, we can see that True is converted into 1 and False is converted into 0.

- Now we can drop the Weekend column, as only the is_weekend column is needed:

      data.drop('Weekend', axis=1, inplace=True)

- Next, we have to deal with categorical columns. We will approach the conversion of categorical columns into numerical values slightly differently than with binary text columns, but the concept will be the same. Convert each categorical column into a set of dummy columns. With dummy columns, each categorical column will be converted into n columns, where n is the number of unique values in the category. The columns will be 0 or 1, depending on the value of the categorical column.

  This is achieved with the get_dummies function. If we need any help understanding this function, we can call the help function on it, as we can with any function:

      help(pd.get_dummies)

The following figure shows the output of the preceding code:

Figure 1.12: The output of the help command being applied to the pd.get_dummies function

- Let's demonstrate how to manipulate categorical columns with the VisitorType column. Again, it is helpful to see the distribution of values, so look at the value counts and plot them:

      data['VisitorType'].value_counts()
      data['VisitorType'].value_counts().plot(kind='bar')

The following figure shows the output of the preceding code:

Figure 1.13: A plot of the distribution of values of the VisitorType column

- Call the get_dummies function on the VisitorType column and take a look at the rows alongside the original:

      colname = 'VisitorType'
      visitor_type_dummies = pd.get_dummies(data[colname], prefix=colname)
      pd.concat([data[colname], visitor_type_dummies], axis=1).tail(n=10)

The following figure shows the output of the preceding code:

Figure 1.14: Dummy columns from the VisitorType column

Here, we can see that each row contains exactly one value of 1, which is in the column corresponding to the value in the VisitorType column.

In fact, when using dummy columns, there is some redundant information. Because we know there are three values, if two of the values in the dummy columns are 0 for a particular row, then the remaining column must be equal to 1. It is important to eliminate any redundancy and correlations in features, as they make it difficult to determine which feature is the most important in minimizing the total error.

- To remove the interdependency, drop the VisitorType_Other column because it occurs with the lowest frequency:

      visitor_type_dummies.drop('VisitorType_Other', axis=1, inplace=True)
      visitor_type_dummies.head()

  Note

  In the drop function, the inplace argument will apply the function in place, so a new variable does not have to be declared.

  Looking at the first few rows, we can see what remains of our dummy columns for the original VisitorType column:

Figure 1.15: Final dummy columns from the VisitorType column
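The redundancy argument can be checked directly: once one dummy column is dropped, it can still be reconstructed from the ones that remain. The following is a small sketch on hypothetical rows. It passes dtype=int to get_dummies so that the dummy columns hold 0 and 1 regardless of the pandas version, which is a choice made for this example and not part of the exercise code.

```python
import pandas as pd

# Hypothetical rows using the three VisitorType values.
visitor_type = pd.Series(
    ["Returning_Visitor", "New_Visitor", "Other", "Returning_Visitor"],
    name="VisitorType",
)

dummies = pd.get_dummies(visitor_type, prefix="VisitorType", dtype=int)

# Every row has exactly one dummy column set to 1.
row_sums = dummies.sum(axis=1)

# After dropping one column, its values are still implied by the rest:
# it is 1 exactly when all of the remaining columns are 0.
reduced = dummies.drop("VisitorType_Other", axis=1)
recovered_other = 1 - reduced.sum(axis=1)

print(recovered_other.equals(dummies["VisitorType_Other"]))
```

Because the dropped column carries no extra information, removing it loses nothing while avoiding perfectly correlated features.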

- Finally, add these dummy columns to the original feature data by concatenating the two DataFrames column-wise and dropping the original column:

      data = pd.concat([data, visitor_type_dummies], axis=1)
      data.drop('VisitorType', axis=1, inplace=True)

- Repeat the exact same steps with the remaining categorical column, Month. First, examine the distribution of column values, which is an optional step. Second, create dummy columns. Third, drop one of the columns to remove redundancy. Fourth, concatenate the dummy columns into the feature dataset. Finally, drop the original column if it remains in the dataset. You can do this using the following code:

      colname = 'Month'
      month_dummies = pd.get_dummies(data[colname], prefix=colname)
      month_dummies.drop(colname+'_Feb', axis=1, inplace=True)
      data = pd.concat([data, month_dummies], axis=1)
      data.drop('Month', axis=1, inplace=True)

- Now, we should have our entire dataset as numerical columns. Check the types of each column to verify this:

      data.dtypes

The following figure shows the output of the preceding code:

Figure 1.16: The datatypes of the processed feature dataset
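Instead of reading through the dtypes output by eye, the check can also be done programmatically. The sketch below runs on a hypothetical four-column frame in which one string column has deliberately been left unconverted. Boolean columns are treated as acceptable here because, depending on the pandas version, get_dummies may return Boolean columns instead of integer ones.

```python
import pandas as pd

# Hypothetical frame: three numerical or Boolean columns and one string column.
frame = pd.DataFrame({
    "BounceRates": [0.2, 0.0],
    "is_weekend": [0, 1],
    "Month_Mar": [True, False],
    "VisitorType": ["Returning_Visitor", "New_Visitor"],
})

# Keep only the columns that are neither numerical nor Boolean.
non_numeric = frame.select_dtypes(exclude=["number", "bool"]).columns.tolist()

print(non_numeric)
```

An empty list would indicate that every column is ready for model fitting.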

- Now that we have verified the datatypes, we have a dataset we can use to train a model, so let's save this for later:

      data.to_csv('../data/OSI_feats_e2.csv', index=False)

- Let's do the same for the target variable. First, load the data in, convert the column into a numerical datatype, and save the column as a CSV file:

      target = pd.read_csv('../data/OSI_target.csv')
      target.head(n=10)

The following figure shows the output of the preceding code:

Figure 1.17: First 10 rows of the target dataset

Here, we can see that this is a Boolean datatype and that there are two unique values.

- Convert this into a binary numerical column, much like we did with the binary columns in the feature dataset:

      target['Revenue'] = target['Revenue'].apply(lambda row: 1 if row==True else 0)
      target.head(n=10)

The following figure shows the output of the preceding code:

Figure 1.18: First 10 rows of the target dataset when converted into integers
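The apply-based conversion used for the Weekend and Revenue columns can also be written as a vectorized cast. The following sketch compares the two on a hypothetical Boolean column. It is offered as an alternative, not as a replacement for the exercise code, and it assumes the column really has a Boolean dtype.

```python
import pandas as pd

# Hypothetical Boolean column standing in for Revenue.
sample = pd.DataFrame({"Revenue": [True, False, False, True]})

# Element-by-element conversion, as in the exercise.
via_apply = sample["Revenue"].apply(lambda value: 1 if value == True else 0)

# Vectorized alternative: casting a Boolean column to int maps True to 1
# and False to 0 in a single call.
via_astype = sample["Revenue"].astype(int)

print(via_apply.tolist() == via_astype.tolist())
```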

- Finally, save the target dataset to a CSV file:

      target.to_csv('../data/OSI_target_e2.csv', index=False)

In this exercise, we learned how to clean the data appropriately so that it can be used to train models. We converted all the non-numerical columns in the feature dataset into numerical columns, did the same for the target variable, and saved both datasets as CSV files so that we can use them in the following exercises and activities.

Note

To access the source code for this specific section, please refer to https://packt.live/2YW1DVi.

You can also run this example online at https://packt.live/2BpO4EI.

Appropriate Representation of the Data

In our online shoppers purchase intention dataset, we have some columns that are defined as numerical variables when, upon closer inspection, they are actually categorical variables that have been given numerical labels. These columns are OperatingSystems, Browser, TrafficType, and Region. So far, we have treated them as numerical variables. Because they are actually categorical, this should be reflected in how the features are encoded if we want the models we build to learn the correct relationships between the features and the target.

We do this because, otherwise, we may be encoding some misleading relationships in the features. For example, if the value of the OperatingSystems field is equal to 2, does that mean it is worth twice as much as a field with the value 1? Probably not, since the number merely identifies an operating system. For this reason, we will convert the field into a categorical variable. The same applies to the Browser, TrafficType, and Region columns.
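A short sketch may help make the point concrete. Turning the numerical labels into dummy columns gives each label its own indicator, so no ordering or magnitude between labels is implied. The `os_codes` Series is hypothetical, and dtype=int is passed only so that the result holds 0 and 1 on any pandas version.

```python
import pandas as pd

# Hypothetical operating system labels; the numbers are identifiers, not quantities.
os_codes = pd.Series([1, 2, 2, 3], name="OperatingSystems")

# One indicator column per label: label 2 is no longer "twice" label 1.
os_dummies = pd.get_dummies(os_codes, prefix="OperatingSystems", dtype=int)

print(os_dummies)
```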

Exercise 1.03: Appropriate Representation of the Data

In this exercise, we will convert the OperatingSystems, Browser, TrafficType, and Region columns into categorical types to accurately reflect the information. To do this, we will create dummy variables from the column in a similar manner to what we did in Exercise 1.02, Cleaning the Data. To do so, perform the following steps:

- Open a Jupyter Notebook.

- Load the dataset into memory. We can use the same feature dataset that was the output from Exercise 1.02, Cleaning the Data, which contains the original numerical versions of the OperatingSystems, Browser, TrafficType, and Region columns:

      import pandas as pd
      data = pd.read_csv('../data/OSI_feats_e2.csv')

- Look at the distribution of values in the OperatingSystems column:

      data['OperatingSystems'].value_counts()

The following figure shows the output of the preceding code:

Figure 1.19: The distribution of values in the OperatingSystems column

- Create dummy variables from the OperatingSystems column:

      colname = 'OperatingSystems'
      operation_system_dummies = pd.get_dummies(data[colname], prefix=colname)

- Drop the dummy variable representing the value with the lowest occurring frequency and join back with the original data:

      operation_system_dummies.drop(colname+'_5', axis=1, inplace=True)
      data = pd.concat([data, operation_system_dummies], axis=1)

- Repeat this for the Browser column:

      data['Browser'].value_counts()

The following figure shows the output of the preceding code:

Figure 1.20: The distribution of values in the Browser column

- Create dummy variables, drop the dummy variable with the lowest occurring frequency, and join back with the original data:

      colname = 'Browser'
      browser_dummies = pd.get_dummies(data[colname], prefix=colname)
      browser_dummies.drop(colname+'_9', axis=1, inplace=True)
      data = pd.concat([data, browser_dummies], axis=1)

- Repeat this for the TrafficType and Region columns:

  Note

  The # symbol in the code snippet below denotes a code comment. Comments are added into code to help explain specific bits of logic.

      colname = 'TrafficType'
      data[colname].value_counts()
      traffic_dummies = pd.get_dummies(data[colname], prefix=colname)
      # value 17 occurs with lowest frequency
      traffic_dummies.drop(colname+'_17', axis=1, inplace=True)
      data = pd.concat([data, traffic_dummies], axis=1)

      colname = 'Region'
      data[colname].value_counts()
      region_dummies = pd.get_dummies(data[colname], prefix=colname)
      # value 5 occurs with lowest frequency
      region_dummies.drop(colname+'_5', axis=1, inplace=True)
      data = pd.concat([data, region_dummies], axis=1)

- Check the column types to verify they are all numerical:

      data.dtypes

The following figure shows the output of the preceding code:

Figure 1.21: The datatypes of the processed feature dataset

- Finally, save the dataset to a CSV file for later use:

      data.to_csv('../data/OSI_feats_e3.csv', index=False)

Now, we can accurately test whether the browser type, operating system, traffic type, or region will affect the target variable. This exercise has demonstrated how to appropriately represent data for use in machine learning algorithms. We have presented some techniques that we can use to convert data into numerical datatypes that cover many situations that may be encountered when working with tabular data.
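Since the same three steps were repeated for each column, they could be wrapped in a small helper. The function below is a hypothetical sketch, not part of the exercise: the name `add_dummies_drop_rarest` is made up, the least frequent value is looked up with value_counts rather than typed in by hand, and dtype=int is passed so that the dummy columns hold 0 and 1. Like the exercise code, it leaves the original column in place.

```python
import pandas as pd


def add_dummies_drop_rarest(frame, colname):
    """Return frame with dummy columns for colname appended,
    omitting the dummy for the least frequent value."""
    # idxmin returns the label with the smallest count; if several values
    # tie for the lowest frequency, only one of them is picked.
    rarest_value = frame[colname].value_counts().idxmin()
    dummies = pd.get_dummies(frame[colname], prefix=colname, dtype=int)
    dummies = dummies.drop(f"{colname}_{rarest_value}", axis=1)
    return pd.concat([frame, dummies], axis=1)


# Hypothetical toy data using two of the column names from the exercise.
toy = pd.DataFrame({"Browser": [1, 2, 2, 1, 9], "Region": [1, 1, 3, 3, 3]})

for name in ["Browser", "Region"]:
    toy = add_dummies_drop_rarest(toy, name)

print(toy.columns.tolist())
```

Looking up the rarest value in code avoids hard-coding labels such as 9 or 17, which would need to be revisited if the data changed.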

Note

To access the source code for this specific section, please refer to https://packt.live/3dXOTBy.

You can also run this example online at https://packt.live/3iBvDxw.