Concurrency and parallelism are often confused. After all, they sound quite similar: two pieces of code running at the same time. In this section, we will draw a clear line between the two.

Let's start by going back to our non-concurrent example from the first section:

```kotlin
fun getProfile(id: Int): Profile {
    val basicUserInfo = getUserInfo(id)
    val contactInfo = getContactInfo(id)
    return createProfile(basicUserInfo, contactInfo)
}
```

If we go back to the timeline for this implementation of getProfile(), we will see that the timelines of getUserInfo() and getContactInfo() don't overlap: the execution of getContactInfo() always starts after getUserInfo() has finished.

Let's now look again at the concurrent implementation:

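```kotlin
suspend fun getProfile(id: Int): Profile {
    // Start both requests before waiting for either result
    val basicUserInfo = asyncGetUserInfo(id)
    val contactInfo = asyncGetContactInfo(id)
    // Wait for both results, then build and return the profile
    return createProfile(basicUserInfo.await(), contactInfo.await())
}
```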

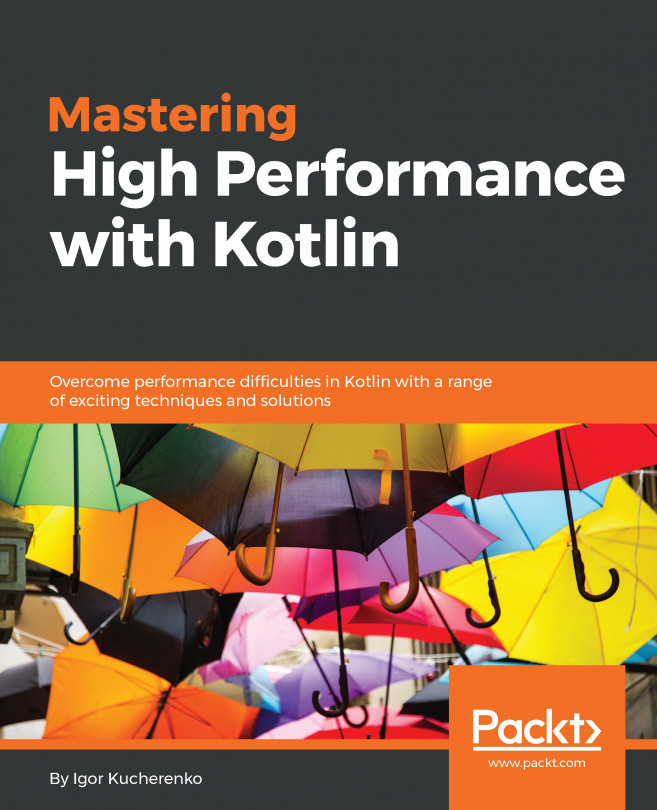

A timeline for the concurrent execution of this code would be something like the following diagram. Notice how the execution of asyncGetUserInfo() and asyncGetContactInfo() overlaps, whereas createProfile() doesn't overlap with either of them:

A timeline for the parallel execution would look exactly the same as the one above. The concurrent and parallel timelines look the same because this timeline is not granular enough to reflect what is happening at a lower level.
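To make the idea of overlapping timelines concrete, here is a minimal sketch that records when two tasks start and finish, and then checks whether the two spans overlap. The names Interval, overlaps(), timed(), sequentialTimeline(), and concurrentTimeline() are hypothetical helpers introduced only for this illustration, and Thread.sleep() stands in for the real work of getUserInfo() and getContactInfo(). It uses plain threads rather than coroutines so that it depends only on the standard library.

```kotlin
import java.util.concurrent.CountDownLatch
import kotlin.concurrent.thread

// The span of one task on the timeline, measured with System.nanoTime().
data class Interval(val start: Long, val end: Long)

// Two spans overlap when each of them starts before the other one ends.
fun overlaps(a: Interval, b: Interval): Boolean =
    a.start < b.end && b.start < a.end

// Runs the given work and records when it started and when it finished.
fun timed(work: () -> Unit): Interval {
    val start = System.nanoTime()
    work()
    return Interval(start, System.nanoTime())
}

// Hypothetical stand-in for the sequential getProfile(): one task after the other.
fun sequentialTimeline(): Pair<Interval, Interval> {
    val userInfo = timed { Thread.sleep(20) }
    val contactInfo = timed { Thread.sleep(20) }
    return userInfo to contactInfo
}

// Hypothetical stand-in for the concurrent getProfile(): one thread per task.
fun concurrentTimeline(): Pair<Interval, Interval> {
    val bothStarted = CountDownLatch(2)
    val spans = arrayOfNulls<Interval>(2)
    val workers = List(2) { index ->
        thread {
            spans[index] = timed {
                bothStarted.countDown()
                bothStarted.await() // neither task continues until both have started
                Thread.sleep(20)
            }
        }
    }
    workers.forEach { it.join() } // join() also makes the recorded spans visible here
    return spans[0]!! to spans[1]!!
}

fun main() {
    val (userInfo, contactInfo) = sequentialTimeline()
    println("Sequential spans overlap: ${overlaps(userInfo, contactInfo)}")

    val (first, second) = concurrentTimeline()
    println("Concurrent spans overlap: ${overlaps(first, second)}")
}
```

Notice that the overlap check only looks at start and end times. It reports an overlap for the threaded version whether the machine has one core or many, so by itself it cannot tell concurrent execution from parallel execution, just like the timeline.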

The difference is that concurrency happens when the timelines of different sets of instructions in the same process overlap, regardless of whether they are being executed at the exact same point in time or not. The best way to think of this is by picturing the code of getProfile() being executed in a machine with only one core. Because a single core cannot execute both threads at the same time, it would interleave the instructions of asyncGetUserInfo() and asyncGetContactInfo(), making their timelines overlap, but they would not be executing simultaneously.
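The interleaving described above can be simulated on a single thread. The following is only a sketch under stated assumptions: task() and interleave() are hypothetical names, each task is modeled as a sequence of small steps using the standard library's iterator builder, and a simple round-robin loop plays the role of the single core. A real operating system scheduler decides when to switch between threads by itself, so the switch points here are purely illustrative.

```kotlin
// Hypothetical task made of several small steps. Each yield marks a point
// where the single "core" may switch to the other task.
fun task(name: String, steps: Int): Iterator<String> = iterator {
    for (step in 1..steps) {
        yield("$name step $step")
    }
}

// Round-robin scheduler: a single thread takes one step from each
// unfinished task in turn and records the order of execution.
fun interleave(tasks: List<Iterator<String>>): List<String> {
    val timeline = mutableListOf<String>()
    val pending = tasks.toMutableList()
    while (pending.isNotEmpty()) {
        val current = pending.removeAt(0)
        if (current.hasNext()) {
            timeline += current.next()
            pending += current // not finished yet, so it goes to the back of the queue
        }
    }
    return timeline
}

fun main() {
    // Prints X step 1, Y step 1, X step 2, Y step 2, X step 3, Y step 3
    interleave(listOf(task("X", 3), task("Y", 3))).forEach(::println)
}
```

Both tasks are in progress during the same period, so their timelines overlap, yet at any given moment only one step of one task is being executed.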

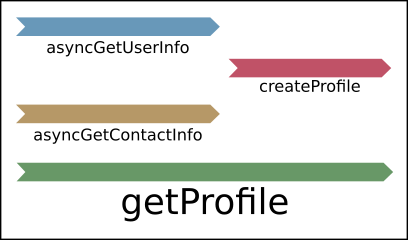

The following diagram is a representation of concurrency when it happens in a single core – it's concurrent but not parallel. A single processing unit will interleave between threads X and Y, and while both of their overall timelines overlap, at a given point in time only one of them is being executed:

Parallel execution, on the other hand, can only happen if both sets of instructions are being executed at the exact same point in time. For example, picture getProfile() being executed in a computer with two cores: one core executes the instructions of asyncGetUserInfo() while a second core executes those of asyncGetContactInfo().
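As a rough sketch of what this looks like in code, the example below submits two independent tasks to a pool with two threads. The functions sumUpTo() and runOnTwoThreads() are hypothetical and stand in for any CPU-bound work. Whether the two tasks actually run at the same instant is decided by the operating system's scheduler and by how many cores are available, so the code can only make parallelism possible, not guarantee it.

```kotlin
import java.util.concurrent.Callable
import java.util.concurrent.Executors

// Hypothetical CPU-bound stand-in for the work done by each thread.
fun sumUpTo(limit: Long): Long {
    var total = 0L
    for (i in 1..limit) total += i
    return total
}

// Submits two independent tasks to a pool with two threads and waits for both.
// With two or more cores the tasks may run in parallel; with a single core
// the same code still works, but the threads are interleaved instead.
fun runOnTwoThreads(limit: Long): List<Long> {
    val pool = Executors.newFixedThreadPool(2)
    try {
        val tasks = List(2) { Callable { sumUpTo(limit) } }
        return pool.invokeAll(tasks).map { it.get() }
    } finally {
        pool.shutdown()
    }
}

fun main() {
    val cores = Runtime.getRuntime().availableProcessors()
    println("Cores available to the JVM: $cores")
    println("Parallel execution is possible: ${cores >= 2}")
    println("Results: ${runOnTwoThreads(1_000_000L)}")
}
```

The code is identical in both cases. What changes is how the hardware executes it, which is why the same concurrent program can be parallel on one machine and not on another.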

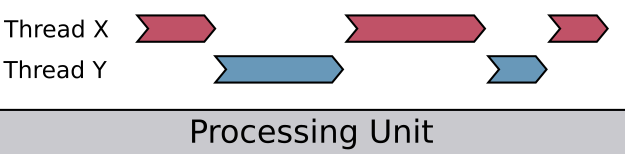

The following diagram is a representation of concurrent code being executed in parallel, using two processing units, each of them executing a thread independently. In this case, not only the timelines of thread X and Y are overlapping, but they are indeed being executed at the exact same point in time:

Here is a summarized explanation:

- Concurrency happens when the timelines of two or more algorithms overlap. For this overlap to happen, it's necessary to have two or more active threads of execution. If those threads are executed in a single core, they are not executed in parallel, but they are executed concurrently nonetheless, because that single core will interleave the instructions of the different threads, effectively overlapping their execution.

- Parallelism happens when two algorithms are being executed at the exact same point in time. For this to be possible, there must be two or more cores and two or more threads, so that each core can execute the instructions of a different thread at the same time. Notice that parallelism implies concurrency, but concurrency can exist without parallelism.

As we will see in the next section, this difference is not just a technicality, and understanding it, along with understanding your code, will help you to write code that performs well.