Installing Jupyter

Jupyter provides a convenient way to interact with your Spark environment. In this recipe, we will guide you through installing Jupyter on your local machine.

Getting ready

We require a working installation of Spark. This means that you will have followed the steps outlined in the first recipe and in either the second or the third. A working Python environment is also required.

No other prerequisites are required.

How to do it...

If you do not have pip installed on your machine, you will need to install it before proceeding.

- To do this, open your Terminal and type (on macOS):

curl -O https://bootstrap.pypa.io/get-pip.py

Or the following on Linux:

wget https://bootstrap.pypa.io/get-pip.py

- Next, type (applies to both operating systems):

python get-pip.py

This will install pip on your machine.

- All you have to do now is install Jupyter with the following command:

pip install jupyter
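To confirm that both steps worked, you can query pip and Jupyter from Python. This is a minimal sketch, not part of the recipe: `pip_version` and `jupyter_version` are hypothetical helper names, and the Jupyter check assumes the `jupyter` command ended up on your PATH (pip sometimes installs scripts into a directory that is not on PATH).

```python
import shutil
import subprocess
import sys
from typing import Optional


def pip_version() -> Optional[str]:
    """Return pip's version banner for this interpreter, or None if pip is missing."""
    result = subprocess.run(
        [sys.executable, "-m", "pip", "--version"],
        capture_output=True,
        text=True,
    )
    return result.stdout.strip() if result.returncode == 0 else None


def jupyter_version() -> Optional[str]:
    """Return the output of `jupyter --version`, or None if `jupyter` is not on PATH."""
    executable = shutil.which("jupyter")
    if executable is None:
        return None
    result = subprocess.run([executable, "--version"], capture_output=True, text=True)
    return result.stdout.strip() if result.returncode == 0 else None


print("pip:", pip_version() or "not found")
print("jupyter:", jupyter_version() or "not found on PATH")
```

Running pip as `python -m pip` ties the check to the same interpreter that will later run your notebooks, which avoids confusion when several Python installations coexist.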

How it works...

pip is a package management tool for installing Python packages from PyPI, the Python Package Index. PyPI hosts a wide range of Python packages and is the easiest and quickest way to distribute your own Python packages.

However, pip install is not limited to PyPI: it can also install packages from VCS project URLs, local project directories, and local or remote source archives.
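The different kinds of sources pip accepts can be illustrated with a small Python helper that builds `pip install` commands for the current interpreter. This is a sketch: `pip_install_command` and `install` are illustrative names, not part of pip, and the VCS URL, directory, and archive URL below are hypothetical placeholders.

```python
import subprocess
import sys
from typing import List


def pip_install_command(source: str) -> List[str]:
    """Build a `pip install` command for the interpreter running this script.

    `source` may be a PyPI project name, a VCS URL, a local project
    directory, or a local or remote source archive.
    """
    return [sys.executable, "-m", "pip", "install", source]


def install(source: str) -> None:
    """Actually run the install; raises CalledProcessError if pip fails."""
    subprocess.run(pip_install_command(source), check=True)


# Hypothetical sources, one per form that pip accepts.
examples = [
    "jupyter",                                               # project name on PyPI
    "git+https://github.com/example-user/example-repo.git",  # VCS project URL
    "./my-local-project",                                    # local project directory
    "https://example.com/downloads/mypkg-1.0.tar.gz",        # remote source archive
]

for source in examples:
    # Print the commands only; call install(source) to execute one.
    print(" ".join(pip_install_command(source)))
```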

Jupyter is one of the most popular interactive shells and supports developing code in a wide variety of languages: Python is not the only one supported.

Directly from http://jupyter.org:

Another way to install Jupyter, if you are using the Anaconda distribution of Python, is to use its package management tool, conda. Here's how:

conda install jupyter

Note that pip install will also work in Anaconda.
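If you are not sure whether you are working inside an Anaconda (conda) environment, one simple heuristic is to look for the `conda-meta` directory that conda creates in each environment's prefix. The sketch below assumes that heuristic to choose between the two install commands; `in_conda_environment` and `jupyter_install_command` are hypothetical helper names.

```python
import os
import sys
from typing import List


def in_conda_environment() -> bool:
    """Heuristic: conda environments contain a `conda-meta` directory under sys.prefix."""
    return os.path.isdir(os.path.join(sys.prefix, "conda-meta"))


def jupyter_install_command() -> List[str]:
    """Prefer conda inside an Anaconda environment; otherwise fall back to pip."""
    if in_conda_environment():
        return ["conda", "install", "jupyter"]
    return [sys.executable, "-m", "pip", "install", "jupyter"]


print(" ".join(jupyter_install_command()))
```

Since pip also works under Anaconda, falling back to pip is always an option; preferring conda there simply keeps conda aware of every package in the environment.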

There's more...

Now that you have Jupyter on your machine, and assuming you followed the steps of either the Installing Spark from sources or the Installing Spark from binaries recipes, you should be able to start using Jupyter to interact with PySpark.

To refresh your memory, as part of the Spark installation scripts, we appended two environment variables to the bash profile file: PYSPARK_DRIVER_PYTHON and PYSPARK_DRIVER_PYTHON_OPTS. We set the former to use jupyter and the latter to start a notebook service.
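This recipe does not repeat the exact values written to the bash profile, so the sketch below assumes typical ones: `jupyter` for PYSPARK_DRIVER_PYTHON and `notebook --no-browser --port=6661` for PYSPARK_DRIVER_PYTHON_OPTS, with port 6661 chosen to match the address used in this recipe. Instead of editing the profile, it sets both variables for a single `pyspark` child process. `pyspark_notebook_env` and `launch_pyspark_notebook` are hypothetical helper names, and `pyspark` is assumed to be on your PATH.

```python
import os
import subprocess
from typing import Dict, Optional


def pyspark_notebook_env(port: int = 6661, base: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Return a copy of `base` (default: os.environ) that makes pyspark start Jupyter.

    PYSPARK_DRIVER_PYTHON picks the program used as the driver front end;
    PYSPARK_DRIVER_PYTHON_OPTS holds the arguments passed to it.
    The option values here are assumptions, not the recipe's exact settings.
    """
    env = dict(os.environ if base is None else base)
    env["PYSPARK_DRIVER_PYTHON"] = "jupyter"
    env["PYSPARK_DRIVER_PYTHON_OPTS"] = f"notebook --no-browser --port={port}"
    return env


def launch_pyspark_notebook(port: int = 6661) -> subprocess.Popen:
    """Start `pyspark` with the notebook settings above and return the process handle."""
    return subprocess.Popen(["pyspark"], env=pyspark_notebook_env(port))
```

Calling `launch_pyspark_notebook()` should have roughly the same effect as typing `pyspark` in a Terminal whose profile already exports the two variables; setting them per process leaves your shell profile untouched.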

If you now open your Terminal and type:

pyspark

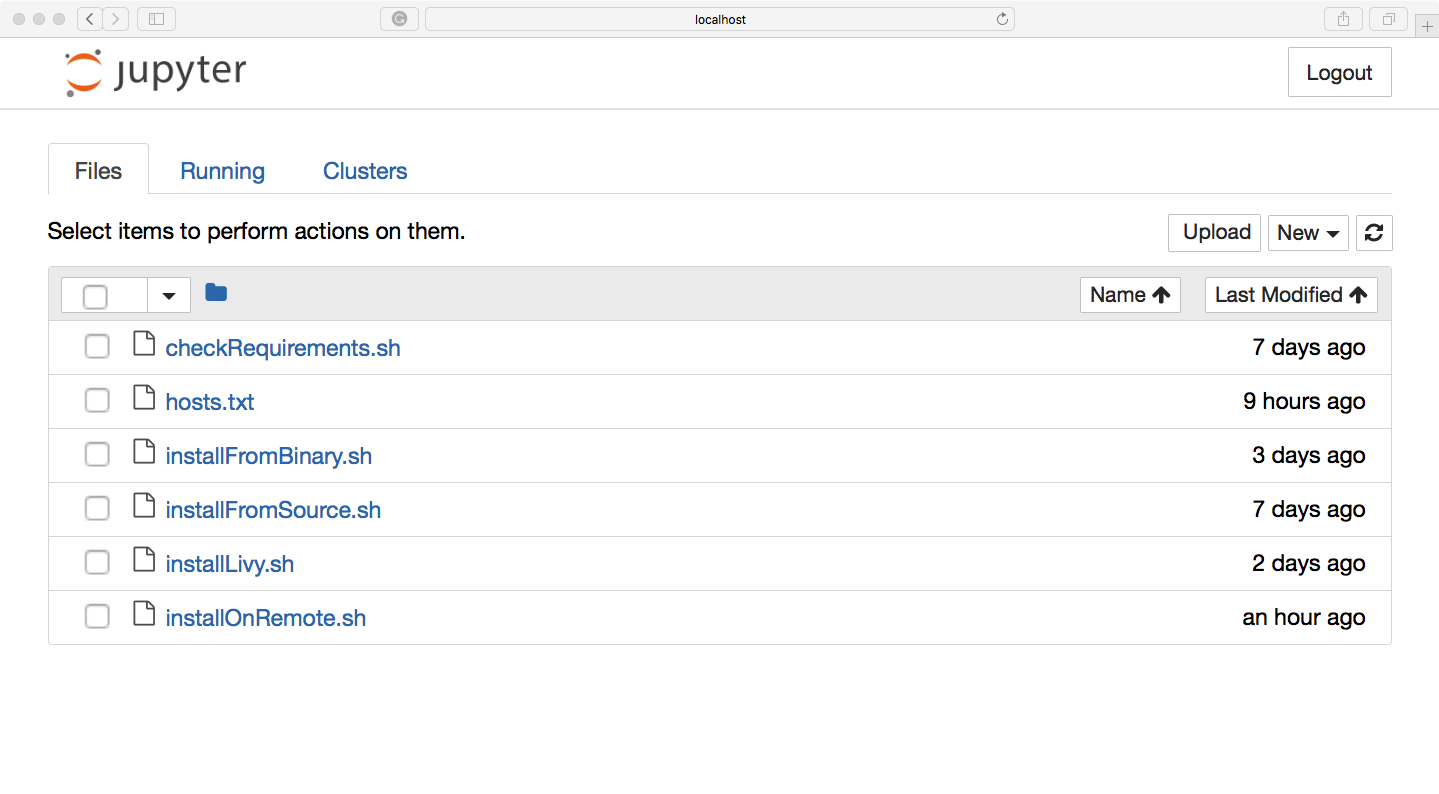

When you open your browser and navigate to http://localhost:6661, you should see a window not that different from the one in the following screenshot:

See also

- Check out https://pypi.python.org/pypi, as the number of really cool projects available for Python is mind-boggling