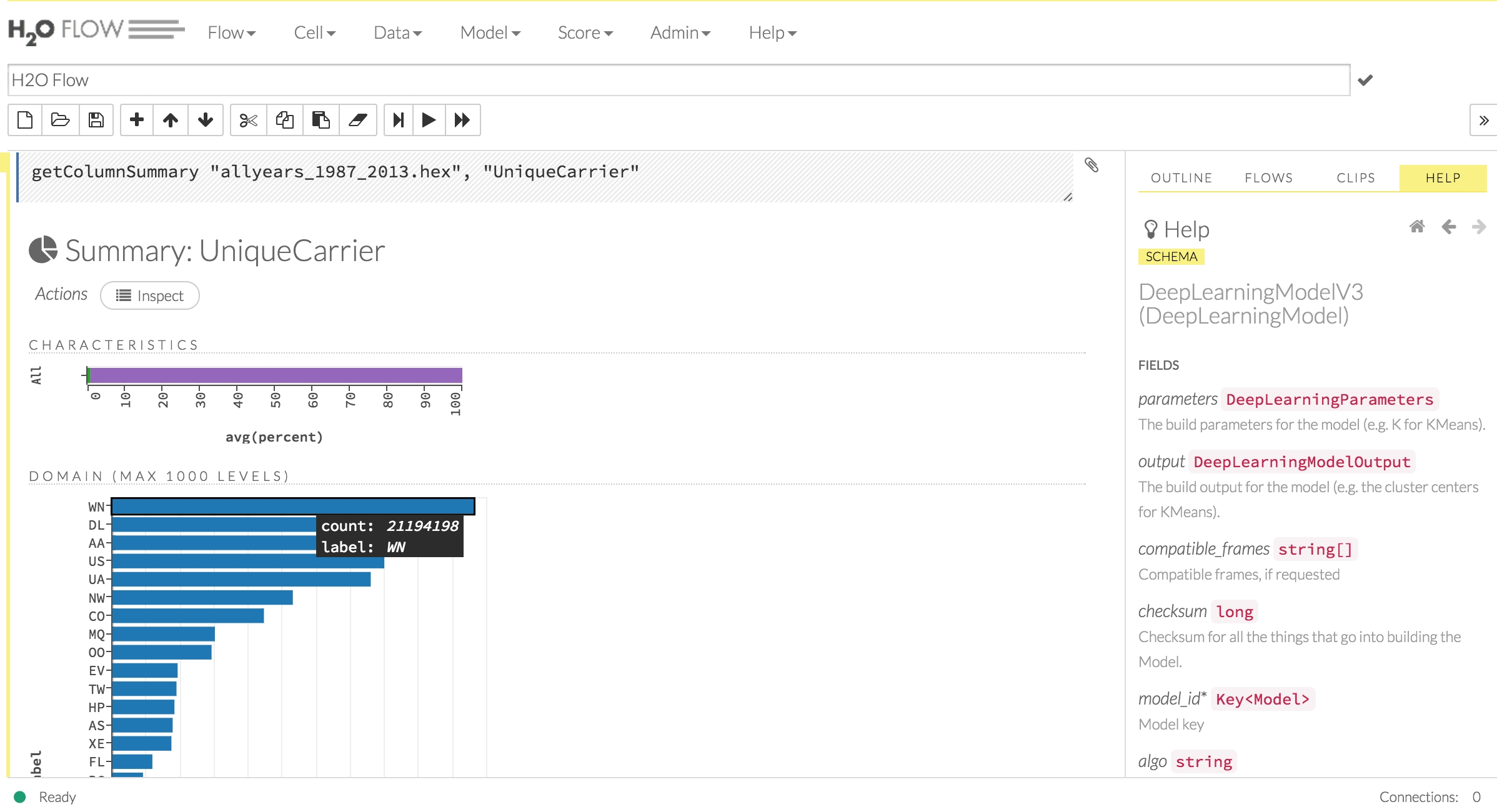

As stated previously, MLlib is a library of popular machine learning algorithms built using Spark. Not surprisingly, H2O and MLlib share many of the same algorithms but differ in both their implementation and functionality. One very handy feature of H2O is that it allows users to visualize their data and perform feature engineering tasks, which we will cover in depth in later chapters. The visualization of data is accomplished by a web-friendly GUI and allows users a friendly interface to seamlessly switch between a code shell and a notebook-friendly environment. The following is an example of the H2O notebook - called Flow - that you will become familiar with soon:

One other nice addition is that H2O allows data scientists to grid search many hyper-parameters that ship with their algorithms. Grid search is a way of optimizing all the hyperparameters of an algorithm to make model configuration easier. Often, it is difficult to know which hyperparameters to change and how to change them; the grid search allows us to explore many hyperparameters simultaneously, measure the output, and help select the best models based on our quality requirements. The H2O grid search can be combined with model cross-validation and various stopping criteria, resulting in advanced strategies such as picking 1000 random parameters from a huge parameters hyperspace and finding the best model that can be trained under two minutes and with AUC greater than 0.7