Module transport and execution

Once a playbook is parsed and the hosts are determined, Ansible is ready to execute a task. Tasks are made up of a name (this optional, but nonetheless important, as mentioned previously), a module reference, module arguments, and task control directives. In Ansible 2.9 and earlier, modules were identified by a single unique name. However, in versions of Ansible such as 2.10 and later, the advent of collections (which we will discuss in more detail in the next chapter) meant that Ansible module names could now be non-unique. As a result, those of you with prior Ansible experience might have noticed that, in this book, we are using ansible.builtin.debug instead of debug, which we would have used in Ansible 2.9 and earlier. In some cases, you can still get away with using the short-form module names (such as debug); however, remember that the presence of a collection with its own module called debug might cause unexpected results. And, as such, the advice from Ansible in their official documentation is to start making friends with the long-form module names as soon as possible – these are officially called FQCNs. We will use them throughout this book and will explain all of this in more detail in the next chapter. In addition to this, a later chapter will cover task control directives in detail, so we will only concern ourselves with the module reference and arguments.

The module reference

Every task has a module reference. This tells Ansible which bit of work to carry out. Ansible has been designed to easily allow for custom modules to live alongside a playbook. These custom modules can be a whole new functionality, or they can replace modules shipped with Ansible itself. When Ansible parses a task and discovers the name of the module to use for a task, it looks in a series of locations in order to find the module requested. Where it looks also depends on where the task lives, for example, whether inside a role or not.

If a task is inside a role, Ansible will first look for the module within a directory tree named library within the role the task resides in. If the module is not found there, Ansible looks for a directory named library at the same level as the main playbook (the one referenced by the ansible-playbook execution). If the module is not found there, Ansible will finally look in the configured library path, which defaults to /usr/share/ansible/. This library path can be configured in an Ansible config file or by way of the ANSIBLE_LIBRARY environment variable.

In addition to the preceding paths (which have been established as valid module locations in Ansible almost since its inception), the advent of Ansible 2.10 and newer versions bring with them Collections. Collections are now one of the key ways in which modules can be organized and shared with others. For instance, in the earlier example where we looked at the Amazon EC2 dynamic inventory plugin, we installed a collection called amazon.aws. In that example, we only made use of the dynamic inventory plugin; however, installing the collection actually installed a whole set of modules for us to use to automate tasks on Amazon EC2. The collection would have been installed in ~/.ansible/collections/ansible_collections/amazon/aws if you ran the command provided in this book. If you look in there, you will find the modules in the plugins/modules subdirectory. Further collections that you install will be located in similar directories, which have been named after the collection that they were installed from.

This design, which enables modules to be bundled with collections, roles, and playbooks, allows for the addition of functionality or the reparation of problems quickly and easily.

Module arguments

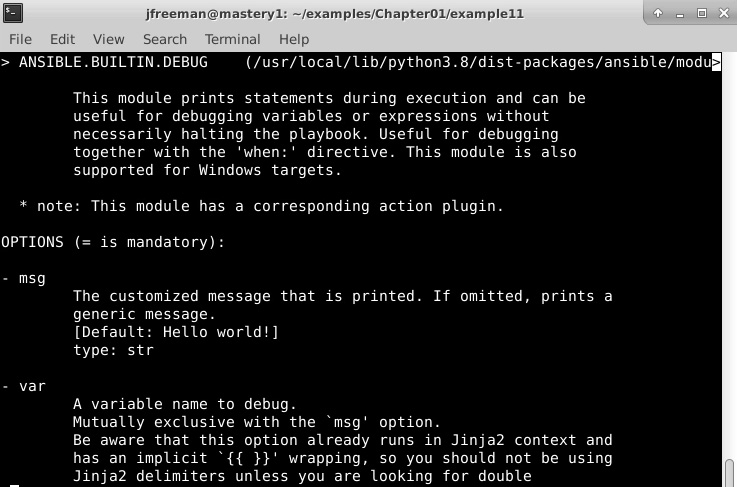

Arguments to a module are not always required; the help output of a module will indicate which arguments are required and which are not. Module documentation can be accessed with the ansible-doc command, as follows (here, we will use the debug module, which we have already used as an example):

ansible-doc ansible.builtin.debug

Figure 1.11 shows the kind of output you can expect from this command:

Figure 1.11 – An example of the output from the ansible-doc command run on the debug module

If you scroll through the output, you will find a wealth of useful information including example code, the outputs from the module, and the arguments (that is, options), as shown in Figure 1.11

Arguments can be templated with Jinja2, which will be parsed at module execution time, allowing for data discovered in a previous task to be used in later tasks; this is a very powerful design element.

Arguments can either be supplied in a key=value format or in a complex format that is more native to YAML. Here are two examples of arguments being passed to a module showcasing these two formats:

- name: add a keypair to nova

openstack.cloudkeypair: cloud={{ cloud_name }} name=admin-key wait=yes

- name: add a keypair to nova

openstack.cloud.keypair:

cloud: "{{ cloud_name }}"

name: admin-key

wait: yes

In this example, both formats will lead to the same result; however, the complex format is required if you wish to pass complex arguments into a module. Some modules expect a list object or a hash of data to be passed in; the complex format allows for this. While both formats are acceptable for many tasks, the complex format is the format used for the majority of examples in this book as, despite its name, it is actually easier for humans to read.

Module blacklisting

Starting with Ansible 2.5, it is now possible for system administrators to blacklist Ansible modules that they do not wish to be available to playbook developers. This might be for security reasons, to maintain conformity or even to avoid the use of deprecated modules.

The location for the module blacklist is defined by the plugin_filters_cfg parameter found in the defaults section of the Ansible configuration file. By default, it is disabled, and the suggested default value is set to /etc/ansible/plugin_filters.yml.

The format for this file is, at present, very simple. It contains a version header to allow for the file format to be updated in the future and a list of modules to be filtered out. For example, if you were preparing for a transition to Ansible 4.0 and were currently on Ansible 2.7, you would note that the sf_account_manager module is to be completely removed in Ansible 4.0. As a result, you might wish to prevent users from making use of this by blacklisting it to prevent anyone from creating code that would break when Ansible 4.0 is rolled out (please refer to https://docs.ansible.com/ansible/devel/porting_guides/porting_guide_2.7.html). Therefore, to prevent anyone from using this internally, the plugin_filters.yml file should look like this:

--- filter_version:'1.0' module_blacklist: # Deprecated – to be removed in 4.0 - sf_account_manager

Although useful in helping to ensure high-quality Ansible code is maintained, this functionality is, at the time of writing, limited to modules. It cannot be extended to anything else, such as roles.

Module transport and execution

Once a module is found, Ansible has to execute it in some way. How the module is transported and executed depends on a few factors; however, the common process is to locate the module file on the local filesystem and read it into memory, and then add the arguments passed to the module. Then, the boilerplate module code from the Ansible core is added to the file object in memory. This collection is compressed, Base64-encoded, and then wrapped in a script. What happens next really depends on the connection method and runtime options (such as leaving the module code on the remote system for review).

The default connection method is smart, which most often resolves to the ssh connection method. With a default configuration, Ansible will open an SSH connection to the remote host, create a temporary directory, and close the connection. Ansible will then open another SSH connection in order to write out the wrapped ZIP file from memory (the result of local module files, task module arguments, and Ansible boilerplate code) into a file within the temporary directory that we just created and close the connection.

Finally, Ansible will open a third connection in order to execute the script and delete the temporary directory and all of its contents. The module results are captured from stdout in JSON format, which Ansible will parse and handle appropriately. If a task has an async control, Ansible will close the third connection before the module is complete and SSH back into the host to check the status of the task after a prescribed period until the module is complete or a prescribed timeout has been reached.

Task performance

The previous discussion regarding how Ansible connects to hosts results in three connections to the host for every task. In a small environment with a small number of tasks, this might not be a concern; however, as the task set grows and the environment size grows, the time required to create and tear down SSH connections increases. Thankfully, there are a couple of ways to mitigate this.

The first is an SSH feature, ControlPersist, which provides a mechanism that creates persistent sockets when first connecting to a remote host that can be reused in subsequent connections to bypass some of the handshaking required when creating a connection. This can drastically reduce the amount of time Ansible spends on opening new connections. Ansible automatically utilizes this feature if the host platform that runs Ansible supports it. To check whether your platform supports this feature, refer to the SSH man page for ControlPersist.

The second performance enhancement that can be utilized is an Ansible feature called pipelining. Pipelining is available to SSH-based connection methods and is configured in the Ansible configuration file within the ssh_connection section:

[ssh_connection] pipelining=true

This setting changes how modules are transported. Instead of opening an SSH connection to create a directory, another to write out the composed module, and a third to execute and clean up, Ansible will instead open an SSH connection on the remote host. Then, over that live connection, Ansible will pipe in the zipped composed module code and script for execution. This reduces the connections from three to one, which can really add up. By default, pipelining is disabled to maintain compatibility with the many Linux distributions that have requiretty enabled in their sudoers configuration file.

Utilizing the combination of these two performance tweaks can keep your playbooks nice and fast even as you scale your environment. However, bear in mind that Ansible will only address as many hosts at once as the number of forks Ansible is configured to run. Forks are the number of processes Ansible will split off as a worker to communicate with remote hosts. The default is five forks, which will address up to five hosts at once. You can raise this number to address more hosts as your environment size grows by adjusting the forks= parameter in an Ansible configuration file or by using the --forks (-f) argument with ansible or ansible-playbook.