Understanding Azure disks

Azure disks are block storage primarily intended for virtual machines. They offer different levels of performance depending on your requirements and the stock keeping unit (SKU) you choose.

Disk management options

Azure disks come with two management options:

- Managed disks: Azure manages the disks for you. The platform automatically decides where each disk is stored, handles scaling, and ensures that the disk delivers its intended performance. Managed disks also come with higher Service Level Agreements (SLAs) than unmanaged disks. Disk costs are calculated on the provisioned size of the disk, regardless of how much data it holds. Microsoft recommends managed disks over unmanaged disks.

- Unmanaged disks: You manage these disks yourself, which requires allocating each disk to a storage account. Managing that allocation carefully is vital to the reliability of the disks; factors such as the space available in the storage account and the storage account type are pivotal. Disk costs are calculated based on the data consumed on the disk.

Disk performance

When selecting the correct disk for your application's purpose, consider the reliability and performance requirements. Factors that should be considered include the following:

- IOPS: Input/output operations per second (IOPS) is a storage performance measurement that counts the operations performed each second.

- Throughput: Disk throughput describes the speed at which data can be transferred through a disk. Throughput is calculated as IOPS x I/O size. For example, 2,500 IOPS at an I/O size of 64 KiB gives roughly 156 MiB/s.

- Reliability: The SLA that Azure offers for a VM depends on the type of disk attached to it.

- Latency: Latency is the time it takes a disk to process a request and send a response. It limits the effective performance of a disk, so high-performance workloads should use disks with lower latency. For example, if a disk offers a maximum of 2,500 IOPS but each operation takes 10 ms and the application issues one request at a time, the disk can complete only 100 operations per second (1,000 ms / 10 ms), which can be highly restrictive.

Premium storage provides consistently lower latency than other Azure storage and is advised when considering high-performance workloads.
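The relationships between IOPS, throughput, and latency can be sketched in a few lines of Python. This is a simplified model, not an Azure formula: it assumes every operation takes the same time, and the function names and the `outstanding_ios` parameter (the number of requests in flight at once) are illustrative choices.

```python
def throughput_mib_per_s(iops: float, io_size_kib: float) -> float:
    """Throughput = IOPS x I/O size, converted from KiB/s to MiB/s."""
    return iops * io_size_kib / 1024


def latency_bound_iops(latency_ms: float, outstanding_ios: int = 1) -> float:
    """Most operations per second possible when each one takes latency_ms.

    Assumption: a fixed number of requests are in flight at any time.
    """
    return outstanding_ios * 1000 / latency_ms


def effective_iops(disk_max_iops: float, latency_ms: float,
                   outstanding_ios: int = 1) -> float:
    """The disk delivers the lower of its rated IOPS and the latency bound."""
    return min(disk_max_iops, latency_bound_iops(latency_ms, outstanding_ios))


# Hypothetical disk rated at 5,000 IOPS with 2 ms per operation.
print(throughput_mib_per_s(5000, 8))              # 39.0625 MiB/s at 8 KiB I/Os
print(effective_iops(5000, 2))                    # 500.0 with one request at a time
print(effective_iops(5000, 2, outstanding_ios=16))  # 5000, capped by the disk rating
```

In this model, issuing more requests in parallel hides latency until the disk's rated IOPS becomes the limit, which is why low latency matters most for applications that issue requests serially.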

Top Tip

The SLA for a single-instance Azure VM goes up to 99.9% when premium SSD disks are attached, compared to 99.5% with standard SSD disks and 95% with standard HDD disks. Because of this, premium SSD disks are advised for production or critical virtual machines in Azure.
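To put these percentages in perspective, the following sketch converts each SLA into the downtime it would still permit. The 730-hour month is an assumption made for illustration; the SLA terms themselves define how availability is actually measured.

```python
HOURS_PER_MONTH = 730  # assumption: average month length, for illustration only


def max_downtime_hours(sla_percent: float, hours: float = HOURS_PER_MONTH) -> float:
    """Hours of downtime in the period that still meet the given SLA."""
    return hours * (1 - sla_percent / 100)


for disk_type, sla in [("Premium SSD", 99.9),
                       ("Standard SSD", 99.5),
                       ("Standard HDD", 95.0)]:
    print(f"{disk_type}: up to {max_downtime_hours(sla):.2f} hours per month")
```

Under this assumption, the gap between 99.9% and 95% is the difference between well under an hour and more than a day of permitted downtime each month.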

Now that you understand the various factors that impact disk performance, we will learn about disk caching and its effect on performance.

Disk caching

Caching refers to temporary storage utilized in a specialized fashion to provide faster read and write performance than traditional permanent storage. It does this by loading data that is read or written frequently (and therefore follows repeatable patterns) into the cache storage. As such, the data access needs to be predictable to benefit from caching. Data is loaded into the cache after it has been read from or written to the disk at least once.

Write caching speeds up data writes by persisting data in a lazy-write fashion. Written data is stored in the cache and the app considers it saved to the persistent disk, but the cache in fact waits for an opportunity to write the data to the disk. This introduces a risk of data loss if the system shuts down before the write to disk completes.
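The lazy-write behavior can be illustrated with a toy model. This is only a sketch of the general idea, not how Azure host caching is implemented; the `WriteBackCache` class and the dictionary standing in for the persistent disk are hypothetical.

```python
class WriteBackCache:
    """Toy lazy-write cache in front of a dict that stands in for the disk."""

    def __init__(self, disk: dict):
        self.disk = disk      # persistent storage (hypothetical stand-in)
        self.cache = {}       # blocks held in fast temporary storage
        self.dirty = set()    # blocks written to the cache but not yet to disk

    def write(self, block, data):
        # The caller sees the write as complete, but only the cache is updated.
        self.cache[block] = data
        self.dirty.add(block)

    def read(self, block):
        # The first read loads the block from disk; later reads hit the cache.
        if block not in self.cache:
            self.cache[block] = self.disk[block]
        return self.cache[block]

    def flush(self):
        # Persist every pending write to the disk.
        for block in self.dirty:
            self.disk[block] = self.cache[block]
        self.dirty.clear()


disk = {0: "old"}
cache = WriteBackCache(disk)
cache.write(0, "new")
print(cache.read(0), disk[0])  # new old  (the disk is stale until the flush)
cache.flush()
print(disk[0])                 # new
```

If the process stopped between `write` and `flush`, the disk would still hold the old value, which is the risk described above.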

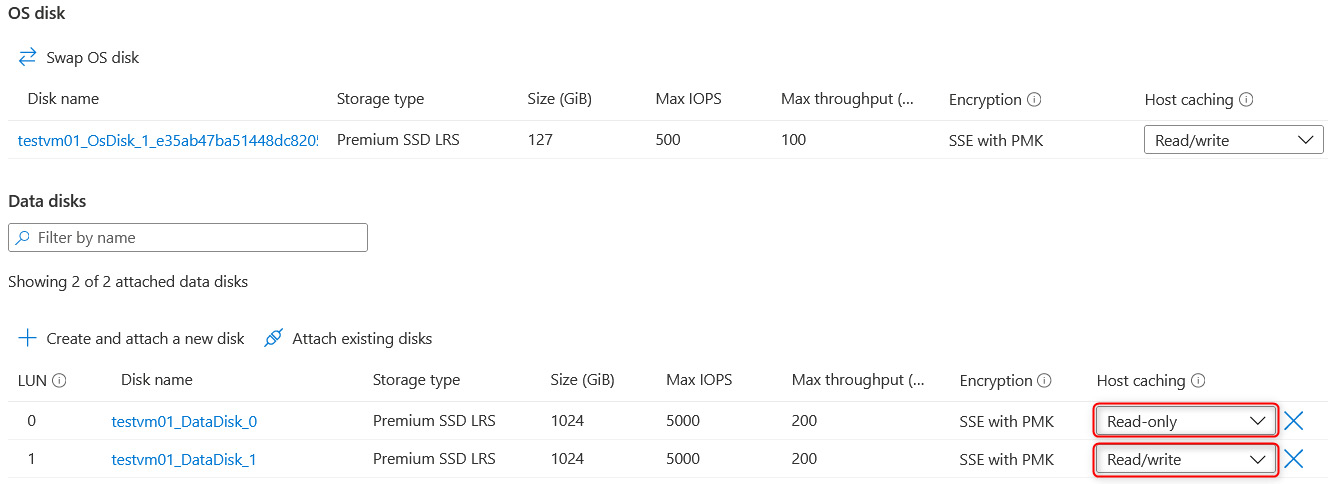

Host caching can be enabled on your VMs and can be configured per disk (both OS and data disks). The default configuration for the OS disk sets this to Read/write. The default setting for data disks is Read-only mode:

Figure 10.1 – Disk cache configuration on a VM

The following caching modes can be selected depending on your requirement:

- Read-only: Use this when you expect workloads to predominantly perform repeatable read operations. Retrieval is faster when data is served from the cache.

- Read/write: Use this when you expect workloads to perform a balance of repeatable or predictable read and write operations.

- None: If your workload doesn't follow either of these patterns, then it's best to set host caching to none.
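The selection guidance above can be written as a small decision helper. This is a sketch only: the function name and boolean inputs are hypothetical, and treating a workload with repeatable writes but no repeatable reads as "None" is an assumption, since the list does not cover that case explicitly.

```python
def choose_host_caching(repeatable_reads: bool, repeatable_writes: bool) -> str:
    """Map a workload's access pattern to one of the three caching modes."""
    if repeatable_reads and repeatable_writes:
        return "Read/write"
    if repeatable_reads:
        return "Read-only"
    # Assumption: anything else, including write-only patterns, gets no caching.
    return "None"


print(choose_host_caching(True, False))   # Read-only
print(choose_host_caching(True, True))    # Read/write
print(choose_host_caching(False, False))  # None
```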

Top Tip

Caching provides little or no value for random I/O. Where data is dispersed across the disk, it can even reduce performance, so consider it carefully before enabling it.

You now understand the different types of caching available. Next, we will explore how to calculate the performance impact of the different caching options.

Calculating expected caching performance

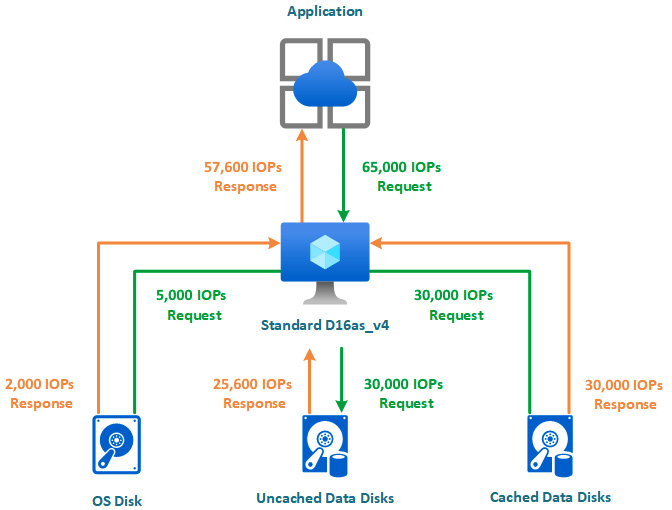

When configuring your VM to take advantage of disk caching, consider the type of operations, as mentioned in the preceding sections, and whether your workloads will benefit from caching. Set the appropriate caching option on each disk that should be cached. Each VM SKU has its own cached and uncached performance limits. Because these limits are applied separately, caching some disks and leaving others uncached can allow the VM to deliver more total IOPS than either limit alone.

For example, imagine you have a Standard_D16as_v4 VM provisioned with 8 data disks. This setup has the following performance characteristics:

- Cached IOPS: 32,000

- Uncached IOPS: 25,600

- 8 x P40 data disks that can achieve 7,500 IOPS each

Some items to note are the following:

- 4 of the P40 data disks are attached in Read-only cache mode and the others are set to None.

- Although the VM SKU is capable of having 32 data disks, only 8 data disks were used and attached for the example.

An application installed on the VM requests 65,000 IOPS in total: 5,000 from the OS disk and 30,000 from each of the two groups of four data disks (cached and uncached). The disks can offer the following:

- The OS disk offers 2,000 IOPS

- The 4 data disks that are cached offer a collective 30,000 IOPS

- The 4 data disks that are not cached offer a collective 30,000 IOPS

Since the VM imposes an uncached limit of 25,600 IOPS, this is the maximum delivered by the uncached disks, even though they offer 30,000 IOPS. The cached data disks are combined with the OS disk for a collective 32,000 IOPS (30,000 + 2,000). This matches the cached limit imposed on the SKU, so that limit is reached too. By using the cache effectively, the VM in this example achieves a total of 57,600 IOPS (32,000 + 25,600), which far exceeds either individual limit imposed on the VM. This is illustrated in the following diagram:

Figure 10.2 – Cache calculations

As you can see from the preceding diagram, by carefully analyzing disk caching, significant performance improvements can be achieved.
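The arithmetic in this example can be reproduced with a short Python sketch. The limits and disk figures are taken from the example above; the function name is hypothetical, and the model simply assumes that cached and uncached traffic are each capped by their own VM limit.

```python
CACHED_LIMIT = 32_000    # cached IOPS limit of the VM SKU in the example
UNCACHED_LIMIT = 25_600  # uncached IOPS limit of the VM SKU in the example
P40_IOPS = 7_500         # IOPS offered by each P40 data disk


def vm_delivered_iops(cached_offered: int, uncached_offered: int) -> dict:
    """Cap cached and uncached traffic separately, then add the results."""
    cached = min(cached_offered, CACHED_LIMIT)
    uncached = min(uncached_offered, UNCACHED_LIMIT)
    return {"cached": cached, "uncached": uncached, "total": cached + uncached}


os_disk_iops = 2_000                 # the OS disk counts toward the cached total
cached_data_iops = 4 * P40_IOPS      # four disks in Read-only cache mode
uncached_data_iops = 4 * P40_IOPS    # four disks with caching set to None

result = vm_delivered_iops(os_disk_iops + cached_data_iops, uncached_data_iops)
print(result)  # {'cached': 32000, 'uncached': 25600, 'total': 57600}
```

Setting all eight data disks to None in this model would cap them at the uncached limit of 25,600 IOPS, which shows why splitting disks across both paths raises the total.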

Top Tip

Take note that changing the cache setting of a disk interrupts that disk on the VM. When the setting is modified on a data disk, the disk is detached and reattached; when it is modified on the OS disk, the VM is restarted.

Now that you understand the impact of caching on disks and VMs, we will explore the different types/SKUs of disks available to us within Azure.