Key concepts of regularization

Now that we have some intuition for what makes a good fit, and have seen examples of underfitting and overfitting, let us define these ideas more precisely and introduce the key concepts needed to understand regularization.

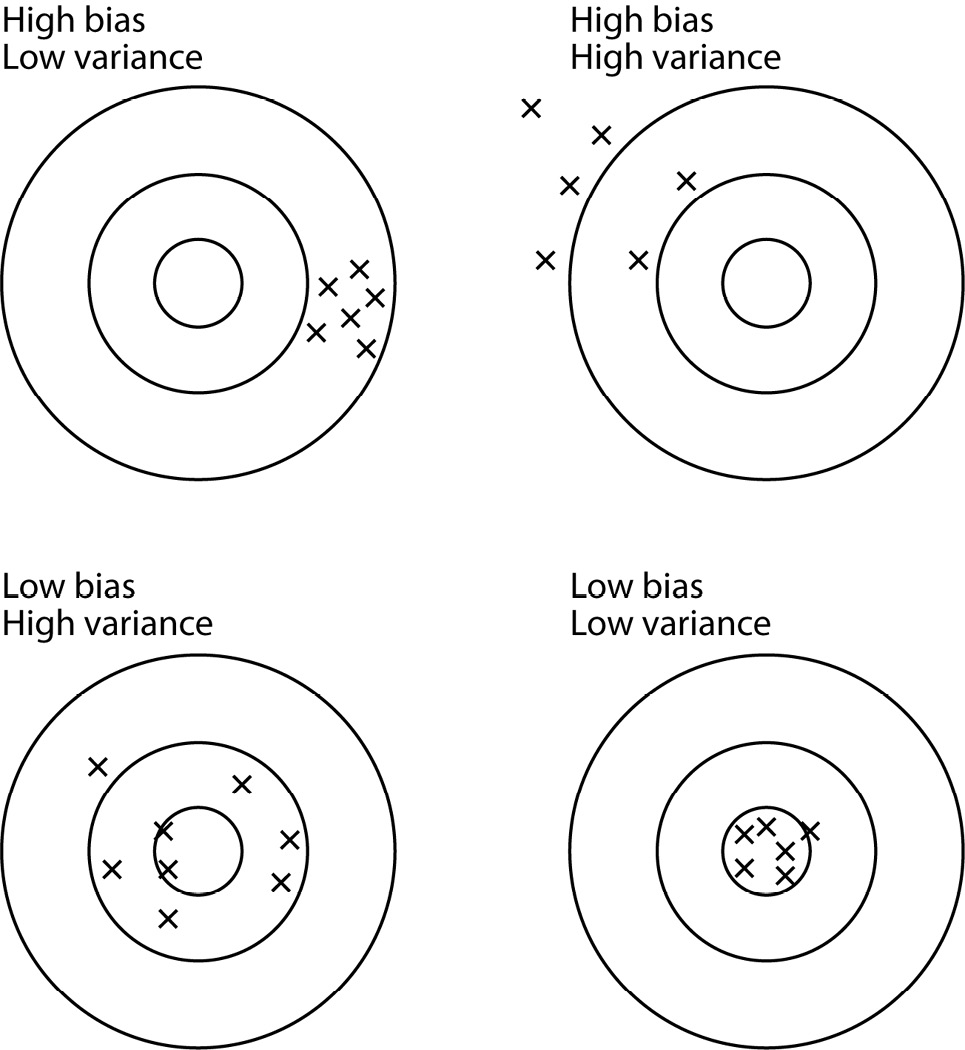

Bias and variance

Bias and variance are two key concepts for understanding regularization. They correspond to two main kinds of error a model can make:

- Bias measures how poorly a model captures the general behavior of the data; a model with high bias tends to underfit

- Variance measures how sensitive a model is to small fluctuations in its training data; a model with high variance tends to overfit

These two kinds of error are, in general, not mutually exclusive: a model can suffer from both at once. Stepping back from ML for a moment, a common way to visualize bias and variance is a target, where the model’s goal is to hit the center:

Figure 1.7 – Visualization of bias and variance

Let’s describe those four cases:

- High bias and low variance...