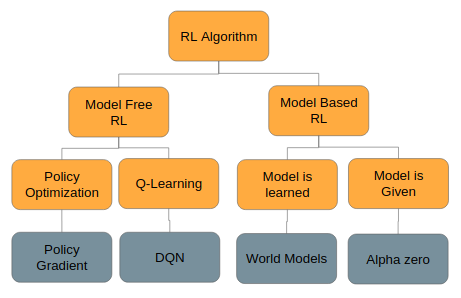

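There are many types of RL algorithms. The main distinction is between model-based and model-free algorithms, that is, whether or not the agent uses a model of the environment (a function that predicts state transitions and rewards). What we model about the environment is shown in the following diagram: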

Model-based RL, as the name suggests, works with a model of the world. This allows the agent to plan by thinking ahead and evaluating possible outcomes before it acts. One problem with this approach is that the true model of the environment is usually not available, so the agent has to learn it from experience. A well-known model-based example is AlphaZero, from DeepMind, which was given the rules of the game as its model and was trained by self-play.
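To make "planning with a model" concrete, here is a minimal sketch, assuming a hypothetical five-state corridor in which the agent is handed the model directly. The names `model`, `plan`, and `greedy_action`, the reward of 1.0 at the goal, and the discount factor are all illustrative assumptions, and value iteration is just one simple way to plan:

```python
# Hypothetical toy problem: a corridor with states 0..4, where state 4 is the goal.
N_STATES = 5
ACTIONS = (-1, +1)  # move left or move right
GAMMA = 0.9         # discount factor (assumed value)


def model(state, action):
    """Known model of the environment: returns (next_state, reward)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward


def plan(n_sweeps=50):
    """Value iteration: uses only the model, never the real environment."""
    values = [0.0] * N_STATES
    for _ in range(n_sweeps):
        for s in range(N_STATES - 1):  # the goal state is terminal, its value stays 0
            outcomes = [model(s, a) for a in ACTIONS]
            values[s] = max(r + GAMMA * values[s2] for s2, r in outcomes)
    return values


def greedy_action(state, values):
    """Look one step ahead with the model and pick the best action."""
    def lookahead(action):
        next_state, reward = model(state, action)
        return reward + GAMMA * values[next_state]
    return max(ACTIONS, key=lookahead)


values = plan()
policy = [greedy_action(s, values) for s in range(N_STATES - 1)]
```

In this sketch the agent never takes a single step in a real environment: everything it needs comes from querying `model`. When the model is not given, it would have to be estimated from experience first, which is the harder case described above.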

On the other hand, we have model-free methods, which don't use a model of the environment and instead learn directly from trial and error. They give up the potential gains in sample efficiency that a model can provide, but (currently) they are easier to implement, tune, and improve.
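As an illustration of the model-free idea, the following is a minimal sketch of tabular Q-learning, assuming a hypothetical five-state corridor environment. The function names `step` and `q_learning`, the reward of 1.0 at the goal, and the hyperparameter values are illustrative assumptions. The agent treats `step` as a black box and learns only from the transitions it observes:

```python
import random

# Hypothetical toy problem: a corridor with states 0..4, where state 4 is the goal.
N_STATES = 5
ACTIONS = (-1, +1)  # move left or move right
GAMMA = 0.9         # discount factor (assumed value)
ALPHA = 0.5         # learning rate (assumed value)


def step(state, action):
    """Black-box environment: the agent only sees what this returns."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def q_learning(n_episodes=200, seed=0):
    """Tabular Q-learning driven by a purely random behavior policy."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(n_episodes):
        state, done = 0, False
        while not done:
            # Q-learning is off-policy, so random exploration is enough here.
            action = rng.choice(ACTIONS)
            next_state, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            target = reward + GAMMA * best_next
            q[(state, action)] += ALPHA * (target - q[(state, action)])
            state = next_state
    return q


q = q_learning()
learned_policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
```

The update rule never predicts what the environment will do next; it only uses observed `(state, action, reward, next_state)` transitions. The price is that the agent needs many episodes of interaction, even on a problem this small.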

In this chapter, we will focus on...