Before we get started with TensorFlow Playground, let's quickly recap the essential concepts. Doing so will help us appreciate the tool better.

Neural networks are inspired by the biological brain, whose basic unit is the neuron.

A perceptron is an artificial neuron based on the idea of the biological neuron. The perceptron deals only with binary inputs and outputs, which makes it impractical for most real-world purposes. Also, because of its binary nature, a small change in its weights or inputs can flip the output completely, so it cannot be tuned gradually and does not capture fine detail.
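To make the "drastic change" concrete, here is a minimal sketch of a perceptron with a hard threshold. The weights and bias are hypothetical values chosen only for illustration; the point is that a tiny change in one weight flips the output from 0 to 1.

```python
def perceptron(inputs, weights, bias):
    """Classic perceptron: weighted sum followed by a hard threshold."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0

# Hypothetical weights and bias, chosen only for illustration.
weights = [0.5, 0.5]
bias = -0.5

# Two almost identical weight settings produce opposite outputs.
before = perceptron([1, 0], weights, bias)       # weighted sum is exactly 0
after = perceptron([1, 0], [0.501, 0.5], bias)   # weighted sum is slightly above 0
print(before, after)  # 0 1
```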

Activation functions were introduced to address this issue with perceptrons. This gave rise to other types of neurons whose outputs vary smoothly over a range, such as 0 to 1 or -1 to 1, instead of being just a 0 or a 1.
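As a rough illustration, the two ranges mentioned above correspond to the sigmoid (0 to 1) and tanh (-1 to 1) functions. The sketch below uses only Python's standard library and shows that a small change in the input produces only a small change in the output.

```python
import math

def sigmoid(z):
    """Squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    """Squashes any real number into the range (-1, 1)."""
    return math.tanh(z)

# Nearby inputs give nearby outputs, unlike a hard 0/1 threshold.
for z in (-0.01, 0.0, 0.01):
    print(z, round(sigmoid(z), 4), round(tanh(z), 4))
```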

ANNs are made up of these neurons stacked in layers: an input layer, one or more hidden layers (also called dense or fully connected layers), and an output layer.
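The layered structure can be sketched as a function that is applied once per layer. The weights below are hypothetical and the choice of tanh as the activation is an assumption made for this example; each neuron in a dense layer receives every output of the previous layer.

```python
import math

def dense(inputs, weights, biases):
    """One fully connected layer: every neuron sees every input."""
    return [
        math.tanh(sum(x * w for x, w in zip(inputs, neuron_w)) + b)
        for neuron_w, b in zip(weights, biases)
    ]

# Hypothetical weights: 2 inputs -> 3 hidden neurons -> 1 output neuron.
hidden_w = [[0.2, -0.4], [0.7, 0.1], [-0.5, 0.3]]
hidden_b = [0.0, 0.1, -0.1]
output_w = [[0.6, -0.2, 0.9]]
output_b = [0.05]

x = [1.0, 2.0]                              # input layer
hidden = dense(x, hidden_w, hidden_b)       # hidden (dense) layer
output = dense(hidden, output_w, output_b)  # output layer
print(len(hidden), len(output))  # 3 1
```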

Cost functions, such as mean squared error (MSE) and cross entropy, measure the magnitude of the error between the output of a neuron or network and the expected output.
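Both cost functions are short enough to write out by hand. The following is a minimal sketch with made-up targets and predictions; the cross entropy shown is the binary (two-class) form, and the `eps` clipping is an implementation detail added here to avoid taking the logarithm of zero.

```python
import math

def mse(targets, predictions):
    """Mean squared error: average of the squared differences."""
    return sum((t - p) ** 2 for t, p in zip(targets, predictions)) / len(targets)

def binary_cross_entropy(targets, predictions, eps=1e-12):
    """Binary cross entropy for targets in {0, 1} and predictions in (0, 1)."""
    total = 0.0
    for t, p in zip(targets, predictions):
        p = min(max(p, eps), 1.0 - eps)  # keep log() away from 0
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(targets)

targets = [1, 0, 1]
good = [0.9, 0.1, 0.8]   # predictions close to the targets
bad = [0.4, 0.6, 0.3]    # predictions far from the targets

print(mse(targets, good), mse(targets, bad))
print(binary_cross_entropy(targets, good), binary_cross_entropy(targets, bad))
```

In both cases, the predictions that are closer to the targets produce the smaller cost.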

Gradient descent is the mechanism through which a neuron learns to output values closer to the expected output: it repeatedly nudges each weight in the direction that reduces the cost.

Backpropagation is an efficient way to make gradient descent happen throughout the network: it passes the error backward from the output, layer by layer, so that every weight in every layer receives its gradient.
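As a small sketch of the idea, consider a hypothetical two-weight chain: an input `x` feeds a tanh neuron with weight `w1`, whose output feeds a linear output with weight `w2`. The network, the squared-error loss, and all values are assumptions made for this illustration. Backpropagation applies the chain rule from the output backward, and we can check the result against a numerical estimate of the gradient.

```python
import math

def forward(w1, w2, x):
    h = math.tanh(w1 * x)  # hidden neuron
    y = w2 * h             # linear output neuron
    return h, y

def loss(w1, w2, x, target):
    _, y = forward(w1, w2, x)
    return 0.5 * (y - target) ** 2

def backprop(w1, w2, x, target):
    """Chain rule applied from the output back toward the input."""
    h, y = forward(w1, w2, x)
    d_y = y - target                 # dLoss/dy
    d_w2 = d_y * h                   # dLoss/dw2
    d_h = d_y * w2                   # dLoss/dh, passed back to the hidden neuron
    d_w1 = d_h * (1 - h ** 2) * x    # tanh'(z) = 1 - tanh(z)^2
    return d_w1, d_w2

w1, w2, x, target = 0.5, -0.3, 1.5, 1.0
d_w1, d_w2 = backprop(w1, w2, x, target)

# Numerical check using central differences.
eps = 1e-6
num_w1 = (loss(w1 + eps, w2, x, target) - loss(w1 - eps, w2, x, target)) / (2 * eps)
num_w2 = (loss(w1, w2 + eps, x, target) - loss(w1, w2 - eps, x, target)) / (2 * eps)
print(d_w1, num_w1)
print(d_w2, num_w2)
```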

Each round of propagating the inputs forward to get a predicted output, and then propagating the cost backward through the network, is called a training step.

The learning rate is the factor that controls how much the weights of a neuron are adjusted at each training step to get an output that's closer to the expected output.
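The effect of the learning rate is easiest to see on a single weight. The sketch below is a toy setup, not a Playground setting: one linear neuron, one training sample, and a squared-error cost. At each training step, the weight moves against the gradient by an amount scaled by the learning rate.

```python
def train(learning_rate, steps=20):
    """Gradient descent on a single weight; the ideal weight is 2.0."""
    w = 0.0
    x, target = 2.0, 4.0
    for _ in range(steps):
        prediction = w * x
        gradient = 2 * (prediction - target) * x  # d/dw of (w*x - target)^2
        w -= learning_rate * gradient             # the update rule
    return w

slow = train(0.01)    # small steps: still short of 2.0 after 20 steps
fast = train(0.1)     # larger steps: very close to 2.0
too_big = train(0.3)  # steps so large that the weight overshoots and diverges
print(slow, fast, too_big)
```

A learning rate that is too small makes learning slow, while one that is too large makes the weight overshoot the target on every step.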

Softmax is a special kind of output layer that accepts a weighted signal for each class, indicating the confidence of that class prediction, and outputs a probability score between 0 and 1 for every class, with the scores adding up to 1.
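A minimal sketch of softmax follows, using made-up scores for three classes. Subtracting the largest score before exponentiating is a common trick for numerical stability and does not change the result.

```python
import math

def softmax(scores):
    """Turn a list of raw class scores into probabilities that sum to 1."""
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]  # shift for stability
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])  # hypothetical scores for three classes
print([round(p, 3) for p in probs])
print(sum(probs))
```

The class with the highest score receives the highest probability, and the ordering of the classes is preserved.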

Now we can proceed to TensorFlow Playground at https://Playground.tensorflow.org. TensorFlow Playground is an online tool for visualizing an ANN or deep network in action, and it is an excellent place to reinforce, in a visual and intuitive way, what we have learned conceptually.

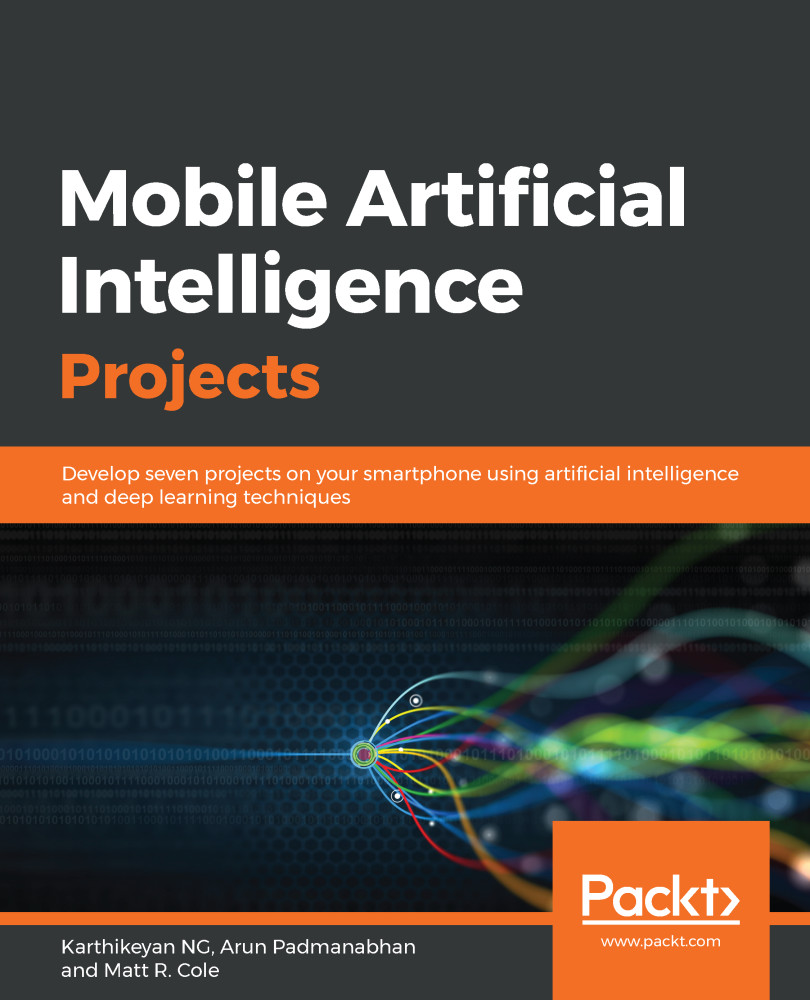

Once the page has loaded, you will see a dashboard for creating your own neural network for predefined classification problems. Here is a screenshot of the default page and its sections:

Let's look at each of the sections from this screenshot:

- Section 1: The data section offers a choice of pre-built problems on which to build and visualize the network. The first problem is selected, in which the task is to distinguish between the blue and orange dots. Below that, there are controls to divide the data into training and testing subsets. There is also a parameter to set the batch size, which is the number of samples fed into the network for learning during each training step.

- Section 2: The features section shows the input features that are fed to the network. In this case, two features are selected as inputs.

- Section 3: The hidden layer section is where we can create hidden layers to increase complexity. There are also controls to increase and decrease the number of neurons within each hidden or dense layer. In this example, there are two hidden layers with four and two neurons, respectively.

- Section 4: The output section is where we can see the loss or cost graph, along with a visualization of how well the network has learned to separate the orange and blue dots.

- Section 5: This section is the control panel for adjusting the tuning parameters of the network. It has a widget to start, pause, and reset the training of the network. Next to it, there is a counter indicating the number of epochs elapsed. Then there is the Learning rate, which controls how much the weights are adjusted at each training step. That is followed by the choice of activation function to use within the neurons. Finally, there is an option to indicate the kind of problem to visualize, that is, classification or regression. In this example, we are visualizing a classification task.

- We will ignore the Regularization and Regularization rate settings for now, as we have not yet covered these terms conceptually. We will return to them later in the book, at a point where their purpose is easier to appreciate.
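The data controls described in Section 1 can be sketched in a few lines. The dataset size of 500 and the helper name `split_and_batch` are hypothetical, used only for illustration. The sketch also assumes the usual convention that one epoch is one full pass over the training subset, made up of one training step per batch.

```python
import random

def split_and_batch(samples, train_ratio, batch_size, seed=0):
    """Shuffle, split into training/testing subsets, and cut the training set into batches."""
    rng = random.Random(seed)
    shuffled = samples[:]
    rng.shuffle(shuffled)
    n_train = int(len(shuffled) * train_ratio)
    train, test = shuffled[:n_train], shuffled[n_train:]
    batches = [train[i:i + batch_size] for i in range(0, len(train), batch_size)]
    return train, test, batches

samples = list(range(500))  # hypothetical dataset of 500 samples
train, test, batches = split_and_batch(samples, train_ratio=0.5, batch_size=10)

# With these numbers, one epoch consists of 25 training steps of 10 samples each.
print(len(train), len(test), len(batches))  # 250 250 25
```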

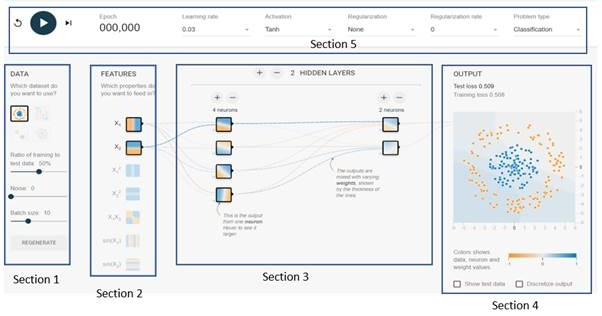

We are now ready to start fiddling around with TensorFlow Playground. We will start with the first dataset, with the following settings on the tuning parameters:

- Learning rate = 0.01

- Activation = Tanh

- Regularization = None

- Regularization rate = 0

- Problem type = Classification

- DATA = Circle

- Ratio of training to test data = 50%

- Batch size = 10

- FEATURES = X1 and X2

- Two hidden/dense layers; the first layer with 4 neurons, and the second layer with 2 neurons
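To get a feel for the size of this network, the sketch below counts its trainable parameters and generates a toy dataset shaped like the Circle problem. Both helpers are hypothetical stand-ins written for this example, not code from TensorFlow Playground, and the single output neuron is an assumption about how the final prediction is produced.

```python
import math
import random

# Inputs X1 and X2 -> 4 neurons -> 2 neurons -> 1 output (assumed).
layer_sizes = [2, 4, 2, 1]

def count_parameters(sizes):
    """Weights plus biases for a stack of fully connected layers."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))

def make_circle_data(n, seed=0):
    """Toy stand-in for the Circle dataset: inner disc = +1, outer ring = -1."""
    rng = random.Random(seed)
    points = []
    for i in range(n):
        label = 1 if i % 2 == 0 else -1
        radius = rng.uniform(0.0, 2.0) if label == 1 else rng.uniform(3.0, 5.0)
        angle = rng.uniform(0.0, 2.0 * math.pi)
        points.append((radius * math.cos(angle), radius * math.sin(angle), label))
    return points

data = make_circle_data(200)
print(count_parameters(layer_sizes))  # 25
print(data[0])
```

The two classes cannot be separated by a single straight line in the X1 and X2 plane, which is why the hidden layers are needed.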

Now start training by clicking the play button in the top-left corner of the dashboard. Moving right from the play/pause button, we can see the number of epochs that have elapsed. At about 200 epochs, pause the training and observe the output section.

The key observations from the dashboard are as follows:

- We can see the performance graph of the network in the right-hand section of the dashboard. The test loss and training loss are the costs of the network on the test and training data, respectively. As discussed previously, the idea is to minimize the cost.

- Below that, you will observe that there is a visualization of how the network has separated or classified the blue dots from the orange ones.

- If we hover the mouse pointer over any of the neurons, we can see what that neuron has learned about separating the blue and orange dots. With that in mind, let's take a closer look at both neurons in the second layer to see what they have learned about the task.

- When we hover over the first neuron in the second layer, we can see that this neuron has done a good job of learning the task at hand. In comparison, the second neuron in the second layer has learned less about the task.

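- That brings us to the dotted lines coming out of the neurons: they represent the corresponding weights of the neuron. The blue dotted lines indicate positive weights, while the orange dotted ones indicate negative weights. They are sometimes loosely called tensors, although strictly speaking a tensor is the multidimensional array of values that flows along such connections.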

Another key observation is that the first neuron in the second layer has a stronger signal coming out of it than the second one. This indicates the influence this neuron has on the overall task of separating the blue and orange dots, which becomes apparent when we compare what it has learned with the overall result in the output visualization.

Now, keeping in mind all the terms we have learned in this chapter, we can play around by changing the parameters and seeing how this affects the overall network. It is even possible to add new layers and neurons.

TensorFlow Playground is an excellent place to reinforce the fundamentals and essential concepts of ANNs.