As you saw earlier in this chapter, having a DevOps culture is about rethinking how engineering teams work together, by breaking the development and operations silos and bringing a new set of tools, in order to implement the best practices. AWS helps to accomplish this in many different ways. For some developers, the world of operations can be scary and confusing, but if you want better cooperation between engineers, it is important to expose every aspect of running a service to the entire engineering organization.

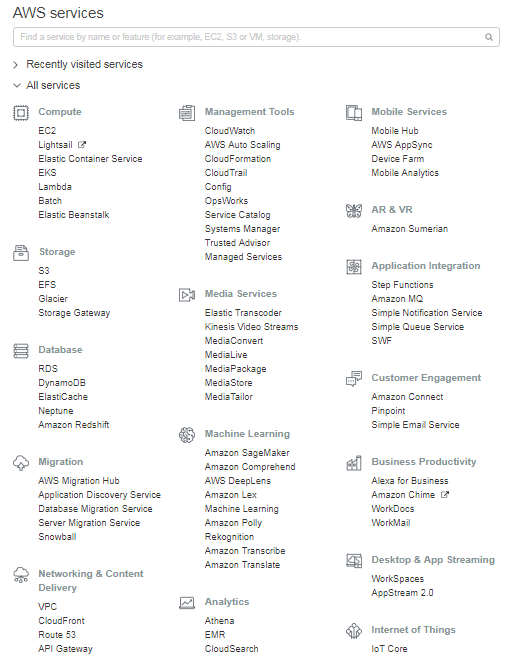

As an operations engineer, you can't have a gatekeeper mentality towards developers. Instead, it's better to make them comfortable by accessing production and working on the different components of the platform. A good way to get started with this is in the AWS console, as follows:

While a bit overwhelming, this is still a much better experience for people who are unfamiliar with navigating this web interface, rather than referring to constantly out-of-date documentation, using SSH and random plays in order to discover the topology and configuration of the service. Of course, as your expertise grows and your application becomes more complex, the need to operate it faster increases, and the web interface starts to show some weaknesses. To get around this issue, AWS provides a very DevOps-friendly alternative. An API is accessible through a command-line tool and a number of SDKs (including Java, JavaScript, Python, .NET, PHP, Ruby Go, and C++). These SDKs let you administrate and use the managed services. Finally, as you saw in the previous section, AWS offers a number of services that fit DevOps methodologies and will ultimately allow us to implement complex solutions in no time.

Some of the major services that you will use, at the computing level are Amazon Elastic Compute Cloud (EC2), the service to create virtual servers. Later, as you start to look into how to scale the infrastructure, you will discover Amazon EC2 Auto Scaling, a service that lets you scale pools on EC2 instances, in order to handle traffic spikes and host failures. You will also explore the concept of containers with Docker, through Amazon Elastic Container Service (ECS). In addition to this, you will create and deploy your application using AWS Elastic Beanstalk, with which you retain full control over the AWS resources powering your application; you can access the underlying resources at any time. Lastly, you will create serverless functions through AWS Lambda, to run custom code without having to host it on our servers. To implement your continuous integration and continuous deployment system, you will rely on the following four services:

- AWS Simple Storage Service (S3): This is the object store service that will allow us to store our artifacts

- AWS CodeBuild: This will let us test our code

- AWS CodeDeploy: This will let us deploy artifacts to our EC2 instances

- AWS CodePipeline: This will let us orchestrate how our code is built, tested, and deployed across environments

To monitor and measure everything, you will rely on AWS CloudWatch, and later, on ElasticSearch/Kibana, to collect, index, and visualize metrics and logs. To stream some of our data to these services, you will rely on AWS Kinesis. To send email and SMS alerts, you will use the Amazon SNS service. For infrastructure management, you will heavily rely on AWS CloudFormation, which provides the ability to create templates of infrastructures. In the end, as you explore ways to better secure our infrastructure, you will encounter Amazon Inspector and AWS Trusted Advisor, and you will explore the IAM and the VPC services in more detail.

United States

United States

Great Britain

Great Britain

India

India

Germany

Germany

France

France

Canada

Canada

Russia

Russia

Spain

Spain

Brazil

Brazil

Australia

Australia

Singapore

Singapore

Hungary

Hungary

Ukraine

Ukraine

Luxembourg

Luxembourg

Estonia

Estonia

Lithuania

Lithuania

South Korea

South Korea

Turkey

Turkey

Switzerland

Switzerland

Colombia

Colombia

Taiwan

Taiwan

Chile

Chile

Norway

Norway

Ecuador

Ecuador

Indonesia

Indonesia

New Zealand

New Zealand

Cyprus

Cyprus

Denmark

Denmark

Finland

Finland

Poland

Poland

Malta

Malta

Czechia

Czechia

Austria

Austria

Sweden

Sweden

Italy

Italy

Egypt

Egypt

Belgium

Belgium

Portugal

Portugal

Slovenia

Slovenia

Ireland

Ireland

Romania

Romania

Greece

Greece

Argentina

Argentina

Netherlands

Netherlands

Bulgaria

Bulgaria

Latvia

Latvia

South Africa

South Africa

Malaysia

Malaysia

Japan

Japan

Slovakia

Slovakia

Philippines

Philippines

Mexico

Mexico

Thailand

Thailand