In this article by Stephane Jourdan and Pierre Pomes, the authors of Infrastructure as Code (IAC) Cookbook, the following sections will be covered:

- Configuring the Terraform AWS provider

- Creating and using an SSH key pair to use on AWS

- Using AWS security groups with Terraform

- Creating an Ubuntu EC2 instance with Terraform


Introduction

A modern infrastructure often uses multiple providers (AWS, OpenStack, Google Cloud, Digital Ocean, and many others), combined with multiple external services (DNS, mail, monitoring, and others). Many providers offer their own automation tool, but the power of Terraform is that it allows you to manage it all from one place, all using code. With it, you can dynamically create machines at two IaaS providers depending on the environment, register their names at another DNS provider, and enable monitoring at a third-party monitoring company, while configuring the company GitHub account and sending the application logs to an appropriate service. On top of that, it can delegate configuration to those who do it well (configuration management tools such as Chef, Puppet, and so on), all with the same tool. The state of your infrastructure is described, stored, versioned, and shared.

In this article, we'll discover how to use Terraform to bootstrap a fully capable infrastructure on Amazon Web Services (AWS), deploying SSH key pairs and securing IAM access keys.

Configuring the Terraform AWS provider

We can use Terraform with many IaaS providers such as Google Cloud or Digital Ocean. Here we'll configure Terraform to be used with AWS.

For Terraform to interact with an IaaS, it needs to have a provider configured.

Getting ready

To step through this section, you will need the following:

- An AWS account with keys

- A working Terraform installation

- An empty directory to store your infrastructure code

- An Internet connection

How to do it…

To configure the AWS provider in Terraform, we'll need the following three files:

- A file declaring our variables, an optional description, and an optional default for each (variables.tf)

- A file setting the variables for the whole project (terraform.tfvars)

- A provider file (provider.tf)

Let's declare our variables in the variables.tf file. We can start by declaring what's usually known as the AWS_DEFAULT_REGION, AWS_ACCESS_KEY_ID, and AWS_SECRET_ACCESS_KEY environment variables:

variable "aws_access_key" {

description = "AWS Access Key"

}

variable "aws_secret_key" {

description = "AWS Secret Key"

}

variable "aws_region" {

default = "eu-west-1"

description = "AWS Region"

}

Set the two variables matching the AWS account in the terraform.tfvars file. It's not recommended to check this file into source control: it's better to commit an example file instead (for example, terraform.tfvars.example). It's also recommended that you use a dedicated Terraform user for AWS, not the root account keys:

aws_access_key = "< your AWS_ACCESS_KEY >"

aws_secret_key = "< your AWS_SECRET_KEY >"

Now, let's tie all this together into a single file—provider.tf:

provider "aws" {

access_key = "${var.aws_access_key}"

secret_key = "${var.aws_secret_key}"

region = "${var.aws_region}"

}

Run the following Terraform command:

$ terraform apply

Apply complete! Resources: 0 added, 0 changed, 0 destroyed.

This output only means the code is valid, not that it can really authenticate with AWS (try with a bad pair of keys). To verify authentication, we'll need to create a resource on AWS.

You now have a new file named terraform.tfstate that has been created at the root of your repository. This file is critical: it's the stored state of your infrastructure. Don't hesitate to look at it: it's a text file.
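Short of creating a resource, one lightweight way to check that the keys really authenticate is to read back the identity of the caller. The following is a minimal sketch, assuming an AWS provider version that ships the aws_caller_identity data source; the label current and the output name aws_account_id are illustrative choices, not part of the original setup:

```hcl
# Reads the identity behind the configured credentials.
# Nothing is created on AWS; the lookup fails if the keys are rejected.
data "aws_caller_identity" "current" {}

# Illustrative output name: prints the AWS account ID the keys belong to.
output "aws_account_id" {
  value = "${data.aws_caller_identity.current.account_id}"
}
```

With a bad pair of keys, this lookup should fail with an authentication error instead of completing silently.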

How it works…

This first encounter with HashiCorp Configuration Language (HCL), the language used by Terraform, looks pretty familiar: we've declared variables with an optional description for reference. We could have declared them simply with the following:

variable "aws_access_key" { }

All variables are referenced using the following structure:

${var.variable_name}

If the variable has been declared with a default, as our aws_region has been declared with a default of eu-west-1, this value will be used if there's no override in the terraform.tfvars file.
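As a small illustration of that override, adding one line to terraform.tfvars replaces the default. The region below is only an example value:

```hcl
# terraform.tfvars
# Overrides the eu-west-1 default declared in variables.tf.
aws_region = "us-east-1"
```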

What would have happened if we didn't provide a safe default for our variable? Terraform would have asked us for a value when executed:

$ terraform apply

var.aws_region

AWS Region

Enter a value:

There's more…

We've used values directly inside the Terraform code to configure our AWS credentials. If you're already using AWS on the command line, chances are you already have a set of standard environment variables:

$ echo ${AWS_ACCESS_KEY_ID}

<your AWS_ACCESS_KEY_ID>

$ echo ${AWS_SECRET_ACCESS_KEY}

<your AWS_SECRET_ACCESS_KEY>

$ echo ${AWS_DEFAULT_REGION}

eu-west-1

If not, you can simply set them as follows:

$ export AWS_ACCESS_KEY_ID="123"

$ export AWS_SECRET_ACCESS_KEY="456"

$ export AWS_DEFAULT_REGION="eu-west-1"

Then Terraform can use them directly, and the only code you have to type would be to declare your provider! That's handy when working with different tools.

The provider.tf file will then look as simple as this:

provider "aws" { }
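A middle ground is also possible. The sketch below assumes the access keys come from the environment variables shown above, while the region still comes from the aws_region variable declared earlier in variables.tf:

```hcl
# provider.tf
# Credentials are picked up from AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY;
# only the region is set explicitly in code.
provider "aws" {
  region = "${var.aws_region}"
}
```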

Creating and using an SSH key pair to use on AWS

Now that we have our AWS provider configured in Terraform, let's add an SSH key pair to use on a default account of the virtual machines we intend to launch soon.

Getting ready

To step through this section, you will need the following:

How to do it…

The resource we want for this is named aws_key_pair. Let's use it inside a keys.tf file, and paste the public key content:

resource "aws_key_pair" "admin_key" {

key_name = "admin_key"

public_key = "ssh-rsa AAAAB3[…]"

}

This will simply upload your public key to your AWS account under the name admin_key:

$ terraform apply

aws_key_pair.admin_key: Creating...

fingerprint: "" => "<computed>"

key_name: "" => "admin_key"

public_key: "" => "ssh-rsa AAAAB3[…]"

aws_key_pair.admin_key: Creation complete

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

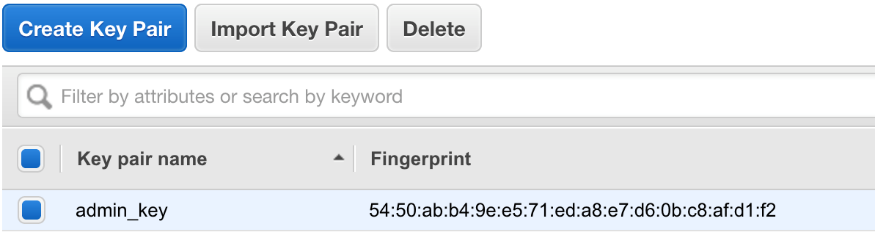

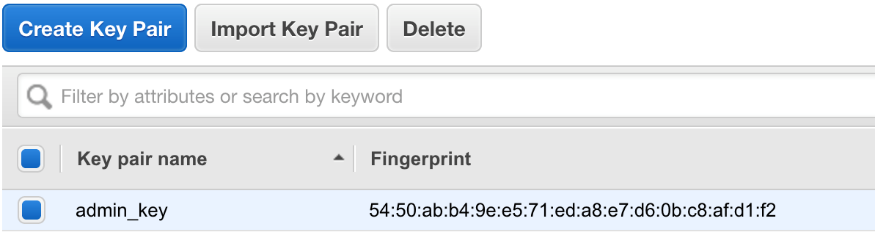

If you manually navigate to your AWS account, under EC2 | Network & Security | Key Pairs, you'll now find your key.


Another way to use our key with Terraform and AWS would be to read it directly from the file, and that would show us how to use file interpolation with Terraform.

To do this, let's declare a new empty variable to store our public key in variables.tf:

variable "aws_ssh_admin_key_file" { }

Initialize the variable to the path of the key in terraform.tfvars:

aws_ssh_admin_key_file = "keys/aws_terraform"

Now let's use it in place of our previous keys.tf code, using the file() interpolation:

resource "aws_key_pair" "admin_key" {

key_name = "admin_key"

public_key = "${file("${var.aws_ssh_admin_key_file}.pub")}"

}

This is a much clearer and more concise way of accessing the content of the public key from the Terraform resource. It's also easier to maintain, as changing the key only requires replacing the file and nothing more.
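If the key path rarely changes, the variable can also carry a default and a description, following the same pattern as aws_region. This is only a sketch, meant to take the place of the empty declaration in variables.tf; the description text is illustrative:

```hcl
# variables.tf
# Replaces the empty declaration of the same variable.
variable "aws_ssh_admin_key_file" {
  default     = "keys/aws_terraform"
  description = "Path to the SSH private key; the public key is expected at the same path with a .pub suffix"
}
```

With this default in place, the corresponding terraform.tfvars entry becomes optional.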

How it works…

Our first resource, aws_key_pair, takes two arguments (a key name and the public key content). All Terraform resources follow this pattern: a resource type, a local name, and a set of arguments.

We used our first file interpolation, combined with a variable, to make our infrastructure code more dynamic.
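Attributes computed by AWS, such as the fingerprint shown in the apply output earlier, can be read back with the same interpolation syntax. A minimal sketch, with admin_key_fingerprint as an illustrative output name:

```hcl
# Illustrative output: the fingerprint AWS computed for the uploaded key.
output "admin_key_fingerprint" {
  value = "${aws_key_pair.admin_key.fingerprint}"
}
```

After an apply, running terraform output admin_key_fingerprint prints the stored value.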

There's more…

Using Ansible, we can create a role to do the same job. Here's how we can manage our EC2 key pair using a variable, under the name admin_key. For simplicity, we're using the three usual environment variables here: AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, and AWS_DEFAULT_REGION.

Here's a typical Ansible file hierarchy:

├── keys
│   ├── aws_terraform
│   └── aws_terraform.pub
├── main.yml
└── roles
    └── ec2_keys
        └── tasks
            └── main.yml

In the main file (main.yml), let's declare that our host (localhost) will apply the role dedicated to managing our keys:

---
- hosts: localhost
  roles:
    - ec2_keys

In the ec2_keys main task file, create the EC2 key (roles/ec2_keys/tasks/main.yml):

---
- name: ec2 admin key
  ec2_key:
    name: admin_key
    key_material: "{{ item }}"
  with_file: './keys/aws_terraform.pub'

Execute the code with the following command:

$ ansible-playbook -i localhost main.yml

TASK [ec2_keys : ec2 admin key] ************************************************

ok: [localhost] => (item=ssh-rsa AAAA[…] aws_terraform_ssh)

PLAY RECAP *********************************************************************

localhost : ok=2 changed=0 unreachable=0 failed=0

Using AWS security groups with Terraform

Amazon's security groups are similar to traditional firewalls, with ingress and egress rules applied to EC2 instances. These rules can be updated on demand. We'll create an initial security group allowing ingress Secure Shell (SSH) traffic only from our own IP address, while allowing all outgoing traffic.

Getting ready

To step through this section, you will need the following:

- A working Terraform installation

- An AWS provider configured in Terraform

- An Internet connection

How to do it…

The resource we're using is called aws_security_group. Here's the basic structure:

resource "aws_security_group" "base_security_group" {

name = "base_security_group"

description = "Base Security Group"

ingress { }

egress { }

}

We know we want to allow inbound TCP/22 for SSH only from our own IP (replace 1.2.3.4/32 with yours!), and allow everything outbound. Here's how it looks:

ingress {

from_port = 22

to_port = 22

protocol = "tcp"

cidr_blocks = ["1.2.3.4/32"]

}

egress {

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

}

You can add a Name tag for easier reference later:

tags {

Name = "base_security_group"

}
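Put together, the fragments above give a complete resource. This is simply the pieces from this section assembled in one place, with the same placeholder address. The tags block is written as it appears in this article; Terraform 0.12 and later expect tags to be assigned as a map instead (tags = { ... }):

```hcl
resource "aws_security_group" "base_security_group" {
  name        = "base_security_group"
  description = "Base Security Group"

  # Inbound: SSH (TCP/22) from a single address only. Replace with your own IP.
  ingress {
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = ["1.2.3.4/32"]
  }

  # Outbound: all protocols and ports, to any destination.
  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # On Terraform 0.12 and later, write this as: tags = { Name = "..." }
  tags {
    Name = "base_security_group"
  }
}
```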

Apply this and you're good to go:

$ terraform apply

aws_security_group.base_security_group: Creating...

[…]

aws_security_group.base_security_group: Creation complete

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

You can see your newly created security group by logging into the AWS Console and navigating to EC2 Dashboard | Network & Security | Security Groups.

Another way of accessing the same AWS console information is through the AWS command line:

$ aws ec2 describe-security-groups --group-names base_security_group

{...}
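Terraform can also report the group's identifier itself, which avoids a separate lookup. A sketch, with base_security_group_id as an illustrative output name:

```hcl
# Illustrative output: the ID AWS assigned to the security group.
output "base_security_group_id" {
  value = "${aws_security_group.base_security_group.id}"
}
```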

There's more…

We can achieve the same result using Ansible. Here's the equivalent of what we just did with Terraform in this section:

---
- name: base security group
  ec2_group:
    name: base_security_group
    description: Base Security Group
    rules:
      - proto: tcp
        from_port: 22
        to_port: 22
        cidr_ip: 1.2.3.4/32

Summary

In this article, you learned how to configure the Terraform AWS provider, create and use an SSH key pair on AWS, and use AWS security groups with Terraform.
