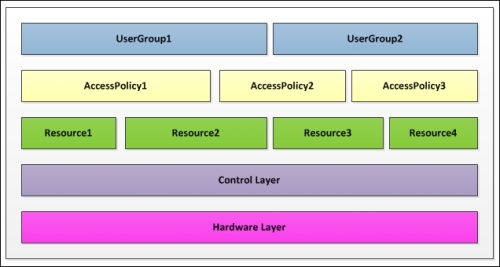

The five-layer model

When designing a new infrastructure, a common mistake is trying to focus on everything at once. A better approach is to divide the solution into layers and then analyze, size, and make decisions one layer at a time.

FlexCast Management Architecture can be divided into the following five layers:

- User layer: This defines user groups and locations

- Access layer: This defines how users access the resources

- Resource layer: This defines which resources are assigned to the given users

- Control layer: This defines the components required to run the solution

- Hardware layer: This defines the physical elements where the software components run

The power of a FlexCast architecture is that it's extremely flexible; different users can have their own set of policies and resources, but everything is managed by a single, integrated control layer, as shown in the following figure:

The five-layer model of FlexCast Management Architecture

The user layer

The need for a new application delivery solution normally comes from user requirements.

The minimum information that must be collected is as follows:

- What users need access to (business applications, a personalized desktop environment, and so on)

- What endpoints the users will use (personal devices, thin clients, smartphones, and so on)

- Where users connect from (company's internal network, unreliable external networks, and so on)

User groups can access more than one resource at a time. For example, office workers can access a shared desktop environment with some common office applications installed and, in addition, use some hosted applications.

The access layer

The access layer defines how users gain access to their resources based on where the user is located and the security policies of the organization.

The infrastructure components that provide access to resources are as follows:

- StoreFront (Web Interface 5.4 is still supported to offer customers additional time to migrate to StoreFront)

- NetScaler Gateway

The following figure demonstrates the access layer:

FlexCast Management Architecture—the access layer

StoreFront

Internal users access a StoreFront store using Citrix Receiver installed on their endpoints or via the StoreFront web interface. StoreFront also offers Receiver for HTML5, which does not require any installation on the user's device.

Upon successful authentication, StoreFront contacts a Delivery Controller to receive the list of available resources and allows the users to mark some of them as favorites.

StoreFront requires Windows Server 2008 R2 SP1 or later, with Microsoft Internet Information Services (IIS) and the .NET Framework. Although it can be installed on a server that runs other components, my suggestion is to install it on at least two dedicated servers with a load balancer in front of them to provide high availability.

Note

Users' subscriptions are automatically synchronized between all the StoreFront servers.

StoreFront is the entry point of your XenApp infrastructure. To correctly size its servers, you will need to estimate or measure the maximum number of concurrent logins (if your infrastructure mainly serves internal users, most of the logins will happen in the morning, when users arrive at the office).

From my experience, StoreFront does not require a lot of hardware resources: a server with two CPUs and 4 GB of memory is enough for up to 1,000 user connections per hour.
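The rule of thumb above can be turned into a quick sizing estimate. The following is a minimal sketch, not a Citrix formula: the function name is hypothetical, and adding one spare server on top of the computed count (N+1) is my own assumption so that the peak is still covered if one server fails.

```python
import math

# Rule of thumb from the text: one StoreFront server (2 CPUs, 4 GB of memory)
# is enough for up to 1,000 user connections per hour.
CONNECTIONS_PER_SERVER_PER_HOUR = 1000


def storefront_servers(peak_connections_per_hour: int, min_servers: int = 2) -> int:
    """Return a rough StoreFront server count for the given peak logon rate."""
    if peak_connections_per_hour < 0:
        raise ValueError("peak_connections_per_hour must not be negative")
    needed = math.ceil(peak_connections_per_hour / CONNECTIONS_PER_SERVER_PER_HOUR)
    # Assumption: add one spare server (N+1) and never go below the two
    # load-balanced servers suggested for high availability.
    return max(min_servers, needed + 1)


if __name__ == "__main__":
    for peak in (300, 1000, 2500):
        print(peak, "connections/hour ->", storefront_servers(peak), "servers")
```

Treat the result as a starting point only; the measured peak logon rate of your own environment should drive the final number.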

NetScaler Gateway™

Remote users connect and authenticate to NetScaler Gateway, which is located within the network's DMZ.

Note

NetScaler Gateway is available both as a hardware appliance (MPX) and a virtual appliance (VPX); the virtual appliance delivers the same features and functionality as the physical one. The main difference between VPX and MPX concerns SSL: MPX has dedicated offload capabilities.

An MPX appliance has a single, dual-core processor and 4 GB of memory, while the virtual appliance, which can run on VMware ESXi, Microsoft Hyper-V, and XenServer, requires at least 2 virtual CPUs, 4 GB of memory, and 20 GB of disk space.

NetScaler Gateway establishes a secure SSL channel with the user's device, and all the traffic is encapsulated in that channel.

Upon successful validation, NetScaler Gateway forwards the user request to the internal StoreFront servers, which then generate a list of the available resources that is passed back to the user through NetScaler Gateway.

When the user launches a resource, all the traffic between the server that hosts the resource and the user's device is again encapsulated in the SSL channel.

To provide high availability, you can deploy two NetScaler Gateway appliances in a failover configuration; if the primary (active) appliance fails, the secondary one becomes active. The HA feature is native and part of the NetScaler license; it does not require any external clustering solutions (Microsoft Cluster…).

Access policies

In most environments, there are different security policies based on the users' locations; for example, users in the internal network can authenticate using only the username and password, while external users might need to enter a token code as well (multifactor authentication).

In NetScaler Gateway, you can configure session policies to differentiate between incoming connections by analyzing elements such as IP addresses, HTTP headers, SSL certificates, and so on. Moreover, you can combine different expressions using logical operators (OR and AND), use built-in functions to test whether the client is running an up-to-date antivirus, and perform string matching with regular expressions.

Policies do not take actions; they provide the logic to evaluate traffic. To perform an operation based on a policy's evaluation, you have to configure actions and profiles and associate them with policies. Policies can be applied at user, group, and server levels; all the applicable policies are inspected, and the one with the lowest priority number (that is, the highest precedence) wins.

Actions are steps that NetScaler takes; for example, you can allow an incoming connection if it matches the associated policy and deny it if not.

Profiles are collections of settings, for example, the session timeout (in minutes) or the URL of the Web Interface or StoreFront server.
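To make the relationship between policies, priorities, and actions more concrete, here is a simplified model of the evaluation logic. It is only an illustrative sketch: the `Policy` class, the request dictionary, and the `ALLOW`/`DENY` labels are hypothetical and do not reflect NetScaler's real expression syntax or configuration objects.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Policy:
    name: str
    priority: int                     # lower number = higher precedence
    matches: Callable[[dict], bool]   # the expression evaluated against a request
    action: str                       # illustrative label, e.g. "ALLOW" or "DENY"


def select_policy(policies: List[Policy], request: dict) -> Optional[Policy]:
    """Inspect all applicable policies and return the one that wins."""
    applicable = [p for p in policies if p.matches(request)]
    # Among the policies whose expression matches, the lowest priority number wins.
    return min(applicable, key=lambda p: p.priority, default=None)


# Hypothetical policies: external users without a token code are denied,
# any other authenticated user is allowed.
POLICIES = [
    Policy("external-without-token", 10,
           lambda r: not r["internal"] and not r["token"], "DENY"),
    Policy("authenticated-user", 100,
           lambda r: r["authenticated"], "ALLOW"),
]

if __name__ == "__main__":
    request = {"internal": False, "token": False, "authenticated": True}
    winner = select_policy(POLICIES, request)
    print(winner.name if winner else "no policy matched")
```

In this model, both policies match an external user who authenticated without a token code, and the policy with priority 10 takes precedence over the one with priority 100.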

To help system administrators create the correct policies and profiles for the most common clients (Citrix Receiver, Receiver for Web, Clientless Access, and so on), the Quick Configuration Wizard runs automatically the first time you configure NetScaler Gateway.

The resource layer

A XenApp infrastructure is designed and implemented to offer resources to the users.

XenApp offers different strategies to deliver applications. The choice of the correct strategy has a high impact on the infrastructure. Later in this chapter, you'll learn how to size the servers based on the number and type of applications.

Hosted apps

Hosting apps is the most common strategy: applications are installed and published on servers with a delivery agent installed (worker servers). Each application is assigned to a delivery group; when accessed, the application executes on the hosting server, and the user interface is displayed on the user's desktop. This is the best approach for standard, business applications.

VM-hosted apps

Some applications might not run on server-based operating systems such as Windows Server 2012, or might have particular license or software requirements that conflict with other installed applications. Using the VM-hosted apps strategy, these applications are installed on virtual machines based on client operating systems (such as Windows 7). When a user requires one of the applications, it runs on the VM, and its user interface is displayed on the user's desktop.

Because applications are installed on a client operating system that does not support more than one user connected at a time, you need at least one VM for each concurrent execution of the hosted application.

Streamed apps

Applications are not installed on the server or user's desktop. Using a solution such as Microsoft App-V or VMware ThinApp, they are dynamically delivered to the target server or desktop when accessed. Such solutions require an external infrastructure. They are normally chosen when there are a large number of different applications to be delivered or when applications are resource-intensive and must therefore run on the end user's device. Applications have to be carefully evaluated and packaged prior to streaming; refer to Microsoft's and VMware's documentation for more detailed information.

Shared desktops

Users connect to a hosted server-based Windows operating system where the virtual machine is shared among a pool of users simultaneously. In this scenario, each user is encapsulated within their own session, and the desktop interface is remotely displayed.

Remote PC Access

Users connect to their physical office desktops, allowing them to work at any time. Remote PC Access uses the Citrix HDX policies and access layer, providing better security and performance than a simple RDP connection. Moreover, the connections can be managed using the centralized delivery console.

The control layer

The control layer includes all the components that are required to run the infrastructure:

- Delivery Controllers

- SQL databases

- License servers

Delivery Controllers

A Delivery Controller is responsible for distributing applications and desktops, managing user access, and optimizing connections to applications. Each Site has one or more Delivery Controllers.

A Delivery Controller is the heart of a XenApp infrastructure; it is queried when a user logs in, when the user launches an application, and when policies are evaluated. It's therefore important to correctly size the servers that host this component.

The minimum requirements are as follows:

- 2 vCPU and 4 GB of memory

- 100 MB of disk space to install the software

- Windows Server 2008 R2 Service Pack 1 or later

In my experience, Delivery Controllers consume a lot of CPU; this is especially true at those times of the day (for example, in the morning) when most of the users start working. If the CPU usage of the Delivery Controller servers reaches a critical threshold, roughly 80 percent, you need to scale up or scale out.

If you're using a virtualized environment, it is usually easy to add virtual CPUs to the servers; an alternative is to add another controller to the Site configuration.

Your infrastructure should have at least two Delivery Controllers to provide high availability. You can add more Delivery Controllers and the load will be evenly distributed across all the controllers, thus helping to reduce the overall load on each single controller.
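The scale-out decision described above can be sketched as a small calculation. This is only a rough model under stated assumptions: it takes the 80 percent threshold from the text, assumes the load is spread evenly across controllers and scales linearly, and uses a 60 percent post-scaling target that is my own hypothetical value, not a Citrix recommendation.

```python
import math

CPU_THRESHOLD = 80.0   # percent; the rough critical threshold mentioned in the text
TARGET_CPU = 60.0      # percent; hypothetical target after scaling out
MIN_CONTROLLERS = 2    # at least two controllers for high availability


def controllers_needed(current: int, peak_cpu_percent: float) -> int:
    """Return how many Delivery Controllers to run, given the measured peak CPU."""
    if current < 1:
        raise ValueError("current must be at least 1")
    if peak_cpu_percent < CPU_THRESHOLD:
        # Below the threshold: keep the current count, but never fewer than two.
        return max(MIN_CONTROLLERS, current)
    # Assumption: load is evenly distributed, so total demand is current * peak CPU.
    total_demand = current * peak_cpu_percent
    return max(MIN_CONTROLLERS, math.ceil(total_demand / TARGET_CPU))


if __name__ == "__main__":
    print(controllers_needed(2, 90.0))  # two controllers peaking at 90 percent
    print(controllers_needed(2, 50.0))  # two controllers peaking at 50 percent
```

Scaling up (adding virtual CPUs) is not modeled here; the sketch covers only the option of adding controllers to the Site.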

Tip

In a virtualized environment, the controllers should be distributed across multiple physical servers to help spread the CPU load and provide greater levels of fault tolerance. On VMware, for example, you can configure an anti-affinity rule to ensure the virtual servers are never placed on the same physical host.

SQL databases

All the configuration settings, the log of changes, and the monitoring events of your XenApp infrastructure are stored in SQL databases.

XenApp 7.5 supports the following Microsoft SQL Server versions:

- SQL Server 2008 R2 SP2 Express, Standard, Enterprise, and Datacenter editions

- SQL Server 2012 SP1 Express, Standard, Enterprise, and Datacenter editions

If no SQL Server is found, SQL Server 2012 SP1 Express Edition is installed during the installation of the first controller of your Site. The free Express Edition is, however, suitable only for small installations (fewer than 100 users).

Note

To provide high availability, XenApp 7.5 supports the following SQL Server features:

- Clustered instances

- Mirroring

- AlwaysOn Availability Groups (SQL Server 2012 only)

By default, the Configuration Logging and Monitoring databases (usually called the secondary databases) are located on the same server as the Site Configuration database. Initially, a single database is used for all three datastores, as shown in the following screenshot:

The Site, Logging, and Monitoring databases

The Site database

The Site database contains configuration information on how the system runs.

Its size and load increase during peak hours, as each user logon requires multiple transactions to be carried out and generates session and connection information that has to be tracked.

If the Site database becomes unavailable, the system is unable to accept new users, while the existing connections are maintained.

Note

Delivery Controllers don't have a Local Host Cache (LHC) like the datastore servers had in previous versions of XenApp. Thanks to LHC, the infrastructure could keep running even if the database server was unavailable; with the absence of LHC in XenApp 7.5, the database server has become a more critical element of the infrastructure.

The maximum size is usually reached after 48 hours as a small log of connections is maintained within the Site database for two days.

From my experience, the Site database does not require much storage. A typical storage requirement is as follows (refer to http://support.citrix.com/article/CTX139508):

| Number of users | Number of applications | Database size |
|---|---|---|
| 100 | 50 | 50 MB |
| 100 | 250 | 150 MB |
| 1,000 | 50 | 70 MB |

The Monitoring database

The Monitoring database contains historical information used by Director.

The retention period depends on the XenApp license you own:

- For non-platinum customers, the default and maximum period is seven days

- For platinum customers, the default period is 90 days with no maximum period

Updates to the Monitoring database are performed in batches and the number of transactions per second is usually low (less than 20). Overnight processing is performed to remove obsolete data.

If the Monitoring database becomes unavailable, the system works but data is not collected and not visible within Director.

Of the three databases, the Monitoring database is expected to grow the largest over time. Its size depends on many factors; however, a realistic estimate is that 1,000 users working 5 days/week generate 20-30 MB of data each week.
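Combining this growth estimate with the retention period gives a rough idea of how large the Monitoring database can become. The sketch below assumes that growth scales linearly with the number of users and with the retention period, which is my own simplification; the function name and parameters are hypothetical.

```python
from typing import Tuple

# Estimate from the text: 1,000 users working 5 days/week generate 20-30 MB per week.
MB_PER_1000_USERS_PER_WEEK = (20.0, 30.0)


def monitoring_db_size_mb(users: int, retention_days: int) -> Tuple[float, float]:
    """Return a (low, high) size estimate in MB for the retained monitoring data."""
    if users < 0 or retention_days < 0:
        raise ValueError("users and retention_days must not be negative")
    scale = users / 1000        # assumption: linear in the number of users
    weeks = retention_days / 7  # assumption: linear in the retention period
    low, high = MB_PER_1000_USERS_PER_WEEK
    return (low * scale * weeks, high * scale * weeks)


if __name__ == "__main__":
    # Seven days is the default retention for non-platinum customers,
    # 90 days is the default for platinum customers.
    for days in (7, 90):
        low, high = monitoring_db_size_mb(2500, days)
        print(f"2,500 users, {days} days: {low:.0f}-{high:.0f} MB")
```

The estimate ignores database overhead such as indexes and transaction logs, so consider it a lower bound when allocating storage.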

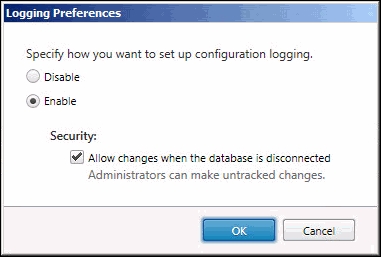

The Configuration Logging database

The Configuration Logging database contains a historical log of all the configuration changes. The information is used by audit reports.

The size and transaction rate are hard to predict; they depend on how much configuration activity is performed. This is usually the smallest and least-loaded database of the three.

The Configuration Logging database has no retention policy; data is not removed unless the administrator deletes it manually.

The administrator can configure the Site database to accept or refuse configuration changes when the Configuration Logging database is unavailable, as shown in the following screenshot:

Changing logging preferences

Moving databases

Citrix recommends that you change the location of the secondary databases after you create a Site database.

You can simply change the location of the databases from Studio, as follows:

- Select Configuration in the left-hand pane.

- Select the database you want to change the location for.

- Click on Change Database in the Actions pane.

- Specify the location of the new database server.

If you specify a new location, remember that the data in the previous database is not imported to the new one and that logs cannot be aggregated from both the databases.

You can also migrate the databases from the current database server to the new one without losing data. First, you must stop configuration logging and monitoring to make sure that no new data is written to the databases during the move.

Then, start Windows PowerShell and type the following commands:

PS C:\> asnp Citrix*

PS C:\> Set-LogSite -State "Disabled"

PS C:\> Set-MonitorConfiguration -DataCollectionEnabled $False

Now, you can back up the existing databases and restore them onto the new server.

After having configured the new database location as explained earlier, remember to enable configuration logging and monitoring again with the following commands:

PS C:\> Set-LogSite -State "Enabled"

PS C:\> Set-MonitorConfiguration -DataCollectionEnabled $True

Tip

asnp is an alias for Add-PSSnapin; the first line loads the Citrix-specific PowerShell snap-ins, which are required to run PowerShell commands and scripts that interact with Citrix products.

Scaling

If your infrastructure grows and more controllers are brought online, the SQL database CPU will eventually become a bottleneck.

To expand the size of a single XenApp installation, you can opt for one of the following options:

- Divide: This is used to move secondary databases to a new server.

- Scale up: This is used to add additional CPU resources to your database server.

- Scale out: This is used to create a second XenApp Site alongside the initial one. Note that the Sites would be semi-independent of each other; some components may be shared (such as the access layer), but the controllers and the SQL databases would only function within their own Site.

License servers

The license server stores and manages Citrix licenses. The first time a user connects to a XenApp server, the server checks out a license for the user, and subsequent connections of the same user share the same license.

A single license server is enough for Sites with thousands of servers and users; you could install a second license server in your Site, but the two servers cannot share licenses. Because the license server is contacted when the user connects to a XenApp server, slow responses from it might increase the login time. You should place the license service on a dedicated server or, in the case of smaller infrastructures, on a server that doesn't publish applications. The license server process is single-threaded, so multiple processors do not increase its performance.

If the license server is not available, all the servers in your Site enter a grace period of 720 hours; during this period, users are still allowed to connect. This means that you usually don't need a high-availability solution for your license server; if a server fault occurs, you can install a new license server during the 30 days of the grace period or power on a second license server you prepared and kept turned off (cold standby).
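The grace-period arithmetic is simple, but it is useful to have when planning a recovery. The following minimal sketch converts the 720 hours into a deadline; the function names are hypothetical, and the outage start time is something you would take from your own monitoring.

```python
from datetime import datetime, timedelta

# Grace period from the text: 720 hours, that is, 30 days.
GRACE_PERIOD = timedelta(hours=720)


def grace_period_end(outage_start: datetime) -> datetime:
    """Return the moment at which the grace period expires."""
    return outage_start + GRACE_PERIOD


def days_left(outage_start: datetime, now: datetime) -> float:
    """Return the remaining grace period in days (0.0 once it has expired)."""
    remaining = grace_period_end(outage_start) - now
    return max(0.0, remaining.total_seconds() / 86400)


if __name__ == "__main__":
    start = datetime(2024, 1, 1, 8, 0)
    print("Grace period ends:", grace_period_end(start))
    print("Days left on Jan 11:", days_left(start, datetime(2024, 1, 11, 8, 0)))
```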

The hardware layer

The hardware layer is the physical implementation of the XenApp solution. After having collected all of the required information for previous layers, we're now ready to choose the correct number of servers and their hardware configuration.

The two different sets of hardware are defined as follows:

- One for the resource layer

- One for the access and control layers

Hosting resources

For server-hosted resources (hosted apps, shared desktops, and VM-hosted apps), a set of physical or virtual machines is required.

VM-hosted apps run on client operating systems, and each user must be provided with a dedicated machine; therefore, the number of machines depends on the number of concurrent users. For hosted apps or shared desktops, the number of servers you need and their hardware configuration depend on the number of users and applications, and even more on the kind of applications and how you deliver them to the users.

The use of a virtual infrastructure is highly recommended; it lets you quickly reallocate resources (CPU, memory, and so on) if required. Later in this chapter, you'll learn how to use a new delivery fabric solution by Citrix, Machine Creation Services (MCS), to deploy new servers in minutes, working together with your virtualization technology.

My suggestion for correctly sizing your infrastructure is to set up a test environment where you can verify the load that each application produces using real users or automatic testing tools such as Login VSI or HP LoadRunner (Citrix EdgeSight for Load Testing is now deprecated and no longer supported).

Tip

With XenApp 7.5, the hardware layer can also include cloud-hosted desktops and apps with the integration of Amazon AWS, CloudPlatform, and Microsoft Azure.

Amazon AWS only supports Server OS machines.

I found that the new delivery agent is lighter than the IMA service installed on session host servers in XenApp 6.5; therefore, you might save some hardware resources when moving to the new 7.5 version.

Applications on servers – siloed versus nonsiloed

Two strategies for placing hosted applications on servers are available: siloed and nonsiloed.

Siloed

In this approach, applications are installed on small groups of servers; you could even have the servers running a single application. Applications are usually grouped by their use; for example, all the applications used by the Finance department are installed on the same servers, while the applications used by the HR department are installed on different servers.

This approach is sometimes required if your applications have different hardware requirements or might cause conflicts if installed on the same server. Moreover, some application vendors don't offer a different licensing agreement if their applications are published through XenApp. So, if you pay licensing fees based simply on the number of installations, you might reduce the cost by installing the applications on a small number of servers.

Siloed applications can increase costs as they require more resources for standby; they require more hot spare servers (at least one for each silo) than the nonsiloed approach. If silos are necessary for isolation, you can consider using app streaming.

Nonsiloed

In this approach, all the applications are installed on all the servers. This approach is more efficient as it reduces the number of required servers, and it may also improve the user experience because it allows users to share the same server session with different applications. If you're using any automatic technology to deploy servers, a nonsiloed approach will also help you to reduce the number of different images you have to create and maintain.
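The difference in hot spare servers between the two strategies can be illustrated with a quick comparison. This is a deliberately simplified sketch: it assumes every server supports the same number of users regardless of the application mix, and the department names, user counts, and density of 50 users per server are hypothetical values chosen only for illustration.

```python
import math
from typing import Dict


def servers_siloed(silo_users: Dict[str, int], users_per_server: int,
                   spares_per_silo: int = 1) -> int:
    """Each silo is sized on its own and needs at least one hot spare."""
    return sum(math.ceil(users / users_per_server) + spares_per_silo
               for users in silo_users.values())


def servers_nonsiloed(total_users: int, users_per_server: int, spares: int = 1) -> int:
    """All applications on all servers: one shared pool and a shared spare."""
    return math.ceil(total_users / users_per_server) + spares


if __name__ == "__main__":
    silos = {"Finance": 120, "HR": 40, "Sales": 200}  # hypothetical user counts
    density = 50                                       # hypothetical users per server
    print("Siloed:   ", servers_siloed(silos, density))
    print("Nonsiloed:", servers_nonsiloed(sum(silos.values()), density))
```

With these sample numbers, the siloed layout needs more servers both because each silo rounds up separately and because each silo carries its own spare.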

My suggestion is to use the nonsiloed approach when possible. Later in this book, you'll learn that with session machine catalogs and delivery groups, you will still be able to logically group applications on servers even with this approach.

Supporting the infrastructure

A typical XenApp 7.5 infrastructure has a couple of StoreFront servers, a couple of Delivery Controllers, one license server, and one database server (clustered to provide high availability). If you have external users, a VPN solution (NetScaler Gateway or third-party products) is usually added.

When sizing the infrastructure, consider that StoreFront and Delivery Controller servers can be easily scaled out by adding new servers, while you can't have two active database servers in your Site to balance the load or add another database server to your Site. Moreover, if you're using a shared database server (for example, a database server that is also hosting some production databases), keep in mind that the load generated by XenApp will not be uniform during the day; it will probably peak in the morning and after lunchtime.

Although Citrix supports the installation of a Delivery Controller, license server, and StoreFront on the same server (and this is the default option during the setup), my suggestion is, wherever possible, to use different servers, preferably virtualized ones. If your virtualized environment has built-in HA functionality (such as VMware HA or Hyper-V clustering), you might deploy only one Delivery Controller and one StoreFront server to start and then add new servers if needed.

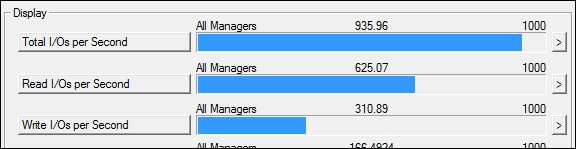

Storage

Storage is often considered one of the most important and complex decisions in a XenApp or XenDesktop solution.

Storage is not only the amount of disk space that must be allocated to the solution but also the number of input/output operations per second (IOPS) that must be available in order to provide a good user experience.

It might seem strange at first, but when you're choosing the correct storage solution for your new infrastructure, the most critical information is the maximum number of IOPS the storage platform you're evaluating can offer. Almost any storage system can be upgraded to add more disk space, while the maximum number of IOPS can be limited by the type of connection (Fibre Channel, iSCSI, and so on), its speed, or the storage controllers, which are elements that are difficult or expensive to replace when in production.

As a rule of thumb, you can consider 10-15 IOPS for each virtual desktop running office applications and 5-8 IOPS for each user running applications on worker servers.
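Applied to a concrete environment, this rule of thumb gives a range of IOPS that the storage platform should sustain. The sketch below simply multiplies the per-desktop and per-user figures from the text; the function name and the sample workload are hypothetical, and it does not account for boot or logon storms.

```python
from typing import Tuple

# Rule-of-thumb ranges from the text.
IOPS_PER_VIRTUAL_DESKTOP = (10, 15)   # desktops running office applications
IOPS_PER_HOSTED_APP_USER = (5, 8)     # users running applications on worker servers


def estimate_iops(virtual_desktops: int, hosted_app_users: int) -> Tuple[int, int]:
    """Return a (low, high) estimate of the IOPS the storage must provide."""
    if virtual_desktops < 0 or hosted_app_users < 0:
        raise ValueError("counts must not be negative")
    low = (virtual_desktops * IOPS_PER_VIRTUAL_DESKTOP[0]
           + hosted_app_users * IOPS_PER_HOSTED_APP_USER[0])
    high = (virtual_desktops * IOPS_PER_VIRTUAL_DESKTOP[1]
            + hosted_app_users * IOPS_PER_HOSTED_APP_USER[1])
    return (low, high)


if __name__ == "__main__":
    low, high = estimate_iops(virtual_desktops=200, hosted_app_users=500)
    print(f"Estimated steady-state load: {low}-{high} IOPS")
```

Compare the upper bound with the maximum IOPS measured on the storage platform you are evaluating, leaving some headroom for peaks.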

Tip

You can use the free Iometer tool (http://www.iometer.org) to test the performance of your storage subsystem.

An example of using the Iometer tool is shown in the following screenshot:

Using Iometer to test the maximum number of IOPS