This article is an excerpt from the book, Pretrain Vision and Large Language Models in Python, by Emily Webber. This book will help you pretrain and fine-tune your own foundation models from scratch on AWS and Amazon SageMaker, while applying them to hundreds of use cases across your organization.

Stable Diffusion is a revolutionary model that allows you to unleash your creativity by generating captivating images through natural language prompts. This article explores the power of Stable Diffusion in producing high-resolution, black-and-white masterpieces, inspired by renowned artists like Ansel Adams. Discover valuable tips and techniques to enhance your Stable Diffusion results, including adding descriptive words, utilizing negative prompts, upscaling images, and maximizing precision and detail. Dive into the world of hyperparameters and learn how to optimize guidance, seed, width, height, and steps to achieve stunning visual outcomes. Unleash your artistic vision with Stable Diffusion's endless possibilities.

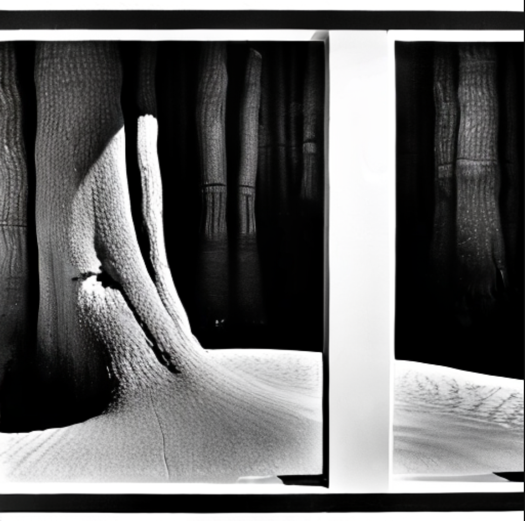

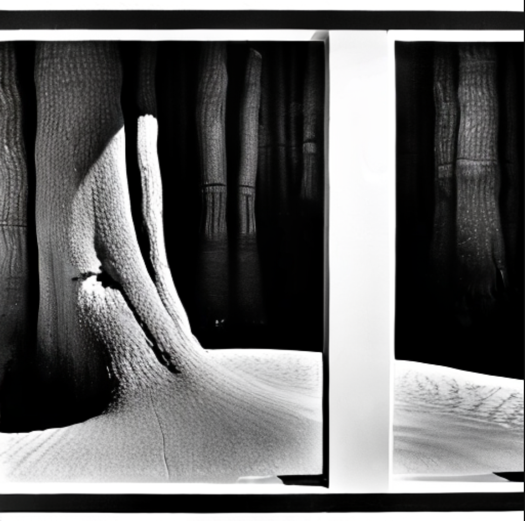

Stable Diffusion is a great model that you can interact with via natural language to produce new images. The beauty, fun, and simplicity of Stable Diffusion-based models is that you can be endlessly creative in designing your prompt. In this example, I made up a provocative title for a work of art. I asked the model to imagine what it would look like if created by Ansel Adams, a famous American photographer from the mid-twentieth century known for his black-and-white photographs of the natural world. Here was the full prompt: “Closed is open” by Ansel Adams, high resolution, black and white, award-winning. Guidance (20). Let’s take a closer look.

Figure 1 – An image generated by Stable Diffusion

In the following list, you’ll find a few helpful tips to improve your Stable Diffusion results:

- Add any of the following words to your prompt: Award-winning, high resolution, trending on <your favorite site here>, in the style of <your favorite artist here>, 400 high dpi, and so on. There are thousands of examples of great photos and their corresponding prompts online; a great site is Lexica. Starting from what works is always a great path. If you’re passionate about vision, you can easily spend hours just poring over these and finding good examples. For a faster route, that same site lets you search for words as a prompt and renders the images. It’s a quick way to get started with prompting your model.

- Add negative prompts: Stable Diffusion offers a negative prompt option, which lets you provide words to the model that it will explicitly not use. Common examples of this are hands, humans, oversaturated, poorly drawn, and disfigured.

- Upscaling: While most prompting with Stable Diffusion results in smaller images, such as 512x512, you can use another technique, called upscaling, to render that same image into a much larger, higher-quality image of 1,024x1,024 or even more. Upscaling is a great step you can use to get the best quality out of Stable Diffusion models today, both on SageMaker (2) and through Hugging Face directly. (3) We’ll dive into this in a bit more detail in the upcoming section on image-to-image.

- Precision and detail: When you provide longer prompts to Stable Diffusion, such as including more terms in your prompt and being extremely descriptive about the types and styles of objects you’d like it to generate, you actually increase your odds of the response being good. Be careful about the words you use in the prompt. As we learned earlier in the chapter on bias, most large models are trained on the backbone of the internet. With Stable Diffusion, for better or for worse, this means you want to use language that is common online. This means that punctuation and casing actually aren’t as important, and you can be really creative and spontaneous with how you’re describing what you want to see.

- Order: Interestingly, the order of your words matters in prompting Stable Diffusion. If you want to make some part of your prompt more impactful, such as dark or beautiful, move that to the front of your prompt. If it’s too strong, move it to the back.

- Hyperparameters: These are also relevant in language-only models, but let’s call out a few that are especially relevant to Stable Diffusion.
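
The prompt-writing tips above can be sketched in a few lines of Python. This is a minimal illustration, not the author's code: `build_prompt` and `build_negative_prompt` are hypothetical helpers, and the `prompt` and `negative_prompt` keys are assumed to match the argument names used by the Hugging Face diffusers text-to-image pipelines. Other toolkits may name them differently.

```python
def build_prompt(subject, style_terms=(), emphasis=()):
    """Join prompt parts with commas, placing emphasized terms first."""
    # Order matters: terms at the front tend to carry more weight.
    parts = [*emphasis, subject, *style_terms]
    # Drop empty entries and stray whitespace so the prompt stays clean.
    return ", ".join(p.strip() for p in parts if p and p.strip())


def build_negative_prompt(terms):
    """Join the terms the model should steer away from."""
    return ", ".join(t.strip() for t in terms if t and t.strip())


# The prompt used for the Ansel Adams example in this article.
prompt = build_prompt(
    subject='"Closed is open" by Ansel Adams',
    style_terms=["high resolution", "black and white", "award-winning"],
)

# The common negative terms listed in the tips above.
negative_prompt = build_negative_prompt(
    ["hands", "humans", "oversaturated", "poorly drawn", "disfigured"]
)

# Assumed keyword names (diffusers style); adjust for your own toolkit.
request = {"prompt": prompt, "negative_prompt": negative_prompt}
print(request)
```

To make a term such as dark more impactful, pass it through `emphasis` so it lands at the front of the prompt; if it turns out too strong, move it into `style_terms` so it lands at the back.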

Key hyperparameters for Stable Diffusion prompt engineering

- Guidance: The technical term here is classifier-free guidance, and it refers to a mode in Stable Diffusion that lets the model pay more (higher guidance) or less (lower guidance) attention to your prompt. This ranges from 0 up to 20. A lower guidance term means the model is optimizing less for your prompt, and a higher term means it’s entirely focused on your prompt. For example, in my image in the style of Ansel Adams above, I just updated the guidance term from 8 to 20. In the guidance=8 image in Figure 13.3, you see a rolling base and gentle shadows. However, when I updated to guidance=20 for the second image, the model captured the stark contrast and shadow fades that characterized Adams’ work. In addition, we have a new style, almost like M. C. Escher, where the tree seems to turn into the floor.

- Seed: This refers to an integer you can set to fix the random starting point of your diffusion process. Setting the seed can have a big impact on your model response. Especially if my prompt isn’t very good, I like to start with the seed hyperparameter and try a few random starts. Seed impacts high-level image attributes such as style, size of objects, and coloration. If your prompt is strong, you may not need to experiment heavily here, but it’s a good starting point.

- Width and height: These are straightforward; they’re just the pixel dimensions of your output image! You can use them to change the scope of your result, and hence the type of picture the model generates. If you want a perfectly square image, use 512x512. If you want a portrait orientation, use 512x768. For landscape orientation, use 768x512. Remember you can use the upscaling process we’ll learn about shortly to increase the resolution on the image, so start with smaller dimensions first.

- Steps: This refers to the number of denoising steps the model will take as it generates your new image, and most people start with steps set to 50. Increasing this number will also increase the processing time. To get great results, personally, I like to scale this against guidance. If you plan on using a very high guidance term (~16), such as with a killer prompt, then I wouldn’t set inference steps to anything over 50. This looks like it overfits, and the results are just plain bad. However, if your guidance scale is lower, closer to 8, then increasing the number of steps can get you a better result.
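
The hyperparameters above can be collected in one place and handed to a pipeline. The sketch below is an illustration under stated assumptions rather than a definitive implementation: `GenerationSettings` and `generate` are hypothetical names, the keyword arguments (`guidance_scale`, `num_inference_steps`, `width`, `height`, `generator`, `negative_prompt`) are assumed to follow the Hugging Face diffusers `StableDiffusionPipeline` call, and `pipe` is assumed to be a pipeline you have already loaded yourself. The multiple-of-8 check reflects the dimension constraint that diffusers enforces for Stable Diffusion.

```python
from dataclasses import dataclass


@dataclass
class GenerationSettings:
    """Hypothetical container for the hyperparameters discussed above."""
    guidance_scale: float = 8.0
    seed: int = 0
    width: int = 512
    height: int = 512
    steps: int = 50

    def validate(self):
        if self.guidance_scale < 0:
            raise ValueError("guidance_scale must be non-negative")
        # diffusers rejects Stable Diffusion dimensions not divisible by 8.
        if self.width % 8 or self.height % 8:
            raise ValueError("width and height must be multiples of 8")
        if self.steps < 1:
            raise ValueError("steps must be at least 1")
        return self

    def to_pipeline_kwargs(self):
        """Map the settings to diffusers-style argument names (assumed)."""
        return {
            "guidance_scale": self.guidance_scale,
            "width": self.width,
            "height": self.height,
            "num_inference_steps": self.steps,
        }


def generate(pipe, prompt, settings, negative_prompt=None):
    """Run one generation; `pipe` is an already loaded diffusers pipeline."""
    import torch  # imported lazily so the settings code runs without torch

    settings.validate()
    # A seeded generator makes the starting noise, and so the image, repeatable.
    generator = torch.Generator(device="cpu").manual_seed(settings.seed)
    result = pipe(
        prompt,
        negative_prompt=negative_prompt,
        generator=generator,
        **settings.to_pipeline_kwargs(),
    )
    return result.images[0]


# Portrait orientation at the high guidance value used for the second image.
portrait = GenerationSettings(guidance_scale=20, seed=42, width=512, height=768)
print(portrait.validate().to_pipeline_kwargs())

# Try a few seeds with everything else fixed, as suggested for weak prompts.
seed_sweep = [GenerationSettings(seed=s) for s in (1, 2, 3)]
print([s.seed for s in seed_sweep])
```

Keeping the settings in a small dataclass makes it easy to change one hyperparameter at a time, for example sweeping the seed first and only then adjusting guidance and steps together.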

Summary

In conclusion, Stable Diffusion offers a fascinating avenue for creative expression through its image-generation capabilities. By employing strategic prompts, negative constraints, upscaling techniques, and optimizing hyperparameters, users can unlock the full potential of this powerful model. Embrace the boundless creativity and endless possibilities that Stable Diffusion brings to the world of visual art.

Author Bio

Emily Webber is a Principal Machine Learning Specialist Solutions Architect and keynote speaker at Amazon Web Services, where she has led the development of countless solutions and features on Amazon SageMaker. She has guided and mentored hundreds of teams, developers, and customers in their machine-learning journey on AWS. She specializes in large-scale distributed training in vision, language, and generative AI, and is active in the scientific communities in these areas. She hosts YouTube and Twitch series on the topic, regularly speaks at re:Invent, writes many blog posts, and leads workshops in this domain worldwide.
