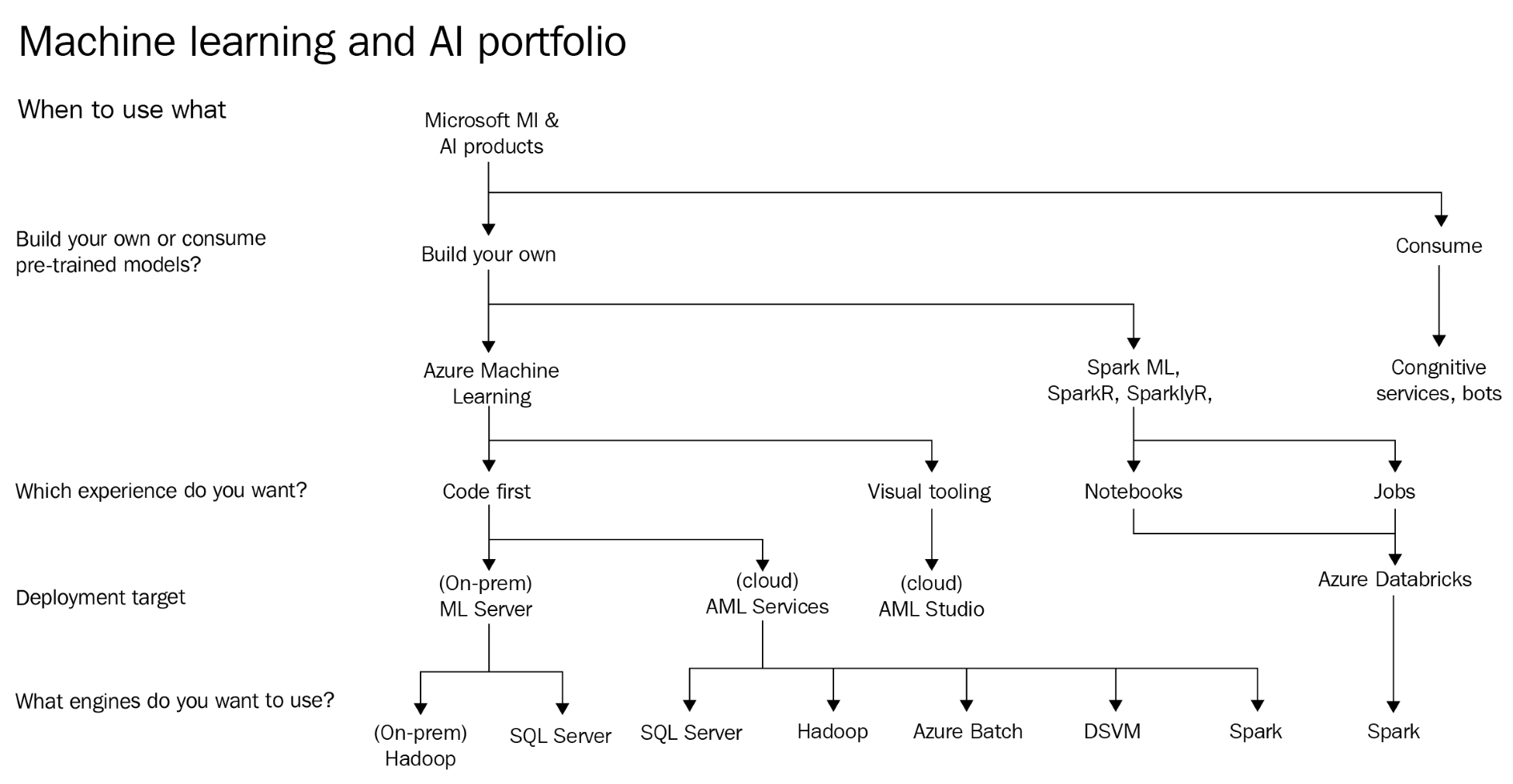

Developers who would like to consume pre-trained AI models typically use one of Microsoft's Cognitive Services. For those who are building conversational applications, a combination of Bot Framework and Cognitive Services is the recommended path. We will go into the details in Chapter 3, Cognitive Services, and Chapter 4, Bot Framework, but it is important to understand first when to choose Cognitive Services.

Cognitive Services were built with the goal of giving developers the tools to rapidly build and deploy AI applications. They are pre-trained, customizable AI models that are exposed via APIs with accompanying SDKs and web services. Each service performs a specific task and is designed to scale with the load placed on it. The services are also designed to be compliant with security standards and other data isolation requirements. At the time of writing, there are broadly five types of Cognitive Services offered by Azure:

- Knowledge

- Language

- Search

- Speech

- Vision

Knowledge services are focused on building data-based intelligence into your application. QnA Maker is one such service that helps drive a question-and-answer service with all kinds of structured and semi-structured content. Underneath, the service leverages multiple services in Azure. It abstracts all that complexity from the user and makes it easy to create and manage.

Language services are focused on building text-based intelligence into your application. The Language Understanding Intelligent Service (LUIS) is one such service. It allows users to build applications that can understand natural conversation, a capability known as natural language processing (NLP), and pass on the context of the conversation to the requesting application.

Search services are focused on integrating specialized search tools into your application. These services are based on Microsoft's Bing search engine, but can be customized in multiple ways to integrate search into enterprise applications. The Bing Entity Search service is one such API; it returns information about entities that Bing determines are relevant to a user's query.

Speech services allow developers to integrate powerful speech-enabled features into their applications, such as dictation, transcription, and voice command control. The Custom Speech Service enables developers to build customized language models and acoustic models tailored to specific types of applications and user profiles.

Vision services provide a variety of vision-based intelligent APIs that work on images or videos. The Custom Vision Service can detect a certain class of images once it has been trained on all the possible classes that the application is looking for.
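Whichever category a service falls into, consuming it usually comes down to an authenticated HTTP call. The following is a minimal sketch of that pattern using only the Python standard library. It prepares a request but does not send it. The endpoint URL, the key, and the payload shape are hypothetical placeholders, not a real service contract; the `Ocp-Apim-Subscription-Key` header is the one Cognitive Services use to carry the subscription key. Check the documentation of the specific service for its actual URL and request body.

```python
import json
import urllib.request


def build_request(endpoint: str, subscription_key: str, payload: dict) -> urllib.request.Request:
    """Prepare (but do not send) a JSON POST request to a hosted model endpoint."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        endpoint,
        data=body,
        headers={
            "Content-Type": "application/json",
            # Cognitive Services authenticate calls with a subscription key header.
            "Ocp-Apim-Subscription-Key": subscription_key,
        },
        method="POST",
    )


# Hypothetical values for illustration only; substitute the endpoint, key,
# and payload documented for the service you have provisioned.
request = build_request(
    "https://example-resource.example.com/some-service/analyze",
    "<your-subscription-key>",
    {"text": "Where is the nearest coffee shop?"},
)

print(request.get_method(), request.full_url)
```

Passing the prepared request to `urllib.request.urlopen` would send it. In practice, the SDK that accompanies each service wraps this step.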

Each of these Cognitive Services has limitations in terms of its applicability to different situations. The services also have limits on scalability, but they are designed to handle most enterprise-wide AI solutions. The limits and applicability of each service are well documented, and covering them is outside the scope of this book.

Since updates occur on a monthly basis, it is best to refer to the Azure documentation to find the limits of these services.

As a developer, once you, knowingly or unknowingly, hit the limitations of Cognitive Services, the best option is to build your own models to meet your business requirements. Building your own AI models involves ingesting data, transforming it, performing feature engineering on it, training a model, and eventually, deploying the model. This can end up being an elaborate and time-consuming process, depending on the maturity of the organization's capabilities for the different tasks. Picking the right set of tools involves assessing that maturity during the different steps of the process and using a service that fits the organizational capabilities. Referring to the preceding diagram, the second question that gets asked of organizations that want to build their own AI models is related to the kind of experience they would like.
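To make the model-building steps named above concrete (ingesting data, transforming it, feature engineering, training, and deploying), here is a toy sketch in plain Python. Everything in it is an illustrative assumption: the inline dataset, the derived feature, and the nearest-centroid classifier stand in for whatever data and tooling an organization actually uses, and "deployment" is reduced to returning a callable.

```python
import csv
import io
from statistics import mean

# Hypothetical toy dataset standing in for a real data source.
RAW_CSV = """length_cm,weight_g,label
10.0,200,small
12.0,260,small
30.0,900,large
34.0,1100,large
"""


def ingest(text):
    """Ingest: read raw records (all values arrive as strings)."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: convert the numeric columns to floats."""
    return [
        {"length_cm": float(r["length_cm"]), "weight_g": float(r["weight_g"]), "label": r["label"]}
        for r in rows
    ]


def engineer_features(row):
    """Feature engineering: keep length and add a derived weight-per-cm feature."""
    return (row["length_cm"], row["weight_g"] / row["length_cm"])


def train(rows):
    """Train: a nearest-centroid model, one mean feature vector per label."""
    by_label = {}
    for row in rows:
        by_label.setdefault(row["label"], []).append(engineer_features(row))
    return {label: tuple(mean(col) for col in zip(*feats)) for label, feats in by_label.items()}


def deploy(model):
    """Deploy: wrap the trained model in a callable that scores new inputs."""
    def predict(length_cm, weight_g):
        x = engineer_features({"length_cm": length_cm, "weight_g": weight_g})
        # Pick the label whose centroid is closest in squared Euclidean distance.
        return min(model, key=lambda label: sum((a - b) ** 2 for a, b in zip(x, model[label])))
    return predict


predict = deploy(train(transform(ingest(RAW_CSV))))
print(predict(11.0, 230.0), predict(33.0, 1000.0))
```

Each function maps to one step, so any single stage can be swapped out without touching the others. This matters when an organization is more mature at some steps than at others.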