Learning how simple digital data is

Your life and mine are full of data. When you commute to work, for example, you might be scrolling through Twitter, looking at friends' pictures on social networks, buying groceries from your favorite online store, or streaming music. Everything a computer represents is made of ones and zeros; it is that simple. But how is that possible? How can pictures, videos, and songs be built from a simple series of ones and zeros?

Although the full answer could be a lot more complex and technical, we will stick to the basics to understand the general concepts. To do that, we need to look at things from the point of view of a computer, something you use all the time and probably take for granted. This perspective is essential when it comes to learning computer science theory. Each one or zero stored in a single switch is called a bit, the smallest piece of data a computer can store. A single modern chip can contain billions of these switches (transistors), so computers can combine billions of bits to represent more complex data, such as text, songs, and images.
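To make the idea of a bit concrete, here is a small Python sketch that prints the bits a computer stores for a short piece of text. It assumes UTF-8 encoding, in which each plain English letter takes one byte (eight bits); the function names are illustrative, not part of any library.

```python
# A minimal sketch: show the bits behind a short piece of text.
# Assumes UTF-8 encoding, where plain English letters take one byte (8 bits) each.

def text_to_bits(text: str) -> str:
    """Return the bits of `text` (UTF-8 encoded), one 8-bit group per byte."""
    return " ".join(format(byte, "08b") for byte in text.encode("utf-8"))


def values_for_bits(n: int) -> int:
    """How many distinct values n bits can represent: each extra bit doubles the count."""
    return 2 ** n


print(text_to_bits("Hi"))   # 01001000 01101001
print(values_for_bits(8))   # 256
```

Two letters already need 16 bits, which hints at why songs and images need millions or billions of them.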

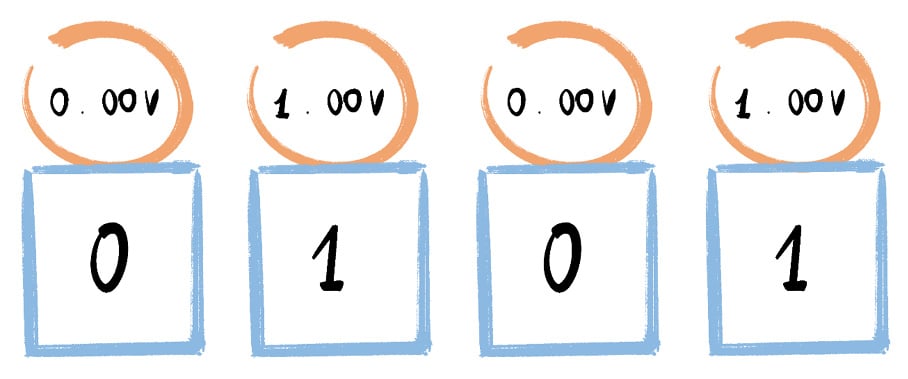

Speaking of switches: an electrical current flows through them, and each switch is either on, when current passes through it, or off, when it does not. Those two states are the ones and zeros. To give you more context, imagine describing every note of your favorite song, or every scene of your beloved TV show, using only words. A computer does something similar, except that its only vocabulary is ones and zeros. Our devices use this binary code as a language to create all the digital content we know.
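A row of switches can be read as a binary number. The Python sketch below models a hypothetical row of eight switches as `True` (on, a 1) and `False` (off, a 0) and converts them into a decimal number; the switch states and the function name are made up for illustration.

```python
# A hypothetical row of eight switches, listed from left to right.
# True means current flows (the switch is on, a 1); False means it does not (off, a 0).
switches = [False, True, False, False, True, False, False, False]


def switches_to_number(states: list[bool]) -> int:
    """Read a row of on/off switches as a binary number, most significant bit first."""
    value = 0
    for is_on in states:
        # Shift the value one place left (multiply by 2) and add this switch's bit.
        value = value * 2 + (1 if is_on else 0)
    return value


bits = "".join("1" if s else "0" for s in switches)
print(bits)                                # 01001000
print(switches_to_number(switches))        # 72
print(chr(switches_to_number(switches)))   # H
```

The same eight switches mean the number 72 or, when interpreted as a character code, the letter "H"; the bits themselves do not change, only how they are interpreted.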

Here is a simple example of the electrical current that flows through switches:

Figure 1.3 – Example of electrical flow that lets switches go on or off

Before going further with bits and switches, remember that in the past we had analog electronics. Those older devices, including televisions, used analog signals whose wave height varied continuously to represent sounds and images. The problem was that these signals were very weak, and their waveforms could easily be distorted by interference from other signals (and nowadays we are surrounded by signals). This is what caused the "snow" on TV screens and the static in audio. Over the last 30 years, most analog technologies have been digitized. Using bits instead of waveforms reduces the effect of interference dramatically, because a receiver only has to tell a 1 from a 0 rather than measure an exact wave height.
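Why bits cope better with interference can be illustrated with a deliberately simplified model, an assumption for illustration rather than how any real TV or network works: each bit is sent as a voltage level (0.0 or 1.0), interference shifts those levels a little, and the receiver decides each bit by comparing it with a 0.5 threshold. The noise values are fixed, made-up numbers so the example is repeatable.

```python
# A simplified model, not real signal processing: each bit is sent as a
# voltage level (0.0 for a 0, 1.0 for a 1), interference adds small errors,
# and the receiver decides each bit with a 0.5 threshold.

def send(bits: str) -> list[float]:
    """Turn a string of '0'/'1' characters into ideal voltage levels."""
    return [1.0 if b == "1" else 0.0 for b in bits]


def add_interference(levels: list[float], noise: list[float]) -> list[float]:
    """Add a fixed amount of interference to each level (fixed values keep the demo repeatable)."""
    return [level + n for level, n in zip(levels, noise)]


def receive(levels: list[float], threshold: float = 0.5) -> str:
    """Read each noisy level back as a bit: above the threshold is 1, otherwise 0."""
    return "".join("1" if level > threshold else "0" for level in levels)


message = "10110010"
noise = [0.2, -0.1, 0.3, -0.25, 0.15, 0.1, -0.3, 0.2]  # hypothetical interference
received = receive(add_interference(send(message), noise))
print(received)             # 10110010
print(received == message)  # True
```

As long as the interference stays smaller than half the gap between the two levels, every bit is recovered exactly. An analog receiver has no such threshold, so it reproduces the distorted wave heights as they arrive.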

Fortunately, neither you nor I have to learn binary code to use Word, Photoshop, or Revit! Let's take an image, for example. Every image on a computer screen is made up of dots called pixels. (I'm not talking about vector artwork here; let's keep it simple.) Anywhere from a few hundred to a few billion pixels can make up an image, and each pixel has a single color, which is coded with decimal values or a hexadecimal code. Underneath, those decimal values and codes are themselves stored as series of ones and zeros, which in turn come from electrical current flowing, or not flowing, through billions of switches.
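The same pixel color can be written in decimal, in hexadecimal, and in binary. The Python sketch below assumes the common 24-bit RGB scheme, in which red, green, and blue each take one byte with a value from 0 to 255; the orange pixel and the function names are hypothetical.

```python
# Hypothetical example: one orange pixel stored as red, green, and blue
# values, each from 0 to 255 (one byte per channel).
pixel = (255, 165, 0)


def to_hex(rgb: tuple[int, int, int]) -> str:
    """Format an RGB triple as a hexadecimal color code such as #FFA500."""
    return "#" + "".join(format(channel, "02X") for channel in rgb)


def to_bits(rgb: tuple[int, int, int]) -> str:
    """Show the 8 bits stored for each channel, separated by spaces."""
    return " ".join(format(channel, "08b") for channel in rgb)


print(to_hex(pixel))   # #FFA500
print(to_bits(pixel))  # 11111111 10100101 00000000
```

All three forms describe the same color; the decimal and hexadecimal versions are simply easier for people to read than the 24 bits the computer actually keeps.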

Now that we know how computers represent data with binary code, we are ready to talk about digital data types, which are fundamental to understanding more complex subjects, such as data manipulation and databases.