Chapter 11. Improving Performance of an ASP.NET Core Application

When you think about frequently accessed applications (the ones that we use daily), such as Google, YouTube, and Facebook, it is the performance of these applications that distinguishes them from similar applications. Think for a moment. If Google took more than 10 seconds to provide search results, most people would switch over to Bing or some other search engine. So, performance is one of the primary factors in an application's success.

In this chapter, we are going to learn about the following things:

- The approach to analyzing the performance issues of an application

- How to make use of browser developer tools to analyze the performance of an application

- Performance improvements in the UI layer

- Performance improvements in the web/application layer

- Performance improvements in the database layer

Normally, when people talk about the performance of an application, they think about the application's speed. Though speed contributes significantly to the performance of the application, we also need to consider maintainability, scalability, and reusability of the application.

Well-maintained code is clear and carries less technical debt, which in turn increases the productivity of the developer. When we write code based on a service-oriented architecture or microservices, our code is easier for others to reuse. This also makes our code easier to scale.

Normally, people think about the performance of the application only when development is almost complete and pilot users are complaining about its speed. The right time to discuss performance is before development starts; we need to work with the product owners, business analysts, and actual users in order to agree on an acceptable level of performance for the application. Then we design and code with this expected level of performance as our goal.

This also depends on the domain of the application. For example, a mission-critical healthcare application would demand great performance (they might expect responses in less than a second), whereas the performance of a back-office application may not demand so much. So, it is critical to understand the domain in which we are working.

If you have been asked to tune the performance of an existing application, it is also important to understand the existing architecture of the application. With ASP.NET Core, you can build anything from a simple CRUD application to a mission-critical application serving millions of users across the world. A large application might have many other components, such as a load balancer, separate caching servers, Content Delivery Networks (CDN), an array of slave DB servers, and so on. So, when you analyze the performance of the application, you first need to study the architecture, analyze each of the individual components involved, measure the performance of each of the components, and try to optimize them when the application does not meet your acceptable level of performance. The main thing is not to jump into performance improvement techniques without studying and analyzing the architecture of the application. If you are creating a new application, you can think about performance right from the start of the application's creation.

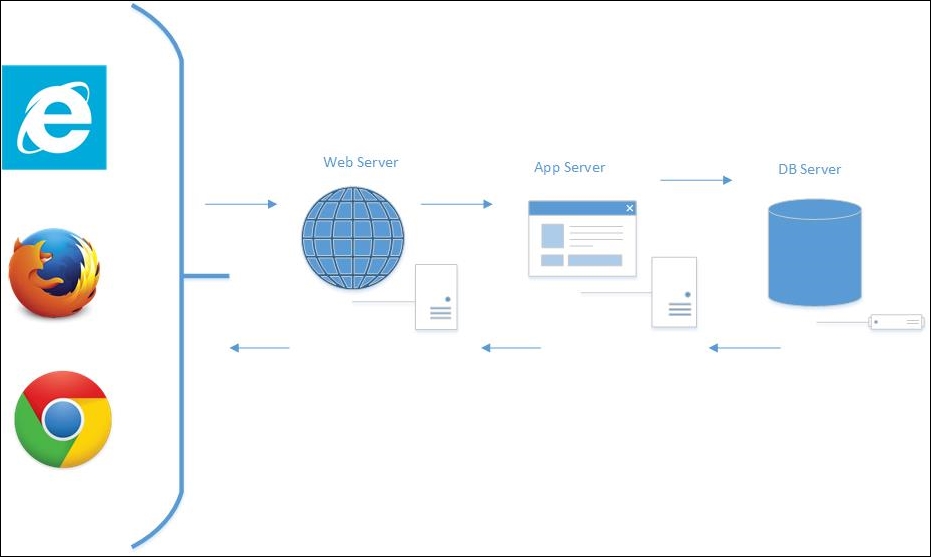

We will examine a typical web application setup, shown in the following screenshot. We will then analyze it and consider how to improve it:

The following steps show the process of using a web application:

- The user accesses an ASP.NET Core web application from a browser, such as Internet Explorer, Firefox, or Chrome. When the user types the URL into the browser and presses the Enter key, the browser creates a session and fires the HTTP request. This is not specific to an ASP.NET Core application. This behavior is the same for all web applications, irrespective of the technology on which they are built.

- The request reaches the web server. If it is a simple request, the web server itself will serve that request. Serving a static HTML file is a typical example of this. If the request is a bit complex, for example, returning some data based on the business logic, the request will be forwarded to the application server.

- The application server will query the database to get the data. Then it might do some business processing on the received data before returning the data to the web server. Sometimes, the web server might act as an application server for a smaller web application.

- Then, the web server will return the response, typically in HTML, to the requesting client.

Thus, we can categorize these components into three layers—the UI layer, the web/application layer, and the DB layer. With respect to improving the overall performance of the ASP.NET Core application, we need to have a thorough look at how we can improve the performance of each of the layers.

Before implementing any performance improvement techniques, we need to first analyze the performance in each of the layers in the application. Only then can we suggest ways to improve the overall performance of the application.
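As a rough illustration of analyzing each layer before tuning anything, the following sketch takes made-up timings for the three layers and reports which one dominates a request. The function name `slowestLayer` and the sample numbers are assumptions for illustration only; they are not measurements from a real application.

```javascript
// Hypothetical per-layer timings for a single request, in milliseconds.
const timings = { ui: 420, webApp: 130, database: 950 };

// Returns the layer that takes the largest share of the total time.
function slowestLayer(layerTimings) {
  const total = Object.values(layerTimings).reduce((sum, ms) => sum + ms, 0);
  let worst = null;
  for (const [layer, ms] of Object.entries(layerTimings)) {
    if (worst === null || ms > worst.ms) {
      worst = { layer, ms, share: ms / total };
    }
  }
  return worst;
}

const result = slowestLayer(timings);
console.log(`${result.layer}: ${result.ms} ms (${Math.round(result.share * 100)}% of the request)`);
```

With numbers like these, it would make sense to look at the database layer first, as improvements elsewhere could only recover a small part of the total time.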

The UI layer

The UI layer represents all the events (and associated activity) happening between the browser and the server. There are many events, including, but not limited to, the following:

- Firing the HTTP request

- Getting the response

- Downloading the resources

- Rendering them in the browser

- Any JavaScript code execution

Reducing the number of HTTP requests

A typical web page rarely consists of HTML content alone. It may have references to CSS files, JS files, images, or other sources. So, when you try to access a web page, the client will fire an HTTP request for each of these references and download them from the server to the client.

Browser developer tools come in handy when you want to analyze the HTTP requests being fired from the client. Most browsers have developer tools that you can make use of.

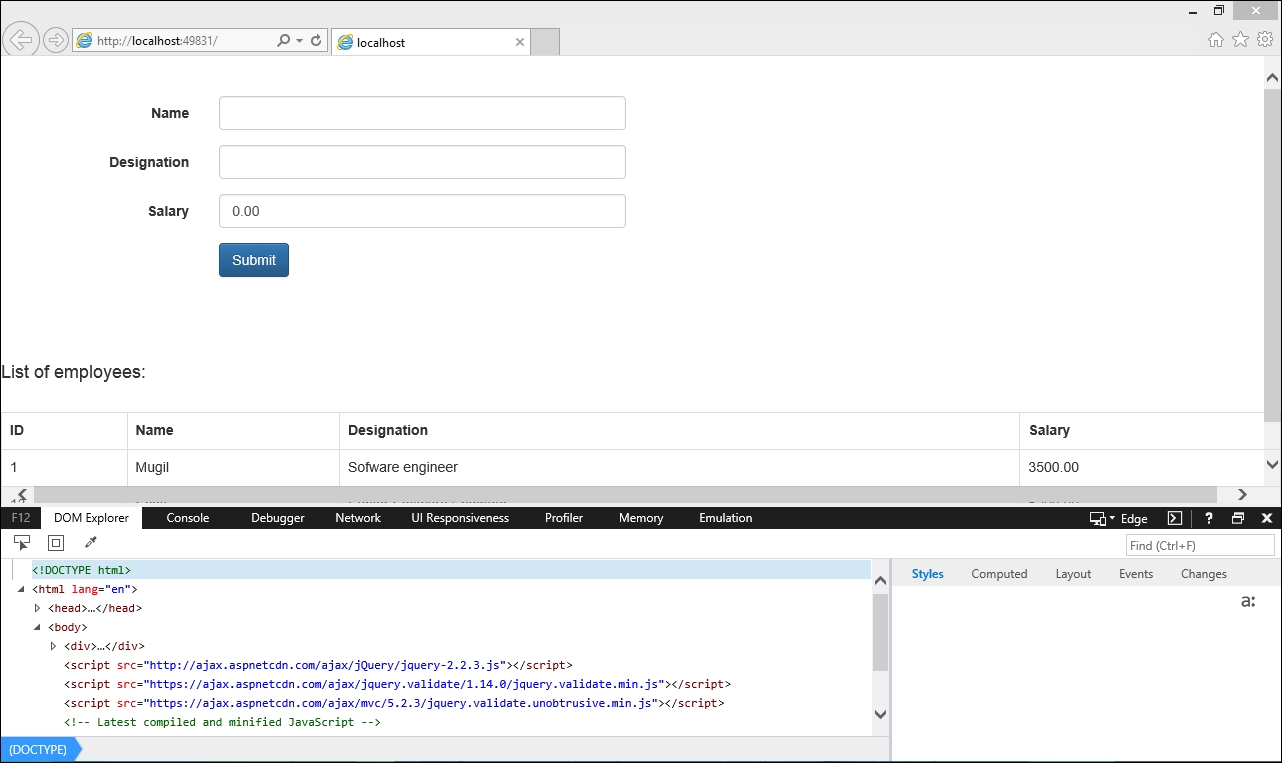

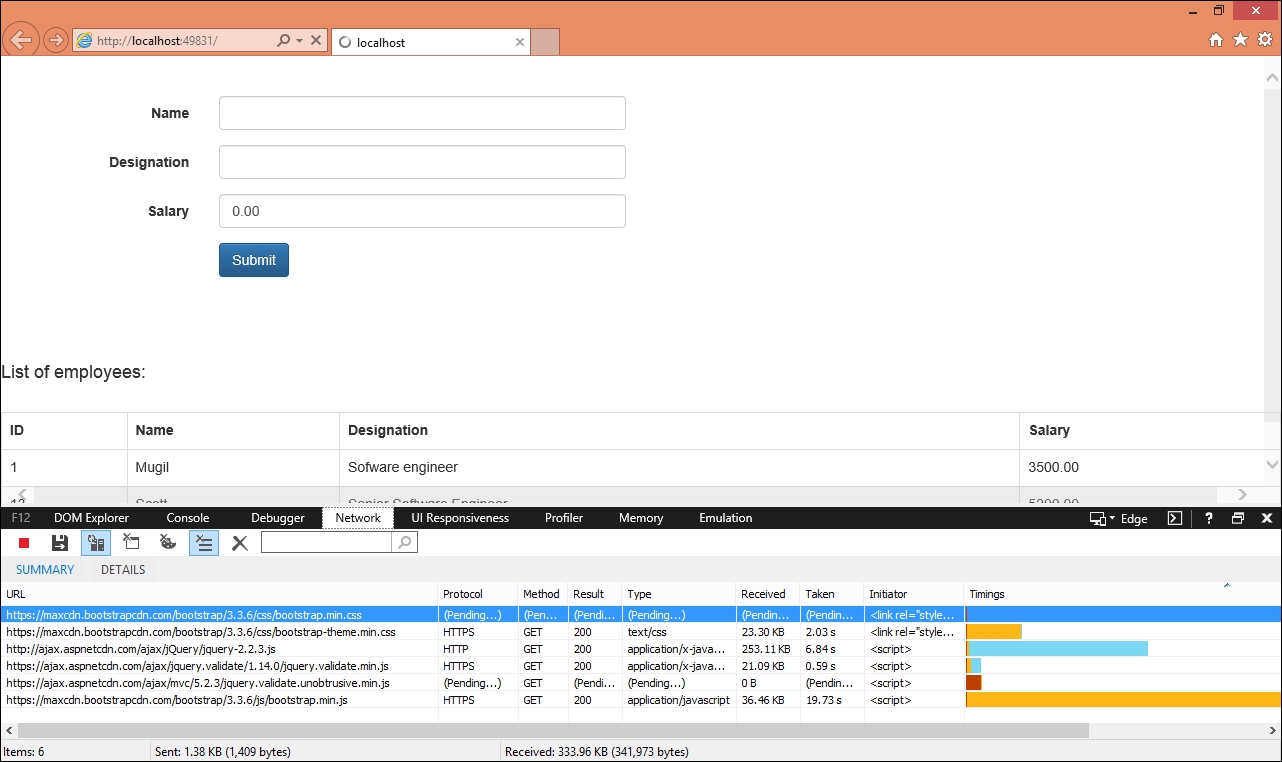

When you press F12 in Internet Explorer, the Developer Tools window will open at the bottom of the Internet Explorer window, as shown in the following screenshot:

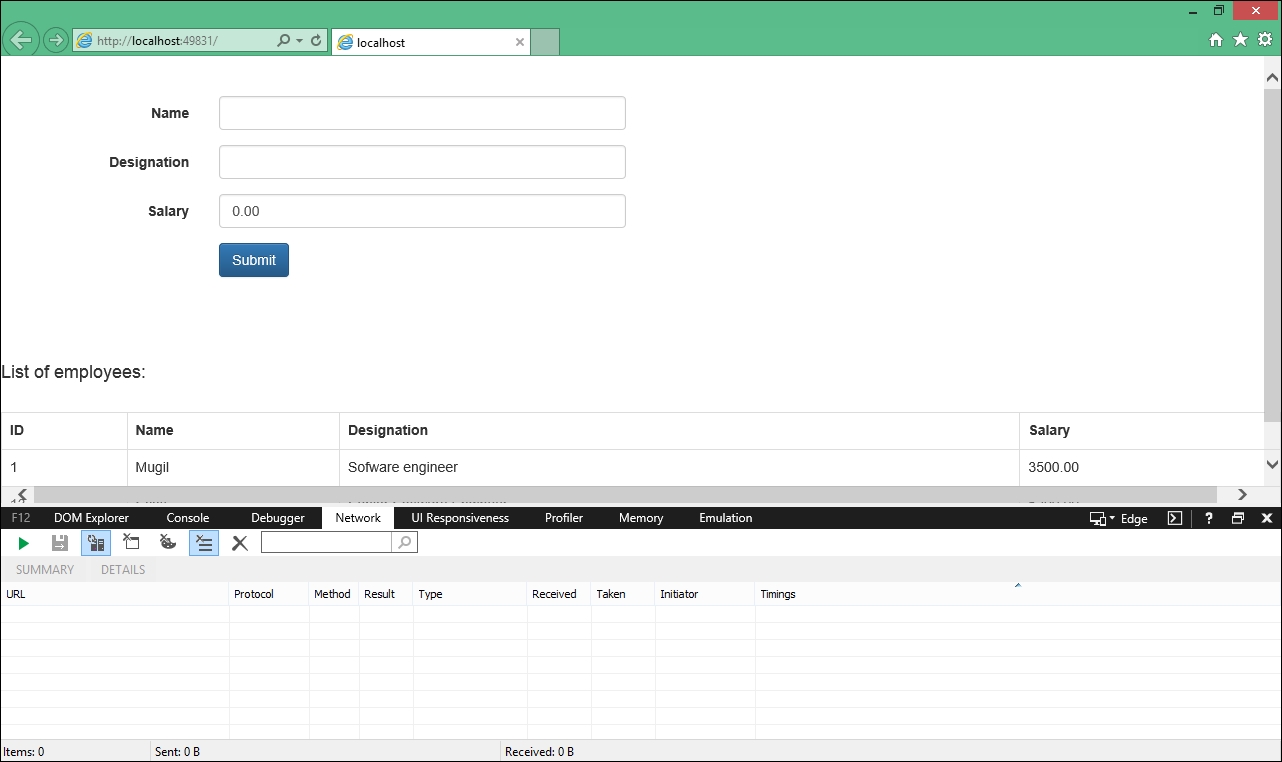

Click on the Network tab. Before entering the URL in the browser, click the Start button (the green play button), or click the green play button and refresh the page:

Once you press the Network tab's start button, Internet Explorer's Network tab will listen to each of the requests that are fired from the current tab. Each request will contain information, such as the URL, protocol, method, result (the HTTP status code), and other information.

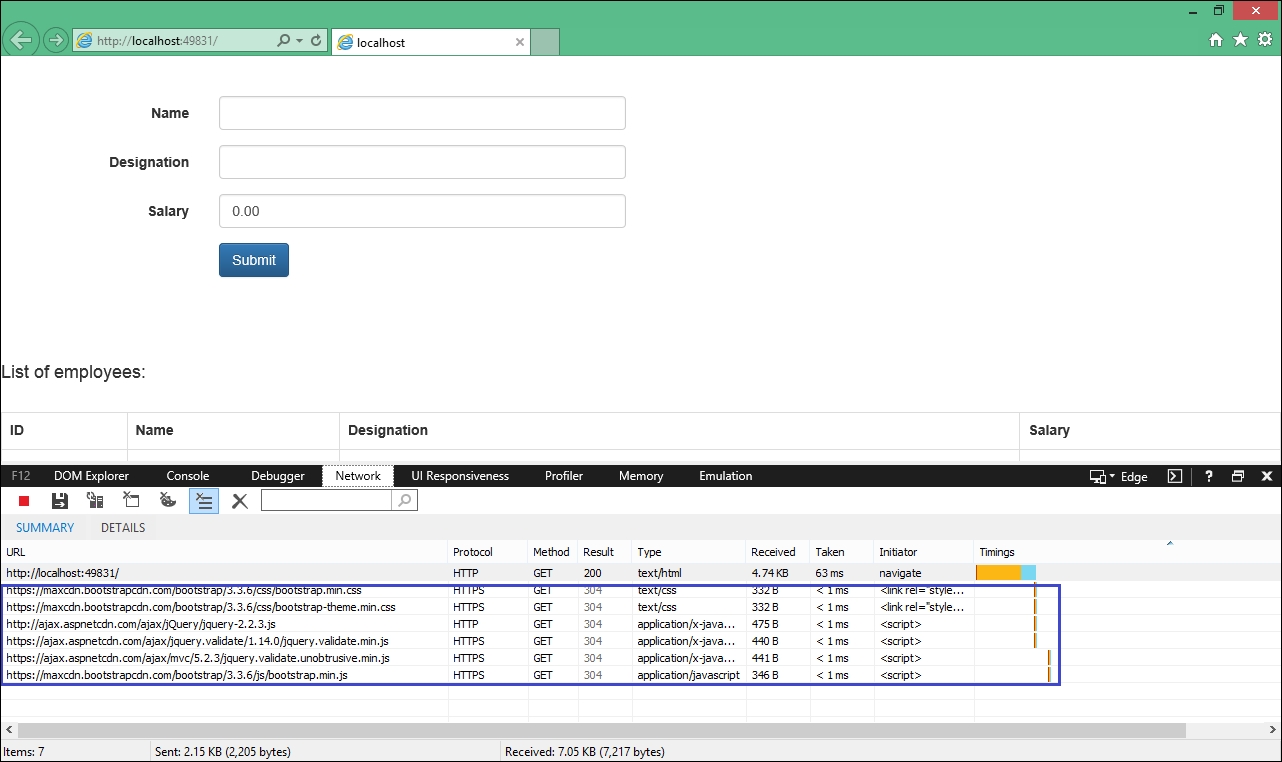

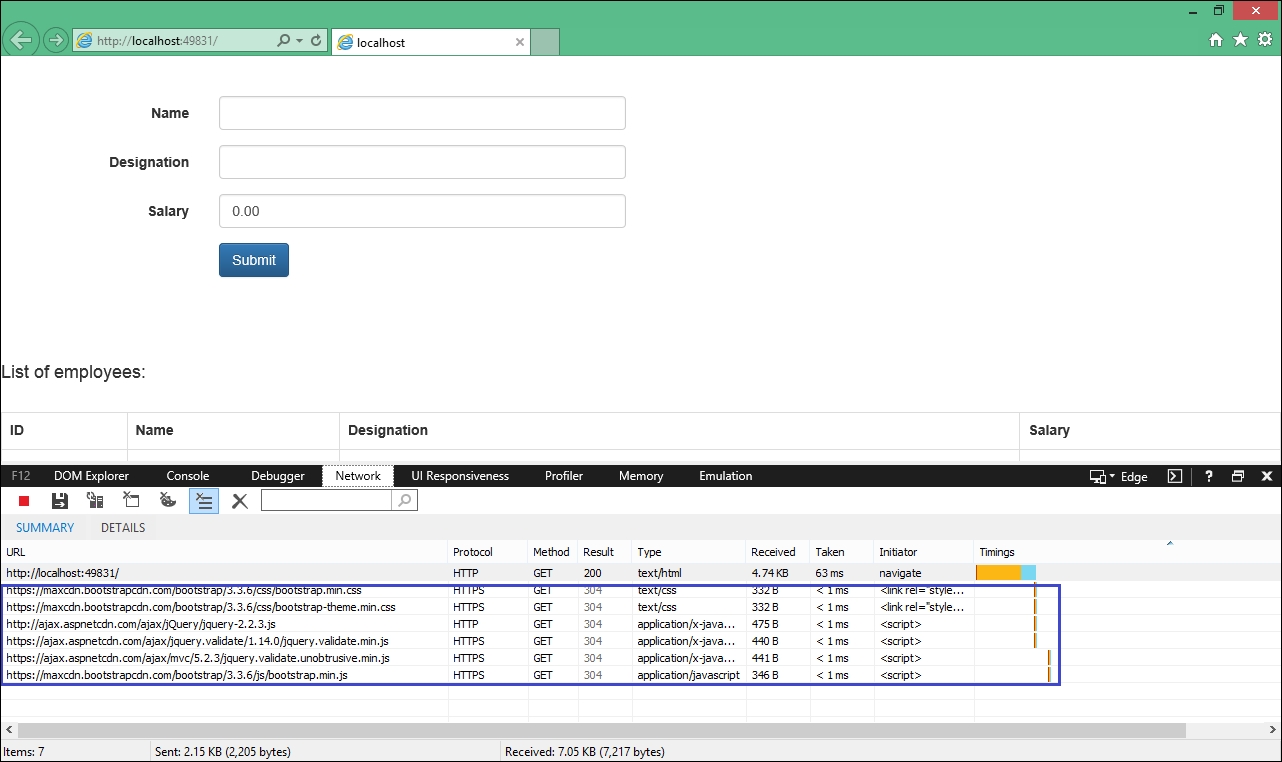

I ran the application again with the Tracking Network Requests option turned on, and I could see the requests being tracked, as shown in the following screenshot:

There are many useful pieces of data available in the Network tab.

To begin with, the URL column shows the resource that is being accessed. The Protocol column, as the name implies, shows the protocol being used for accessing the resource. The Method column shows the type of request, and in the Result column, we can see the HTTP status code of the request (HTTP 200 response means a successful GET request).

The Type column shows the type of resource that is being accessed, and the Taken column shows how much time it has taken to receive the file from the server. The Received column shows the size of the file that was downloaded as part of the request.
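To make the columns above more concrete, here is a minimal sketch that summarizes a handful of requests the way you might read them off the Network tab. The rows, the property names (`result`, `receivedBytes`, `takenMs`), and the function `summarizeRequests` are all hypothetical; they only mirror the columns described in the text.

```javascript
// Hypothetical rows, shaped like the columns of the Network tab.
const requests = [
  { url: "/", method: "GET", result: 200, type: "text/html", receivedBytes: 5200, takenMs: 180 },
  { url: "/css/site.css", method: "GET", result: 200, type: "text/css", receivedBytes: 1800, takenMs: 40 },
  { url: "/js/site.js", method: "GET", result: 200, type: "application/javascript", receivedBytes: 9400, takenMs: 95 },
  { url: "/images/logo.png", method: "GET", result: 404, type: "image/png", receivedBytes: 0, takenMs: 30 },
];

// Counts the requests, adds up the downloaded bytes, and finds the slowest one.
function summarizeRequests(rows) {
  const summary = { count: rows.length, totalBytes: 0, failed: 0, slowest: null };
  for (const row of rows) {
    summary.totalBytes += row.receivedBytes;
    if (row.result >= 400) summary.failed += 1;
    if (summary.slowest === null || row.takenMs > summary.slowest.takenMs) {
      summary.slowest = row;
    }
  }
  return summary;
}

const summary = summarizeRequests(requests);
console.log(`${summary.count} requests, ${summary.totalBytes} bytes, ${summary.failed} failed`);
console.log(`slowest: ${summary.slowest.url} (${summary.slowest.takenMs} ms)`);
```

The request count and the total number of bytes are the two figures that the techniques in the rest of this section try to bring down.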

Using GZip compression

When you are serving the content, you can compress it using GZip so that a smaller amount of data is sent across the wire. The response needs the appropriate HTTP header (Content-Encoding: gzip) so that the browser knows how the delivered content is encoded. In IIS, this option is enabled for static resources by default. You can verify this by opening the applicationHost.config file at the path C:\Windows\System32\inetsrv\config:

<httpCompression directory="%SystemDrive%\inetpub\temp\IIS Temporary Compressed Files">
  <scheme name="gzip" dll="%Windir%\system32\inetsrv\gzip.dll" />
  <staticTypes>
    <add mimeType="text/*" enabled="true" />
    <add mimeType="message/*" enabled="true" />
    <add mimeType="application/x-javascript" enabled="true" />
    <add mimeType="application/atom+xml" enabled="true" />
    <add mimeType="application/xaml+xml" enabled="true" />
    <add mimeType="*/*" enabled="false" />
  </staticTypes>
</httpCompression>

If it is not available in your applicationHost.config file, you have to make the necessary changes.
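To get a feel for how much GZip can save on text content, the following sketch compresses a repetitive string with the `zlib` module that ships with Node.js and compares the sizes. It is only an illustration of the compression itself, not of the IIS configuration; the sample markup is made up. In a real exchange, the browser advertises support with the `Accept-Encoding` request header and the server marks the compressed body with `Content-Encoding: gzip`.

```javascript
const zlib = require("zlib");

// A repetitive payload, similar in spirit to HTML, CSS, or JavaScript text.
const original = '<li class="employee">Name</li>\n'.repeat(200);

// Compress the text, then decompress it again to confirm nothing was lost.
const compressed = zlib.gzipSync(Buffer.from(original, "utf8"));
const restored = zlib.gunzipSync(compressed).toString("utf8");

console.log("original bytes:  ", Buffer.byteLength(original, "utf8"));
console.log("compressed bytes:", compressed.length);
console.log("round trip ok:   ", restored === original);
```

Text formats compress well because they contain many repeated sequences. Formats that are already compressed, such as JPEG or PNG images, gain very little from a second pass.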

Using the Content Delivery Network (CDN)

A Content Delivery Network is a system of distributed servers situated across the globe to serve the content based on the geographical location from where the content is accessed. Amazon's CloudFront is one example of a CDN. Amazon has edge locations (locations where servers are located) all over the world so that content can be served to users from the nearest location.

In the following line, we are loading jQuery from the CDN provided by the official jQuery website:

<script src="https://code.jquery.com/jquery-3.1.1.min.js" ></script>

Using JavaScript wherever possible

If you can achieve a piece of functionality with JavaScript on the client, then do so. For example, before validating the form data on the server, always try to do client-side validation first. This approach has a couple of advantages: the site feels faster, as invalid input is caught on the client without a round trip to the server, and the server can handle a larger number of requests, as some of the work is done on the client-side.
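The following is a minimal sketch of such a client-side check. The form fields (`name`, `email`, `salary`) and the function `validateEmployeeForm` are hypothetical and chosen only to show the idea; the email pattern is deliberately simple.

```javascript
// Validates a hypothetical employee form before it is submitted.
// Returns a list of error messages; an empty list means the form may be sent.
function validateEmployeeForm(form) {
  const errors = [];

  if (typeof form.name !== "string" || form.name.trim() === "") {
    errors.push("Name is required.");
  }

  // Loose check: something@something.something, with no spaces.
  if (!/^[^@\s]+@[^@\s]+\.[^@\s]+$/.test(form.email || "")) {
    errors.push("Email address is not valid.");
  }

  const salary = Number(form.salary);
  if (!Number.isFinite(salary) || salary <= 0) {
    errors.push("Salary must be a positive number.");
  }

  return errors;
}

console.log(validateEmployeeForm({ name: "Ann", email: "ann@example.com", salary: "5000" }));
console.log(validateEmployeeForm({ name: " ", email: "not-an-email", salary: "" }));
```

A check like this only saves round trips. The server should still validate the same data, as client-side code can be bypassed.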

Using CSS stylesheets

As the browser renders the web page progressively (the browser will display whatever content it has, as soon as it receives it), it is better to place the stylesheets at the top rather than at the end of the web page. If we place the stylesheets at the bottom, it prevents progressive rendering, as the browser has to redraw the content once the styles arrive.

Most browsers will block parallel downloads while they are downloading JavaScript files, so it is better to place the scripts at the bottom. This means that your content is shown to the user while the browser downloads the scripts. The following is a sample layout file created in an ASP.NET Core application where CSS files are referenced at the top and JavaScript files are referenced at the bottom:

<!DOCTYPE html>
<html lang="en">
<head>
    <meta name="viewport" content="width=device-width" />
    <title>@ViewBag.Title</title>
    <!-- Latest compiled and minified CSS -->
    <link rel="stylesheet" href="https://maxcdn.bootstrapcdn.com/bootstrap/3.3.6/css/bootstrap.min.css" integrity="sha384-1q8mTJOASx8j1Au+a5WDVnPi2lkFfwwEAa8hDDdjZlpLegxhjVME1fgjWPGmkzs7" crossorigin="anonymous">
    <!-- Optional theme -->
    <link rel="stylesheet" href="https://maxcdn.bootstrapcdn.com/bootstrap/3.3.6/css/bootstrap-theme.min.css" integrity="sha384-fLW2N01lMqjakBkx3l/M9EahuwpSfeNvV63J5ezn3uZzapT0u7EYsXMjQV+0En5r" crossorigin="anonymous">
</head>
<body>
    <div>
        @RenderBody()
    </div>
    <script src="http://ajax.aspnetcdn.com/ajax/jQuery/jquery-2.2.3.js"></script>
    <script src="https://ajax.aspnetcdn.com/ajax/jquery.validate/1.14.0/jquery.validate.min.js"></script>
    <script src="https://ajax.aspnetcdn.com/ajax/mvc/5.2.3/jquery.validate.unobtrusive.min.js"></script>
    <!-- Latest compiled and minified JavaScript -->
    <script src="https://maxcdn.bootstrapcdn.com/bootstrap/3.3.6/js/bootstrap.min.js" integrity="sha384-0mSbJDEHialfmuBBQP6A4Qrprq5OVfW37PRR3j5ELqxss1yVqOtnepnHVP9aJ7xS" crossorigin="anonymous"></script>
</body>
</html>

Minification of JavaScript and CSS files and their combination

The time taken to download the related resources of a web page is directly proportional to the size of the files that are downloaded. If we reduce the size of a file without changing what it does, performance improves. Minification is the process of rewriting the content of a file in order to reduce its size without changing its behavior. Removing extraneous white space and renaming variables to shorter names are both common techniques used in the minification process.
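As a small illustration of what minification does, the following sketch compares a readable function with a hand-minified equivalent. The function `calculateTotalPrice` is made up for this example, and the minified string was written by hand rather than produced by a real minifier; it simply shows that the shorter source behaves the same.

```javascript
// Readable source, as a developer would write it.
const readableSource = `
function calculateTotalPrice(unitPrice, quantity) {
  // Multiply the unit price by the quantity.
  var totalPrice = unitPrice * quantity;
  return totalPrice;
}
`;

// Hand-minified equivalent: comments and white space removed, names shortened.
const minifiedSource = "function calculateTotalPrice(a,b){return a*b}";

// Evaluate both versions so that we can confirm they behave the same.
const readable = new Function(`${readableSource}; return calculateTotalPrice;`)();
const minified = new Function(`${minifiedSource}; return calculateTotalPrice;`)();

console.log("readable length:", readableSource.length);
console.log("minified length:", minifiedSource.length);
console.log("same result:    ", readable(3, 4) === minified(3, 4));
```

Note that the function name itself is kept, as other code may call it by name; only the local names are safe to shorten.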

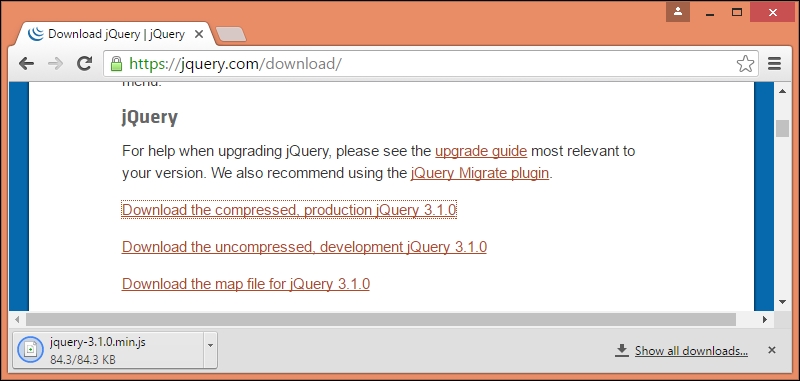

Popular JavaScript libraries such as jQuery and frontend frameworks provide minified files by default. You can use them as they are. In the following screenshot, I have downloaded the compressed version of jQuery. You can minify the custom JavaScript and CSS files that you have written for your application:

Bundling is the process where you can combine two or more files into one. Bundling and minification, when used together, will reduce the size of the payload, thereby increasing the performance of the application.

You can install the Bundler & Minifier Visual Studio extension from the following URL:

https://visualstudiogallery.msdn.microsoft.com/9ec27da7-e24b-4d56-8064-fd7e88ac1c40

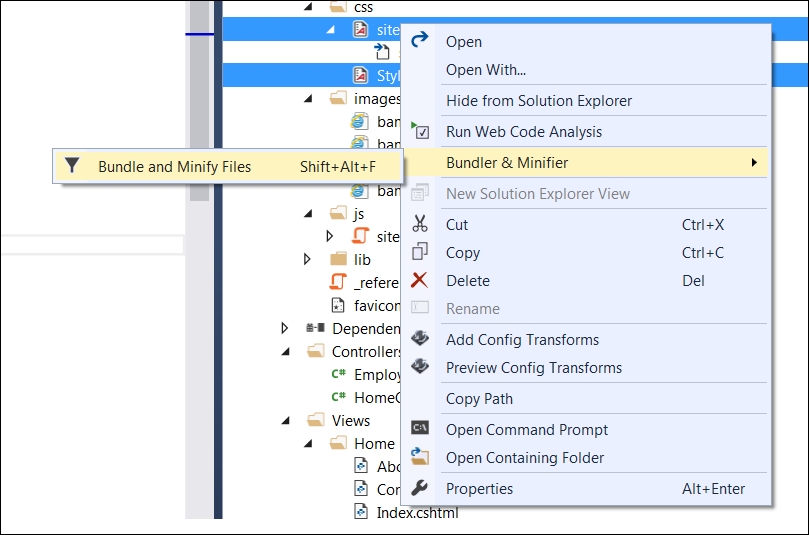

Once you have installed this Visual Studio extension, you can select the files that you want to bundle and minify by selecting the files and selecting the Bundler & Minifier option from the Context menu, brought up by right-clicking. It is shown in the following screenshot:

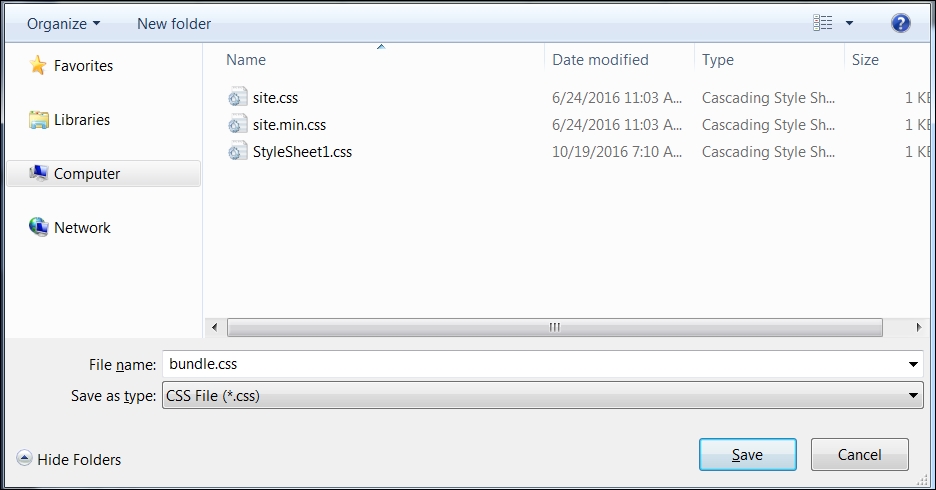

Once you select the Bundle and Minify Files option, it will ask you to save the bundled file as shown in the following screenshot:

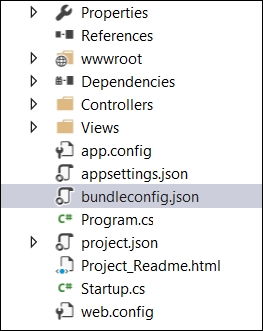

You can name the file of your wish and save the file. Once you save the file, another file would have been created in your solution—in our case, it is the bundleconfig.json file:

This file will have the information on the input files and the bundled output file. The following is one such example:

[
  {
    "outputFileName": "wwwroot/css/site.min.css",
    "inputFiles": [
      "wwwroot/css/site.css"
    ]
  },
  {
    "outputFileName": "wwwroot/js/site.min.js",
    "inputFiles": [
      "wwwroot/js/site.js"
    ],
    "minify": {
      "enabled": true,
      "renameLocals": true
    }
  },
  {
    "outputFileName": "wwwroot/css/bundle.css",
    "inputFiles": [
      "wwwroot/css/site.css",
      "wwwroot/css/StyleSheet1.css"
    ]
  }
]

You can use this bundled file in your application, resulting in increased performance.
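Conceptually, a bundle entry like the last one in this file means "concatenate these inputs, in order, into one output". The following sketch shows that idea with in-memory strings standing in for files on disk. The CSS contents and the `bundle` function are assumptions for illustration; this is not how the Visual Studio extension is implemented.

```javascript
// Hypothetical file contents keyed by path, standing in for files on disk.
const files = {
  "wwwroot/css/site.css": "body { margin: 0; }",
  "wwwroot/css/StyleSheet1.css": "h1 { color: navy; }",
};

// One entry shaped like those in bundleconfig.json.
const bundleEntry = {
  outputFileName: "wwwroot/css/bundle.css",
  inputFiles: ["wwwroot/css/site.css", "wwwroot/css/StyleSheet1.css"],
};

// Concatenates the input files in the order in which they are listed.
function bundle(entry, fileContents) {
  const parts = entry.inputFiles.map((path) => {
    if (!(path in fileContents)) {
      throw new Error(`Missing input file: ${path}`);
    }
    return fileContents[path];
  });
  return { path: entry.outputFileName, content: parts.join("\n") };
}

const bundled = bundle(bundleEntry, files);
console.log(bundled.path);
console.log(bundled.content);
```

The page then needs one request for bundle.css instead of one request per stylesheet. The order of the inputs matters, as later CSS rules can override earlier ones.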

The caching process

Caching is the process of copying the data and having it in memory instead of getting the data again through an external resource, such as a network, file, or database. The data used in caching is ephemeral and can be removed at any time. As we are directly accessing the data, caching can greatly improve the performance of the application.

Caching can be done in any of the layers—client-side at the browser, at the proxy server (or at some middleware), or at the web/application server. For database layer caching, we might not need to do any custom coding. Based on the type of database server being used, you might need to make some configuration changes. However, most of the databases these days are powerful enough to cache the data as and when it is needed.

Client-side caching

We can cache at the client-side if we add the appropriate HTTP response headers. For example, if we want to cache all the static assets, such as CSS, images, and JavaScript files, we can add the max-age directive to the Cache-Control response header:

In the preceding screenshot of the Developer Tools window's Network tab, when the requests are fired again, we get an HTTP 304 (Not Modified) response. This means the same files are not transferred twice across the wire, as they are available in the browser itself.
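The following sketch shows, in simplified form, how a client could decide whether a cached copy is still usable from the max-age directive. The function names `parseMaxAge` and `isFresh` are hypothetical, and real browsers take more into account (for example, other Cache-Control directives), so treat this as an illustration of the idea rather than of actual browser behavior.

```javascript
// Extracts max-age (in seconds) from a Cache-Control header value, or null if absent.
function parseMaxAge(cacheControl) {
  const match = /(?:^|,)\s*max-age\s*=\s*(\d+)/i.exec(cacheControl || "");
  return match ? Number(match[1]) : null;
}

// A cached copy counts as fresh while its age is below max-age.
function isFresh(storedAtMs, cacheControl, nowMs) {
  const maxAge = parseMaxAge(cacheControl);
  if (maxAge === null) return false;
  return (nowMs - storedAtMs) / 1000 < maxAge;
}

const header = "public, max-age=60";
console.log(parseMaxAge(header));          // seconds the copy may be reused
console.log(isFresh(0, header, 30 * 1000)); // 30 seconds after it was stored
console.log(isFresh(0, header, 90 * 1000)); // 90 seconds after it was stored
```

While the copy is fresh, the file does not need to be downloaded again. Once it is stale, the browser goes back to the server, which is where a 304 response can still avoid resending the file body.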

Implementing browser caching for static files is pretty easy, and it involves just a couple of steps—adding dependencies and configuring the application.

Add the following NuGet package to the list of dependencies in the project.json file:

"Microsoft.AspNet.StaticFiles": "1.0.0-rc1-final"

Add the following namespaces to the Startup.cs file and configure the application to use those static files:

using System; // for TimeSpan
using Microsoft.AspNet.StaticFiles;
using Microsoft.Net.Http.Headers;

public void Configure(IApplicationBuilder app)
{
    app.UseIISPlatformHandler();

    // Register static files before MVC so that requests for CSS, images, and
    // scripts are served directly, with a Cache-Control header attached.
    app.UseStaticFiles(new StaticFileOptions
    {
        OnPrepareResponse = context =>
        {
            var headers = context.Context.Response.GetTypedHeaders();
            headers.CacheControl = new CacheControlHeaderValue
            {
                // The browser may reuse its cached copy for 60 seconds.
                MaxAge = TimeSpan.FromSeconds(60)
            };
        }
    });

    // Register MVC once, with the default route.
    app.UseMvc(routes =>
    {
        routes.MapRoute(
            name: "default",
            template: "{controller=Employee}/{action=Index}/{id?}");
    });
}

Response caching

In response caching, cache-related HTTP headers are added to HTTP responses when MVC actions are returned. The Cache-Control header is the primary HTTP header that gets added to the response.

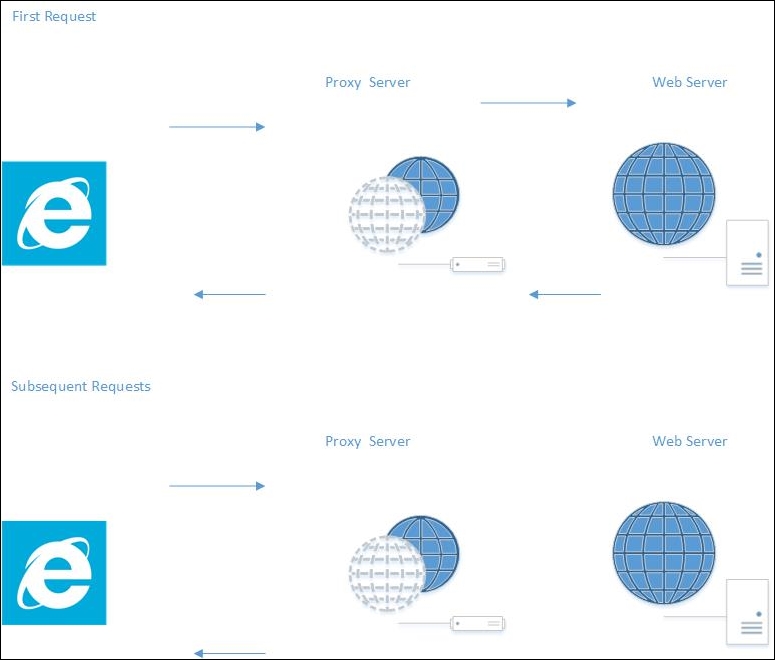

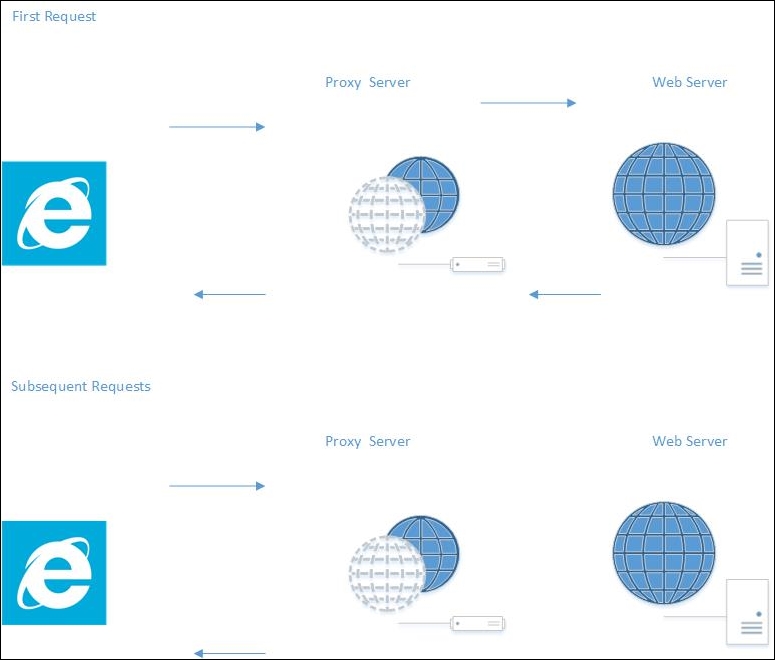

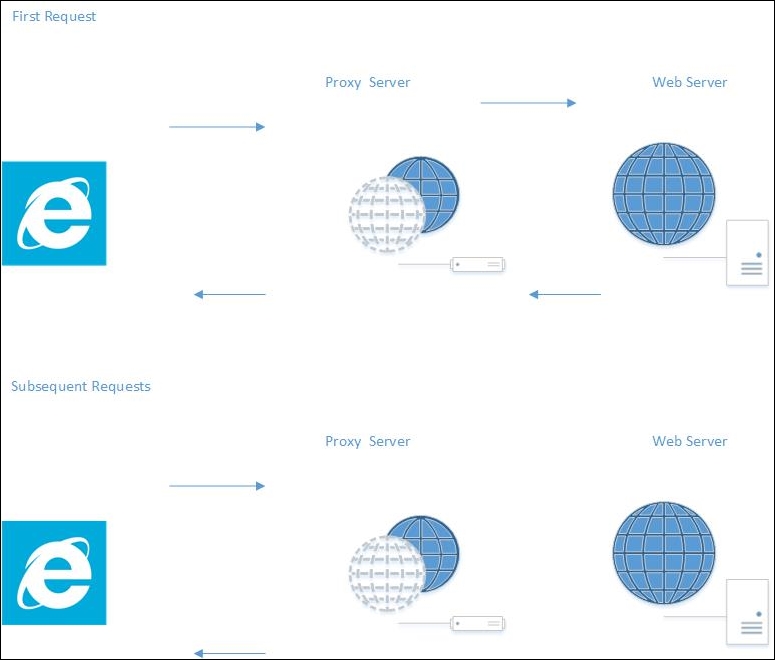

The preceding diagram shows response caching in action. In the first request, we are calling a Controller's action method; the request comes from the client and passes through the proxy server, actually hitting the web server. As we have added a response cache, any subsequent requests will not be forwarded to the web server, and the responses will be returned from the proxy server itself. This will reduce the number of requests to the web server, which in turn will reduce the load on the web server.

Caching the response of the Controller's action method is pretty easy. Just add the ResponseCache attribute with a duration parameter. In the following action method, we have added the response cache with a duration of 60 seconds, so that, for the next 60 seconds, if any requests come again, the responses will be returned from the proxy server itself instead of going to the web server:

[ResponseCache(Duration = 60)]

public IActionResult Index()

{

EmployeeAddViewModel employeeAddViewModel = new EmployeeAddViewModel();

using (var db = new EmployeeDbContext())

{

employeeAddViewModel.EmployeesList = db.Employees.ToList();

}

return View(employeeAddViewModel);

}Client-side caching

We can cache at the client-side if we add the appropriate HTTP response headers. For example, if we want to cache all the static assets, such as CSS, images, and JavaScript files, we can add the max-age response header in the Cache-Control header:

In the preceding screenshot of the Developer Tool window's Network tab, when the requests are fired again, we get HTTP 304 response (Not modified) as the response. This means the same files are not transferred back twice across the wire, as they are available in the browser itself.

Implementing browser caching for static files is pretty easy, and it involves just a couple of steps—adding dependencies and configuring the application.

Add the following NuGet package to the list of dependencies in the project.json file:

"Microsoft.AspNet.StaticFiles": "1.0.0-rc1-final"

Add the following namespaces to the Startup.cs file and configure the application to use those static files:

using Microsoft.AspNet.StaticFiles;

using Microsoft.Net.Http.Headers;

public void Configure(IApplicationBuilder app)

{

app.UseIISPlatformHandler();

app.UseMvc();

app.UseMvc(routes =>

{

routes.MapRoute(name:"default", template:"{controller=Employee}/{action=Index}/{id?}");});

app.UseStaticFiles(new StaticFileOptions

()

{

OnPrepareResponse = (context) =>

{

var headers = context.Context.Response.GetTypedHeaders();

headers.CacheControl = new CacheControlHeaderValue()

{

MaxAge = TimeSpan.FromSeconds(60),

};

}

});

}Response caching

In response caching, cache-related HTTP headers are added to the HTTP responses that MVC actions return. Cache-Control is the primary header added to the response.

The preceding diagram shows response caching in action. On the first request, the call to a controller's action method travels from the client through the proxy server and reaches the web server. Because the response carries caching headers, subsequent requests within the cache duration are not forwarded to the web server; the proxy server returns the stored response itself. This reduces the number of requests reaching the web server, which in turn reduces its load.

Caching the response of a controller's action method is easy: add the ResponseCache attribute with a Duration value in seconds. In the following action method, the duration is 60 seconds, so for the next 60 seconds repeat requests are answered by the proxy server instead of going to the web server:

[ResponseCache(Duration = 60)]
public IActionResult Index()
{
    EmployeeAddViewModel employeeAddViewModel = new EmployeeAddViewModel();
    using (var db = new EmployeeDbContext())
    {
        employeeAddViewModel.EmployeesList = db.Employees.ToList();
    }
    return View(employeeAddViewModel);
}

The web/application layer

The web/application layer covers everything that happens between receiving the request from the client and sending back the response, including any calls to the DB layer to get the required data. Most of this layer is written in a server-side language, such as C#, so optimizing it means applying the best practices of ASP.NET MVC and C#.

No business logic in Views

A View is what is rendered to the browser, and it can contain presentation logic. Presentation logic represents where and how the data is to be displayed. ViewModels (actually, models specific to the View) are models that hold the data for a particular view.

Neither Views nor ViewModels should contain any business logic, as this violates the separation of concerns principle.

Look at the following Razor View code. We are just looping through the list in the model and presenting the data in tabular format—nothing else:

<h4>List of employees:</h4> <br />
<table class="table table-bordered">
    <tr>
        <th>ID</th>
        <th>Name</th>
        <th>Designation</th>
        <th>Salary</th>
    </tr>
    @foreach (var employee in Model.EmployeesList)
    {
        <tr>
            <td>@employee.EmployeeId</td>
            <td>@employee.Name</td>
            <td>@employee.Designation</td>
            <td>@employee.Salary</td>
        </tr>
    }
</table>

In some codebases, a ViewModel calls the repository layer directly, which should never be the case. Be extra careful about what ends up in View and ViewModel code.

Using asynchronous logging

Use asynchronous logging wherever possible, so that the code handling a request does not wait for log entries to be written. Most logging frameworks, such as Log4Net, provide an option for logging asynchronously. In ASP.NET Core, you obtain a logger through dependency injection.
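To illustrate what logging asynchronously means, independent of any particular framework, here is a minimal sketch in which callers only place messages on a queue and a single background task performs the slow write. BackgroundLogger is a hypothetical class written for this illustration; it is not part of ASP.NET Core or Log4Net, and the queue capacity of 1024 is an arbitrary assumption.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

// Hypothetical helper for illustration; not a framework type.
public sealed class BackgroundLogger : IDisposable
{
    private readonly BlockingCollection<string> _queue =
        new BlockingCollection<string>(boundedCapacity: 1024);
    private readonly Action<string> _sink;
    private readonly Task _worker;

    // The sink is whatever performs the real write (file, console, network).
    public BackgroundLogger(Action<string> sink)
    {
        _sink = sink;
        // One background task drains the queue in order and calls the sink.
        _worker = Task.Run(() =>
        {
            foreach (var message in _queue.GetConsumingEnumerable())
            {
                _sink(message);
            }
        });
    }

    // Callers only enqueue. Add blocks only when the queue is already full.
    public void Log(string message)
    {
        _queue.Add(message);
    }

    // Stops accepting messages and waits until everything queued is written.
    public void Dispose()
    {
        _queue.CompleteAdding();
        _worker.Wait();
        _queue.Dispose();
    }
}
```

The bounded queue is a deliberate trade-off in this sketch: it caps memory use if the sink falls behind, at the cost of making callers wait once the queue is full.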

The following is a typical example of the implementation of a logging framework in an MVC Controller:

public class EmployeeController : Controller
{
    private readonly IEmployeeRepository _employeeRepo;
    private readonly ILogger<EmployeeController> _logger;

    public EmployeeController(IEmployeeRepository employeeRepository,
        ILogger<EmployeeController> logger)
    {
        _employeeRepo = employeeRepository;
        _logger = logger;
    }

    [HttpGet]
    public IEnumerable<Employee> GetAll()
    {
        _logger.LogInformation(LoggingEvents.LIST_ITEMS, "Listing all employees");
        return _employeeRepo.GetAll();
    }
}

The DB layer

Though the DB layer is not directly related to ASP.NET Core applications, it is the developer's responsibility to take complete ownership of the application's performance, and that includes taking care of the database's performance as well. We will now look at a few of the areas in the DB layer that we need to consider when improving the performance of an ASP.NET Core application.

Understanding the queries generated by the ORM

In most applications these days, we use an Object-Relational Mapping (ORM) framework, such as Entity Framework or NHibernate. As you might know, the primary objective of an ORM is to let you write the data access layer using domain classes and objects instead of writing queries directly. However, that does not mean you can ignore the SQL that gets generated or how to optimize it. Sometimes the query generated by Entity Framework is not optimal, so a good practice is to run a profiler, analyze the generated queries, and tune them as needed. You can use the interceptors in Entity Framework to log the SQL queries.

Using classic ADO.NET if you really want to

ASP.NET Core is just a web development framework, and it is not tied to any data access framework or technology. If the ORM you use does not deliver the performance you need, you can use classic ADO.NET and write the queries or stored procedures by hand.

Return only the required data

Always return only the data that you need: nothing more, nothing less. This reduces the amount of data sent across the wire (from the database server to the web/application server).

For example, we would not use the following:

SELECT * FROM employees

Instead, we would use this:

SELECT FirstName, LastName FROM employees

The latter query fetches only the required columns from the table, so only the required data is passed to the calling client.
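The same idea applies when the query is written in LINQ rather than SQL. The following is a minimal sketch that projects onto a slim type holding only the two required fields. The Employee and EmployeeName classes and the ProjectionSketch helper are hypothetical; the sketch runs against any IQueryable, and with Entity Framework a Select projection of this kind is normally translated into a query that lists only the selected columns.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical entity with more columns than the page needs.
public class Employee
{
    public int EmployeeId { get; set; }
    public string FirstName { get; set; }
    public string LastName { get; set; }
    public string Designation { get; set; }
    public decimal Salary { get; set; }
}

// Hypothetical slim type carrying only the required fields.
public class EmployeeName
{
    public string FirstName { get; set; }
    public string LastName { get; set; }
}

public static class ProjectionSketch
{
    // Projects each employee onto the slim type instead of
    // returning whole entities.
    public static List<EmployeeName> GetNames(IQueryable<Employee> employees)
    {
        return employees
            .Select(e => new EmployeeName
            {
                FirstName = e.FirstName,
                LastName = e.LastName
            })
            .ToList();
    }
}
```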

Fine tuning the indices

Beginners tend to add indices whenever they face a problem with the database. Adding an index to every column in a table is bad practice and will reduce performance, because every index has to be updated on each insert, update, and delete. The right approach is to start from the list of most frequently executed queries and fine-tune them first: remove unnecessary joins, avoid correlated subqueries, and so on. Only when you have exhausted the query-tuning options should you start adding indices, and then only on the columns that those queries actually need.

Using the correct column type and size for your database columns

Choose the smallest type that fits the data. If a column holds whole numbers, declare it as an integer type rather than double. This saves a lot of space when the table has many rows.
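As a rough worked example of the saving, the following sketch multiplies the per-value difference between an 8-byte double and a 4-byte int by the number of rows. The ColumnSizeSketch class is hypothetical, and the figures are C# type sizes; actual on-disk storage depends on the database engine and its row format, so treat the result as an estimate.

```csharp
// Hypothetical helper for illustration.
public static class ColumnSizeSketch
{
    // Bytes saved across all rows by storing a value as a 4-byte int
    // instead of an 8-byte double.
    public static long BytesSaved(long rowCount)
    {
        return rowCount * (sizeof(double) - sizeof(int));
    }
}
```

For a table with 10 million rows, BytesSaved(10000000) comes to 40,000,000 bytes, roughly 40 MB for a single column before any index on that column is counted.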

Avoiding correlated subqueries

A correlated subquery references values from its outer query, so the database may have to evaluate it once for every row of the outer query. This can significantly hurt query performance.

The following is one such example of a correlated subquery:

SELECT e.Name, e.City, (SELECT DepartmentName FROM EmployeeDepartment WHERE ID = e.DepartmentId) AS DepartmentName FROM Employee e
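A common way to avoid the correlated subquery is to express the lookup as a join, so that the two tables are matched as sets. The sketch below shows that shape in LINQ over in-memory collections, with the assumed SQL rewrite in a comment. The EmployeeRow, DepartmentRow, and EmployeeWithDepartment types are hypothetical stand-ins for the tables in the query above, and the rewrite assumes that ID is unique in EmployeeDepartment.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical row types standing in for the two tables.
public class DepartmentRow
{
    public int ID { get; set; }
    public string DepartmentName { get; set; }
}

public class EmployeeRow
{
    public string Name { get; set; }
    public string City { get; set; }
    public int DepartmentId { get; set; }
}

public class EmployeeWithDepartment
{
    public string Name { get; set; }
    public string City { get; set; }
    public string DepartmentName { get; set; }
}

public static class JoinSketch
{
    // Assumed set-based SQL rewrite of the correlated subquery:
    //   SELECT e.Name, e.City, d.DepartmentName
    //   FROM Employee e
    //   LEFT JOIN EmployeeDepartment d ON d.ID = e.DepartmentId
    public static List<EmployeeWithDepartment> Load(
        IEnumerable<EmployeeRow> employees,
        IEnumerable<DepartmentRow> departments)
    {
        var query =
            from e in employees
            join d in departments on e.DepartmentId equals d.ID into matches
            from d in matches.DefaultIfEmpty() // keeps employees without a department
            select new EmployeeWithDepartment
            {
                Name = e.Name,
                City = e.City,
                DepartmentName = d == null ? null : d.DepartmentName
            };
        return query.ToList();
    }
}
```

A left join is used rather than an inner join because the original subquery returns NULL, not a missing row, for an employee whose department is not found.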

Generic performance improvement tips

Here are a couple of pointers to improve the overall application performance in an ASP.NET Core Web Application.

Avoiding the Response.Redirect method

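To redirect the client, developers can call the Response.Redirect method with the target URL as a parameter. There is a small problem with this approach: the server answers with a redirect status, and the browser then sends a second request, which costs another round trip to the server. So, where possible, avoid Response.Redirect. Inside a controller, prefer returning RedirectToAction, which builds the target URL from the routing configuration instead of a hard-coded string. Bear in mind that RedirectToAction also answers with an HTTP redirect, so when the goal is to save the round trip, return the target view directly where the flow allows it.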

Using string builder

If your application involves a lot of string manipulation, it is preferable to use StringBuilder instead of the usual string concatenation. Because strings are immutable, each concatenation creates a new string object, whereas StringBuilder appends to a single internal buffer. The difference becomes significant when many strings are combined, for example inside a loop.
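The following minimal sketch builds the same comma-separated string both ways so the two approaches can be compared side by side. The CsvSketch class and its method names are hypothetical, chosen only for this illustration.

```csharp
using System.Text;

// Hypothetical helper for illustration.
public static class CsvSketch
{
    // Joins the numbers 1..count with commas using one StringBuilder buffer.
    public static string BuildWithStringBuilder(int count)
    {
        var sb = new StringBuilder();
        for (int i = 1; i <= count; i++)
        {
            if (i > 1) sb.Append(',');
            sb.Append(i);
        }
        return sb.ToString();
    }

    // Produces the same text, but each += allocates a new string
    // and copies the previous contents into it.
    public static string BuildWithConcatenation(int count)
    {
        string result = "";
        for (int i = 1; i <= count; i++)
        {
            if (i > 1) result += ",";
            result += i;
        }
        return result;
    }
}
```

Both methods return identical text; the difference is the number of intermediate strings created, which grows with count in the concatenation version.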

Summary

In this chapter, we have learned how to analyze the performance of web applications and which layers to target when improving the performance. Then we discussed how to improve the performance in each of the layers—the UI layer, the web/application layer, and the DB layer.