These principles form the fundamental pillars on which well-architected and well-designed systems must be built:

- Enable scalability:

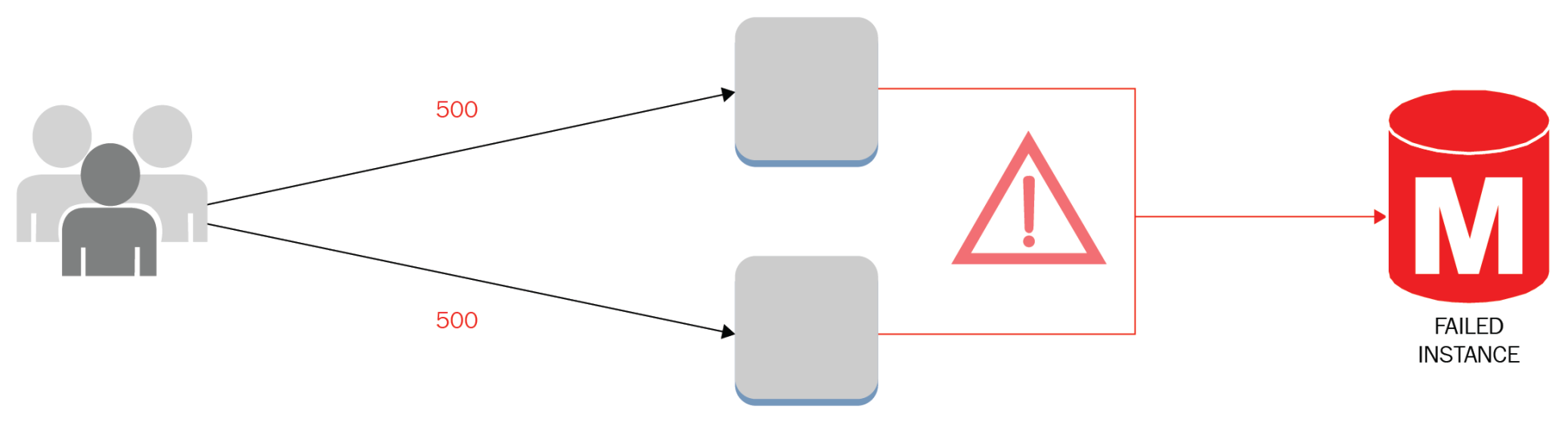

- Antipattern: Adding capacity manually and reactively rather than proactively. Passive detection of failures and service limits can result in application downtime and is prone to human error because there is little time to react:

From the diagram, we can see that instances take time to be fully usable, and the process is human-dependent.

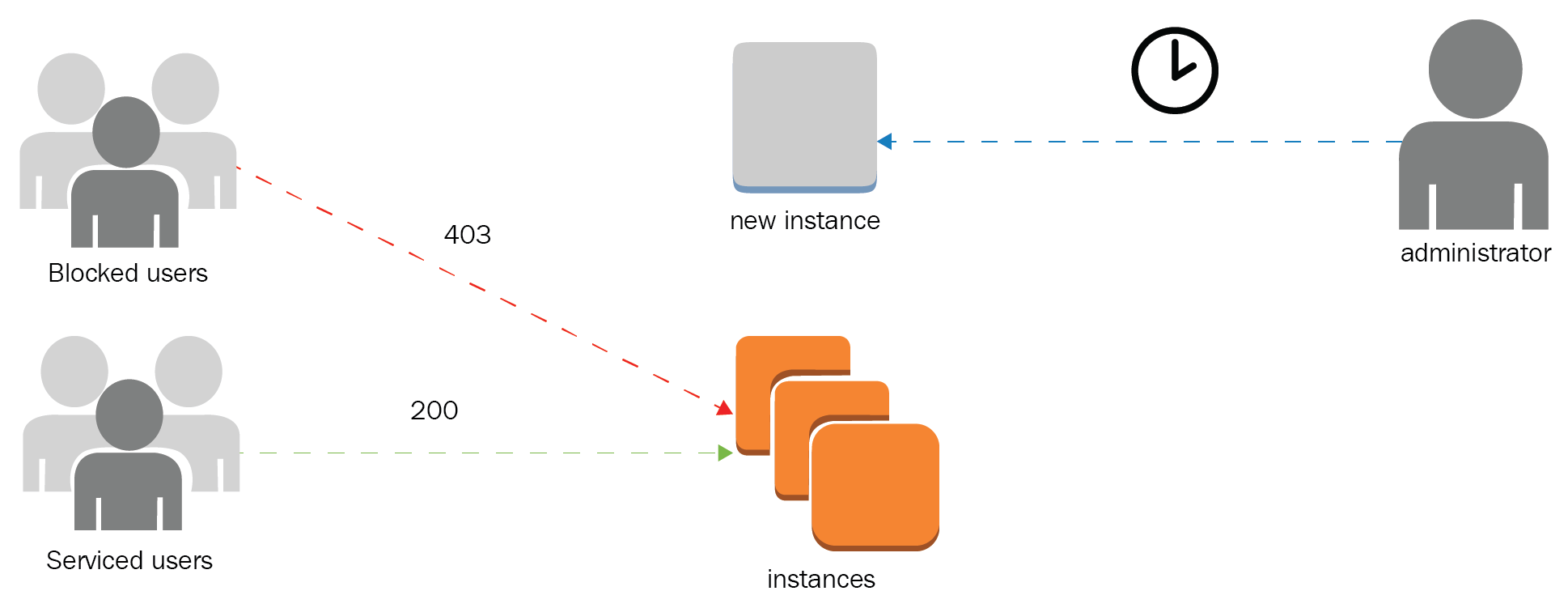

- Best practice: The elastic nature of AWS services makes it possible to manage changes in demand, adapt to consumer patterns, and reach global audiences. When a resource is not elastic on its own, it is possible to use Auto Scaling or serverless approaches:

Auto Scaling automatically spins up instances to compensate for demand.
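As a rough illustration of how a scaling decision can follow demand, the following sketch applies a simple proportional rule: the fleet grows or shrinks so that the average metric moves back toward a target. The function name, parameters, and limits are assumptions made for this example, and the rule is a simplification rather than the exact algorithm used by the AWS service.

```python
import math


def desired_capacity(current_instances, observed_metric, target_metric,
                     min_size=1, max_size=10):
    """Return the instance count that moves the average metric toward the target.

    Hypothetical helper: a proportional rule, clamped to the group limits.
    """
    if current_instances < 1 or target_metric <= 0:
        raise ValueError("need at least one instance and a positive target")
    # If 4 instances run at 90% CPU and the target is 60%, we need 4 * 90 / 60 = 6.
    wanted = math.ceil(current_instances * observed_metric / target_metric)
    # Never go below the minimum or above the maximum size of the group.
    return max(min_size, min(max_size, wanted))
```

Calling `desired_capacity(4, 90.0, 60.0)` asks for six instances, while a quiet period such as `desired_capacity(4, 30.0, 60.0)` lets the group shrink to two.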

- Automate your environment:

- Antipattern: Ignoring configuration changes and lacking a configuration management database (CMDB) can result in erratic behavior and loss of visibility, and can have a high impact on critical production systems. The absence of robust monitoring solutions results in fragile systems and slow responses to change requests, compromising security and the system's stability:

AWS Config records every change in the resources and provides a single source of truth for configuration changes.

- Best practice: Relying on automation, from scripts to specialized monitoring services, helps us gain reliability and makes every cloud operation secure and consistent. Early detection of failures and of deviations from normal parameters supports the process of fixing bugs and keeps issues from becoming risks. It is possible to establish a monitoring strategy that accounts for every layer of our systems. Artificial intelligence can be used to analyze the stream of changes in real time and react to these events as they occur, facilitating agile operations in the cloud:

AWS Config can be used to durably store configuration changes for later inspection, react in real time with push notifications, and feed real-time operations dashboards.
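The idea of storing every change and reacting to it as it happens can be sketched in a few lines. The record shape, the `open_ssh_rule` check, and the `ChangeLog` class below are hypothetical names invented for this example; they do not reproduce the AWS Config data model or API.

```python
from dataclasses import dataclass


@dataclass
class ChangeRecord:
    """Hypothetical snapshot of a resource after a configuration change."""
    resource_id: str
    resource_type: str
    configuration: dict


def open_ssh_rule(record):
    """Return True when the record is compliant (no SSH open to the world)."""
    if record.resource_type != "security_group":
        return True
    for rule in record.configuration.get("ingress", []):
        if rule.get("port") == 22 and rule.get("cidr") == "0.0.0.0/0":
            return False
    return True


class ChangeLog:
    """Keeps the full change history and notifies on every rule violation."""

    def __init__(self, rules, notify):
        self.history = []      # stored for later inspection
        self.rules = rules     # callables that return True when compliant
        self.notify = notify   # callable that receives an alert message

    def record(self, change):
        self.history.append(change)
        for rule in self.rules:
            if not rule(change):
                self.notify(f"{change.resource_id} violates {rule.__name__}")
```

In this sketch, `notify` could be any callable, for example `alerts.append` for a list of alerts or a function that pushes a message to an operations channel.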

- Use disposable resources:

- Antipattern: Running instances with low utilization or over capacity can result in higher costs. A poor understanding of each service's features and capabilities can result in higher expenses, lower performance, and administration overhead; you may not be using the right tool for the job. Immutable infrastructure is a key aspect of using disposable resources, because it lets you create and replace components in a declarative way:

Tagging helps you gain control and provides a means to orchestrate change management. The previous diagram shows how tagging can help discover compute resources to stop and start so that they run only during office hours in different regions.
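A minimal sketch of the tag-driven schedule could look like the following. The tag names (`Schedule`, `Office`), the office-to-offset table, and the working hours are assumptions made for this example; fixed UTC offsets ignore daylight saving time, which a real scheduler would need to handle.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical mapping from an "Office" tag value to a fixed UTC offset in hours.
UTC_OFFSET_HOURS = {"new-york": -5, "london": 0, "mumbai": 5.5}


def should_run(tags, now_utc, start_hour=8, end_hour=18):
    """Decide whether a tagged resource should be running at the given instant."""
    if tags.get("Schedule") != "office-hours":
        return True  # resources without the schedule tag are left untouched
    offset = timedelta(hours=UTC_OFFSET_HOURS.get(tags.get("Office"), 0))
    local = now_utc.astimezone(timezone(offset))
    is_weekday = local.weekday() < 5  # Monday is 0, Friday is 4
    return is_weekday and start_hour <= local.hour < end_hour


def plan(instances, now_utc):
    """Split instance ids into those to keep running and those to stop."""
    start = [i["id"] for i in instances if should_run(i["tags"], now_utc)]
    stop = [i["id"] for i in instances if not should_run(i["tags"], now_utc)]
    return start, stop
```

The output of `plan` is only a decision; the actual stop and start calls would be issued by whatever automation tool operates the fleet.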

- Best practice: Using reserved instances promotes a fast Return on Investment (ROI). Running instances only when they are needed, or using services such as Auto Scaling and AWS Lambda, limits consumption to actual demand, thus optimizing costs and operations. Practices such as Infrastructure as Code (IaC) give us the ability to create full-scale environments and perform production-level testing. When tests are over, you can tear down the testing environment:

Auto Scaling lets the customer specify the scaling needs without incurring additional costs.

- Loosely couple your components:

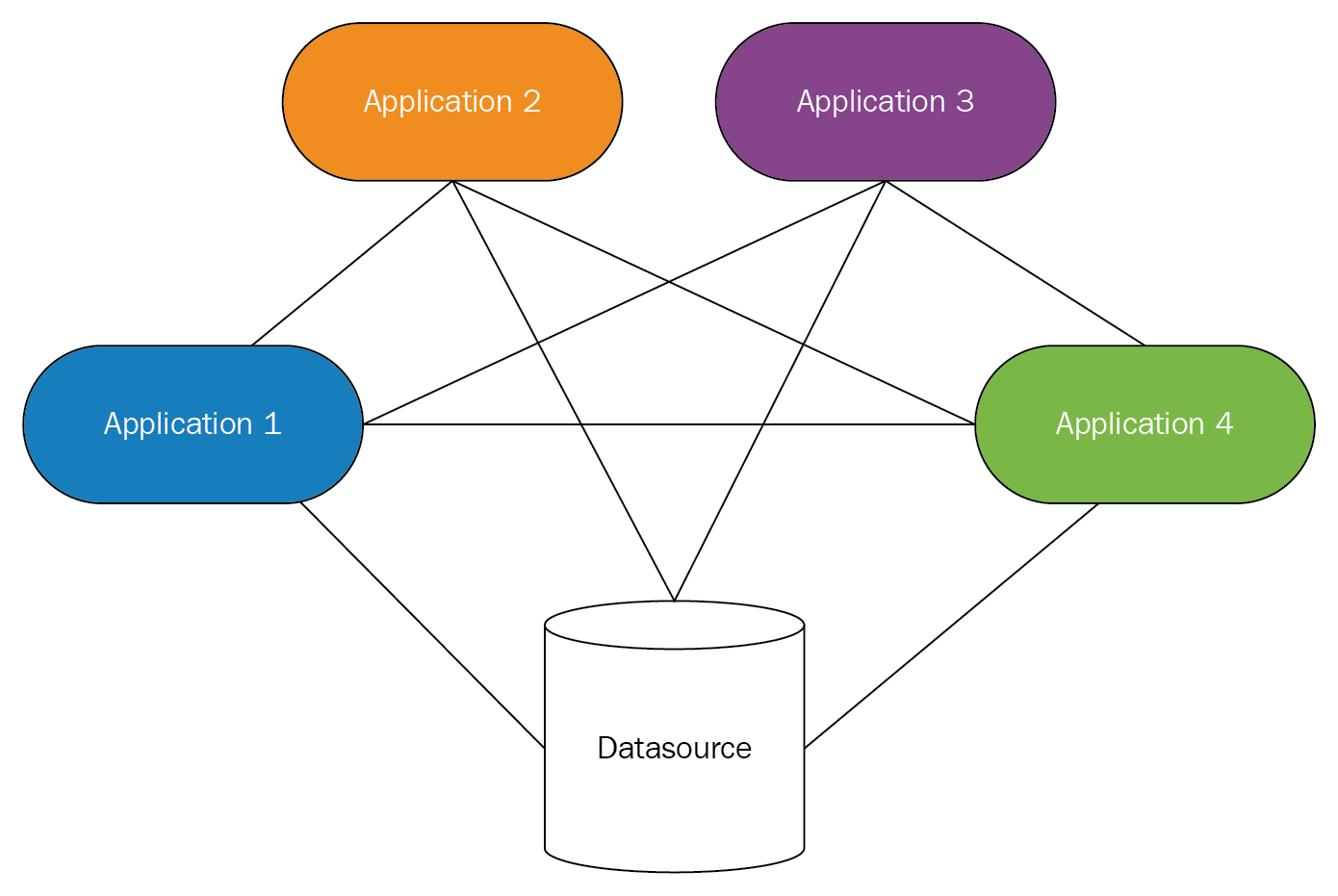

- Antipattern: Tightly coupled systems hinder scalability and create a high degree of dependency at the component and service levels. Systems become more rigid and less portable, and it becomes very complicated to introduce changes and improvements. Coupling happens not only at the software or infrastructure levels, but also with service providers: proprietary solutions or services create dependencies on a vendor, forcing us to consume its products with their inherent restrictions:

Software changes constantly, and we need to keep ourselves updated to avoid the erosion of the operating systems and technology stacks.

- Best practice: Using levels of indirection avoids direct communication and reduces state sharing between components, thus keeping coupling low and cohesion high. Extracting configuration data and exposing it as services permits evolution, flexibility, and adaptability to future changes.

It is possible to replace a component with another if the interface has not changed significantly. It is fundamental to use standards-based technologies and protocols that bring interoperability, such as HTTP and RESTful web services:

A good strategy to decouple is to use managed services.
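The level of indirection described above can be sketched with a queue placed between two components. Here the standard library `queue.Queue` stands in for a managed message queue; the `OrderService` and `EmailWorker` classes and the message format are hypothetical and exist only for illustration.

```python
import queue


class OrderService:
    """Producer: knows only the queue, not who consumes the messages."""

    def __init__(self, outbox):
        self.outbox = outbox

    def place_order(self, order_id):
        self.outbox.put({"type": "order_placed", "order_id": order_id})


class EmailWorker:
    """Consumer: can be replaced or scaled without touching the producer."""

    def __init__(self, inbox):
        self.inbox = inbox
        self.sent = []

    def drain(self):
        # Process everything currently waiting, then return.
        while True:
            try:
                message = self.inbox.get_nowait()
            except queue.Empty:
                return
            self.sent.append(message["order_id"])
```

Because both sides depend only on the message format, either one can be rewritten, redeployed, or taken offline for a while without the other noticing anything except a longer queue.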

- Design services, not servers:

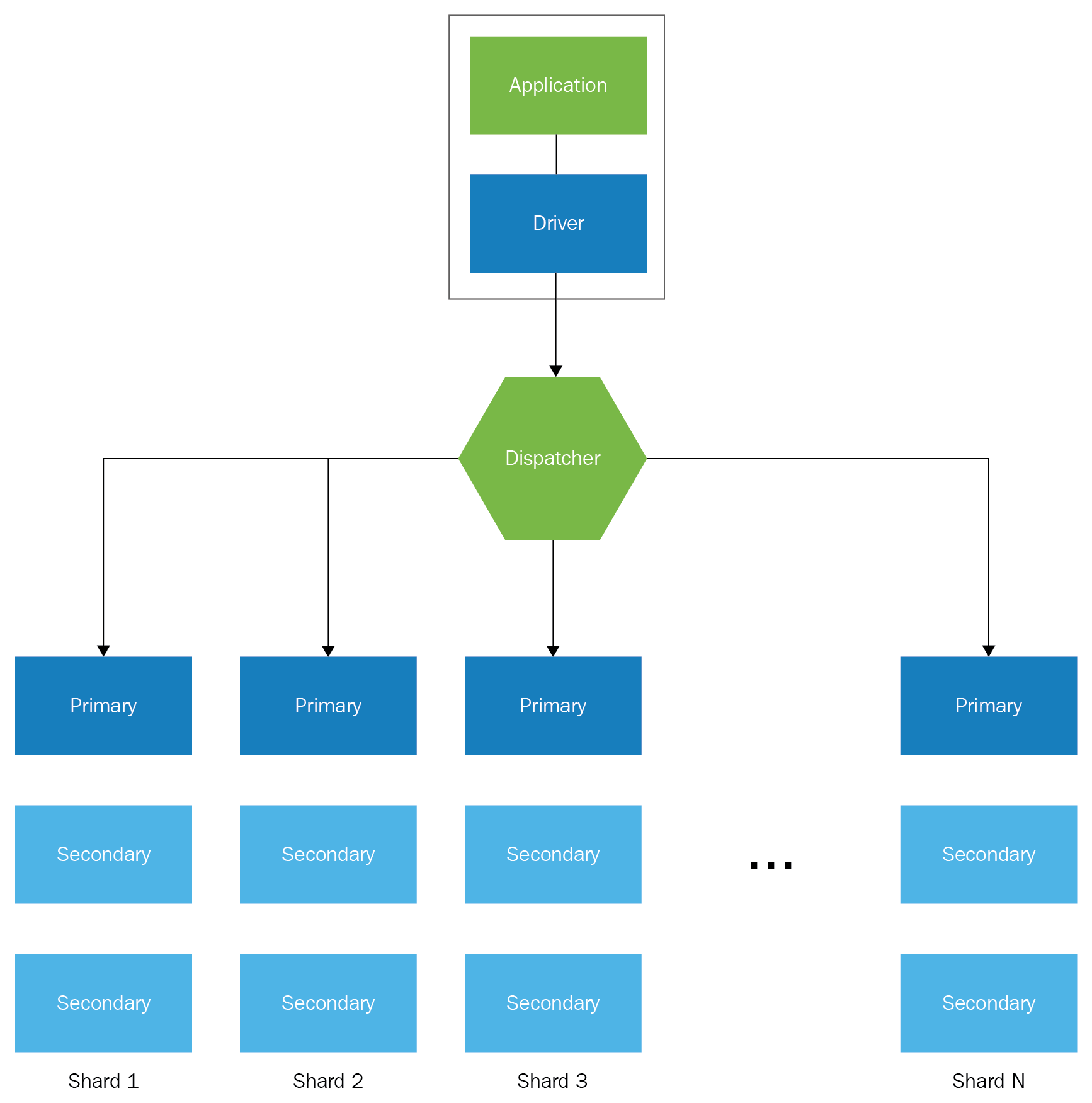

- Antipattern: Managing storage, caching, balancing, analysis, streaming, and so on is time-consuming and distracts us from the main purpose of the business: the creation of valuable solutions for our customers. We need to focus on product development and not on infrastructure and operations. Large-scale in-house solutions are complicated, and they require a high level of expertise and budget for research and fine-tuning:

This image depicts the architecture needed for a scalable NoSQL database.

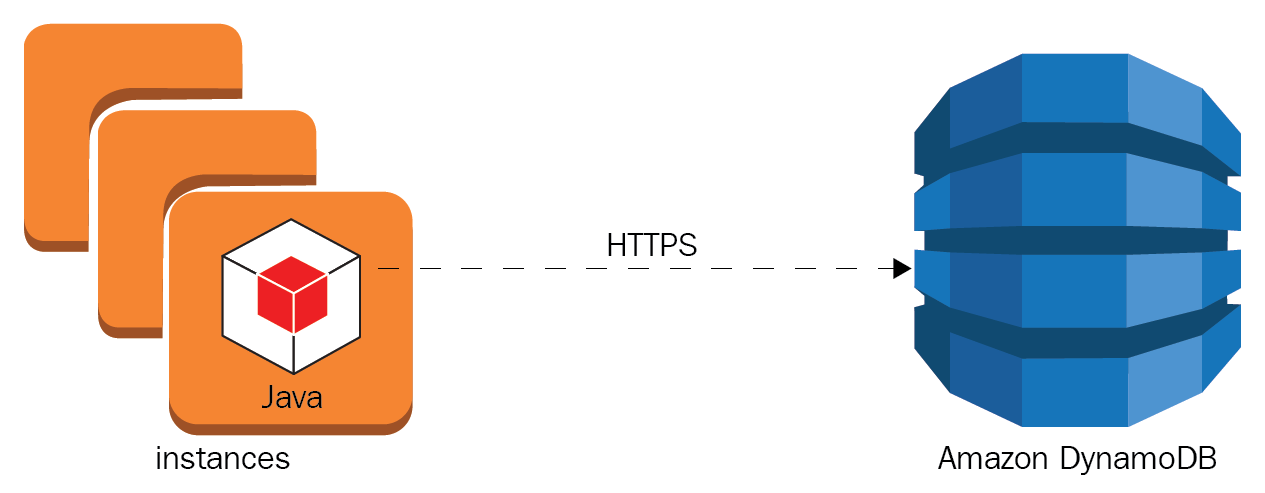

- Best practice: Cloud-based services abstract away the problem of operating these specialized services at large scale and offload operations management to a third party. Many AWS services are backed by Service Level Agreements (SLAs), and these SLAs are passed down to our customers. In the end, this improves the brand's reputation and our security posture, and it enables organizations to reach higher levels of compliance:

Communication in transit between AWS services is secure.

- Choose the right database solutions:

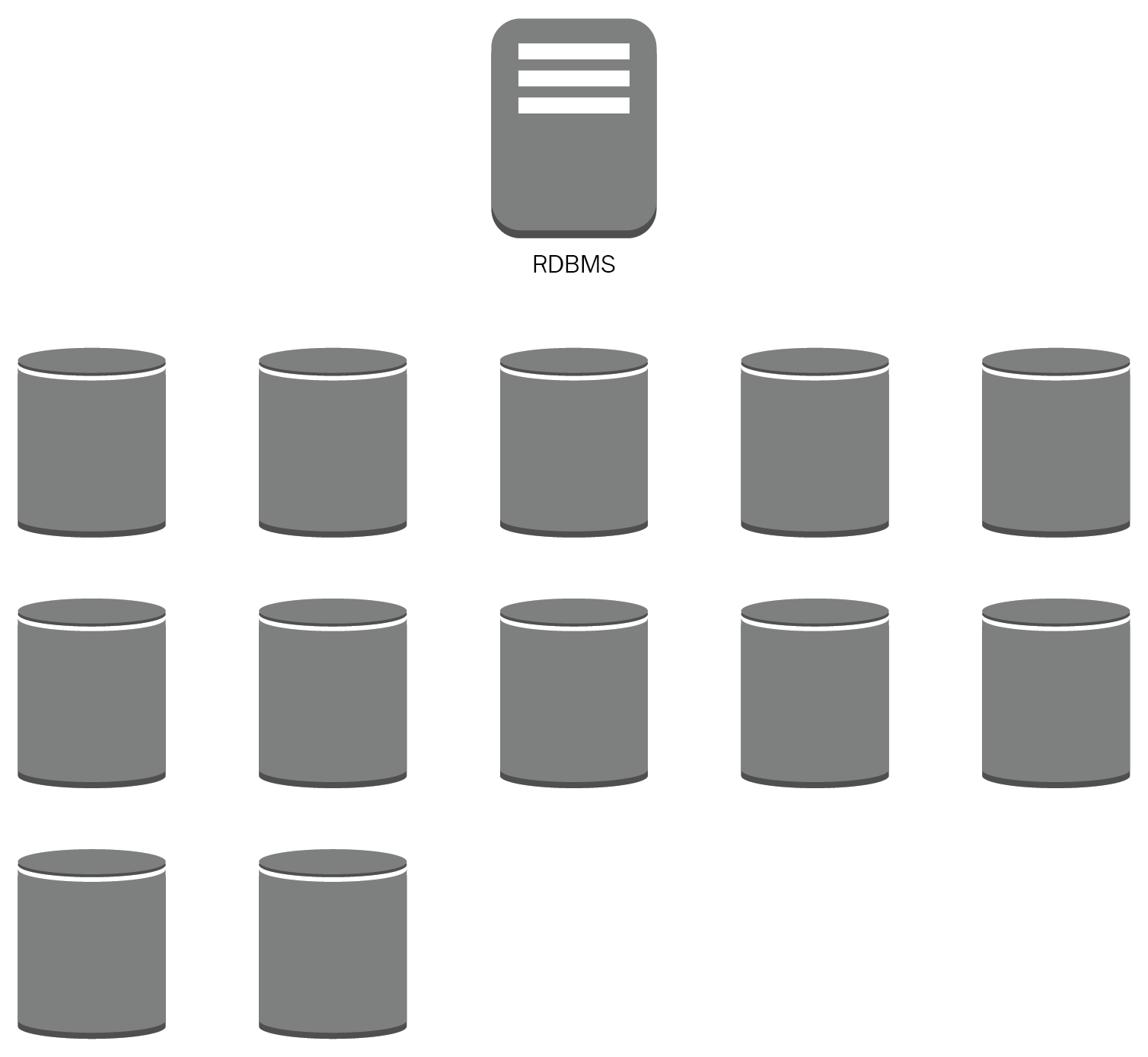

- Antipattern: Treating a relational database management system (RDBMS) as a silver bullet and using the same approach to solve any kind of problem, from temporary storage to Big Data. Not taking into account the usage patterns of our data, not having a data governance model, and classifying data incorrectly can leave data exposed to third parties. Working in silos increases the costs associated with extract, transform, and load (ETL) pipelines over dispersed data and keeps valuable knowledge out of reach:

Big Data and analytics workloads will require a lot of effort and capacity from an RDBMS datastore.

- Best practice: Classify information according to its level of sensitivity and risk in order to establish controls that support clear security objectives, such as confidentiality, integrity, and availability (CIA). Design solutions with specific-purpose services for ad hoc use cases, such as caching, distributed search engines, and non-relational databases. All of these contribute to the flexibility and adaptability of the business. Understanding data temperature improves the efficiency of storage and recovery solutions while optimizing costs. Working with managed databases makes it possible to analyze data at petabyte scale, process huge quantities of information in parallel, and create data-processing pipelines for batch and real-time patterns:

Some specific-purpose storage services in AWS

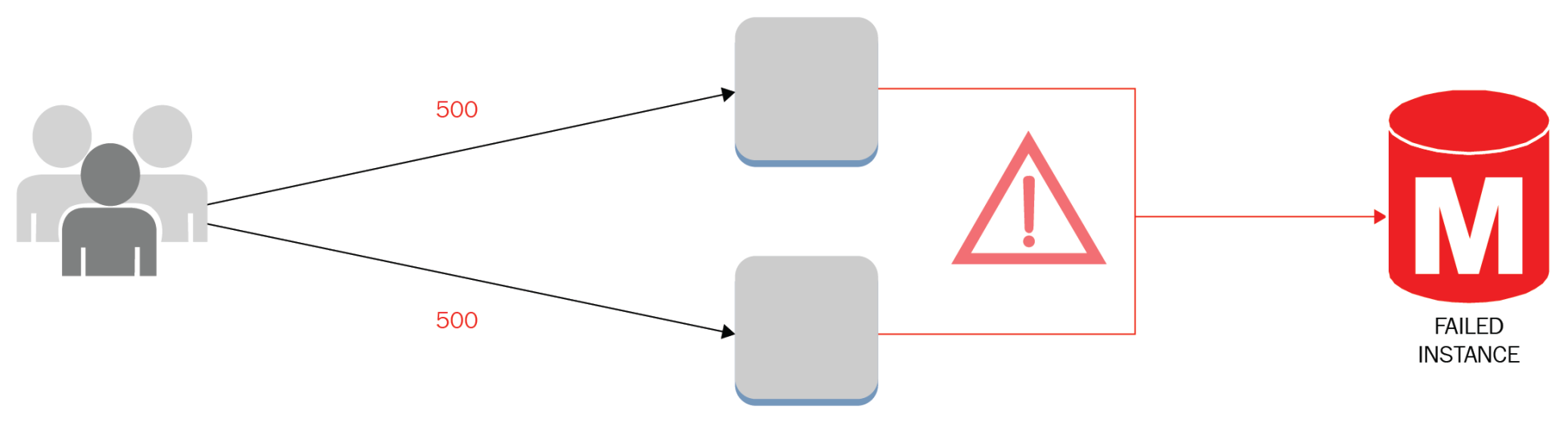
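Data temperature can be made concrete with a small classifier based on how recently an object was accessed. The thresholds of 30 and 90 days and the tier names are assumptions chosen for this sketch; real workloads would derive them from measured access patterns.

```python
from datetime import date


def data_temperature(last_accessed, today, warm_after_days=30, cold_after_days=90):
    """Classify data as hot, warm, or cold by the age of its last access."""
    age_in_days = (today - last_accessed).days
    if age_in_days < warm_after_days:
        return "hot"    # frequently read: keep on fast, low-latency storage
    if age_in_days < cold_after_days:
        return "warm"   # occasionally read: cheaper, slightly slower storage
    return "cold"       # rarely read: archival storage is usually enough
```

A life cycle policy can then move each object to the storage class that matches its temperature, so that fast storage is paid for only where it is actually used.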

- Avoid single points of failure:

- Antipattern: A chain is only as strong as its weakest link. Monolithic applications, a low-throughput network card, or web servers without enough RAM can bring a whole system down. Non-scalable resources can represent single points of failure, as can a database license that prevents the use of more CPU cores. Points of failure can also be related to people who perform unsupervised activities and processes without proper documentation; a lack of agility can also become a constraint in critical production operations:

- Best practice: Active-passive architectures avoid complete service outages, and redundant components enable business continuity by performing switchover and failover when necessary. The data and control planes must follow this N+1 design paradigm, avoiding bottlenecks and single-component failures that compromise the full operation. Managed services offer up to 99.95% availability regionally, offloading responsibilities from the customer. Experienced AWS solutions architects can design sophisticated solutions with SLAs of up to five nines:

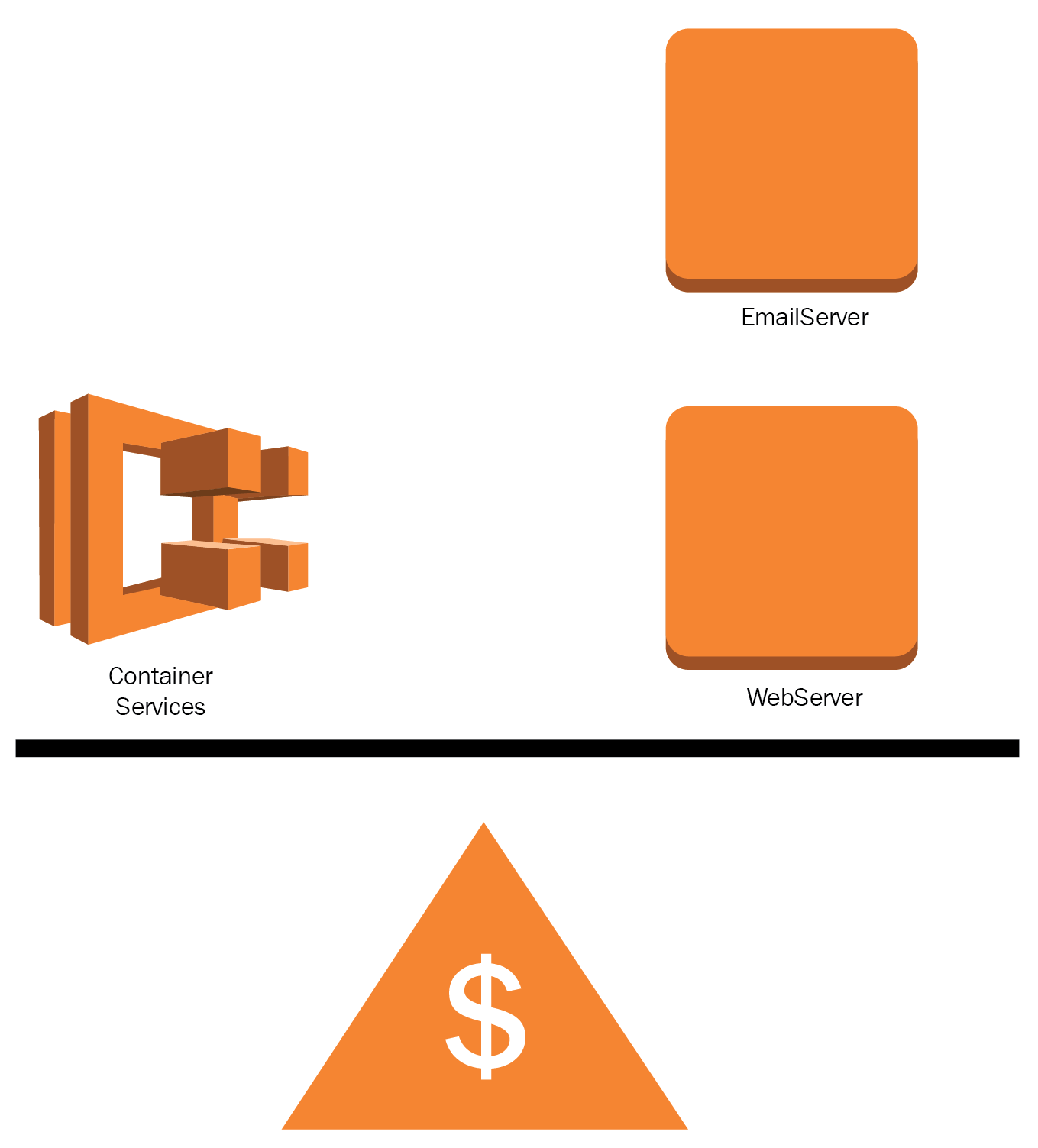
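The effect of redundancy on availability can be estimated with some simple arithmetic. The sketch below assumes that components fail independently of each other, which is an idealization: shared dependencies lower the real figures. The function names are chosen for this example.

```python
def parallel_availability(single, copies):
    """Availability of redundant copies where one healthy copy is enough."""
    return 1 - (1 - single) ** copies


def serial_availability(*components):
    """Availability of a chain in which every component must be healthy."""
    result = 1.0
    for availability in components:
        result *= availability
    return result


def downtime_minutes_per_year(availability):
    """Expected downtime over a 365-day year, in minutes."""
    return (1 - availability) * 365 * 24 * 60
```

Under these assumptions, two redundant copies of a 99% component reach about 99.99%, whereas chaining two 99% components in series drops the total to roughly 98%. An availability of 99.95% corresponds to a little under four and a half hours of downtime per year.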

- Optimize for cost:

- Antipattern: Using big servers for simple compute functions, such as authentication or email relay, or keeping instances running 24/7 when traffic is intermittent, can lead to elevated costs in the long term. Adding bigger instances to improve performance without a performance objective and proper tuning won't solve the problem. Poorly designed storage solutions will also cost more than expected. Billing can get out of control if expenses are not monitored in detail.

It is common to provision resources for testing purposes or to leave instances idle and then forget to remove them. Sometimes, instances need to communicate with resources or services in another geographic region, increasing complexity and costs due to inter-region transfer rates:

- Best practice: Replace traditional servers with containers or serverless solutions. Consider using Docker to maximize the use of instance resources, and AWS Lambda to consume compute resources only when needed. Reserving compute capacity can decrease your costs significantly, by up to 75% compared with On-Demand pricing. Leverage managed service features that can store transient data such as sessions, messages, streams, system metrics, and logs:

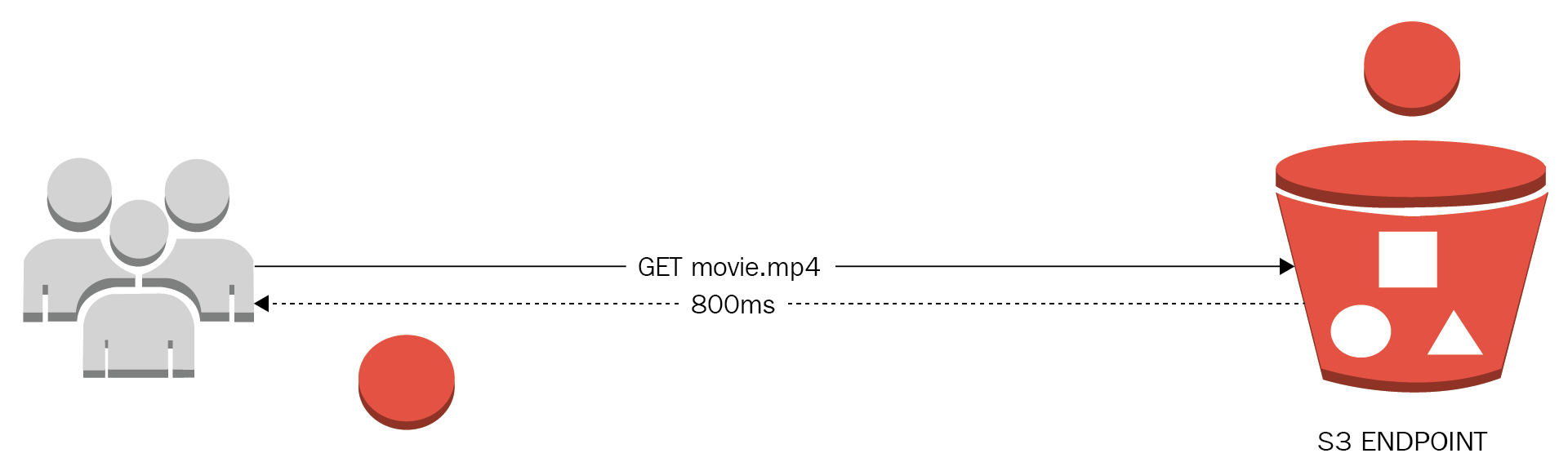
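Whether reserving capacity pays off depends on how many hours a day the workload actually runs. The following sketch compares the two options with made-up hourly rates; it assumes that reserved capacity is paid for around the clock and ignores upfront payments, so it is only a first approximation.

```python
def monthly_cost(hourly_rate, hours_per_day, days=30):
    """Cost of running one instance for the given daily hours over a month."""
    return hourly_rate * hours_per_day * days


def cheaper_option(on_demand_rate, reserved_rate, hours_per_day):
    """Compare on-demand usage for some hours a day with a full-time reservation."""
    on_demand = monthly_cost(on_demand_rate, hours_per_day)
    reserved = monthly_cost(reserved_rate, 24)  # the reservation is billed all day
    return "reserved" if reserved < on_demand else "on-demand"


def break_even_hours(on_demand_rate, reserved_rate):
    """Daily running hours above which the reservation becomes cheaper."""
    return 24 * reserved_rate / on_demand_rate
```

With illustrative rates of 0.10 on demand and 0.06 reserved, the break-even point is about 14.4 hours a day: an instance that runs only during office hours is cheaper on demand, while one that runs all day is cheaper reserved.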

- Use caching:

- Antipattern: Repeatedly accessing the same group of data, storing this data in a medium not optimized for read workloads, or making applications deal with the physical distance between the client and the service endpoint. The user gets a poor experience when the network is not available.

Other symptoms are increasing costs due to redundant read requests and cross-region transfer rates, the absence of a storage life cycle, and the lack of metrics that can warn about the usage patterns of data:

- Best practice: Identify the most used queries and objects, and optimize access to this information by transferring a copy of the data to the location closest to your end users. In-memory storage technologies make it possible to achieve microsecond latencies and to retrieve huge amounts of data. It is necessary to use caching strategies at multiple levels, even storing commonly accessed data directly on the client, for example, mobile applications that use search catalogs.

Caching services can lower your costs and offload backend stress by moving this data closer to the consumer. It is even possible to offer a degraded experience instead of a total service disruption in the case of a backend failure:
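A minimal sketch of this behavior is a cache-aside wrapper with a time to live (TTL) that falls back to a stale copy when the backend fails. The `CacheAside` class, its parameters, and the injectable clock are assumptions made for this example; a managed caching service would replace the in-process dictionary.

```python
import time


class CacheAside:
    """Read-through cache with a TTL and a stale fallback on backend errors."""

    def __init__(self, loader, ttl_seconds, clock=time.monotonic):
        self._loader = loader      # callable that fetches a value from the backend
        self._ttl = ttl_seconds
        self._clock = clock        # injectable so that tests can control time
        self._entries = {}         # key -> (value, stored_at)

    def get(self, key):
        entry = self._entries.get(key)
        now = self._clock()
        if entry is not None and now - entry[1] < self._ttl:
            return entry[0]        # fresh hit: the backend is not contacted
        try:
            value = self._loader(key)
        except Exception:
            if entry is not None:
                return entry[0]    # degraded mode: serve the expired copy
            raise                  # nothing cached, so the failure surfaces
        self._entries[key] = (value, now)
        return value
```

Repeated reads within the TTL never reach the backend, which removes redundant requests, and an outage only affects keys that were never cached.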

- Secure your infrastructure everywhere:

- Antipattern: Trusting only the operating system's firewalls and naively assuming that every workload in the cloud is 100% secure out of the box. Waiting until a security breach occurs to take corrective measures, perhaps thinking that only Fortune 500 companies are victims of Distributed Denial of Service (DDoS) attacks. Implementing HTTPS but no security at rest greatly compromises the organization's assets and makes them easy prey for ransomware attacks.

Using the root account and lacking log management structures takes visibility and auditability away. Not having an InfoSec department or ignoring security best practices can lead to a complete loss of credibility and of our clients:

- Best practice: Security must be a holistic effort. It must be implemented at every layer using a systemic approach. Security needs to be automated so that it can react immediately when a breach or unusual activity is found; this way, it is possible to remediate issues as they are detected. In AWS, it is possible to segregate network traffic and use managed services that help us protect valuable assets with complete visibility of operations and management.

Data at rest can be safeguarded with encryption, using cryptographic keys generated on demand and delegating the management and usage of these keys to users designated for these jobs. Data in transit must be protected by standard transmission protocols such as IPSec and TLS:

The CIA triad is a commonly used model to achieve information security.
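On the client side, protecting data in transit with TLS can be sketched with the Python standard library `ssl` module. The helper name `strict_client_context` and the choice of TLS 1.2 as the minimum version are assumptions for this example; an organization's own policy may require a different floor.

```python
import ssl


def strict_client_context():
    """Build a client-side TLS context that refuses old protocol versions."""
    # The default context already verifies certificates and host names.
    context = ssl.create_default_context()
    # Assumption for this sketch: anything older than TLS 1.2 is rejected.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

The resulting context can be passed to standard library clients that accept one, for example `urllib.request.urlopen(url, context=strict_client_context())`.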

© 2018 Packt Publishing Limited All Rights Reserved
