It is important to understand how the different OpenStack services work together to produce a running virtual machine. We have already seen how a request is processed in OpenStack via APIs.

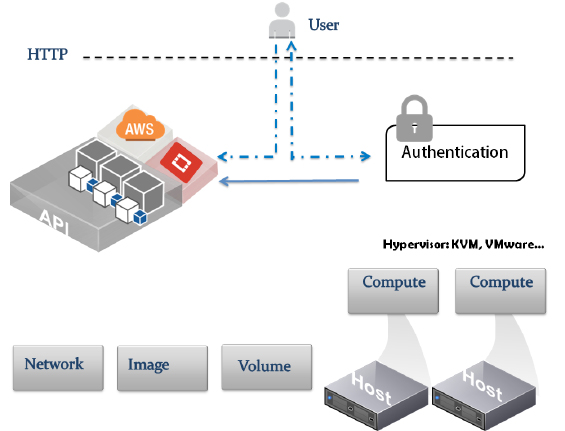

Let's figure out how things work by referring to the following simple architecture diagram:

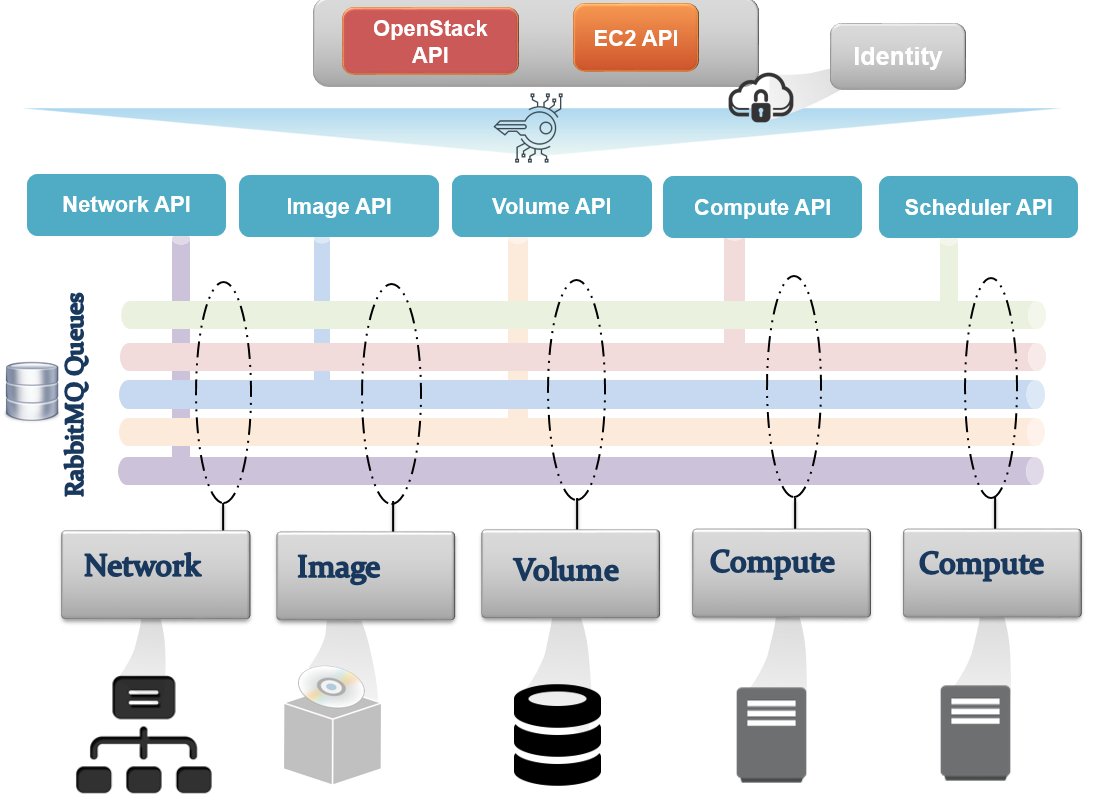

Launching a virtual machine involves the main OpenStack services that supply the building blocks of an instance: compute, network, storage, and the base image. As shown in the previous diagram, these services communicate over a message bus, which they use to submit and retrieve RPC calls. At each step of the provisioning process, the information is verified and passed on by the relevant OpenStack service via the message bus. From an architecture perspective, the subsystem calls are defined and handled at the OpenStack API endpoints of Nova, Glance, Cinder, and Neutron.
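The message-bus interaction can be pictured with a toy, in-process sketch. It assumes one in-memory queue per service in place of a real message broker, and the topic names, method names, and the `cast` and `consume` helpers are hypothetical illustrations rather than actual OpenStack RPC identifiers.

```python
import queue

# Toy stand-in for the message bus: one in-memory queue per service topic.
# Topic and method names are illustrative, not real OpenStack RPC names.
bus = {"scheduler": queue.Queue(), "compute": queue.Queue()}


def cast(topic, method, **kwargs):
    """Submit a one-way, RPC-style message to a topic and return immediately."""
    bus[topic].put({"method": method, "args": kwargs})


def consume(topic):
    """Retrieve the next pending message for a topic."""
    return bus[topic].get_nowait()


# The API layer asks the scheduler to place an instance...
cast("scheduler", "select_host", instance="vm-1")
request = consume("scheduler")

# ...and the scheduler, having picked a host, forwards the work to compute.
cast("compute", "build_instance",
     instance=request["args"]["instance"], host="node-1")
build = consume("compute")
```

The point of the sketch is only that services never call each other directly: each one submits a message and another retrieves it from the bus.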

In addition, API communication between OpenStack services must be authenticated before it can be trusted, which is the role of Keystone.

Starting with the identity service, the following steps briefly summarize the provisioning workflow based on API calls in OpenStack:

- Calling the identity service for authentication

- Generating a token to be used for subsequent calls

- Contacting the image service to list and retrieve a base image

- Processing the request to the compute service API

- Processing compute service calls to determine security groups and keys

- Calling the network service API to determine available networks

- Choosing the hypervisor node through the compute scheduler service

- Calling the block storage service API to allocate a volume to the instance

- Spinning up the instance on the hypervisor via the compute service API call

- Calling the network service API to allocate network resources to the instance
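The steps above can be sketched as plain data, together with the request body for the first step. This is a minimal sketch, assuming the Identity API v3 password method (a `POST` to `/v3/auth/tokens`). The `PROVISIONING_STEPS` list and the `password_auth_body` helper are names introduced here for illustration, and the credentials are placeholders.

```python
import json

# Ordered (service, action) pairs mirroring the steps listed above.
PROVISIONING_STEPS = [
    ("identity", "authenticate"),
    ("identity", "generate token"),
    ("image", "list and retrieve base image"),
    ("compute", "process boot request"),
    ("compute", "determine security groups and keys"),
    ("network", "determine available networks"),
    ("compute", "choose hypervisor node (scheduler)"),
    ("block storage", "allocate volume"),
    ("compute", "spin up instance on hypervisor"),
    ("network", "allocate network resources"),
]


def password_auth_body(username, password, project, domain="Default"):
    """Build the JSON body for an Identity v3 password authentication request.

    Illustrative helper: it only constructs the payload and sends nothing.
    """
    return {
        "auth": {
            "identity": {
                "methods": ["password"],
                "password": {
                    "user": {
                        "name": username,
                        "domain": {"name": domain},
                        "password": password,
                    }
                },
            },
            # Scope the token to a project so later service calls can use it.
            "scope": {
                "project": {"name": project, "domain": {"name": domain}}
            },
        }
    }


# Placeholder credentials, for illustration only.
body = password_auth_body("demo", "secret", "demo")
print(json.dumps(body, indent=2))
for number, (service, action) in enumerate(PROVISIONING_STEPS, start=1):
    print(f"{number:2d}. {service}: {action}")
```

Every later step in the list reuses the token obtained from this first request, which is why authentication comes first.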

It is important to keep in mind that tokens in OpenStack are time-limited, and that a token accompanies every API call and service request. One of the major causes of a failed provisioning operation in OpenStack is the expiration of the token partway through the subsequent API calls. Additionally, token management has changed across OpenStack releases. Prior to the Liberty release, two different approaches were used:
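One way to guard against mid-workflow expiry is to check the remaining lifetime of a token before starting a long series of calls. The following is a minimal sketch, assuming the expiry is available as an ISO 8601 UTC timestamp ending in `Z`. The `parse_expiry` and `needs_refresh` helpers and the five-minute margin are illustrative choices, not part of any OpenStack client.

```python
from datetime import datetime, timedelta, timezone


def parse_expiry(expires_at):
    """Parse an ISO 8601 UTC timestamp such as '2016-01-01T12:00:00.000000Z'."""
    # Replace the trailing 'Z' so older Python versions can parse the offset.
    return datetime.fromisoformat(expires_at.replace("Z", "+00:00"))


def needs_refresh(expires_at, now=None, margin=timedelta(minutes=5)):
    """Return True if the token expires within `margin` of `now`."""
    now = now or datetime.now(timezone.utc)
    return parse_expiry(expires_at) - now <= margin


# Example with a fixed clock so the outcome does not depend on the wall time.
clock = datetime(2016, 1, 1, 11, 0, tzinfo=timezone.utc)
fresh = needs_refresh("2016-01-01T12:00:00.000000Z", now=clock)
stale = needs_refresh("2016-01-01T11:03:00.000000Z", now=clock)
```

A caller would request a new token whenever `needs_refresh` returns `True`, rather than discovering the expiry through a failed API call.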

- Universally Unique Identifier (UUID): Within Keystone version 2, a UUID token is generated and passed along with every API call between client services, then sent back to Keystone for validation. This approach has been shown to degrade the performance of the identity service.

- Public Key Infrastructure (PKI): Within Keystone version 3, tokens are no longer validated at each API call by Keystone. API endpoints can verify the token by checking the Keystone signature that was added when the token was first generated.
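The difference between the two approaches can be illustrated with a simplified sketch. It is not how Keystone implements either format: real PKI tokens carry certificate-based signatures, whereas this sketch swaps in an HMAC with a shared key so that it runs on the standard library alone. The key, the in-memory token store, and all function names are hypothetical.

```python
import hashlib
import hmac
import uuid

SIGNING_KEY = b"demo-key"    # hypothetical key, for illustration only
issued_uuid_tokens = set()   # stands in for the identity service's token store


def issue_uuid_token():
    """Issue an opaque token that means nothing without the token store."""
    token = uuid.uuid4().hex
    issued_uuid_tokens.add(token)
    return token


def validate_uuid_token(token):
    """UUID style: every validation is a lookup at the identity service."""
    return token in issued_uuid_tokens


def _sign(payload):
    return hmac.new(SIGNING_KEY, payload.encode(), hashlib.sha256).hexdigest()


def issue_signed_token(payload):
    """Signed style: the token carries its own proof of origin."""
    return f"{payload}.{_sign(payload)}"


def verify_signed_token(token):
    """Signed style: an endpoint checks the signature locally, with no lookup."""
    payload, _, signature = token.rpartition(".")
    return hmac.compare_digest(signature, _sign(payload))


uuid_token = issue_uuid_token()
signed_token = issue_signed_token("user=demo")
```

With the UUID style, the store lookup happens on every call, which is where the load on the identity service comes from. With the signed style, any endpoint that can check the signature validates the token by itself.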

Starting from the Kilo release, token handling in Keystone progressed with the introduction of more sophisticated cryptographic token formats, such as Fernet. The new implementation helps to tackle the token performance issues noticed with UUID and PKI tokens. Fernet is fully supported in the Mitaka release, and the community is pushing to adopt it as the default. PKI tokens, in turn, are deprecated in favor of Fernet tokens in the releases that follow Kilo.

More advanced topics regarding additions introduced in Keystone are covered briefly in Chapter 3, OpenStack Cluster – The Cloud Controller and Common Services.