What is Kubernetes?

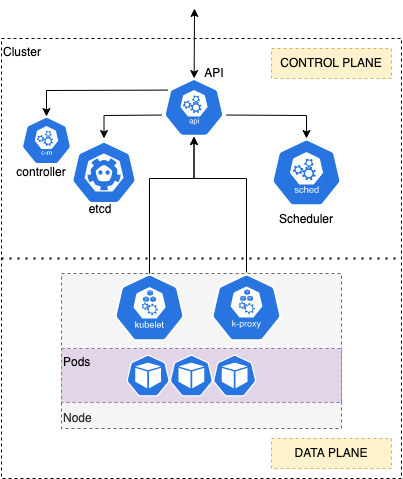

Kubernetes is an open source container orchestrator originally developed by Google but now seen as the de facto container platform for many organizations. Kubernetes is deployed as clusters containing a control plane that provides an API that exposes the Kubernetes operations, a scheduler that schedules containers (Pods are discussed next) across the worker nodes, a datastore to store all cluster data and state (etcd), and a controller that manages jobs, failures, and restarts.

Figure 1.5 – An overview of Kubernetes

The cluster also contains the worker nodes that make up the data plane. Each node runs the kubelet agent, which makes sure that the containers assigned to that node are running, and kube-proxy, which manages the networking for the node.

One of the major advantages of Kubernetes is that all the resources are defined as objects that can be created, read, updated, and deleted. The next section will review the major K8s objects, or “kinds” as they are called, that you will typically be working with.
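As a rough illustration of what "defined as objects" means, the sketch below shows the outer layout that Kubernetes manifests share: an `apiVersion`, a `kind`, and `metadata`. A Namespace is used here only because it is one of the smallest objects; the name `demo` is a placeholder and does not come from the text.

```yaml
# Minimal sketch of the common object layout (illustrative only).
apiVersion: v1      # API version that serves this kind
kind: Namespace     # the type of object being described
metadata:
  name: demo        # hypothetical name, chosen for illustration
```

Creating, reading, updating, or deleting this object is then a matter of sending the corresponding request for it to the API.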

Key Kubernetes API resources

Containerized applications are deployed and launched on one or more worker nodes using the API. The API provides an abstract object called a Pod, which is defined as one or more containers sharing the same Linux namespaces, cgroups, network, and storage resources. Let’s look at a simple example of a Pod:

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.14.2
        ports:
        - containerPort: 80

In this example, kind defines the API object, a single Pod, and metadata contains the name of the Pod, in this case, nginx. The spec section contains one container, which will use the nginx 1.14.2 image and expose a port (80).
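To make the "one or more containers sharing network and storage" part of the definition concrete, here is a minimal sketch of a two-container Pod. It is an assumption-laden variation on the example above, not a manifest from the text: the Pod name, the `content-writer` container, the `busybox:1.36` image, and the `shared-html` volume are all hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-with-sidecar        # hypothetical name
spec:
  volumes:
    - name: shared-html           # scratch volume that lives as long as the Pod
      emptyDir: {}
  containers:
    - name: nginx
      image: nginx:1.14.2
      ports:
        - containerPort: 80
      volumeMounts:
        - name: shared-html
          mountPath: /usr/share/nginx/html   # nginx serves files from here
    - name: content-writer        # hypothetical helper container
      image: busybox:1.36
      # Rewrites the page every 5 seconds so nginx always has fresh content.
      command: ["sh", "-c", "while true; do date > /html/index.html; sleep 5; done"]
      volumeMounts:
        - name: shared-html
          mountPath: /html        # same volume, different mount path
```

Both containers mount the same volume, so a file written by one is visible to the other, and because they share the Pod's network they can also reach each other over localhost.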

In most cases, you want to deploy multiple Pods across multiple nodes and maintain that number of Pods even if a node fails. To do this, you use a Deployment, which keeps your Pods running. A Deployment is a Kubernetes kind that lets you define the number of replicas (Pods) you want, along with the Pod specification we saw earlier. Let’s look at an example that builds on the nginx Pod:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.14.2
            ports:
            - containerPort: 80

Finally, you want to expose your Pods outside the cluster. By default, the Pods created by a Deployment are only reachable from other Pods inside the cluster. There are various Service types, but let’s discuss the NodePort Service here, which opens the same port on every node in the cluster (the port is allocated dynamically unless you specify one).

To do this, you use the Service kind, an example of which is shown here:

    kind: Service
    apiVersion: v1
    metadata:
      name: nginx-service
    spec:
      type: NodePort
      selector:
        app: nginx
      ports:
      - port: 80
        nodePort: 30163

In the preceding example, the Service exposes port 30163 on every host in the cluster and maps it back to any Pod that has the label app=nginx (set in the Deployment), even if that host is not running one of those Pods. It translates the port value to port 80, which is what the nginx Pod is listening on.
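The port translation described above involves three separate fields, one of which the example leaves implicit. The sketch below is the same Service with `targetPort` written out; it is meant as an illustration of how the fields relate rather than a required change.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  type: NodePort
  selector:
    app: nginx            # matches the Pod label set in the Deployment
  ports:
    - port: 80            # port on the Service's cluster-internal IP
      targetPort: 80      # port the container listens on; defaults to `port` when omitted
      nodePort: 30163     # port opened on every node (default allowed range: 30000-32767)
```

Traffic arriving at any node on port 30163 is forwarded to port 80 of a matching Pod, wherever in the cluster that Pod is running.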

In this section, we’ve looked at the basic Kubernetes architecture and some basic API objects. In the final section, we will review some standard deployment architectures.