Why do software projects fail?

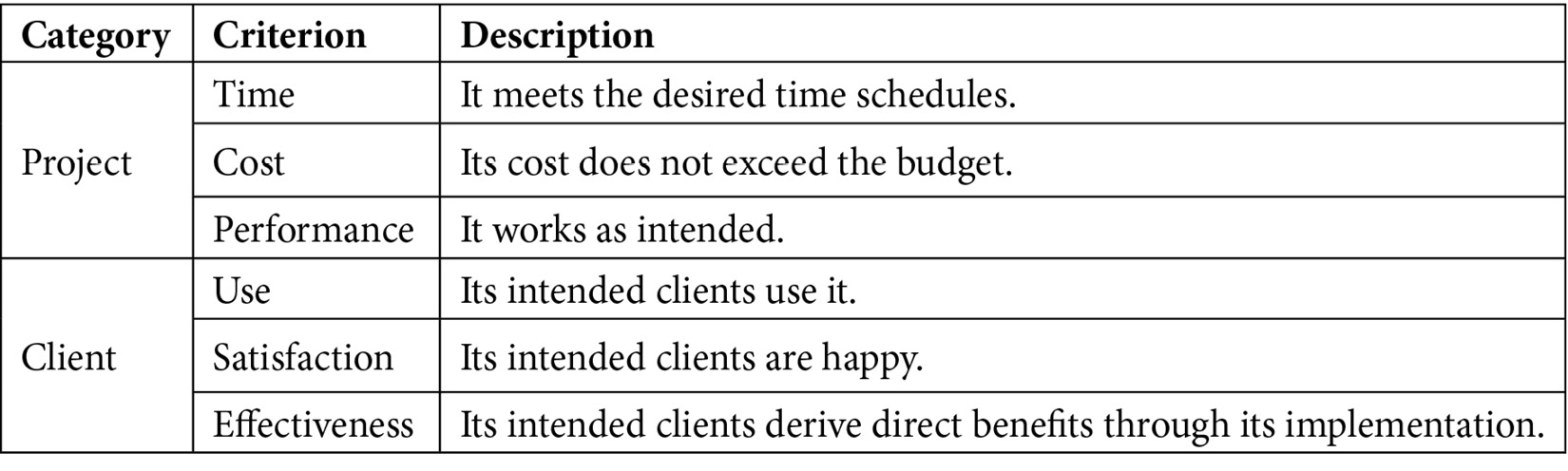

According to the project success report published in the Project Management Journal of the PMI, the following six factors need to be true for a project to be deemed successful:

Table 1.1 – Project success factors

With all of these criteria being applied to assess project success, a large percentage of projects fail for one reason or another. Let’s examine some of the top reasons in more detail.

Inaccurate requirements

PMI’s Pulse of the Profession report from 2017 highlights a stark fact: a vast majority of projects fail due to inaccurate or misinterpreted requirements. If the wrong thing gets built, it is impossible to deliver something that clients can use, are happy with, and that makes them more effective at their jobs, let alone to deliver it on time and within budget.

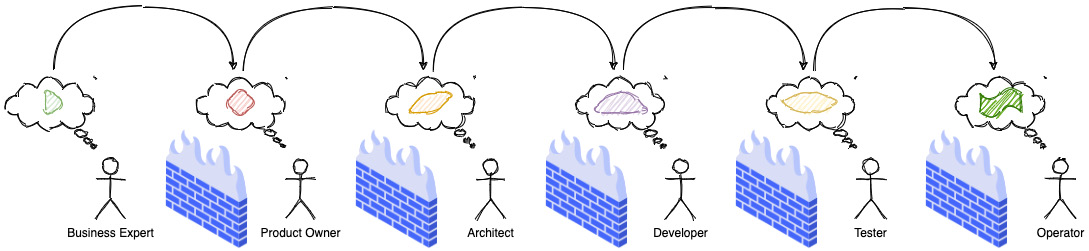

IT teams, especially in large organizations, are staffed with mono-skilled roles, such as UX designer, developer, tester, architect, business analyst, project manager, product owner, and business sponsor. In a lot of cases, these people are part of distinct organizational units or departments, each with its own set of priorities and motivations. To make matters worse, the geographical separation between these people keeps increasing. The need to keep costs down and the recent COVID-19 ecosystem do not help matters either.

Figure 1.1 – Silo mentality and the loss of information fidelity

All this results in a loss in fidelity of information at every stage in the assembly line, which then results in misconceptions, inaccuracies, delays, and eventually failure!

Too much architecture

Writing complex software is quite a task. You cannot just hope to sit down and start typing code—although that approach might work in some trivial cases. Before translating business ideas into working software, a thorough understanding of the problem at hand is necessary. For example, it is not possible (or is at least extremely hard) to build credit card software without understanding how credit cards work in the first place. To communicate your understanding of a problem, it is not uncommon to create software models of the problem before writing code. This model or collection of models represents the understanding of the problem and the architecture of the solution.

Efforts to create a perfect model of the problem, one that is accurate in a very broad context, are not dissimilar to the proverbial quest for the holy grail. Those accountable for producing the architecture can get stuck in analysis paralysis and/or big design upfront, producing artifacts that are too high level, wishful, gold-plated, buzzword-driven, or disconnected from the real world (often several of these at once), while not solving any real business problems. This kind of lock-in can be especially detrimental during the early phases of the project, when team members are still building their knowledge of the problem. Needless to say, projects adopting such approaches find it hard to succeed consistently.

Tip

For a more comprehensive list of modeling anti-patterns, refer to Scott W. Ambler’s website (http://agilemodeling.com/essays/enterpriseModelingAntiPatterns.htm) and book, Agile Modeling: Effective Practices for eXtreme Programming and the Unified Process, dedicated to the subject.

Too little architecture

Agile software delivery methods emerged in the late 1990s and early 2000s in response to heavyweight processes collectively known as waterfall. These processes seemed to favor big design upfront and abstract ivory tower thinking based on wishful, ideal-world scenarios. This was based on the premise that thinking things out well in advance saves serious development headaches later on as the project progresses.

In contrast, agile methods seem to favor a much more nimble and iterative approach to software development with a high focus on working software over other artifacts, such as documentation. Most teams these days claim to practice some form of iterative software development. However, in their eagerness to claim conformance to a specific family of agile methodologies rather than to the underlying principles, a lot of teams confuse having just enough architecture with having no perceptible architecture. This results in a situation where adding new features or enhancing existing ones takes a lot longer than it used to, which then accelerates the devolution of the solution into the dreaded big ball of mud (http://www.laputan.org/mud/mud.html#BigBallOfMud).

Excessive incidental complexity

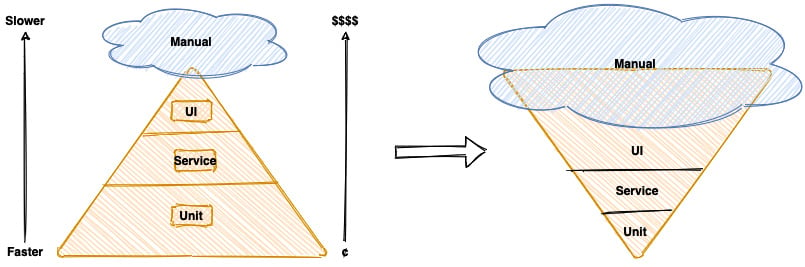

Mike Cohn popularized the notion of the test pyramid, where he talks about how a large number of unit tests should form the foundation of a sound testing strategy, with numbers decreasing significantly as you move up the pyramid. The rationale here is that as you move up the pyramid, the cost of upkeep rises sharply while tests run many times slower. In reality, though, a lot of teams seem to adopt a strategy that is the exact opposite of this, known as the testing ice cream cone, as depicted here:

Figure 1.2 – Testing strategy: expectation versus reality

The testing ice cream cone is a classic case of what Fred Brooks calls incidental complexity in his seminal paper titled No Silver Bullet: Essence and Accident in Software Engineering (http://worrydream.com/refs/Brooks-NoSilverBullet.pdf). All software has some amount of essential complexity that is inherent to the problem being solved. This is especially true when creating solutions for non-trivial problems. However, incidental or accidental complexity is not directly attributable to the problem itself, but is caused by the limitations of the people involved, their skill levels, and the tools and/or abstractions being used. Not keeping tabs on incidental complexity causes teams to veer away from focusing on the real problems, the ones whose solutions provide the most value. It naturally follows that such teams reduce their odds of success appreciably.

Uncontrolled technical debt

Financial debt is the act of borrowing money from an outside party to quickly finance the operations of a business, with the promise to repay the principal plus the agreed-upon rate of interest in a timely manner. Under the right circumstances, this can accelerate the growth of a business considerably while allowing the owner to retain ownership and benefit from reduced taxes and lower interest rates. On the other hand, the inability to pay back this debt on time can adversely affect credit rating and result in higher interest rates, cash flow difficulties, and other restrictions.

Technical debt is what results when development teams take arguably suboptimal actions to expedite the delivery of a set of features or projects. Just like borrowed money allows you to do things sooner than you could otherwise, technical debt can result in short-term speed for a period of time. In the long term, however, software teams will have to dedicate a lot more time and effort toward simply managing complexity as opposed to thinking about producing architecturally sound solutions. This can result in a vicious cycle, as illustrated in the following diagram:

Figure 1.3 – Technical debt: implications

In a recent McKinsey survey (https://www.mckinsey.com/business-functions/mckinsey-digital/our-insights/tech-debt-reclaiming-tech-equity) sent out to CIOs, around 60% reported that the amount of technical debt increased over the past 3 years. At the same time, over 90% of CIOs allocated less than a fifth of their tech budget toward paying it off. Martin Fowler explores (https://martinfowler.com/articles/is-quality-worth-cost.html#WeAreUsedToATrade-offBetweenQualityAndCost) the deep correlation between high software quality (or the lack thereof) and the ability to enhance software predictably. While carrying a certain amount of technical debt is inevitable and part of doing business, not having a plan to systematically pay off this debt can have significantly detrimental effects on team productivity and the ability to deliver value.
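The debt analogy can be made concrete with a toy model. The following is a minimal sketch, not a validated estimation method: the function `simulate_delivery`, its parameters, and all the numbers are hypothetical and chosen only to illustrate the shape of the trade-off. It assumes that shortcuts add output in the current sprint, that the same amount is added to the debt, and that outstanding debt consumes a fixed share of future capacity as "interest".

```python
def simulate_delivery(sprints, capacity, shortcut_boost, interest_rate, repay_fraction):
    """Return the feature output per sprint under a toy technical debt model.

    All parameters are hypothetical illustration values:
    - capacity: effort available per sprint
    - shortcut_boost: extra output gained per unit of effort by cutting corners
    - interest_rate: share of outstanding debt lost from capacity every sprint
    - repay_fraction: share of remaining capacity spent on paying debt down
    """
    debt = 0.0
    delivered = []
    for _ in range(sprints):
        # Interest: capacity lost to working around existing debt.
        interest = min(capacity, debt * interest_rate)
        available = capacity - interest
        # Repayment: a planned share of what is left goes to reducing debt.
        repayment = min(debt, available * repay_fraction)
        feature_effort = available - repayment
        # Shortcuts raise output now and add the same amount to the debt.
        boost = feature_effort * shortcut_boost
        delivered.append(feature_effort + boost)
        debt = debt - repayment + boost
    return delivered


# A team that takes no shortcuts versus one that borrows and never repays.
baseline = simulate_delivery(20, 10.0, 0.0, 0.2, 0.0)
no_repayment = simulate_delivery(20, 10.0, 0.3, 0.2, 0.0)
```

Under these assumed numbers, the team that borrows without repaying starts ahead of the baseline and ends well behind it, which is the short-term speed followed by long-term drag described above. Setting `repay_fraction` above zero models a deliberate plan to pay the debt down.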

Ignoring non-functional requirements

Stakeholders often want software teams to spend the majority (if not all) of their time working on features that provide enhanced functionality. This is understandable given that such features provide the highest ROI. These features are called functional requirements.

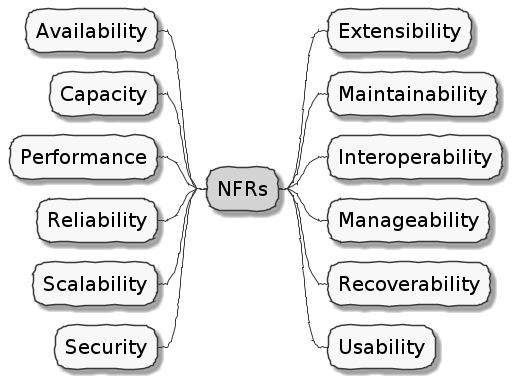

Non-functional requirements (NFRs, also sometimes known as cross-functional requirements), on the other hand, are those aspects of the system that do not affect functionality directly but have a profound effect on the efficacy of those using and maintaining these systems. There are many kinds of NFRs. A partial list of common NFRs is depicted in the following figure:

Figure 1.4 – NFRs

Very rarely do users explicitly request NFRs, but they almost always expect these features to be part of any system they use. Oftentimes, systems may continue to function without NFRs being met, but not without having an adverse impact on the quality of the user experience. For example, the home page of a website that loads in under 1 second under low load and takes upward of 30 seconds under higher loads may not be usable during those times of stress. Needless to say, not treating NFRs with the same amount of rigor as explicit, value-adding functional features can lead to unusable systems—and subsequently failure.
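One way to treat an NFR with the same rigor as a functional feature is to state it as a checkable condition. The following is a minimal sketch under stated assumptions: the 1-second budget, the choice of the 95th percentile, the helper names `percentile` and `meets_latency_budget`, and the sample timings are all hypothetical and are not taken from any real system.

```python
import math


def percentile(samples, pct):
    """Return the nearest-rank percentile of a non-empty list of numbers."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    # Nearest-rank method: smallest value with at least pct% of samples at or below it.
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def meets_latency_budget(samples_ms, budget_ms, pct=95):
    """Check that the chosen percentile of response times stays within the budget."""
    return percentile(samples_ms, pct) <= budget_ms


# Hypothetical home page load times in milliseconds.
low_load_ms = [320, 410, 380, 450, 900]
high_load_ms = [800, 4200, 12000, 30500, 28000]

low_load_ok = meets_latency_budget(low_load_ms, budget_ms=1000)
high_load_ok = meets_latency_budget(high_load_ms, budget_ms=1000)
```

With these made-up samples, the check passes under low load and fails under high load, mirroring the home page example above. Running such a check as part of a load test makes the expectation explicit instead of leaving it implied.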

In this section, we examined some common reasons why software projects fail. Is it possible to improve our odds? Before we answer that, let’s look at the nature of modern software systems and how we can deal with the ensuing complexity.