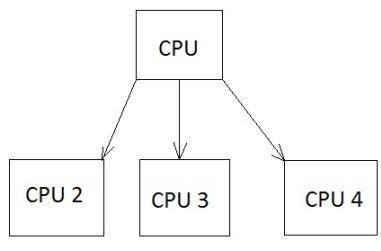

In Python, we can run our code using either multiple threads or multiple processes when we want better performance than a standard single-threaded approach. With a multithreaded approach in CPython, we are effectively limited to the processing power of one CPU core when running Python code; with a multiprocessing approach, we can use all of the CPU cores available on our machine. Modern computers tend to have numerous CPUs and cores, so limiting ourselves to just one effectively leaves the rest of the machine idle. Our goal is to extract the full potential from our hardware, get the best value for money, and solve our problems faster.

With Python's multiprocessing module, we can use the full number of cores and CPUs, which can help us achieve greater performance on CPU-bound problems. The preceding figure shows an example of how one CPU core starts delegating tasks to other cores.

In Python 2.6 and later (the version in which the multiprocessing module was introduced), we can obtain the number of CPU cores available to us by using the following code snippet:

```python
# First we import the multiprocessing module
import multiprocessing

# Then we call multiprocessing.cpu_count(), which returns
# an integer value of how many CPUs the machine has
multiprocessing.cpu_count()
```
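Note that `cpu_count()` reports the CPUs in the machine, which can be more than the current process is actually allowed to run on when CPU affinity restrictions are in place. The following is a minimal sketch of a more defensive check. The helper name `usable_cpus` is hypothetical, and `os.sched_getaffinity` only exists on some Unix platforms, so the sketch falls back to `cpu_count()` when it is missing:

```python
import multiprocessing
import os


def usable_cpus():
    """Return how many CPUs this process may run on (hypothetical helper)."""
    # os.sched_getaffinity is only available on some Unix platforms,
    # such as Linux, so check for it before calling it.
    if hasattr(os, "sched_getaffinity"):
        return len(os.sched_getaffinity(0))
    # Otherwise fall back to the total number of CPUs in the machine.
    return multiprocessing.cpu_count()


if __name__ == "__main__":
    print("CPUs in machine:", multiprocessing.cpu_count())
    print("CPUs usable:    ", usable_cpus())
```

On many machines both numbers will be identical; they only differ when the process has been pinned to a subset of the CPUs.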

Not only does multiprocessing enable us to utilize more of our machine, but we also avoid the limitations that the Global Interpreter Lock imposes on us in CPython.
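To make this concrete, here is a minimal sketch of spreading a CPU-bound task across worker processes with `multiprocessing.Pool`. The function names `count_primes` and `count_primes_parallel`, and the input sizes, are illustrative assumptions rather than anything prescribed by the module:

```python
import multiprocessing


def count_primes(limit):
    """Count the primes below `limit` by trial division (deliberately CPU-heavy)."""
    count = 0
    for n in range(2, limit):
        # n is prime if no d in [2, sqrt(n)] divides it evenly.
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def count_primes_parallel(limits, workers=None):
    """Run count_primes once per limit, spread over a pool of processes."""
    # With workers=None, Pool picks a default based on the CPUs it detects.
    with multiprocessing.Pool(processes=workers) as pool:
        return pool.map(count_primes, limits)


if __name__ == "__main__":
    # Each limit is handled by a separate worker process, each with its
    # own interpreter and therefore its own Global Interpreter Lock.
    print(count_primes_parallel([20000, 30000, 40000, 50000]))
```

The `if __name__ == "__main__":` guard matters here: on platforms that start workers by spawning a fresh interpreter, the main module is imported again in each child, and the guard stops the children from trying to create pools of their own.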

One potential disadvantage of multiple processes is that they inherently share no state, so they cannot communicate with each other directly. Any data we want to exchange therefore has to be passed through some form of inter-process communication (IPC), and performance can take a hit. However, this lack of shared state can also make processes easier to work with, as we do not have to fight against potential race conditions in our code.
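As a rough illustration of passing data between processes, the sketch below uses `multiprocessing.Queue` as the IPC channel. The names `square_worker` and `squares_via_queue` are hypothetical, and a queue is only one of several options the module offers:

```python
import multiprocessing


def square_worker(numbers, results):
    """Runs in the child process: compute the squares and send them back."""
    # The child works on its own copy of `numbers`, so the only way to
    # return data to the parent is to send it through the queue.
    results.put([n * n for n in numbers])


def squares_via_queue(numbers):
    """Start one child process and collect its result through a Queue."""
    results = multiprocessing.Queue()
    child = multiprocessing.Process(target=square_worker, args=(numbers, results))
    child.start()
    squares = results.get()  # blocks until the child has sent its result
    child.join()
    return squares


if __name__ == "__main__":
    print(squares_via_queue([1, 2, 3, 4]))
```

Everything placed on the queue is pickled by the sender and unpickled by the receiver, which is where the performance cost of IPC comes from when large objects are exchanged.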