Kinect Studio – capturing Kinect data

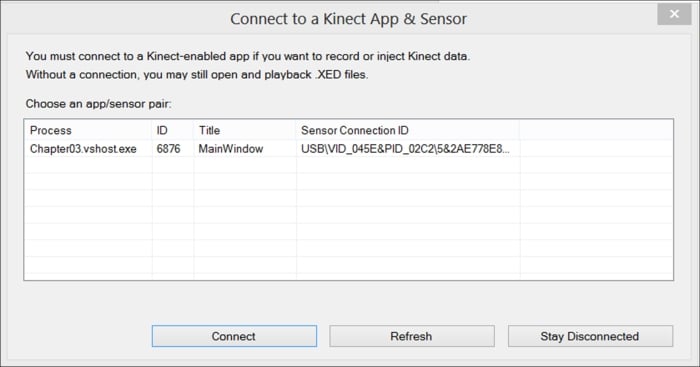

Capturing the data streamed by the Kinect sensor with Kinect Studio is a simple and intuitive process. We run Kinect Studio and use the Connect to a Kinect App & Sensor window to select the Kinect application whose RGB or depth streams we want to record.

Kinect Studio connection dialog

From the same window we can instead select the Kinect application into which we want to inject a stream we saved earlier.

Recording Kinect data is the operation we perform to create test data or to test the application on the fly.

Injecting Kinect data is the operation we perform mainly to test our application.

We need to launch our application first so that Kinect Studio can attach to it. If we haven’t launched our application yet, Kinect Studio will display an empty Choose an app/sensor pair list. Once we launch our application, we can click the Refresh button and the Kinect enabled application will be listed.

Note

By Kinect enabled application we mean an application that uses the Kinect sensor and in which we have invoked the KinectSensor.Start() method.
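As a minimal sketch of what such an application might look like, the following console program looks for a connected sensor, enables the color and depth streams, and calls KinectSensor.Start(). It assumes the Kinect for Windows SDK 1.x is installed and the Microsoft.Kinect assembly is referenced; the class name and the chosen stream formats are illustrative, not taken from the chapter's own samples.

```csharp
using System;
using Microsoft.Kinect; // Kinect for Windows SDK 1.x assembly (assumed to be referenced)

class KinectEnabledApp // hypothetical name, for illustration only
{
    static void Main()
    {
        // Pick the first sensor that reports itself as connected.
        KinectSensor sensor = null;
        foreach (KinectSensor candidate in KinectSensor.KinectSensors)
        {
            if (candidate.Status == KinectStatus.Connected)
            {
                sensor = candidate;
                break;
            }
        }

        if (sensor == null)
        {
            Console.WriteLine("No connected Kinect sensor found.");
            return;
        }

        // Enable the streams we intend to record with Kinect Studio.
        // The formats below are an arbitrary choice for this sketch.
        sensor.ColorStream.Enable(ColorImageFormat.RgbResolution640x480Fps30);
        sensor.DepthStream.Enable(DepthImageFormat.Resolution640x480Fps30);

        // Per the note above, invoking Start() is what makes the application Kinect enabled.
        sensor.Start();

        Console.WriteLine("Sensor started. Click Refresh in Kinect Studio, then press Enter to exit.");
        Console.ReadLine();

        sensor.Stop();
    }
}
```

While this program is waiting for the Enter key, it should appear in the Choose an app/sensor pair list after a refresh.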

Kinect Studio is able to track Kinect enabled applications whether they are running in debug or release mode. One additional requirement is that Kinect Studio and the Kinect enabled application must run with the same level of authorization.

Once the Kinect enabled application is listed in the Choose an app/sensor pair list, we can select it and begin the test activities by clicking on the Connect button.

We are now ready to start recording the color and depth data streams. We will go through the injection operation later.

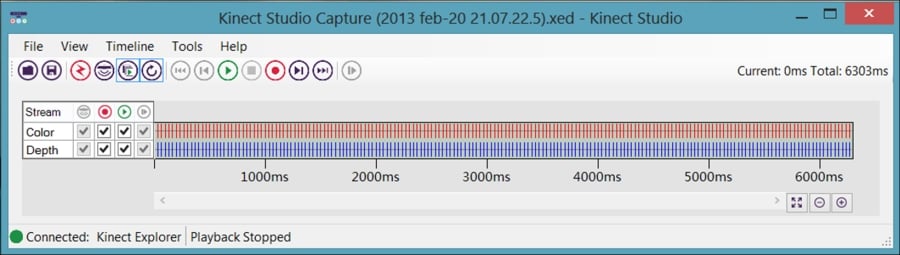

Using the Kinect Studio main window, we can select which streams we want to track, visualize, and later play back.

Note

We can still record the depth stream even in cases where our application is not processing the RGB/depth stream. To track the depth stream, it is sufficient to initialize the Kinect sensor and leave the IR stream enabled.

Usually, applications focused on speech recognition and/or audio beam positioning are not concerned about depth data. We should disable the IR stream in this scenario to improve the Kinect sensor's overall performance.
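The following is a hedged sketch of such an audio-only application. It assumes that disabling the IR stream corresponds to setting the KinectSensor.ForceInfraredEmitterOff property, which was introduced with SDK 1.6 and, as far as we know, is supported only on Kinect for Windows hardware. The class name is hypothetical.

```csharp
using System;
using System.IO;
using Microsoft.Kinect; // Kinect for Windows SDK 1.6 or later (assumed to be referenced)

class AudioOnlyApp // hypothetical name, for illustration only
{
    static void Main()
    {
        // Pick the first sensor that reports itself as connected.
        KinectSensor sensor = null;
        foreach (KinectSensor candidate in KinectSensor.KinectSensors)
        {
            if (candidate.Status == KinectStatus.Connected)
            {
                sensor = candidate;
                break;
            }
        }

        if (sensor == null)
        {
            Console.WriteLine("No connected Kinect sensor found.");
            return;
        }

        // No color or depth stream is enabled: this application only needs audio.
        sensor.Start();

        // Assumption: this property is what "disabling the IR stream" refers to.
        sensor.ForceInfraredEmitterOff = true;

        // Start capturing audio; speech recognition or beam tracking would read from this stream.
        Stream audioStream = sensor.AudioSource.Start();

        Console.WriteLine("Capturing audio only. Press Enter to exit.");
        Console.ReadLine();

        sensor.AudioSource.Stop();
        sensor.Stop();
    }
}
```

With the emitter forced off, Kinect Studio would have no depth data to record from this application, which is consistent with the note above.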

Let’s now take a look at the scenario where we want to record a set of gestures to perform a repeatable test against our gesture recognition engine:

Main window

Select the Record button (Ctrl + R using the keyboard). Kinect Studio starts to record the data stream from all the input sensors we have selected (color and/or depth). To stop recording the data stream we simply select the Stop button (Shift + F5 using the keyboard). Finally, using the Play/Pause button (Shift + F5 on the keyboard), we can play back the stream data we just recorded. All the data will be rendered in the Depth Viewer, 3D Viewer, and Color Viewer, which provide a visual playback of the stream data. We will look more closely at the viewer windows shortly.

We can save the stream data as .xed binary files. By selecting the Save button (Ctrl + S using the keyboard), we make the test data persistent. The saved data can be injected into the application, which allows us to test the application without having to stand in front of the Kinect again.

Note

We recommend recording one gesture or key movement per file. This will allow us to unit test the Kinect enabled applications.

Let’s open the first recorded gesture. We can open a .xed file using the Open button (Ctrl + O using the keyboard). We can seek to a precise frame using the Kinect Studio timeline. Alternatively, we can select just the portion of the stream we want to reproduce by clicking on the starting point on the Kinect Studio timeline and dragging the mouse to the end point of the interval.

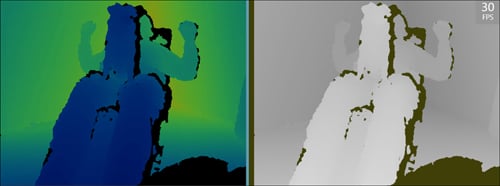

Using the Play/Pause button, we can start playing back all the data contained in the recorded streams. The outcome is visualized in the different views as follows:

In the Depth Viewer window we have the stream of the depth camera mapped to a color palette which, in Version 1.6 of the Kinect for Windows SDK, ranges from blue (for points close to the sensor) to red (for points far from the sensor). Black is used for highlighting areas that are out of range or not tracked.

Depth Viewer window on left; Color Viewer window on right

In the Color Viewer window we have the stream captured from the RGB camera.

In the 3D Viewer window we have the three-dimensional representation of the scene captured by the Kinect sensor. The viewer enables us to change the camera position and to have a different perspective of the very same scene.

3D Viewer from two different perspectives

What makes Kinect Studio so vital is not only that the recorded stream data is reproduced in the viewers, but also that it can inject the same data into our application. We can, for instance, record the stream data, notice a bug, fix our code, and then inject the very same stream data to ensure that the issue has been fixed. Kinect Studio is also very useful to ensure that the way we are rendering the stream data in our application is faithful. We can compare the graphical output of our application with the one rendered by Kinect Studio and ensure that they provide the same result, or work out why they differ. For instance, in the following figure, we can see that the color palette used in Kinect Studio for highlighting the depth point values is different from the one used in the application we developed in Chapter 2, Starting with Image Streams.

Depth Viewer on the left, Depth frame displayed inside app on the right
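If we wanted our own application to render depth in a style closer to the Depth Viewer, a blue-to-red mapping could be sketched as follows. This is not Kinect Studio's actual palette, which is not documented here: the linear interpolation, the 800 to 4000 mm range (the sensor's default-mode depth range), and the DepthPalette helper are all assumptions made for illustration.

```csharp
using System;

static class DepthPalette // hypothetical helper, not part of the Kinect SDK
{
    // Assumed valid depth range in millimetres (default-mode range of the sensor).
    public const int MinDepthMm = 800;
    public const int MaxDepthMm = 4000;

    // Maps one depth reading to an { R, G, B } triple:
    // blue for near points, red for far points, black when out of range.
    public static byte[] ToRgb(int depthMm)
    {
        if (depthMm < MinDepthMm || depthMm > MaxDepthMm)
        {
            // Out of range or not tracked.
            return new byte[] { 0, 0, 0 };
        }

        // 0.0 at the nearest valid depth, 1.0 at the farthest.
        double t = (double)(depthMm - MinDepthMm) / (MaxDepthMm - MinDepthMm);

        byte red = (byte)Math.Round(255 * t);
        byte blue = (byte)(255 - red);
        return new byte[] { red, 0, blue };
    }
}
```

With these assumptions, DepthPalette.ToRgb(800) yields pure blue, DepthPalette.ToRgb(4000) yields pure red, and DepthPalette.ToRgb(0) yields black. Rendering an injected .xed stream through such a mapping makes it easier to compare our output side by side with the Depth Viewer.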