What resources should we use?

Industry 4.0 AI has blurred the lines between cloud platforms, frameworks, libraries, languages, and models. Transformers are new, and the range and number of ecosystems are mind-blowing. Google Cloud provides ready-to-use transformer models.

OpenAI has deployed a “Transformer” API that requires practically no programming. Hugging Face provides a cloud library service, and the list is endless.

This chapter will go through a high-level analysis of some of the transformer ecosystems we will be implementing throughout this book.

Your choice of resources to implement transformers for NLP is critical. It is a question of survival in a project. Imagine a real-life interview or presentation. Imagine you are talking to your future employer, your employer, your team, or a customer.

You begin your presentation with an excellent PowerPoint with Hugging Face, for example. You might get an adverse reaction from a manager who may say, “I’m sorry, but we use Google Trax here for this type of project, not Hugging Face. Can you implement Google Trax, please?” If you don’t, it’s game over for you.

The same problem could have arisen by specializing in Google Trax. Instead, you might get the reaction of a manager who wants to use OpenAI’s GPT-3 engines with an API and no development. If you specialize in OpenAI’s GPT-3 engines with APIs and no development, you might face a project manager or customer who prefers Hugging Face’s AutoML APIs. The worst thing that could happen to you is that a manager accepts your solution, but in the end, it does not work at all for the NLP tasks of that project.

The key concept to keep in mind is that if you only focus on the solution that you like, you will most likely sink with the ship at some point.

Focus on the system you need, not the one you like.

This book is not designed to explain every transformer solution that exists on the market. Instead, this book aims to explain enough transformer ecosystems for you to be flexible and adapt to any situation you face in an NLP project.

In this section, we will go through some of the challenges that you’ll face. But first, let’s begin with APIs.

The rise of Transformer 4.0 seamless APIs

We are now well into the industrialization era of artificial intelligence. Microsoft, Google, Amazon Web Services (AWS), and IBM, among others, offer AI services that no developer or team of developers could hope to outperform. Tech giants have million-dollar supercomputers with massive datasets to train transformer models and AI models in general.

Big tech giants have a wide range of corporate customers that already use their cloud services. As a result, adding a transformer API to an existing cloud architecture requires less effort than any other solution.

A small company or even an individual can access the most powerful transformer models through an API with practically no investment in development. An intern can implement the API in a few days. There is no need to be an engineer or have a Ph.D. for such a simple implementation.

For example, the OpenAI platform now has a SaaS (Software as a Service) API for some of the most effective transformer models on the market.

OpenAI transformer models are so effective and humanlike that, at the time of writing, OpenAI’s policy requires a potential user to fill out a request form. Once the request has been accepted, the user can access a universe of natural language processing!

The simplicity of OpenAI’s API takes the user by surprise:

- Obtain an API key in one click

- Import OpenAI in a notebook in one line

- Enter any NLP task you wish in a prompt

- You will receive the response as a completion in a certain number of tokens (length)
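The four steps above can be sketched with Python’s standard library alone. This is a minimal sketch, not the book’s code. It assumes three things that may change: OpenAI’s legacy text-completions endpoint (`https://api.openai.com/v1/completions`), a key stored in an `OPENAI_API_KEY` environment variable, and a completions-capable model name (`gpt-3.5-turbo-instruct` here). The helper names `build_completion_request` and `send_completion_request` are hypothetical. Building the request is kept separate from sending it, so the prompt and the token limit can be inspected offline.

```python
import json
import os
import urllib.request
from typing import Optional

# Legacy text-completions endpoint: a prompt goes in, a completion comes out.
COMPLETIONS_URL = "https://api.openai.com/v1/completions"


def build_completion_request(prompt: str,
                             max_tokens: int = 64,
                             model: str = "gpt-3.5-turbo-instruct",
                             api_key: Optional[str] = None) -> urllib.request.Request:
    """Build, but do not send, a POST request for a prompt-based NLP task."""
    key = api_key if api_key is not None else os.environ.get("OPENAI_API_KEY", "")
    body = {
        "model": model,            # assumption: available model names change over time
        "prompt": prompt,          # the NLP task, written in plain language
        "max_tokens": max_tokens,  # upper bound on the completion length, in tokens
    }
    return urllib.request.Request(
        COMPLETIONS_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {key}"},
        method="POST",
    )


def send_completion_request(request: urllib.request.Request) -> str:
    """Send the request (needs network access and a valid key) and return the completion text."""
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    return payload["choices"][0]["text"]


if __name__ == "__main__":
    req = build_completion_request("Translate into French: The model is fast.", max_tokens=32)
    print(req.get_method(), req.full_url)
    # print(send_completion_request(req))  # uncomment once OPENAI_API_KEY is set
```

The prompt carries the task and `max_tokens` bounds the completion length, mirroring the last two steps of the list. The parsing in `send_completion_request` assumes a response with a `choices[0].text` field, which is the shape of the legacy completions endpoint.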

And that’s it! Welcome to the Fourth Industrial Revolution and AI 4.0!

Industry 3.0 developers who focus on code-only solutions will evolve into Industry 4.0 developers with cross-disciplinary mindsets.

The 4.0 developer will learn how to design ways to show a transformer model what is expected, rather than simply telling it what to do as a 3.0 developer would. We will explore this new approach through GPT-2 and GPT-3 models in Chapter 7, The Rise of Suprahuman Transformers with GPT-3 Engines.
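The difference between telling and showing can be made concrete with two prompt builders. This is a minimal sketch that assumes a completion-style engine, one that continues whatever text it receives. The function names, the `Input:`/`Output:` labels, and the sentiment examples are illustrative assumptions, not the prompts used in Chapter 7. The first function states an instruction. The second shows solved examples (few-shot prompting) and leaves the last answer open for the model to complete.

```python
from typing import List, Tuple


def tell_prompt(task: str, text: str) -> str:
    """3.0 style: tell the model what to do with an explicit instruction."""
    return f"{task}\n\n{text}\n"


def show_prompt(examples: List[Tuple[str, str]], text: str,
                input_label: str = "Input", output_label: str = "Output") -> str:
    """4.0 style: show solved input/output pairs, then leave the final output blank."""
    blocks = [f"{input_label}: {src}\n{output_label}: {tgt}" for src, tgt in examples]
    # The last block has no answer; the engine is expected to continue the pattern.
    blocks.append(f"{input_label}: {text}\n{output_label}:")
    return "\n\n".join(blocks)


examples = [
    ("The movie was wonderful.", "positive"),
    ("I wasted two hours.", "negative"),
]
print(tell_prompt("Classify the sentiment as positive or negative.", "The plot kept me hooked."))
print(show_prompt(examples, "The plot kept me hooked."))
```

In the second prompt, the task is never named. The examples define it, and editing those examples is the prompt engineer’s main lever.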

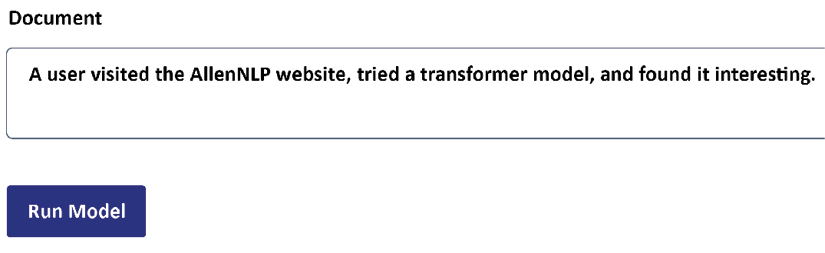

AllenNLP offers the free use of an online educational interface for transformers. AllenNLP also provides a library that can be installed in a notebook. For example, suppose we are asked to implement coreference resolution. We can start by running an example online.

Coreference resolution tasks involve finding the entity to which a word refers, as in the sentence shown in Figure 1.5:

Figure 1.5: Running an NLP task online

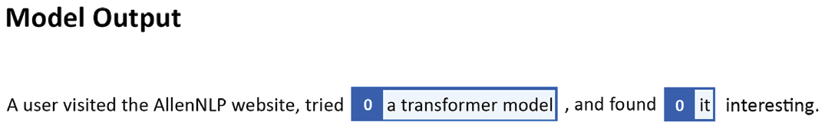

The word “it” could refer to the website or the transformer model. In this case, the BERT-like model decided to link “it” to the transformer model. AllenNLP provides a formatted output, as shown in Figure 1.6:

Figure 1.6: The output of an AllenNLP transformer model

This example can be run at https://demo.allennlp.org/coreference-resolution. Transformer models are continuously updated, so you might obtain a different result.
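Coreference models typically return clusters of token spans rather than rewritten text, so reading the result requires a small post-processing step. The sketch below makes two assumptions: each cluster is a list of inclusive `(start, end)` token indices, and the first mention in a cluster is its antecedent. This is similar in spirit to AllenNLP’s coreference output. The tokens and clusters are written by hand for illustration and are not output from the demo.

```python
from typing import List, Tuple

Span = Tuple[int, int]  # inclusive (start, end) token indices


def span_text(tokens: List[str], span: Span) -> str:
    """Join the tokens covered by an inclusive span."""
    start, end = span
    return " ".join(tokens[start:end + 1])


def link_mentions(tokens: List[str], clusters: List[List[Span]]) -> List[Tuple[str, str]]:
    """Pair every later mention in a cluster with the cluster's first mention (its antecedent)."""
    links = []
    for cluster in clusters:
        antecedent = span_text(tokens, cluster[0])
        for mention in cluster[1:]:
            links.append((span_text(tokens, mention), antecedent))
    return links


tokens = ["The", "transformer", "model", "read", "the", "website",
          "because", "it", "needed", "data", "."]
# Hand-written cluster for illustration; a real coreference model would predict it.
clusters = [[(0, 2), (7, 7)]]
print(link_mentions(tokens, clusters))  # [('it', 'The transformer model')]
```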

Though APIs may satisfy many needs, they also have limits. A multipurpose API might be reasonably good in all tasks but not good enough for a specific NLP task. Translating with transformers is no easy task. In that case, a 4.0 developer, consultant, or project manager will have to prove that an API alone cannot solve the specific NLP task required. We need to search for a solid library.

Choosing ready-to-use API-driven libraries

In this book, we will explore several libraries. Google, for example, has some of the most advanced AI labs in the world. Google Trax can be installed in a few lines in Google Colab, and you can choose free or paid services. We can get our hands on the source code, tweak the models, and even train them on our own servers or on Google Cloud. Customizing a transformer model for translation tasks in this way takes us a step down from ready-to-use APIs.

However, it can prove to be both educational and effective in some cases. We will explore the recent evolution of Google in translations and implement Google Trax in Chapter 6, Machine Translation with the Transformer.

We have seen that APIs such as OpenAI’s require limited developer skills, and libraries such as Google Trax dig a bit deeper into code. Both approaches show that AI 4.0 APIs will require more development on the API provider’s side but much less effort from those implementing transformers.

One of the most famous online applications that use transformers, among other algorithms, is Google Translate. Google Translate can be used online or through an API.

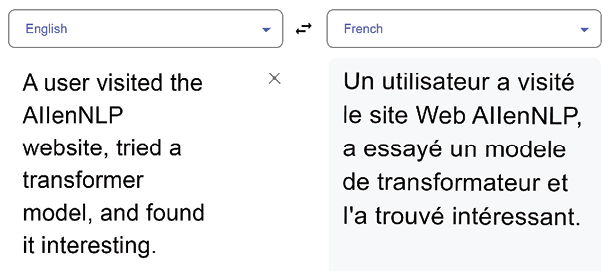

Let’s try to translate a sentence requiring coreference resolution in an English to French translation using Google Translate:

Figure 1.7: Coreference resolution in a translation using Google Translate

Google Translate appears to have solved the coreference resolution, but the word transformateur in French means an electric device. The word transformer is a neologism (new word) in French. An artificial intelligence specialist might be required to have language and linguistic skills for a specific project. Significant development is not required in this case. However, the project might require clarifying the input before requesting a translation.

This example shows that you might have to team up with a linguist or acquire linguistic skills to work on an input context. In addition, it might take a lot of development to enhance the input with an interface for contexts.
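Clarifying the input before translation can begin with something as simple as a project glossary applied to the text. This is a hypothetical sketch: `GLOSSARY`, `clarify_input`, and the replacement wording are illustrative assumptions. Whether a given wording produces a better French translation has to be checked against the actual translation engine.

```python
import re
from typing import Dict

# Hypothetical project glossary: ambiguous term -> clarified wording sent to the translator.
GLOSSARY: Dict[str, str] = {
    "transformer": "transformer model",
}


def _replace(match: "re.Match", clarified: str) -> str:
    original = match.group(0)
    # Leave the term alone if it is already followed by the clarified wording.
    if match.string[match.start():].lower().startswith(clarified.lower()):
        return original
    # Keep a leading capital, e.g. at the start of a sentence.
    if original[0].isupper():
        return clarified[0].upper() + clarified[1:]
    return clarified


def clarify_input(text: str, glossary: Dict[str, str]) -> str:
    """Replace whole-word occurrences of ambiguous terms (plurals are not handled)."""
    for term, clarified in glossary.items():
        pattern = re.compile(rf"\b{re.escape(term)}\b", flags=re.IGNORECASE)
        text = pattern.sub(lambda m, c=clarified: _replace(m, c), text)
    return text


print(clarify_input("The transformer translated the sentence, and it was accurate.", GLOSSARY))
```

The clarified text, not the raw input, is what would then be sent to the translation service. A real project would likely need the kind of interface for contexts described above, maintained together with a linguist.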

So, we still might have to get our hands dirty to add scripts to use Google Translate. Or we might have to find a transformer model for a specific translation need, such as BERT, T5, or other models we will explore in this book.

Choosing a model is no easy task with the increasing range of solutions.

Choosing a transformer model

Big tech corporations dominate the NLP market. Google, Facebook, and Microsoft alone run billions of NLP routines per day, increasing their AI models’ unequaled power. The big giants now offer a wide range of transformer models and have top-ranking foundation models.

However, smaller companies, spotting the vast NLP market, have entered the game. Hugging Face now has a free or paid service approach too. It will be challenging for Hugging Face to reach the level of efficiency acquired through the billions of dollars poured into Google’s research labs and Microsoft’s funding of OpenAI. The entry point for foundation models is a transformer fully trained on a supercomputer, such as GPT-3 or Google BERT.

Hugging Face has a different approach and offers a wide range and number of transformer models for a task, which is an interesting philosophy. Hugging Face offers flexible models. In addition, Hugging Face offers high-level APIs and developer-controlled APIs. We will explore Hugging Face in several chapters of this book as an educational tool and a possible solution for specific tasks.

OpenAI, on the other hand, has focused on a handful of the most potent transformer engines in the world, which can perform many NLP tasks at human levels. We will show the power of OpenAI’s GPT-3 engines in Chapter 7, The Rise of Suprahuman Transformers with GPT-3 Engines.

These opposing and often conflicting strategies leave us with a wide range of possible implementations. We must thus define the role of Industry 4.0 artificial intelligence specialists.

The role of Industry 4.0 artificial intelligence specialists

Industry 4.0 is connecting everything to everything, everywhere. Machines communicate directly with other machines. AI-driven IoT signals trigger automated decisions without human intervention. NLP algorithms send automated reports, summaries, emails, advertisements, and more.

Artificial intelligence specialists will have to adapt to this new era of increasingly automated tasks, including transformer model implementations. Artificial intelligence specialists will have new functions. If we list transformer NLP tasks that an AI specialist will have to do, from top to bottom, it appears that some high-level tasks require little to no development on the part of an artificial intelligence specialist. An AI specialist can be an AI guru, providing design ideas, explanations, and implementations.

The pragmatic definition of what a transformer represents for an artificial intelligence specialist will vary with the ecosystem.

Let’s go through a few examples:

- API: The OpenAI API does not require an AI developer. A web designer can create a form, and a linguist or Subject Matter Expert (SME) can prepare the prompt input texts. The primary role of an AI specialist will require linguistic skills to show, not just tell, the GPT-3 engines how to accomplish a task. Showing, for example, involves working on the context of the input. This new task is named prompt engineering. A prompt engineer has quite a future in AI!

- Library: The Google Trax library requires a limited amount of development to start with ready-to-use models. An AI specialist mastering linguistics and NLP tasks can work on the datasets and the outputs.

- Training and fine-tuning: Some of the Hugging Face functionality requires a limited amount of development, providing both APIs and libraries. However, in some cases, we still have to get our hands dirty. In that case, training, fine-tuning the models, and finding the correct hyperparameters will require the expertise of an artificial intelligence specialist.

- Development-level skills: In some projects, the tokenizers and the datasets do not match, as explained in Chapter 9, Matching Tokenizers and Datasets. In this case, an artificial intelligence developer working with a linguist, for example, can play a crucial role. Therefore, computational linguistics training can come in very handy at this level.
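A first, rough check for a tokenizer-dataset mismatch is to measure how much of a dataset falls outside a vocabulary. For simplicity, this sketch assumes a whole-word vocabulary. Real transformer tokenizers use subword vocabularies (WordPiece, BPE, SentencePiece) that split rare words into pieces instead of rejecting them, so the result is only a proxy; Chapter 9 gives the full treatment. The function name and the sample data are hypothetical.

```python
import re
from collections import Counter
from typing import Iterable, List, Set, Tuple


def unknown_word_report(texts: Iterable[str], vocabulary: Set[str],
                        top_n: int = 5) -> Tuple[float, List[Tuple[str, int]]]:
    """Return the share of word occurrences missing from `vocabulary` and the most frequent missing words."""
    # Lowercase and keep runs of letters and apostrophes; punctuation is dropped.
    counts = Counter(word for text in texts for word in re.findall(r"[a-z']+", text.lower()))
    total = sum(counts.values())
    missing = Counter({word: n for word, n in counts.items() if word not in vocabulary})
    rate = sum(missing.values()) / total if total else 0.0
    return rate, missing.most_common(top_n)


vocabulary = {"the", "patient", "was", "given", "a", "dose", "of"}
texts = ["The patient was given a dose of amoxicillin.", "Amoxicillin was stopped."]
rate, top = unknown_word_report(texts, vocabulary)
print(f"{rate:.0%} of word occurrences are out of vocabulary: {top}")
```

A high rate concentrated on domain terms, such as drug names here, is the kind of signal that calls for a linguist and a developer to look at the tokenizer and the dataset together.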

The recent evolution of NLP AI can be termed “embedded transformers,” a trend that is disrupting the AI development ecosystem:

- GPT-3 transformers are currently embedded in several Microsoft Azure applications with GitHub Copilot, for example.

- The embedded transformers are not accessible directly but provide automatic development support such as automatic code generation.

- The usage of embedded transformers is seamless for the end user with assisted text completion.

To access GPT-3 engines directly, you must first create an OpenAI account. Then you can use the API or directly run examples in the OpenAI user interface.

We will explore this fascinating new world of embedded transformers in Chapter 16. But to get the best out of that chapter, you should first master the previous chapters’ concepts, examples, and programs.

The skillset of an Industry 4.0 AI specialist requires cross-disciplinary knowledge and, above all, flexibility. This book will provide the artificial intelligence specialist with a variety of transformer ecosystems to adapt to the new paradigms of the market.

It’s time to summarize the ideas of this chapter before diving into the fascinating architecture of the original Transformer in Chapter 2.