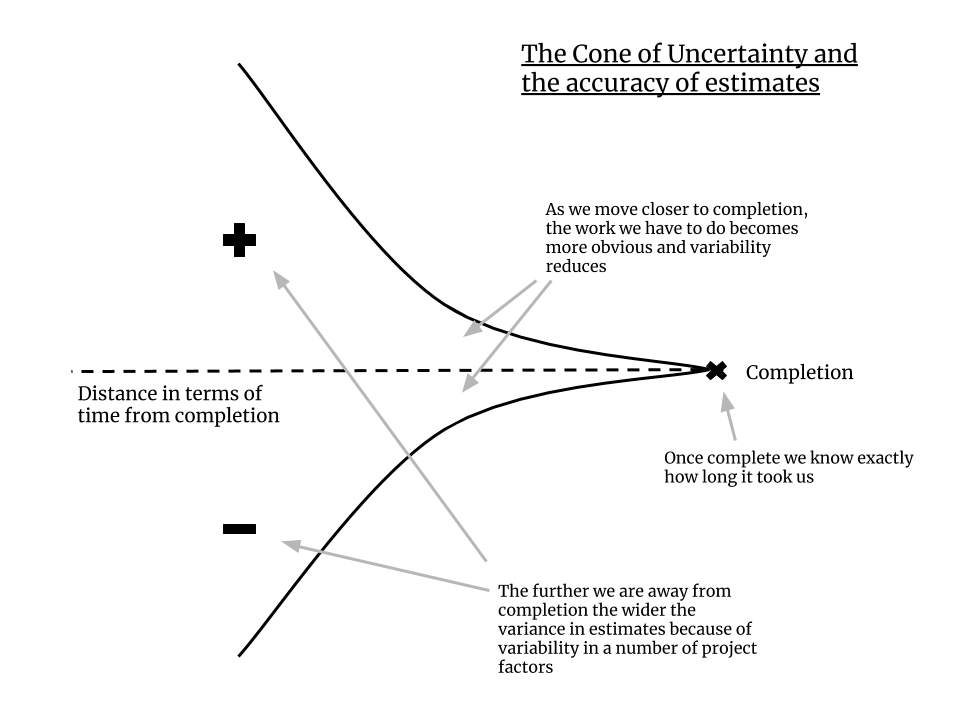

It's not that the project mindset is bad; it's just that it drives us to think that we need a big upfront design approach in order to obtain a precise estimate. As noted, this can lead to a number of issues, particularly when we're presented with a large chunk of work.

And it isn't that upfront design is bad; it's often needed. It's just the big part, when we try to do too much of it, that causes us problems.

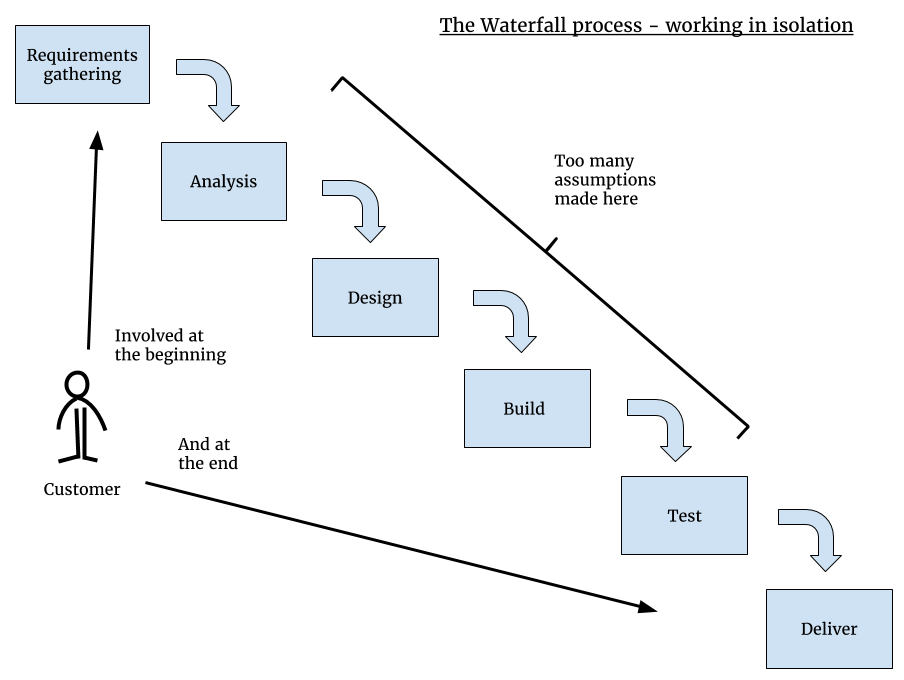

There were a number of bad behaviors feeding the big thinking happening in the industry. One of these was the way that work was funded: as projects. For a particular project to get funded, it had to demonstrate its viability at the annual funding round.

Unfortunately, once people in the management seats saw a hard number, it often became set in stone as an expectation. During the execution of the plan, if new information came to light that amounted to anything more than a small variance, the tendency was to avoid acting on it. The preference was to stick to the plan rather than incorporate the change, and you were seen as a better project manager for doing so.

So, we had a chicken-and-egg scenario; the project approach to funding meant that:

- The business needed to know the cost of something so they could allocate a budget.

- We needed to know the size of something and its technical scope and nature so that we could allocate the right team in terms of size and skill set.

- We had to do this while accepting:

  - That our business didn't know exactly what it needed. Software is intangible by nature; most people don't know what they want or need until they see it and use it.

  - That the business itself wasn't stationary; just because we recorded requirements at a particular moment in time didn't mean that the business would stop evolving around us.

So, if we can avoid the big part, we're able to reduce the level of uncertainty and subsequent variability in our estimates. We're also able to deliver in a timely fashion, which means there is less likelihood of requirements going out of date or the business changing its mind.

We did try to remedy this by moving to prototyping approaches. This enabled us to make iterative sweeps through the work, refining it with people who could actually use the working prototype and give us direct feedback.

Rapid Application Development, or RAD as it's commonly known, is one example of an early iterative process. Another is the Rational Unified Process (RUP).

Working on the principle that many people didn't know what they wanted until they saw it, we used RAD tools such as Visual Basic/Visual Studio to put semi-working prototypes together quickly.

But we still always managed to bite off more than we could chew. I suspect the main reason for this is that our customers still expected us to deliver something they could use. While prototypes gave the appearance of doing that and did enable us to get feedback early:

- At the end of the session, after getting the feedback we needed, and much to our customers' disappointment, we'd take the prototype away. They had assumed our mockup was working software.

- We still hadn't delivered anything they could use in their day-to-day life to help them solve real-world problems.

To try to find a remedy to the hit-and-miss approach to software delivery, a group of 17 software luminaries came together in February 2001. The venue was a cabin in Utah, which they chose, as the story goes, so that they could ski, eat, and look for an alternative to the heavyweight, document-driven processes that seemed to dominate the industry.

Among them were representatives from Extreme Programming (XP), Scrum, Dynamic Systems Development Method (DSDM), Adaptive Software Development (ASD), Crystal, Feature-Driven Development, and Pragmatic Programming.

While at least one of them commented that they didn't expect anything substantive to come out of that weekend, what they did in fact formulate was the Manifesto for Agile Software Development.

The manifesto documents four values and twelve principles that uncover "better ways of developing software by doing it and helping others do it".

The group formally signed the Manifesto and named themselves the Agile Alliance. We'll take a look at the Agile Values and Principles in the following sections.