Pix2pix - Image-to-Image translation GAN

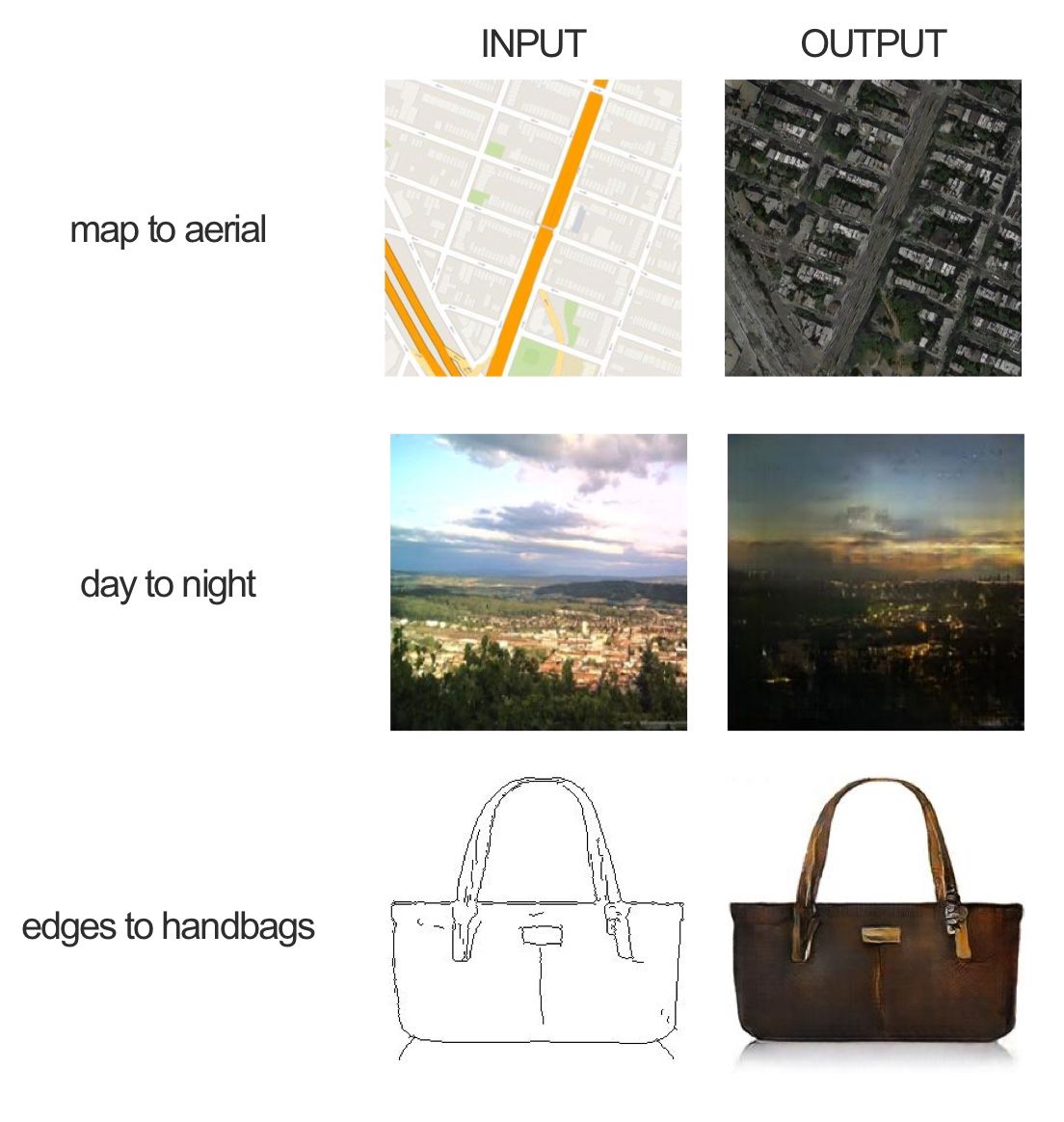

This network uses a conditional generative adversarial network (cGAN) to learn a mapping from an input image to an output image. Some examples from the original paper are as follows:

Pix2pix examples of cGANs

In the handbags example, the network learns how to color a black-and-white image. Each training example pairs a black-and-white input image with its color version as the target image.
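The input/target pairing described above can be sketched as follows. This is only an illustration under stated assumptions: images are NumPy arrays with values in [0, 1], the black-and-white input is derived from the color target using the common BT.601 luma weights, and the helper name `make_colorization_pair` is hypothetical. None of this is taken from the original pix2pix data pipeline.

```python
import numpy as np

def make_colorization_pair(color_image):
    """Return (input, target) for one paired training example.

    color_image: float array of shape (H, W, 3) with values in [0, 1].
    """
    # Assumption: grayscale is computed with BT.601 luma weights.
    weights = np.array([0.299, 0.587, 0.114])
    gray = color_image @ weights      # shape (H, W)
    gray = gray[..., np.newaxis]      # shape (H, W, 1): the network input
    return gray, color_image          # the target is the original color image

rng = np.random.default_rng(0)
color = rng.random((4, 4, 3))         # random stand-in for a real photo
x, y = make_colorization_pair(color)
print(x.shape, y.shape)               # (4, 4, 1) (4, 4, 3)
```

A paired dataset is then just a collection of such `(x, y)` tuples: the generator is conditioned on `x`, and its output is compared with `y`.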

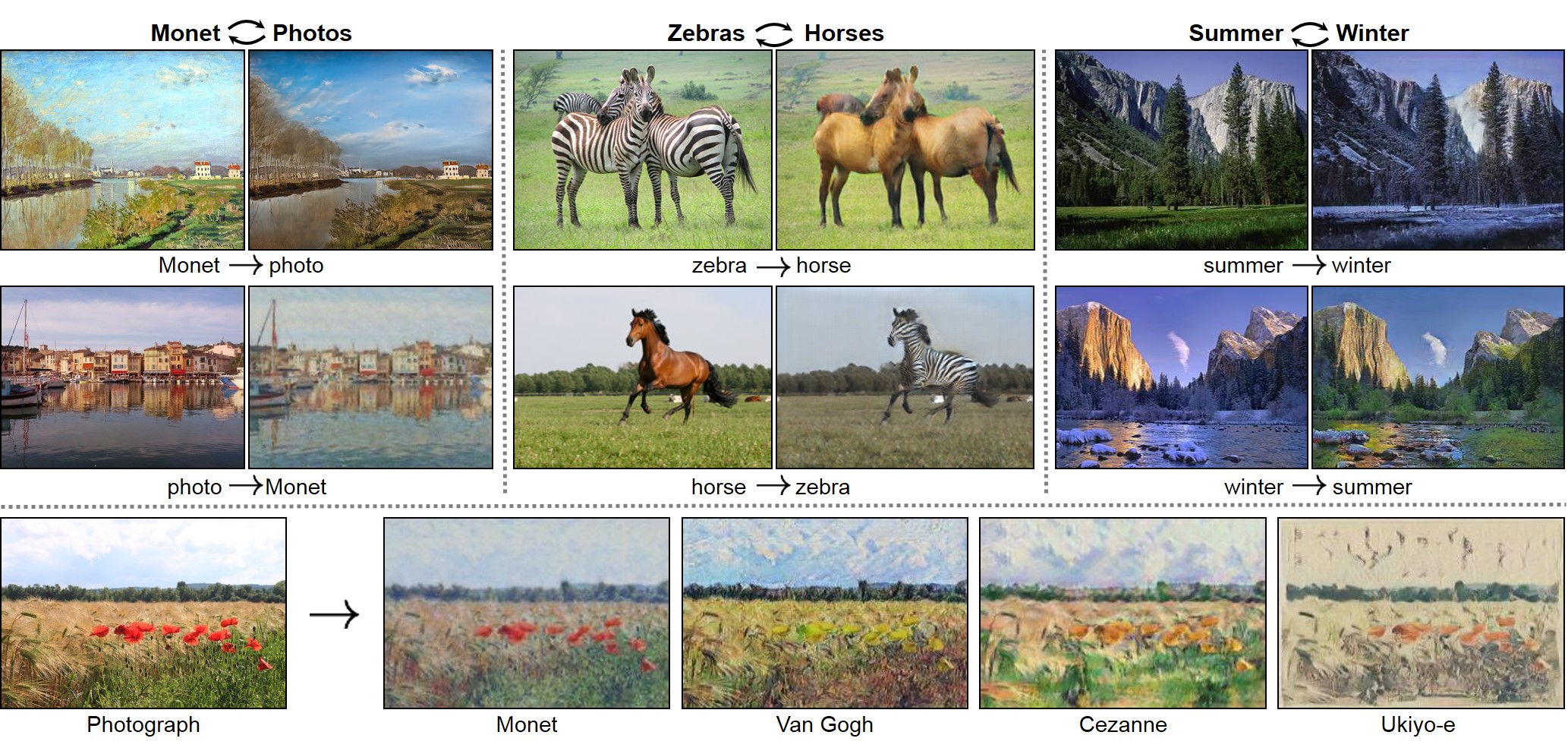

CycleGAN

CycleGAN is also an image-to-image translator, but it is trained without input/output pairs. For example, it can generate photos from paintings or convert a horse image into a zebra image.
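As a rough illustration of what training without input/output pairs means for data loading, the sketch below draws a batch from each image collection independently, so no horse image is tied to a particular zebra image. The file names and the `sample_unpaired_batch` helper are hypothetical, and the sketch covers only the sampling step, not the CycleGAN model or its losses.

```python
import random

# Hypothetical file names standing in for two unpaired image collections.
horses = ["horse_01.jpg", "horse_02.jpg", "horse_03.jpg"]
zebras = ["zebra_01.jpg", "zebra_02.jpg"]

def sample_unpaired_batch(domain_a, domain_b, batch_size, rng):
    """Draw from each domain independently; no (input, target) link exists."""
    batch_a = [rng.choice(domain_a) for _ in range(batch_size)]
    batch_b = [rng.choice(domain_b) for _ in range(batch_size)]
    return batch_a, batch_b

rng = random.Random(0)
batch_a, batch_b = sample_unpaired_batch(horses, zebras, batch_size=4, rng=rng)
```

Note that the two collections do not even need the same size, which is not possible with a paired dataset.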

Note

Using dropout in the discriminator network is important; without it, the network may produce poor results.
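To make the note concrete, here is a minimal NumPy sketch of dropout applied to the hidden layer of a toy discriminator. It is an illustration under assumptions, not the architecture used in the text: the two-layer network, the leaky ReLU slope of 0.2, the dropout rate of 0.5, and the function names `dropout` and `discriminator_forward` are all chosen for this example.

```python
import numpy as np

def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero a fraction `rate` of units, rescale the rest."""
    if not training or rate == 0.0:
        return activations
    keep_prob = 1.0 - rate
    mask = rng.random(activations.shape) < keep_prob
    return activations * mask / keep_prob

def discriminator_forward(x, w1, w2, rng, rate=0.5, training=True):
    """Toy two-layer discriminator: probability that each row of x is real."""
    z = x @ w1
    hidden = np.maximum(0.2 * z, z)                 # leaky ReLU, slope 0.2 (assumed)
    hidden = dropout(hidden, rate, rng, training)   # dropout only while training
    logits = hidden @ w2
    return 1.0 / (1.0 + np.exp(-logits))            # sigmoid

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 16))           # 8 flattened stand-in "images"
w1 = rng.standard_normal((16, 32)) * 0.1   # random, untrained weights
w2 = rng.standard_normal((32, 1)) * 0.1
probs = discriminator_forward(x, w1, w2, rng)
```

Passing `training=False` disables the mask, which is the usual behavior when the discriminator is only being evaluated.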

The generator network takes random noise as input and produces a realistic image as output. Running the generator on different noise samples produces different realistic images. The second network, which is known as the discriminator network...