Why do we demand at least one backup server? The full answer to this question actually has its own recipe in this chapter. However, catastrophic failure is a fact of life and we must be ready for such an event. Even if the separate server is not a fully operational PostgreSQL node, it must exist and should be part of the reference design.

Likewise, we must have at least one PostgreSQL replica. Some of our designs work with either physical or logical replicas, so we won't differentiate between them here. Simply assume that every highly available PostgreSQL cluster must have at least two nodes that can fulfill the role of a primary database. Backups take time to restore, whereas a replica can generally be promoted and made writable in a minute or less.

A single replica only really covers the case where switching from one PostgreSQL node to the alternate is a manual procedure. Fully automated failure detection mechanisms require an odd number of nodes for voting purposes, so that a vote can never end in a tie. This third node can either be a mere voting entity or a full PostgreSQL replica. We cover this in greater depth in the Considering quorum recipe.
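To illustrate why an odd number of voters matters, here is a minimal sketch of simple majority voting. The function names are hypothetical and not taken from any particular failover tool, and real quorum implementations may weigh votes differently.

```python
def majority(voters: int) -> int:
    """Smallest number of votes that is more than half of all voters."""
    return voters // 2 + 1


def has_quorum(reachable: int, voters: int) -> bool:
    """True if the nodes we can still reach form a majority."""
    return reachable >= majority(voters)


def tolerated_failures(voters: int) -> int:
    """How many voters can be lost while a majority still exists."""
    return voters - majority(voters)


# Compare cluster sizes: an even count adds a node without adding tolerance.
for voters in (2, 3, 4, 5):
    print(voters, "voters tolerate", tolerated_failures(voters), "failure(s)")
```

Under this simple model, two voters cannot survive the loss of either node, and a fourth voter tolerates no more failures than three do. That is the reason a third, possibly vote-only, node is the usual minimum for automation.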

Once we start accounting for multiple geographic locations at different data centers, things don't change much. By now, we have at least one PostgreSQL replica, which is probably at the first alternate location. If we have three or more active data centers where the application is using PostgreSQL, we'll want a local replica for each.

Then, consider the implications of limiting ourselves to merely one PostgreSQL node per location. Any minor upgrade or other maintenance task will require switching to an alternate data center while the maintenance is active, which can introduce unwanted latency that affects the application. To avoid this, add one replica to the primary location so that maintenance can be handled locally. For symmetrical data centers that have no primary location, add a PostgreSQL replica to each location for the same reason.

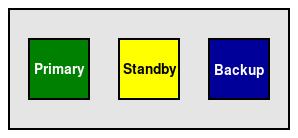
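The guidelines above can be condensed into a rough estimator. This is only a sketch of one reading of these rules: `NodePlan`, `plan_nodes`, and the parameter names are hypothetical, and a real design would also account for the abstraction layers covered elsewhere in this chapter.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodePlan:
    postgres: int  # primary plus all replicas
    witness: int   # vote-only nodes
    backup: int    # dedicated backup servers

    @property
    def total(self) -> int:
        return self.postgres + self.witness + self.backup


def plan_nodes(data_centers: int = 1,
               automated_failover: bool = False,
               local_maintenance: bool = False,
               symmetrical: bool = False) -> NodePlan:
    """Estimate a minimum node count (assumed interpretation of the rules)."""
    if data_centers < 1:
        raise ValueError("at least one data center is required")

    # One PostgreSQL node per location, and never fewer than two overall.
    postgres = max(2, data_centers)

    if local_maintenance:
        if symmetrical:
            # No primary location: every data center gets a second node.
            postgres = max(postgres, 2 * data_centers)
        else:
            # Only the primary location needs a local alternate.
            at_primary = postgres - (data_centers - 1)
            if at_primary < 2:
                postgres += 1

    # Automated failover needs an odd number of voters; a witness breaks ties.
    witness = 1 if automated_failover and postgres % 2 == 0 else 0

    # A backup server is always part of the design.
    return NodePlan(postgres=postgres, witness=witness, backup=1)


two_sites_manual = plan_nodes(data_centers=2)
three_sites_automated = plan_nodes(data_centers=3, automated_failover=True,
                                   local_maintenance=True, symmetrical=True)
print(two_sites_manual.total, three_sites_automated.total)
```

With these assumptions, two data centers with manual failover come to three nodes, while three symmetrical data centers with automated failover come to six PostgreSQL nodes, a witness, and a backup server.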

As a quick example, consider two scenarios. Our first company (Company A) only uses two data centers, doesn't need automated database failover, and isn't worried about downtime caused by minor upgrades. In this case, they decided to use two PostgreSQL servers and a backup system. This is a minimum of three nodes related to PostgreSQL, and their cluster looks like this:

Company A

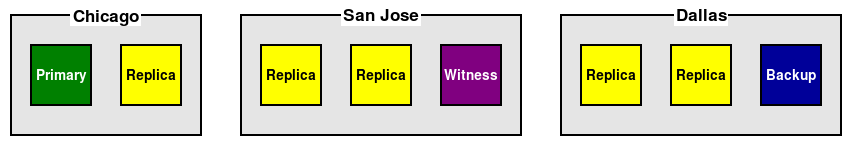

In the second case (Company B), we have a much more demanding financial institution that requires all three of their data centers to be active at all times. They chose to have two PostgreSQL servers in each data center (one Primary and five Replicas in all), a Witness node, and a Backup server. In that extreme case, they used a total of eight nodes dedicated to PostgreSQL.

Their cluster would look like this:

Company B

The nodes denoted here are specifically those meant to be running PostgreSQL. The architectures discussed in this chapter include various abstraction layers and, as a result, will likely require even more nodes in the cluster. PostgreSQL nodes tend to be much larger and do far more work than those supporting nodes, so they are the focus of this recipe.