Chapter 5. Handling Databases

In this chapter, we will cover the following recipes:

- Installing relational databases with MySQL

- Storing and retrieving data with MySQL

- Importing and exporting bulk data

- Adding users and assigning access rights

- Installing web access for MySQL

- Setting backups

- Optimizing MySQL performance – queries

- Optimizing MySQL performance – configuration

- Creating MySQL replicas for scaling and high availability

- Troubleshooting MySQL

- Installing MongoDB

- Storing and retrieving data with MongoDB

Introduction

In this chapter, we will learn how to set up database servers. A database is the backbone of any application, enabling an application to efficiently store and retrieve crucial data to and from persistent storage. We will learn how to install and set up relational databases with MySQL and NoSQL databases with MongoDB.

MySQL is a popular open source database server used by various large-scale applications. It is a mature database system that can be scaled to support large volumes of data. MySQL is a relational database and stores data in the form of rows and columns organized in tables. It provides various storage engines, such as MyISAM, InnoDB, and in-memory storage. MariaDB is a fork of the MySQL project and can be used as a drop-in replacement for MySQL. It was started by the developers of MySQL after Oracle took over Sun Microsystems, the owner of the MySQL project. MariaDB is guaranteed to be open source and offers faster security releases and advanced features. It provides additional storage engines, including XtraDB by Percona and Cassandra for the NoSQL backend. PostgreSQL is another well-known name in relational database systems.

NoSQL, on the other hand, refers to non-relational database systems. They are designed for distributed, large-scale data storage requirements. Some types of data, such as documents, are not efficient to store in the tabular form offered by relational database systems. NoSQL databases are used for these types of data. Some emerging NoSQL categories are document stores, key-value stores, BigTable-style column stores, and graph databases.

In this chapter, we will start by installing MySQL, followed by storing and manipulating data in MySQL. We will also cover user management and access control. After an introduction to relational databases, we will cover some advanced topics on scaling and high availability. We will learn how to set up the web administration tool, phpMyAdmin, but the focus will be on working with MySQL through command-line access. In later recipes, we will also cover the document storage server, MongoDB.

Installing relational databases with MySQL

In this recipe, we will learn how to install and configure the MySQL database on an Ubuntu server.

Getting ready

You will need access to a root account or an account with sudo privileges.

Make sure that the MySQL default port 3306 is available and not blocked by any firewall.
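The "port is available" part of this check can be scripted. The following is a minimal sketch, assuming the ss utility is present on the host and that the fourth column of its "ss -ltn" output is Local Address:Port. The function name port_in_listing and the sample listing are hypothetical and exist only for illustration. It does not inspect firewall rules.

```shell
#!/bin/sh
# Succeeds if an "ss -ltn"-style listing read from stdin shows a listener on
# the TCP port given as $1. The fourth column is assumed to be Local Address:Port.
port_in_listing() {
  awk -v port="$1" '
    NR > 1 {                         # skip the header row
      n = split($4, parts, ":")      # the port follows the last colon
      if (parts[n] == port) found = 1
    }
    END { exit !found }'
}

# Sample listing so the sketch runs without a live server.
sample='State  Recv-Q Send-Q Local Address:Port Peer Address:Port
LISTEN 0      80     127.0.0.1:3306     0.0.0.0:*'

if printf '%s\n' "$sample" | port_in_listing 3306; then
  echo "port 3306 is already in use"
else
  echo "port 3306 is free"
fi
```

On a live host, replace the sample with real output, for example: ss -ltn | port_in_listing 3306.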

How to do it…

Follow these steps to install the relational database MySQL:

- To install the MySQL server, use the following commands:

$ sudo apt-get update
$ sudo apt-get install mysql-server-5.7

The installation process will download the necessary packages and then prompt you to enter a password for the MySQL root account. Choose a strong password.

- Once the installation process is complete, you can check the server status with the following command. It should return an output similar to the following:

$ sudo service mysql status
mysql.service - MySQL Community Server
Loaded: loaded (/lib/systemd/system/mysql.service
Active: active (running) since Tue 2016-05-10 05:

- Next, create a copy of the original configuration file:

$ cd /etc/mysql/mysql.conf.d
$ sudo cp mysqld.cnf mysqld.cnf.bkp

- Set MySQL to listen for connections from network hosts. Open the configuration file /etc/mysql/mysql.conf.d/mysqld.cnf and change bind-address under the [mysqld] section to your server’s IP address:

$ sudo nano /etc/mysql/mysql.conf.d/mysqld.cnf
bind-address = 10.0.2.6

Note

For MySQL 5.5 and 5.6, the configuration file can be found at /etc/mysql/my.cnf

- Optionally, you can change the default port used by the MySQL server. Find the [mysqld] section in the configuration file and change the value of the port variable as follows:

port = 30356

Make sure that the selected port is available and open in the firewall.

- Save the changes to the configuration file and restart the MySQL server:

$ sudo service mysql restart

- Now open a connection to the server using the MySQL client. Enter the password when prompted:

$ mysql -u root -p

- To get a list of available commands, type \h:

mysql> \h

How it works…

MySQL is a default database server available in Ubuntu. If you are installing the Ubuntu server, you can choose MySQL to be installed by default as part of the LAMP stack. In this recipe, we have installed the latest production release of MySQL (5.7) from the Ubuntu package repository. Ubuntu 16.04 contains MySQL 5.7, whereas Ubuntu 14.04 defaults to MySQL version 5.5.

If you prefer to use an older version on Ubuntu 16.04, use the following commands:

$ sudo add-apt-repository 'deb http://archive.ubuntu.com/ubuntu trusty universe'
$ sudo apt-get update
$ sudo apt-get install mysql-server-5.6

After installation, configure the MySQL server to listen for connections from external hosts. Make sure that you open your database installation only to trusted networks, such as your private network. Making it available on the Internet will expose your database to attackers.
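The bind-address change can also be made without an interactive editor. The following is a minimal sketch, assuming the file already contains a bind-address line at the start of a line. It works on a scratch copy with made-up contents rather than the real /etc/mysql/mysql.conf.d/mysqld.cnf, and it reuses the example address 10.0.2.6 from the recipe.

```shell
#!/bin/sh
# Work on a scratch copy so the sketch is safe to run anywhere.
conf=$(mktemp)
cat > "$conf" <<'EOF'
[mysqld]
port            = 3306
bind-address    = 127.0.0.1
EOF

# Example private address of the database host (taken from the recipe).
new_addr="10.0.2.6"

# Rewrite the bind-address line in place; sed keeps the original as "$conf.bkp".
sed -i.bkp "s/^bind-address[[:space:]]*=.*/bind-address = $new_addr/" "$conf"

# Show the resulting setting.
grep '^bind-address' "$conf"
```

To apply the same substitution to the real configuration file, run the sed command with sudo against that path and restart the MySQL service afterwards.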

There’s more…

Securing MySQL installation

MySQL provides a simple script to configure basic settings related to security. Execute this script before using your server in production:

$ mysql_secure_installation

This command will start a basic security check, starting with changing the root password. If you have not set a strong password for the root account, you can do it now. Other settings include disabling remote access to the root account and removing anonymous users and unused databases.

MySQL is popularly used with PHP. You can easily install PHP drivers for MySQL with the following command:

$ sudo apt-get install php7.0-mysql

See also

- The Ubuntu server guide mysql page at https://help.ubuntu.com/14.04/serverguide/mysql.html

Storing and retrieving data with MySQL

In this recipe, we will learn how to create databases and tables and store data in those tables. We will learn the basic Structured Query Language (SQL) required for working with MySQL. We will focus on using the command-line MySQL client for this tutorial, but you can use the same queries with any client software or code.

Getting ready

Ensure that the MySQL server is installed and running. You will need administrative access to the MySQL server. Alternatively, you can use the root account of MySQL.

How to do it…

Follow these steps to store and retrieve data with MySQL:

- First, we will need to connect to the MySQL server. Replace admin with a user account on the MySQL server. You can use root as well, but it’s not recommended:

$ mysql -u admin -h localhost -p

- When prompted, enter the password for the admin account. If the password is correct, you will see the mysql> prompt.

- Create a database with the following query. Note the semicolon at the end of the query:

mysql> create database myblog;

- Check all databases with a show databases query. It should list myblog:

mysql> show databases;

- Select a database to work with, in this case myblog:

mysql> use myblog;
Database changed

- Now that the database is selected, we need to create a table to store our data. Use the following query to create a table:

CREATE TABLE `articles` (
  `id` int(11) NOT NULL AUTO_INCREMENT,
  `title` varchar(255) NOT NULL,
  `content` text NOT NULL,
  `created_at` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP,
  PRIMARY KEY (`id`)
) ENGINE=InnoDB AUTO_INCREMENT=1;

- Again, you can check tables with the show tables query:

mysql> show tables;

- Now, let’s insert some data in our table. Use the following query to create a new record:

mysql> INSERT INTO `articles` (`id`, `title`, `content`, `created_at`) VALUES (NULL, 'My first blog post', 'contents of article', CURRENT_TIMESTAMP);

- Retrieve data from the table. The following query will select all records from the articles table:

mysql> select * from articles;

- Retrieve the selected records from the table:

mysql> select * from articles where id = 1;

- Update the selected record:

mysql> update articles set title="New title" where id=1;

- Delete the record from the articles table using the following command:

mysql> delete from articles where id = 2;

How it works…

We have created a relational database to store blog data with one table. Actual blog databases will need additional tables for comments, authors, and various entities. The queries used to create databases and tables are known as Data Definition Language (DDL), and queries that are used to select, insert, and update the actual data are known as Data Manipulation Language (DML).

MySQL offers various data types to be used for columns, such as tinyint, int, bigint, double, varchar, text, blob, and so on. Each data type has its specific use, and a proper selection may help to improve the performance of your database.
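To make the DDL and DML distinction concrete, the following sketch writes a small SQL file that adds a comments table next to articles. The comments table, its columns, and the sample row are hypothetical and are not part of the recipe. The script only creates the file; the mysql command that would apply it is left as a comment.

```shell
#!/bin/sh
# Write the statements to a file so they can be reviewed before being applied.
sql_file="${TMPDIR:-/tmp}/comments_schema.sql"

cat > "$sql_file" <<'SQL'
-- DDL: defines structure. article_id uses the same type as articles.id
-- so that the foreign key is accepted by InnoDB.
CREATE TABLE IF NOT EXISTS `comments` (
  `id` INT NOT NULL AUTO_INCREMENT,
  `article_id` INT NOT NULL,
  `author` VARCHAR(100) NOT NULL,
  `body` TEXT NOT NULL,
  `created_at` TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP,
  PRIMARY KEY (`id`),
  FOREIGN KEY (`article_id`) REFERENCES `articles` (`id`) ON DELETE CASCADE
) ENGINE=InnoDB;

-- DML: works with the rows themselves.
INSERT INTO `comments` (`article_id`, `author`, `body`)
VALUES (1, 'reader', 'Nice post');

SELECT a.title, c.author, c.body
FROM articles a
JOIN comments c ON c.article_id = a.id;
SQL

echo "wrote $sql_file"
# To apply it to the myblog database:
#   mysql -u admin -p myblog < "$sql_file"
```

Choosing VARCHAR(100) for a short name and TEXT for the comment body is one example of matching the data type to the expected content.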

Importing and exporting bulk data

In this recipe, we will learn how to import and export bulk data with MySQL. We often receive data in CSV or XML format and need to add it to the database server for further processing. You can always use tools such as MySQL Workbench and phpMyAdmin, but MySQL provides command-line tools for the bulk processing of data that are more efficient and flexible.

How to do it…

Follow these steps to import and export bulk data:

- To export a database from the MySQL server, use the following command:

$ mysqldump -u admin -p mytestdb > db_backup.sql

- To export specific tables from a database, use the following command:

$ mysqldump -u admin -p mytestdb table1 table2 > table_backup.sql

- To compress exported data, use gzip:

$ mysqldump -u admin -p mytestdb | gzip > db_backup.sql.gz

- To export selective data to the CSV format, use the following query. Note that this will create articles.csv on the same server as MySQL and not your local server:

SELECT id, title, content FROM articles INTO OUTFILE '/tmp/articles.csv' FIELDS TERMINATED BY ',' ENCLOSED BY '"' LINES TERMINATED BY '\n';

- To fetch data on your local system, you can use the MySQL client as follows:

  - Write your query in a file:

  $ nano query.sql
  select * from articles;

  - Now pass this query to the mysql client and collect the output in a file:

  $ mysql -h 192.168.2.100 -u admin -p myblog < query.sql > output.csv

  Despite the .csv extension, the resulting file will contain tab-separated values.

- To import an SQL file to a MySQL database, we need to first create a database:

$ mysqladmin -u admin -p create mytestdb2

- Once the database is created, import data with the following command:

$ mysql -u admin -p mytestdb2 < db_backup.sql

- To import a CSV file in a MySQL table, you can use the Load Data query. The query assumes that the first row of the CSV file is a header, which IGNORE 1 ROWS skips. Use the following query from the MySQL console to import data from CSV:

LOAD DATA INFILE 'c:/tmp/articles.csv' INTO TABLE articles FIELDS TERMINATED BY ',' ENCLOSED BY '"' LINES TERMINATED BY '\n' IGNORE 1 ROWS;
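The mysql client writes tab-separated output when its result is redirected to a file. The following is a minimal sketch of converting that output to quoted CSV with awk, assuming that no field contains a literal tab or newline (in batch mode the client writes those as \t and \n escapes, which this sketch leaves untouched). The function name tsv_to_csv and the sample rows are hypothetical.

```shell
#!/bin/sh
# Convert tab-separated lines on stdin to CSV with every field quoted.
tsv_to_csv() {
  awk 'BEGIN { FS = "\t"; OFS = "," }
  {
    for (i = 1; i <= NF; i++) {
      gsub(/"/, "\"\"", $i)      # double any embedded quotes
      $i = "\"" $i "\""          # wrap the field in quotes
    }
    print
  }'
}

# Sample rows shaped like client output, so the sketch runs without a server.
printf 'id\ttitle\n1\tMy first blog post\n' | tsv_to_csv
```

With a live server, the same filter can be placed at the end of the pipeline, for example: mysql -h 192.168.2.100 -u admin -p myblog < query.sql | tsv_to_csv > output.csv.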

See also

- MySQL select-into syntax at https://dev.mysql.com/doc/refman/5.6/en/select-into.html

- MySQL load data infile syntax at https://dev.mysql.com/doc/refman/5.6/en/load-data.html

- Importing from and exporting to XML files at https://dev.mysql.com/doc/refman/5.6/en/load-xml.html

Adding users and assigning access rights

In this recipe, we will learn how to add new users to the MySQL database server. MySQL provides very flexible and granular user management options. We can create users with full access to an entire database or limit a user to simply read the data from a single database. Again, we will be using queries to create users and grant them access rights. You are free to use any tool of your choice.

Getting ready

You will need a MySQL user account with administrative privileges. You can use the MySQL root account.

How to do it…

Follow these steps to add users to the MySQL database server and assign access rights:

- Open the MySQL shell with the following command. Enter the password for the admin account when prompted:

$ mysql -u root -p

- From the MySQL shell, use the following command to add a new user to MySQL:

mysql> create user 'dbuser'@'localhost' identified by 'password';

- You can check the user account with the following command. On MySQL 5.7, the password hash is stored in the authentication_string column; on MySQL 5.5 and 5.6, select the password column instead:

mysql> select user, host, authentication_string from mysql.user where user = 'dbuser';

- Next, add some privileges to this user account:

mysql> grant all privileges on *.* to 'dbuser'@'localhost' with grant option;

- Verify the privileges for the account as follows:

mysql> show grants for 'dbuser'@'localhost';

- Finally, exit the MySQL shell and try to log in with the new user account. You should log in successfully:

mysql> exit
$ mysql -u dbuser -p

How it works…

MySQL stores user account information in ordinary database tables. It contains a system database named mysql that holds user accounts and their privileges. The create user and grant statements act as a convenient layer over these tables and make it easy to add new users to the system.

In the preceding example, we created a new user with the name dbuser. This user is allowed to log in only from localhost and requires a password to log in to the MySQL server. You can skip the identified by 'password' part to create a user without a password, but of course, it’s not recommended.

To allow a user to log in from any system, you need to set the host part to %, as follows:

mysql> create user 'dbuser'@'%' identified by 'password';

You can also limit access to a specific host by specifying its FQDN or IP address:

mysql> create user 'dbuser'@'host1.example.com' identified by 'password';

Or

mysql> create user 'dbuser'@'10.0.2.51' identified by 'password';

Note that if you have an anonymous user account on MySQL, then a user created with 'username'@'%' will not be able to log in through localhost. You will need to add a separate entry with 'username'@'localhost'.

Next, we give some privileges to this user account using a grant statement. The preceding example gives all privileges on all databases to the user account dbuser. To limit the database, change the database part to dbname.*:

mysql> grant all privileges on dbname.* to ‘dbuser’@’localhost’ with grant option;

To limit privileges to certain tasks, mention specific privileges in a grant statement:

mysql> grant select, insert, update, delete, create -> on dbname.* to ‘dbuser’@’localhost’;

The preceding statement will grant select, insert, update, delete, and create privileges on any table under the dbname database.
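The scoping pattern described above can be wrapped in a small shell helper. The following is a minimal sketch, not a MySQL feature: the function name scoped_user_sql and its argument order are assumptions made for illustration. It only prints the statements, so they can be reviewed before being sent to the server.

```shell
#!/bin/sh
# Hypothetical helper: print the SQL that creates an account limited to
# one database. Arguments: user, host, database, privilege list, password.
# Values are interpolated as-is, so pass only trusted input.
scoped_user_sql() {
    user=$1
    host=$2
    db=$3
    privs=$4
    pass=$5
    # Create the account for the given user and host.
    printf "create user '%s'@'%s' identified by '%s';\n" "$user" "$host" "$pass"
    # Grant only the listed privileges, and only on the named database.
    printf "grant %s on %s.* to '%s'@'%s';\n" "$privs" "$db" "$user" "$host"
}
```

For example, scoped_user_sql report localhost dbname select 'password' prints the two statements for a read-only account named report; piping that output to mysql -u root -p would apply them.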

There’s more…

As with the preceding add user example, other user management tasks can be performed with SQL queries as follows:

Removing user accounts

You can easily remove a user account with the drop statement, as follows:

mysql> drop user 'dbuser'@'localhost';

Setting resource limits

MySQL allows setting limits on individual accounts:

mysql> grant all on dbname.* to 'dbuser'@'localhost'
    -> with max_queries_per_hour 20
    -> max_updates_per_hour 10
    -> max_connections_per_hour 5
    -> max_user_connections 2;

See also

- MySQL user account management at https://dev.mysql.com/doc/refman/5.6/en/user-account-management.html

Installing web access for MySQL

In this recipe, we will set up a well-known web-based MySQL administrative tool—phpMyAdmin.

Getting ready

You will need access to a root account or an account with sudo privileges.

You will need a web server set up to serve PHP contents.

How to do it…

Follow these steps to install web access for MySQL:

- Enable the

mcryptextension for PHP:$ sudo php5enmod mcrypt - Install

phpmyadminwith the following commands:$ sudo apt-get update $ sudo apt-get install phpmyadmin

- The installation process will download the necessary packages and then prompt you to configure

phpmyadmin:

- Choose

<yes>to proceed with the configuration process. - Enter the MySQL admin account password on the next screen:

- Another screen will pop up; this time, you will be asked for the new password for the

phpmyadminuser. Enter the new password and then confirm it on the next screen:

- Next,

phpmyadminwill ask for web server selection:

- Once the installation completes, you can access phpMyAdmin at

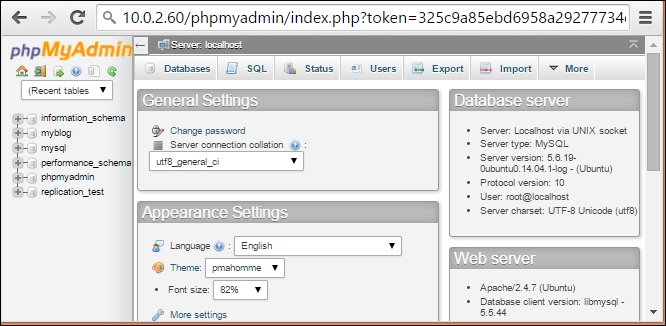

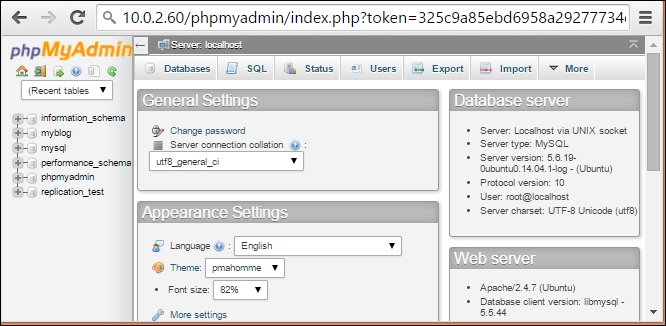

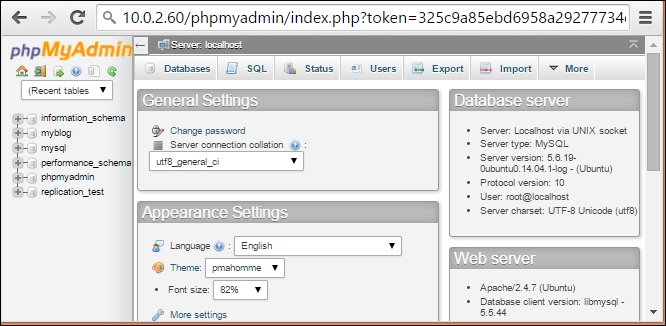

http://server-ip/phpmyadmin. Use your admin login credentials on the login screen. Thephpmyadminscreen will look something like this:

How it works…

phpMyAdmin is a web-based administrative console for MySQL. It is developed in PHP and works with a web server such as Apache to serve web access. With phpMyAdmin, you can perform database tasks such as creating databases and tables; selecting, inserting, and updating data; modifying table definitions; and a lot more. It provides a query console that can be used to type in custom queries and execute them from the same screen.

Because phpMyAdmin is available in the Ubuntu software repository, it is easy to install with a single command. Once it is installed, a new user is created on the MySQL server. phpMyAdmin also supports connecting to multiple servers. You can find all configuration files in the /etc/phpmyadmin directory.

There’s more…

If you want to install the latest version of phpMyAdmin, you can download it from the official website, https://www.phpmyadmin.net/downloads/. Extract the downloaded contents to your web directory and set the MySQL credentials in the config.inc.php file.

See also

- Read more about phpMyAdmin in the Ubuntu server guide at https://help.ubuntu.com/lts/serverguide/phpmyadmin.html

- Install and secure phpMyAdmin at https://www.digitalocean.com/community/tutorials/how-to-install-and-secure-phpmyadmin-on-ubuntu-14-04

Setting backups

In this recipe, we will learn how to back up the MySQL database.

Getting ready

You will need administrative access to the MySQL database.

How to do it…

Follow these steps to set up the backups:

- Backing up the MySQL database is the same as exporting data from the server. Use the mysqldump tool to back up the MySQL database as follows:

$ mysqldump -h localhost -u admin -p mydb > mydb_backup.sql

- You will be prompted for the admin account password. After providing the password, the backup process will take time depending on the size of the database.

- To back up all databases, add the --all-databases flag to the preceding command:

$ mysqldump --all-databases -u admin -p > alldb_backup.sql

- Next, we can restore the backup created with the mysqldump tool with the following commands:

$ mysqladmin -u admin -p create mydb
$ mysql -h localhost -u admin -p mydb < mydb_backup.sql

- To restore all databases, skip the database creation part:

$ mysql -h localhost -u admin -p < alldb_backup.sql

How it works…

MySQL provides a very general tool, mysqldump, to export all data from the database server. This tool can be used with any type of database engine, be it MyISAM or InnoDB or any other. To perform an online backup of InnoDB tables, mysqldump provides the --single-transaction option. With this option set, InnoDB tables will not be locked and will be available to other applications while backup is in progress.
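One way to put the --single-transaction option to use is a dated, compressed dump. The following is a rough sketch under stated assumptions: it reuses the admin account from this recipe, while the backup directory /var/backups/mysql and the function names backup_file_name and backup_db are hypothetical choices, not something mysqldump prescribes.

```shell
#!/bin/sh
# Assumed backup location; override by exporting BACKUP_DIR beforehand.
BACKUP_DIR=${BACKUP_DIR:-/var/backups/mysql}

# Build the output path for a database name and a date string.
backup_file_name() {
    printf '%s/%s_%s.sql.gz\n' "$BACKUP_DIR" "$1" "$2"
}

# Dump one database without locking InnoDB tables and compress the result.
# The pipeline's exit status is gzip's, so inspect the dump before relying on it.
backup_db() {
    db=$1
    out=$(backup_file_name "$db" "$(date +%Y-%m-%d)")
    mkdir -p "$BACKUP_DIR" &&
        mysqldump --single-transaction -h localhost -u admin -p "$db" | gzip > "$out"
}
```

Calling backup_db mydb prompts for the admin password and writes a file such as mydb_2016-01-31.sql.gz under the backup directory. To restore it, decompress the file with gunzip and feed it to the mysql client as shown in the preceding steps.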

Oracle provides the MySQL Enterprise Backup tool for MySQL Enterprise edition users. This tool includes features such as incremental and compressed backups. Alternatively, Percona provides an open source utility known as XtraBackup. It provides incremental and compressed backups and many more features.

Some other backup methods include copying MySQL table files and the mysqlhotcopy script, which works only with MyISAM and ARCHIVE tables, not InnoDB. For these methods to work, you may need to pause or stop the MySQL server before backup.

You can also enable replication to mirror all data to another server. Replication is a mechanism to maintain multiple copies of data by automatically copying data from one system to another. In this case, the primary server is called Master and the secondary server is called Slave. This type of configuration is known as Master-Slave replication. Generally, applications communicate with the Master server for all read and write requests. The Slave is used as a backup if the Master goes down. Often, the Master-Slave configuration is also used to load balance database queries by routing all read requests to the Slave server and write requests to the Master server. Replication can also be configured in Master-Master mode, where both servers receive read-write requests from clients.

See also

- MySQL backup methods at http://dev.mysql.com/doc/refman/5.6/en/backup-methods.html

- Percona XtraBackup at https://www.percona.com/doc/percona-xtrabackup/2.2/index.html

- MySQL binary log at http://dev.mysql.com/doc/refman/5.6/en/binary-log.html

Getting ready

You will need administrative access to the MySQL database.

How to do it…

Follow these steps to set up the backups:

- Backing up the MySQL database is the same as exporting data from the server. Use the

mysqldumptool to back up the MySQL database as follows:$ mysqldump -h localhost -u admin -p mydb > mydb_backup.sql - You will be prompted for the admin account password. After providing the password, the backup process will take time depending on the size of the database.

- To back up all databases, add the

--all-databasesflag to the preceding command:$ mysqldump --all-databases -u admin -p alldb_backup.sql - Next, we can restore the backup created with the

mysqldumptool with the following command:$ mysqladmin -u admin -p create mydb $ mysql -h localhost -u admin -p mydb < mydb_backup.sql

- To restore all databases, skip the database creation part:

$ mysql -h localhost -u admin -p < alldb_backup.sql

How it works…

MySQL provides a very general tool, mysqldump, to export all data from the database server. This tool can be used with any type of database engine, be it MyISAM or InnoDB or any other. To perform an online backup of InnoDB tables, mysqldump provides the --single-transaction option. With this option set, InnoDB tables will not be locked and will be available to other applications while backup is in progress.

Oracle provides the MySQL Enterprise backup tool for MySQL Enterprise edition users. This tool includes features such as incremental and compressed backups. Alternatively, Percona provides an open source utility known as Xtrabackup. It provides incremental and compressed backups and many more features.

Some other backup methods include copying MySQL table files and the mysqlhotcopy script for InnoDB tables. For these methods to work, you may need to pause or stop the MySQL server before backup.

You can also enable replication to mirror all data to the other server. It is a mechanism to maintain multiple copies of data by automatically copying data from one system to another. In this case, the primary server is called Master and the secondary server is called Slave. This type of configuration is known as Master-Slave replication. Generally, applications communicate with the Master server for all read and write requests. The Slave is used as a backup if the Master goes down. Many times, the Master-Slave configuration is used to load balance database queries by routing all read requests to the Slave server and write requests to the Master server. Replication can also be configured in Master-Master mode, where both servers receive read-write requests from clients.

See also

- MySQL backup methods at http://dev.mysql.com/doc/refman/5.6/en/backup-methods.html

- Percona XtraBackup at https://www.percona.com/doc/percona-xtrabackup/2.2/index.html

- MySQL binary log at http://dev.mysql.com/doc/refman/5.6/en/binary-log.html

How to do it…

Follow these steps to set up the backups:

- Backing up the MySQL database is the same as exporting data from the server. Use the

mysqldumptool to back up the MySQL database as follows:$ mysqldump -h localhost -u admin -p mydb > mydb_backup.sql - You will be prompted for the admin account password. After providing the password, the backup process will take time depending on the size of the database.

- To back up all databases, add the

--all-databasesflag to the preceding command:$ mysqldump --all-databases -u admin -p alldb_backup.sql - Next, we can restore the backup created with the

mysqldumptool with the following command:$ mysqladmin -u admin -p create mydb $ mysql -h localhost -u admin -p mydb < mydb_backup.sql

- To restore all databases, skip the database creation part:

$ mysql -h localhost -u admin -p < alldb_backup.sql

How it works…

MySQL provides a very general tool, mysqldump, to export all data from the database server. This tool can be used with any type of database engine, be it MyISAM or InnoDB or any other. To perform an online backup of InnoDB tables, mysqldump provides the --single-transaction option. With this option set, InnoDB tables will not be locked and will be available to other applications while backup is in progress.

Oracle provides the MySQL Enterprise backup tool for MySQL Enterprise edition users. This tool includes features such as incremental and compressed backups. Alternatively, Percona provides an open source utility known as Xtrabackup. It provides incremental and compressed backups and many more features.

Some other backup methods include copying MySQL table files and the mysqlhotcopy script for InnoDB tables. For these methods to work, you may need to pause or stop the MySQL server before backup.

You can also enable replication to mirror all data to the other server. It is a mechanism to maintain multiple copies of data by automatically copying data from one system to another. In this case, the primary server is called Master and the secondary server is called Slave. This type of configuration is known as Master-Slave replication. Generally, applications communicate with the Master server for all read and write requests. The Slave is used as a backup if the Master goes down. Many times, the Master-Slave configuration is used to load balance database queries by routing all read requests to the Slave server and write requests to the Master server. Replication can also be configured in Master-Master mode, where both servers receive read-write requests from clients.

See also

- MySQL backup methods at http://dev.mysql.com/doc/refman/5.6/en/backup-methods.html

- Percona XtraBackup at https://www.percona.com/doc/percona-xtrabackup/2.2/index.html

- MySQL binary log at http://dev.mysql.com/doc/refman/5.6/en/binary-log.html

How it works…

MySQL provides a very general tool, mysqldump, to export all data from the database server. This tool can be used with any type of database engine, be it MyISAM or InnoDB or any other. To perform an online backup of InnoDB tables, mysqldump provides the --single-transaction option. With this option set, InnoDB tables will not be locked and will be available to other applications while backup is in progress.

Oracle provides the MySQL Enterprise backup tool for MySQL Enterprise edition users. This tool includes features such as incremental and compressed backups. Alternatively, Percona provides an open source utility known as Xtrabackup. It provides incremental and compressed backups and many more features.

Some other backup methods include copying MySQL table files and the mysqlhotcopy script for InnoDB tables. For these methods to work, you may need to pause or stop the MySQL server before backup.

You can also enable replication to mirror all data to the other server. It is a mechanism to maintain multiple copies of data by automatically copying data from one system to another. In this case, the primary server is called Master and the secondary server is called Slave. This type of configuration is known as Master-Slave replication. Generally, applications communicate with the Master server for all read and write requests. The Slave is used as a backup if the Master goes down. Many times, the Master-Slave configuration is used to load balance database queries by routing all read requests to the Slave server and write requests to the Master server. Replication can also be configured in Master-Master mode, where both servers receive read-write requests from clients.

See also

- MySQL backup methods at http://dev.mysql.com/doc/refman/5.6/en/backup-methods.html

- Percona XtraBackup at https://www.percona.com/doc/percona-xtrabackup/2.2/index.html

- MySQL binary log at http://dev.mysql.com/doc/refman/5.6/en/binary-log.html

Optimizing MySQL performance – queries

MySQL performance optimizations can be divided into two parts. One is query optimization and the other is MySQL server configuration. To get optimum results, you have to work on both of these parts. Without proper configuration, queries will not provide consistent performance; on the other hand, without proper queries and a database structure, queries may take much longer to produce results.

In this recipe, we will learn how to evaluate query performance, set indexes, and identify the optimum database structure for our data.

Getting ready

You will need access to an admin account on the MySQL server.

You will need a large dataset to test queries. Various tools are available to generate test data. I will be using test data available at https://github.com/datacharmer/test_db.

How to do it…

Follow these steps to optimize MySQL performance:

- The first and most basic thing is to identify key columns and add indexes to them:

mysql> alter table salaries add index (salary);

- Enable the slow query log to identify long-running queries. Enter the following commands from the MySQL console:

mysql> set global slow_query_log = 1;

mysql> set global slow_query_log_file = '/var/log/mysql/slow.log';

- Once you identify the slow and repeated query, execute that query on the database and record query timings. The following is a sample query:

mysql> select count(*) from salaries where salary between 30000 and 65000 and from_date > '1986-01-01';

- Next, use explain to view the query execution plan:

mysql> explain select count(*) from salaries where salary between 30000 and 65000 and from_date > '1986-01-01';

- Add required indexes, if any, and recheck the query execution plan. Your new index should be listed under the possible_keys and key columns of the explain output:

mysql> alter table `salaries` add index ( `from_date` );

- If you find that MySQL is not using a proper index, or is using a different index than expected, you can explicitly specify the index to be used or ignored. The index hint must follow the table name:

mysql> select * from salaries use index (salary) where salary between 30000 and 65000 and from_date > '1986-01-01';

mysql> select * from salaries ignore index (from_date) where salary between 30000 and 65000 and from_date > '1986-01-01';

Now execute the query again and check query timings for any improvements.

- Analyze your data and modify the table structure. The following query will show the minimum and maximum length of data in each column. Add a small amount of buffer space to the reported maximum length and reduce additional space allocation if any:

mysql> select * from `employees` procedure analyse();

The analyse() procedure returns one row per column, with fields such as Min_value, Max_value, Min_length, Max_length, and Optimal_fieldtype.

- Check the database engines you are using. The two major engines available in MySQL are MyISAM and InnoDB:

mysql> show create table employees;
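Once the slow query log from the earlier step has collected some entries, a short script can rank them by execution time. The following is a minimal sketch, assuming the default text format of the log, in which each statement is preceded by a `# Query_time:` header line. The `slowest_queries` function and the sample log text are made up for illustration; in practice, you would read the contents of /var/log/mysql/slow.log instead.

```python
import re

# Matches the timing header that precedes each statement in the slow log.
QUERY_TIME = re.compile(r"^# Query_time: (\d+\.\d+)")

# Illustrative sample only; real entries come from the slow query log file.
SAMPLE_LOG = """\
# Time: 160101 10:00:00
# User@Host: root[root] @ localhost []
# Query_time: 0.700000  Lock_time: 0.000100 Rows_sent: 10  Rows_examined: 300000
SET timestamp=1451642400;
select * from employees where lname = 'Doe';
# Time: 160101 10:00:05
# User@Host: root[root] @ localhost []
# Query_time: 2.500000  Lock_time: 0.000100 Rows_sent: 1  Rows_examined: 2800000
SET timestamp=1451642405;
select count(*) from salaries where salary between 30000 and 65000;
"""


def slowest_queries(log_text, limit=5):
    """Return up to `limit` (seconds, statement) pairs, slowest first."""
    entries = []
    for line in log_text.splitlines():
        match = QUERY_TIME.match(line)
        if match:
            # Start a new entry: [query time, statement lines collected so far].
            entries.append([float(match.group(1)), []])
        elif entries and line.strip() and not line.startswith(("#", "SET timestamp")):
            entries[-1][1].append(line.strip())
    ranked = sorted(
        ((seconds, " ".join(parts)) for seconds, parts in entries if parts),
        key=lambda entry: entry[0],
        reverse=True,
    )
    return ranked[:limit]


top = slowest_queries(SAMPLE_LOG, limit=1)
```

The statements at the top of this ranking are the first candidates for the explain step.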

How it works…

MySQL uses SQL to accept commands for data processing. A query contains the operation, such as select, insert, or update; the target, which is a table name; and conditions to match the data. The following is an example query:

select * from employee where id = 1001;

In the preceding query, select * is the operation asking MySQL to select all data for a row. The target is the employee table, and id = 1001 is a condition part.

Once a query is received, MySQL generates a query execution plan for it. This process involves several steps, such as parsing, preprocessing, and optimization. During parsing and preprocessing, the query is checked for syntax errors and for the correct order of SQL grammar. A given query can usually be executed in multiple ways, so the query optimizer selects the best possible path for execution. Finally, the query is executed and, if the query cache is enabled, the result of a select query is stored in the cache for later use.

The query execution plan can be retrieved from MySQL with the help of the explain query and explain extended. Explain executes the query until the generation of the query execution plan and then returns the execution plan as a result. The execution plan contains table names used in this query, key fields used to search data, the number of rows needed to be scanned, and temporary tables and file sorting used, if any. The query execution plan shows possible keys that can be used for query execution and then shows the actual key column used. Key is a column with an index on it, which can be a primary index, unique index, or non-unique index. You can check the MySQL documentation for more details on query execution plans and explain output.

If a specific column in a table is used repeatedly in query conditions, you should consider adding a proper index to that column. An index keeps the column values in sorted order, which reduces the lookup time and the total number of rows to be scanned. Also keep in mind that indexes consume additional memory and disk space and add overhead to every insert, update, and delete, so be selective while adding indexes.
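The effect of an index on the execution plan can be reproduced without a MySQL server. The following sketch uses SQLite from the Python standard library as a stand-in, so the plan text differs from the MySQL explain output, but the idea is the same: before the index exists the plan is a table scan, and afterwards the plan references the index. The table contents and the `idx_salary` index name are made up for this example.

```python
import sqlite3


def query_plan(conn, sql):
    """Return the plan steps SQLite reports for `sql`."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return [row[-1] for row in rows]  # the last column holds the description


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE salaries (emp_no INTEGER, salary INTEGER, from_date TEXT)")
conn.executemany(
    "INSERT INTO salaries VALUES (?, ?, ?)",
    [(n, 30000 + (n * 37) % 60000, "1990-01-01") for n in range(1000)],
)

QUERY = "SELECT count(*) FROM salaries WHERE salary BETWEEN 30000 AND 65000"

plan_before = query_plan(conn, QUERY)  # no index yet: the table is scanned
conn.execute("CREATE INDEX idx_salary ON salaries (salary)")
plan_after = query_plan(conn, QUERY)   # the plan now refers to idx_salary

for step in plan_before + plan_after:
    print(step)
```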

Secondly, if you have a proper index set on a required column and the query optimizer does not use it, you can point MySQL to a specific index with the USE INDEX (index_name) hint placed after the table name. USE INDEX is a suggestion; FORCE INDEX (index_name) makes a table scan a last resort. To ignore a specific index, use the IGNORE INDEX (index_name) hint.

You may get a small improvement with table maintenance commands. Optimize table is useful when a large part of the table is modified or deleted. It reorganizes table index data on physical storage and improves I/O performance. Flush table is used to reload the internal cache. Check table and Analyze table check for table errors and data distribution respectively. The improvements with these commands may not be significant for smaller tables. Reducing the extra space allocated to each column is also a good idea for reducing total physical storage used. Reduced storage will optimize I/O performance as well as cache utilization.

You should also check the storage engines used by specific tables. The two major storage engines used in MySQL are MyISAM and InnoDB. InnoDB provides full transactional support and uses row-level locking, whereas MyISAM does not have transaction support and uses table-level locking. MyISAM is a good choice for faster reads where you have a large amount of data with limited writes on the table. MySQL also supports the addition of external storage engines in the form of plugins. One popular open source storage engine is XtraDB by Percona.

There’s more…

If your tables are really large, you should consider partitioning them. Partitioning tables distributes related data across multiple files on disk. Partitioning on frequently used keys can give you a quick boost. MySQL supports various different types of partitioning such as hash partitions, range partitions, list partitions, key partitions, and also sub-partitions.

You can specify hash partitioning with table creation as follows:

create table employees (
  id int not null,
  fname varchar(30),
  lname varchar(30),
  store_id int
) partition by hash(store_id) partitions 4;

Alternatively, you can also partition an existing table with the following query:

mysql> alter table employees partition by hash(store_id) partitions 4;
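To see how rows end up in the four partitions, recall that for hash partitioning on an integer expression MySQL picks the partition with MOD(expr, num). The following sketch mimics that rule for non-negative store_id values. The `hash_partition` function and the sample rows are hypothetical and exist only for illustration.

```python
def hash_partition(store_id, partitions=4):
    """Partition number for a non-negative integer key: MOD(store_id, partitions)."""
    return store_id % partitions


# Hypothetical rows: (id, fname, store_id)
rows = [(1, "Ann", 3), (2, "Bob", 8), (3, "Cid", 5), (4, "Dee", 12)]

# Group row ids by the partition their store_id maps to.
layout = {p: [] for p in range(4)}
for emp_id, fname, store_id in rows:
    layout[hash_partition(store_id)].append(emp_id)

for partition, ids in layout.items():
    print(partition, ids)
```

A query that filters on store_id only needs to read one partition, which is where the performance gain comes from.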

Sharding MySQL

You can also shard your database. Sharding is a form of horizontal partitioning where you store part of the table data across multiple instances of a table. The table instance can exist on the same server under separate databases or across different servers. Each table instance contains parts of the total data, thus improving queries that need to access limited data. Sharding enables you to scale a database horizontally across multiple servers.

The best implementation strategy for sharding is to try to avoid it for as long as possible. Sharding requires additional maintenance efforts on the operations side and the use of proxy software to hide sharding from an application, or to make your application itself sharding aware. Sharding also adds limitations on queries that require access to the entire table. You will need to create cross-server joins or process data in the application layer.
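A sharding-aware application needs some rule that maps a row to a server. The following is a minimal sketch of one such rule, assuming three shards and a simple modulo on the employee number. The host names and both functions are hypothetical. The sketch also shows the limitation mentioned above: a query that does not include the shard key has to be sent to every shard and merged in the application.

```python
# Hypothetical shard hosts; each one holds part of the employees table.
SHARDS = ["shard0.example.com", "shard1.example.com", "shard2.example.com"]


def shard_for(emp_no):
    """Return the shard that stores the row with this employee number."""
    return SHARDS[emp_no % len(SHARDS)]


def shards_for_query(emp_no=None):
    """Return the shards a query must visit.

    With the shard key, a single server is enough; without it,
    the query fans out to all shards.
    """
    if emp_no is None:
        return list(SHARDS)
    return [shard_for(emp_no)]


single = shards_for_query(10001)  # lookup by shard key
fan_out = shards_for_query()      # for example, a count over the entire table
```

Note that a plain modulo rule moves most rows when the number of shards changes, which is one reason proxy software or a lookup table is often used instead.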

See also

- The MySQL optimization guide at https://dev.mysql.com/doc/refman/5.6/en/optimization.html

- MySQL query execution plan information at https://dev.mysql.com/doc/refman/5.6/en/execution-plan-information.html

- InnoDB storage engine at https://dev.mysql.com/doc/refman/5.6/en/innodb-storage-engine.html

- Other storage engines available in MySQL at https://dev.mysql.com/doc/refman/5.6/en/storage-engines.html

- Table maintenance statements at http://dev.mysql.com/doc/refman/5.6/en/table-maintenance-sql.html

- MySQL test database at https://github.com/datacharmer/test_db
