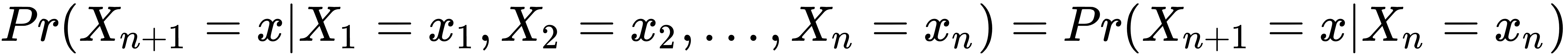

A Markov chain is a type of Markov process in which time is discrete. Researchers disagree, however, on exactly which categories of Markov process should be called Markov chains. Most commonly, the term refers to Markov processes with a discrete state space, so a Markov chain is a stochastic process over a discrete state space that satisfies the Markov property. More formally, a discrete-time Markov chain is a sequence of random variables X1, X2, X3, ... that satisfies the Markov property: the probability of moving from the current state to the next state depends only on the present state and not on any of the previous states. In terms of probability distributions, the state of the system at time instance n + 1 is conditionally independent of its states at time instances {1, 2, ..., n-1}, given its state at time instance n. This can be written as follows:

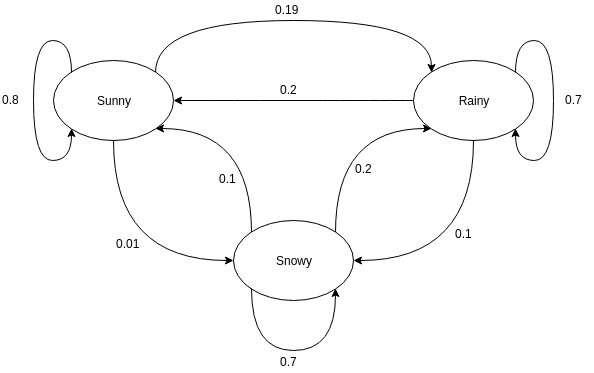

Markov chains are often represented using directed graphs. The nodes of the graph represent the different possible states of the random variables, and the edges represent the probability of the system going from one state to another in the next time instance. Let's take a simple example of predicting the weather to understand this representation better. We will consider three possible states of the random variable, Weather = {Sunny, Rainy, Snowy}; a possible Markov chain for this can be represented as shown in Figure 1.1:

One of the main points to understand about Markov chains is that we are modeling the outcomes of a sequence of random variables over time. This can be confusing because the model is drawn as a single graph, which says nothing about time. For the same reason, the term state transitions is somewhat misleading: no random variable changes its state. Rather, we are trying to determine the state of the next random variable given the observed state of the current one. Coming back to our example, the nodes of the graph represent the different possible states of the random variable Weather, and the edges between them show the probability of the next random variable taking each possible state, given the state of the current random variable. The self-loops show the probability of the model staying in its current state. In the Markov chain of Figure 1.1, if the observed state of the current random variable is Sunny, then the probability that the random variable at the next time instance also takes the value Sunny is 0.8. It could also take the value Rainy with a probability of 0.19, or Snowy with a probability of 0.01. Note that the probabilities on all the outward edges from any state must sum to 1, since together those edges cover every possible next state.
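The row-sum constraint is easy to check programmatically. The following is a minimal sketch, assuming the outgoing edges of each state are stored as a nested dict. The helper name `rows_sum_to_one` and the tolerance `tol` are illustrative choices, not part of the chapter's code.

```python
import math


def rows_sum_to_one(transition_prob, tol=1e-9):
    """Return True if every state's outgoing probabilities sum to 1.

    `rows_sum_to_one` and `tol` are hypothetical names chosen for this sketch.
    """
    return all(
        math.isclose(sum(row.values()), 1.0, abs_tol=tol)
        for row in transition_prob.values()
    )


# Outgoing edges of the Sunny state, using the probabilities quoted above.
sunny_row = {'Sunny': {'Sunny': 0.8, 'Rainy': 0.19, 'Snowy': 0.01}}
print(rows_sum_to_one(sunny_row))

# A row that is missing an edge no longer sums to 1 and fails the check.
bad_row = {'Sunny': {'Sunny': 0.8, 'Rainy': 0.19}}
print(rows_sum_to_one(bad_row))
```

A tolerance is used rather than an exact comparison because floating-point sums such as 0.8 + 0.19 + 0.01 are not guaranteed to equal 1.0 exactly.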

Now, let's try to code this simple Markov chain. We will start by defining a simple MarkovChain class, and we will keep on adding methods to this class as we go through this chapter:

    import numpy as np


    class MarkovChain(object):
        def __init__(self, transition_prob):
            """
            Initialize the MarkovChain instance.

            Parameters
            ----------
            transition_prob: dict
                A dict object representing the transition probabilities in
                Markov Chain. Should be of the form: {'state1': {'state1':
                0.1, 'state2': 0.4}, 'state2': {...}}
            """
            self.transition_prob = transition_prob
            self.states = list(transition_prob.keys())

        def next_state(self, current_state):
            """
            Returns the state of the random variable at the next time
            instance.

            Parameters
            ----------
            current_state: str
                The current state of the system.
            """
            # Sample one state, weighted by the outgoing probabilities
            # of current_state.
            return np.random.choice(
                self.states,
                p=[self.transition_prob[current_state][next_state]
                   for next_state in self.states])

        def generate_states(self, current_state, no=10):
            """
            Generates the next states of the system.

            Parameters
            ----------
            current_state: str
                The state of the current random variable.

            no: int
                The number of future states to generate.
            """
            future_states = []
            for i in range(no):
                next_state = self.next_state(current_state)
                future_states.append(next_state)
                # The sampled state becomes the starting point of the
                # next draw.
                current_state = next_state
            return future_states

Now, we can try out our example with this MarkovChain class:

    >>> transition_prob = {'Sunny': {'Sunny': 0.8, 'Rainy': 0.19,
    ...                              'Snowy': 0.01},
    ...                    'Rainy': {'Sunny': 0.2, 'Rainy': 0.7,
    ...                              'Snowy': 0.1},
    ...                    'Snowy': {'Sunny': 0.1, 'Rainy': 0.2,
    ...                              'Snowy': 0.7}}
    >>> weather_chain = MarkovChain(transition_prob=transition_prob)
    >>> weather_chain.next_state(current_state='Sunny')
    'Sunny'
    >>> weather_chain.next_state(current_state='Snowy')
    'Snowy'
    >>> weather_chain.generate_states(current_state='Snowy', no=10)
    ['Snowy', 'Snowy', 'Snowy', 'Rainy', 'Snowy', 'Snowy', 'Rainy',
     'Rainy', 'Snowy', 'Snowy']
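Because each draw is random, the outputs above will differ from run to run. One way to sanity-check the sampling is to draw the next state many times and compare the observed frequencies with the transition probabilities. The sketch below is self-contained and does not use the MarkovChain class. The seeded generator `rng`, the sample size, and the helper name `empirical_next_state_freq` are assumptions made for this illustration.

```python
import numpy as np

transition_prob = {
    'Sunny': {'Sunny': 0.8, 'Rainy': 0.19, 'Snowy': 0.01},
    'Rainy': {'Sunny': 0.2, 'Rainy': 0.7, 'Snowy': 0.1},
    'Snowy': {'Sunny': 0.1, 'Rainy': 0.2, 'Snowy': 0.7},
}


def empirical_next_state_freq(transition_prob, current_state, n_samples, rng):
    """Estimate next-state frequencies by repeated sampling.

    Hypothetical helper for this sketch: draws `n_samples` next states from
    `current_state` and returns the fraction of draws landing in each state.
    """
    states = list(transition_prob[current_state].keys())
    probs = [transition_prob[current_state][s] for s in states]
    draws = rng.choice(states, size=n_samples, p=probs)
    return {s: float(np.mean(draws == s)) for s in states}


# A fixed seed (an arbitrary choice) makes the sketch repeatable.
rng = np.random.default_rng(42)
freq = empirical_next_state_freq(transition_prob, 'Sunny', 10_000, rng)
print(freq)
```

With 10,000 draws, the printed frequencies should lie close to 0.8, 0.19, and 0.01, though not exactly on them.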

So far, we have assumed that the transition probabilities do not change over different instances of time. Such a chain is known as a time-homogeneous Markov chain. It is also possible to have a time-inhomogeneous Markov chain, in which the transition probabilities vary with time; such chains have many applications but are outside the scope of this book.

For each n, consider the probability that the system first returns to state i after n steps. State i is transient if the sum of these probabilities over all finite n is less than 1. If the total sum is less than 1, the probability of never returning to state i is greater than 0, which means that state i is transient. The given state i is called recurrent if it is not transient: