Digital systems are increasingly intertwined with real-world activities. As a consequence, vast amounts of data are recorded and reported by information systems. Over the last 50 years, information systems and their capabilities to capture, curate, store, share, transfer, analyze, and visualize data have grown exponentially. Alongside these technological advances, people and organizations depend more and more on computerized devices and information sources on the internet. The IDC Digital Universe Study of May 2010 illustrates the spectacular growth of data. This study estimated that the amount of digital information stored (on personal computers, digital cameras, servers, sensors) exceeded 1 zettabyte, and predicted that the digital universe would grow to 35 zettabytes by 2020. The IDC study characterizes 35 zettabytes as a stack of DVDs reaching halfway to Mars. This is what we refer to as the data explosion.

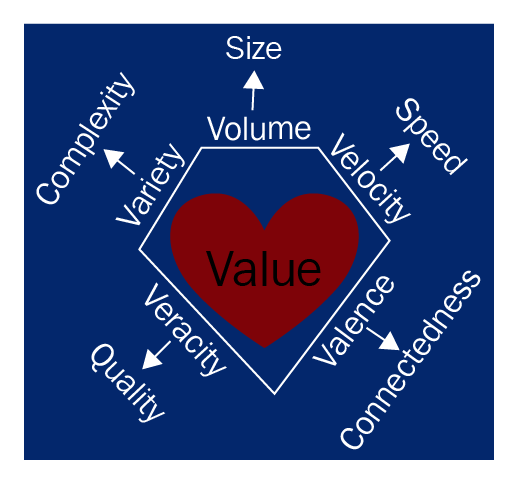

Most of the data stored in the digital universe is unstructured, and organizations face challenges in capturing, curating, and analyzing it. One of the most challenging tasks for today's organizations is to extract information and value from the data stored in their information systems. Data that is too complex and too voluminous to be handled by a traditional DBMS is called big data.

Whether it's day-to-day data, business data, or basis data, any massive volume of data, structured or unstructured, is relevant for the organization. However, it's not only the size of the data that matters; it's how the organization uses it to extract deeper insights that can drive better business and strategic decisions. This voluminous data can be used to assess the quality of research, improve process flow in an organization, prevent a particular disease, link legal citations, or combat crime. Big data is everywhere, and with the right tools it can be put to effective use in business analytics.