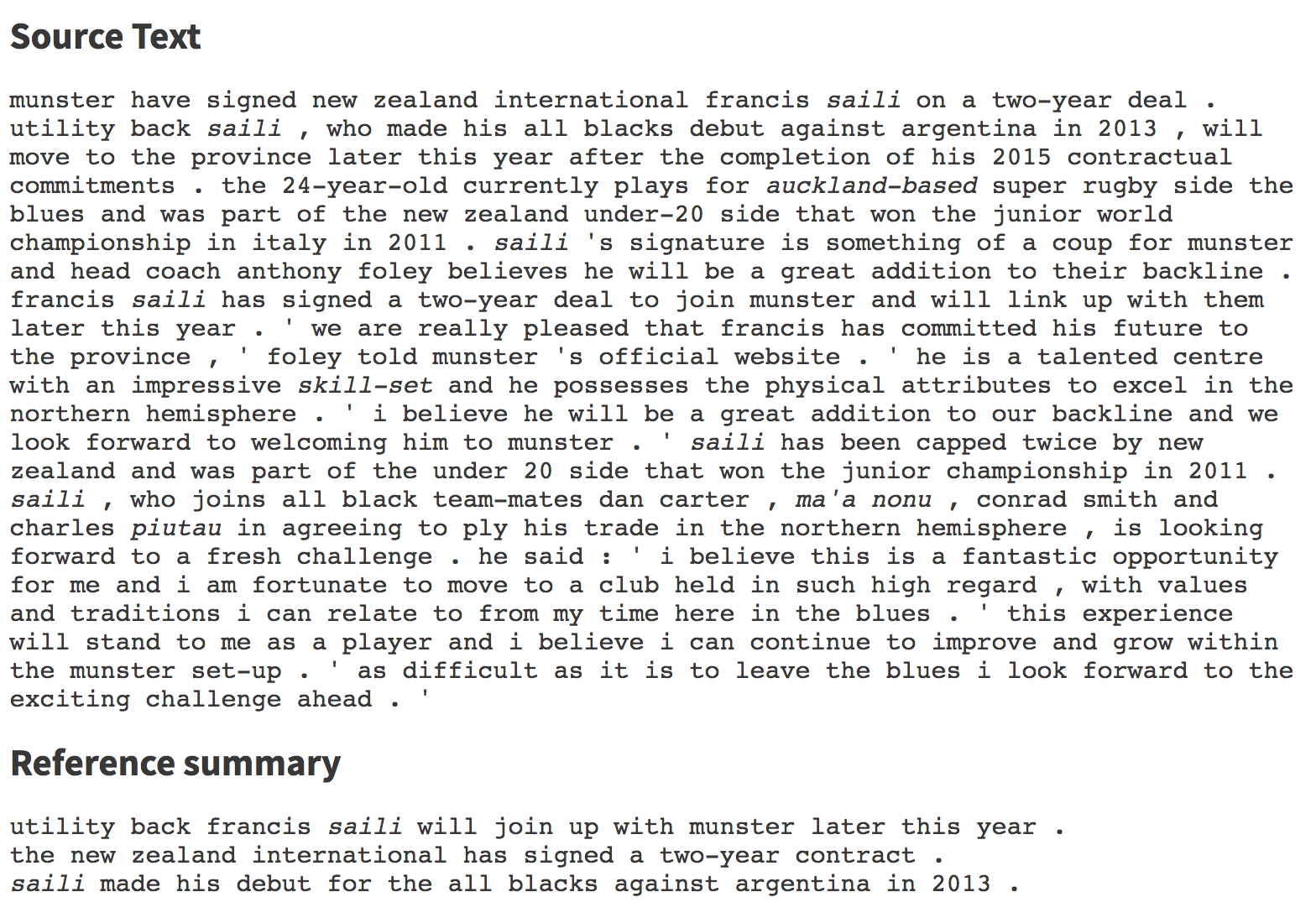

Traditional ML algorithms rely on handcrafted feature extraction: a person decides which features to compute before the model is trained. DL algorithms instead learn these features automatically from the data.
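To make the contrast concrete, here is a minimal sketch of what a handwritten feature extractor can look like. It uses plain Python and a fixed Sobel-style kernel; the helper names `apply_kernel` and `edge_strength` and the toy images are assumptions made for illustration, not code from this book.

```python
# A handwritten feature: the kernel values are chosen by a person,
# not learned from data. This Sobel-style kernel responds to vertical edges.
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]


def apply_kernel(image, kernel):
    """Slide the kernel over the image (no padding) and return the response map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def edge_strength(image):
    """Reduce the response map to one number: the mean absolute edge response."""
    response = apply_kernel(image, SOBEL_X)
    values = [abs(v) for row in response for v in row]
    return sum(values) / len(values)


# Toy 4x4 grayscale images (hypothetical data).
split_image = [[0, 0, 1, 1] for _ in range(4)]  # dark left half, bright right half
flat_image = [[1, 1, 1, 1] for _ in range(4)]   # uniform brightness, no edges

print(edge_strength(split_image))  # strong response: the image has a vertical edge
print(edge_strength(flat_image))   # zero response: nothing to detect
```

A traditional ML pipeline would pass numbers like `edge_strength` to a classifier. A DL model would instead treat the kernel values themselves as parameters and learn them during training.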

For example, in a DL algorithm that predicts whether an image contains a face, the first layer might detect edges, the second layer might detect shapes such as noses and eyes, and the final layer might detect face shapes or other more complex structures. Each layer trains on the previous layer's representation of the data. It's OK if you find this explanation hard to understand; the later chapters of the book will help you build and inspect such networks intuitively.
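The layered structure described above can be sketched in PyTorch as a small stack of convolutional stages. This is only an illustrative sketch: the class name `TinyFaceNet`, the layer sizes, and the 32x32 grayscale input are assumptions. The comments describe what each stage might learn, which nothing in the code guarantees.

```python
import torch
import torch.nn as nn


class TinyFaceNet(nn.Module):
    """Hypothetical three-stage network; all sizes are illustrative only."""

    def __init__(self):
        super().__init__()
        # Stage 1 sees raw pixels; early layers often end up responding to edges.
        self.stage1 = nn.Sequential(
            nn.Conv2d(1, 8, kernel_size=3, padding=1), nn.ReLU(), nn.MaxPool2d(2)
        )
        # Stage 2 sees stage 1's output and can combine edges into simple shapes.
        self.stage2 = nn.Sequential(
            nn.Conv2d(8, 16, kernel_size=3, padding=1), nn.ReLU(), nn.MaxPool2d(2)
        )
        # Stage 3 sees stage 2's output and can respond to larger structures.
        self.stage3 = nn.Sequential(
            nn.Conv2d(16, 32, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),
        )
        # One output logit: face or no face.
        self.classifier = nn.Linear(32, 1)

    def forward(self, x):
        # Each stage consumes the previous stage's representation.
        x = self.stage1(x)
        x = self.stage2(x)
        x = self.stage3(x)
        return self.classifier(x.flatten(1))


model = TinyFaceNet()
batch = torch.randn(4, 1, 32, 32)  # four random stand-in "images"
logits = model(batch)
print(logits.shape)
```

The model here is untrained, so its outputs are meaningless. The point is only that each stage takes the previous stage's output as its input, and that all the kernels are parameters to be learned rather than written by hand.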

The use of DL has grown tremendously in the last few years with the rise of GPUs, big data, cloud providers such as Amazon Web Services (AWS) and Google Cloud, and frameworks such as Torch, TensorFlow, Caffe, and PyTorch. In addition, large companies share models trained on huge datasets, which helps startups build state-of-the-art systems for several use cases with little effort.