Chapter 4: Dimensionality Reduction and Unsupervised Learning

Activity 12: Ensemble k-means Clustering and Calculating Predictions

Solution:

After the glass dataset has been imported, shuffled, and standardized (see Exercise 58):

- Instantiate an empty data frame, labels_df, to which we will append the labels from each model, using the following code:

import pandas as pd

labels_df = pd.DataFrame()

- Import the KMeans class outside the loop using the following code:

from sklearn.cluster import KMeans

- Complete 100 iterations as follows:

for i in range(0, 100):

- Inside the loop, instantiate a KMeans model with two clusters (a number chosen arbitrarily, a priori) using:

model = KMeans(n_clusters=2)

- Fit the model to scaled_features using the following:

model.fit(scaled_features)

- Retrieve the array of cluster labels and save it as the labels object, as follows:

labels = model.labels_

- Store labels as a column in labels_df named after the iteration using the code:

labels_df['Model_{}_Labels'.format(i+1)] = labels

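- After labels have been generated for each of the 100 models (see Activity 21), calculate the mode of each row. Because mode(axis=1) returns a data frame, with a second column for any row whose labels are tied, keep only the first column (the smaller mode) using the following code:

row_mode = labels_df.mode(axis=1)[0]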

- Assign row_mode to a new column in labels_df, as shown in the following code:

labels_df['row_mode'] = row_mode

- View the first five rows of labels_df using the following code:

print(labels_df.head(5))

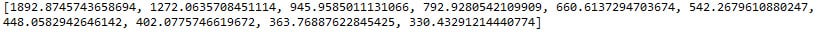

Figure 4.24: First five rows of labels_df
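The steps above can be assembled into a single script. The glass data from Exercise 58 is not reproduced here, so this sketch assumes a synthetic stand-in generated with make_blobs (214 rows, 9 features) and standardized with StandardScaler; scaled_features plays the same role as in the activity. It collects the label columns in a dict before building the data frame, which avoids the fragmentation warning pandas can raise when 100 columns are added one at a time, and it sets n_init=1 explicitly so that each of the 100 models reflects a single random initialization regardless of the scikit-learn version's default.

```python
import pandas as pd
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.preprocessing import StandardScaler

# Assumption: synthetic stand-in for the shuffled, standardized glass data
X, _ = make_blobs(n_samples=214, n_features=9, centers=2, random_state=42)
scaled_features = StandardScaler().fit_transform(X)

# Fit 100 two-cluster models, each from its own random initialization
labels = {}
for i in range(100):
    model = KMeans(n_clusters=2, n_init=1)
    model.fit(scaled_features)
    labels['Model_{}_Labels'.format(i + 1)] = model.labels_
labels_df = pd.DataFrame(labels)

# mode(axis=1) returns a data frame; tied rows get a second mode in column 1,
# so column 0 (the smaller mode) is kept as the final prediction
labels_df['row_mode'] = labels_df.mode(axis=1)[0]
print(labels_df.head(5))
```

The result has one column per model plus the row_mode column, mirroring the layout in Figure 4.24.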

By iterating through numerous models, saving the predictions at each iteration, and assigning each row's final prediction as the mode of these predictions, we have made our predictions less dependent on the random initialization of any single model. However, these predictions were generated by models using a predetermined number of clusters. Unless we know the number of clusters a priori, we will want to discover the optimal number of clusters into which to segment our observations.
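One caveat about taking the mode: k-means numbers its clusters arbitrarily, so cluster 0 in one run may be cluster 1 in the next (label switching). With two stable clusters the mode still groups rows consistently, but with more clusters, or less stable ones, it can mix them up. A common remedy, which goes beyond this activity, is to relabel each run to best match a reference run before taking the mode. The sketch below does this with SciPy's linear_sum_assignment (the Hungarian algorithm) on a contingency matrix; align_labels is a hypothetical helper name, not part of any library.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment

def align_labels(reference, labels, n_clusters):
    """Relabel `labels` so they agree as much as possible with `reference`."""
    # counts[r, c] = number of rows labelled r in reference and c in labels
    counts = np.zeros((n_clusters, n_clusters), dtype=int)
    np.add.at(counts, (reference, labels), 1)
    # linear_sum_assignment minimizes cost, so negate counts to maximize agreement
    ref_ids, run_ids = linear_sum_assignment(-counts)
    mapping = np.empty(n_clusters, dtype=int)
    mapping[run_ids] = ref_ids
    return mapping[labels]

reference = np.array([0, 0, 1, 1, 2, 2])
run = np.array([2, 2, 0, 0, 1, 1])  # same grouping, different cluster ids
print(align_labels(reference, run, 3))  # [0 0 1 1 2 2]
```

Applied to this activity, each model's label column would be aligned to the first model's labels before computing the row-wise mode.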

Activity 13: Evaluating Mean Inertia by Cluster after PCA Transformation

Solution:

- Instantiate a PCA model with the n_components argument set to best_n_components (recall that best_n_components = 6) as follows:

from sklearn.decomposition import PCA

model = PCA(n_components=best_n_components)

- Fit the model to scaled_features and transform them into the six components, as shown here:

df_pca = model.fit_transform(scaled_features)

- Import numpy and the KMeans class outside the loops using the following code:

from sklearn.cluster import KMeans

import numpy as np

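- Instantiate an empty list, inertia_list, to which we will append the inertia value after each iteration. This line belongs inside the outer loop (described below), just before the inner loop, so that the list is reset for each number of clusters; otherwise, each mean would also include the inertia values from every previous number of clusters. Use the following code: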

inertia_list = []

- In the inner for loop, we will iterate through 100 models as follows:

for i in range(100):

- Build our KMeans model with n_clusters=x using:

model = KMeans(n_clusters=x)

Note

The value of x is set by the outer loop, which is covered in detail in the following steps.

- Fit the model to df_pca as follows:

model.fit(df_pca)

- Get the inertia value and save it to the object inertia using the following code:

inertia = model.inertia_

- Append inertia to inertia_list using the following code:

inertia_list.append(inertia)

- Moving to the outer loop, instantiate another empty list, before the loop begins, to store the average inertia values using the following code:

mean_inertia_list_PCA = []

- Since we want to check the average inertia over 100 models for n_clusters 1 through 10, we will instantiate the outer loop as follows:

for x in range(1, 11):

- After the inner loop has run through its 100 iterations, and the inertia value for each of the 100 models has been appended to inertia_list, compute the mean of this list and save it as mean_inertia using the following code:

mean_inertia = np.mean(inertia_list)

- Append mean_inertia to mean_inertia_list_PCA using the following code:

mean_inertia_list_PCA.append(mean_inertia)

- Print mean_inertia_list_PCA to the console using the following code:

print(mean_inertia_list_PCA)

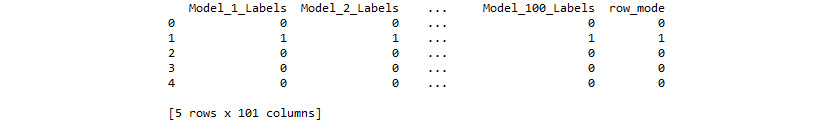
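Assembled into one script, the nested loops of this activity might look like the following sketch. As before, the glass data is not reproduced here: this assumes a synthetic stand-in from make_blobs, and best_n_components = 6 is hard-coded from the activity rather than derived. Note that inertia_list is created inside the outer loop, so each mean covers only the 100 models fitted with one value of x; n_init=1 is set explicitly so each model reflects a single random initialization.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

# Assumption: synthetic stand-in for the standardized glass data
X, _ = make_blobs(n_samples=214, n_features=9, centers=3, random_state=0)
scaled_features = StandardScaler().fit_transform(X)

# Project onto the six components chosen earlier in the chapter
best_n_components = 6
df_pca = PCA(n_components=best_n_components).fit_transform(scaled_features)

mean_inertia_list_PCA = []
for x in range(1, 11):            # outer loop: number of clusters
    inertia_list = []             # reset for every value of x
    for i in range(100):          # inner loop: 100 models per value of x
        model = KMeans(n_clusters=x, n_init=1)
        model.fit(df_pca)
        inertia_list.append(model.inertia_)
    mean_inertia_list_PCA.append(np.mean(inertia_list))

print(mean_inertia_list_PCA)
```

Plotting mean_inertia_list_PCA against n_clusters values 1 through 10 gives an elbow plot; the point where the curve stops dropping steeply suggests a reasonable number of clusters.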

- Notice the output in the following screenshot: